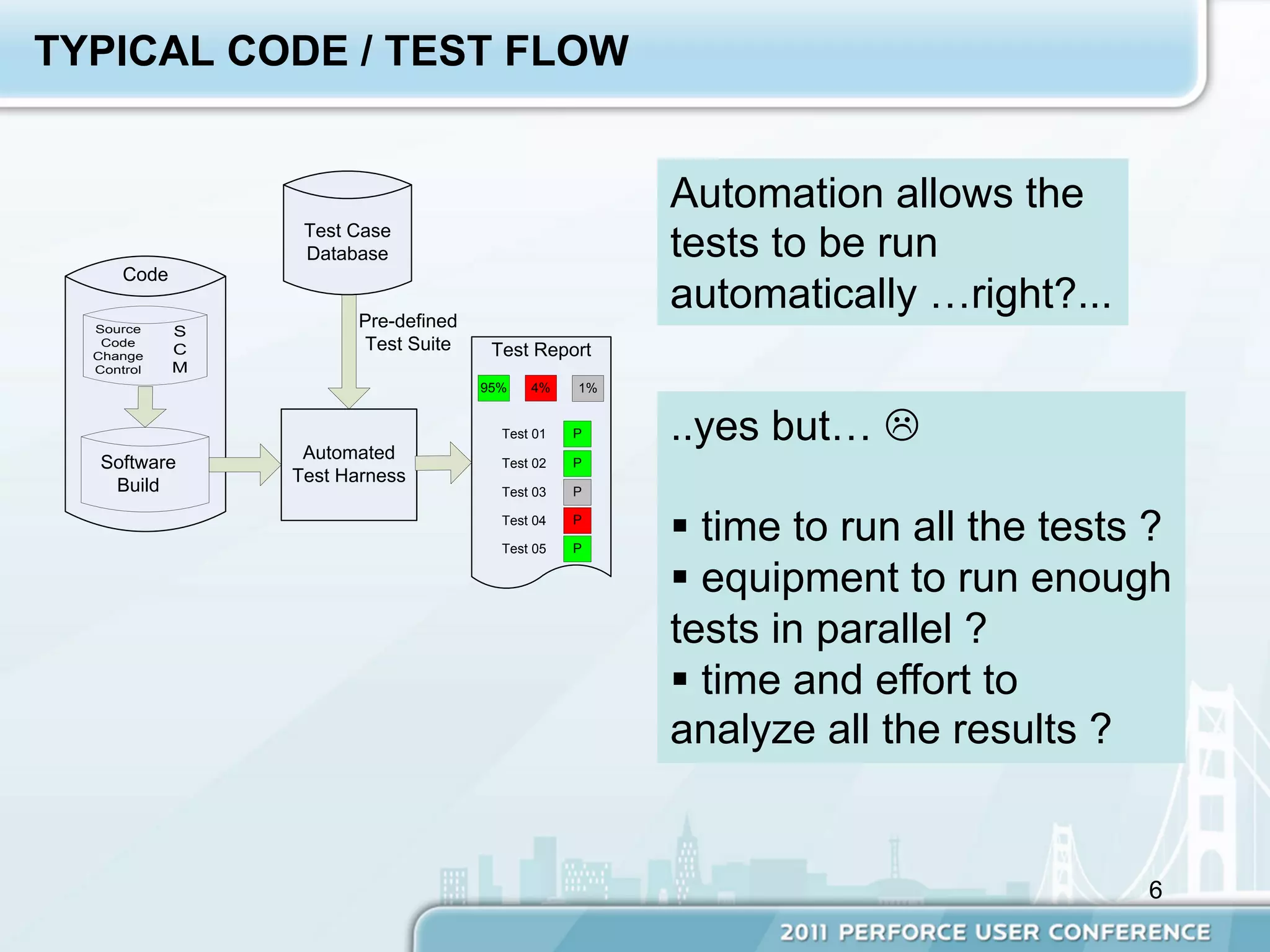

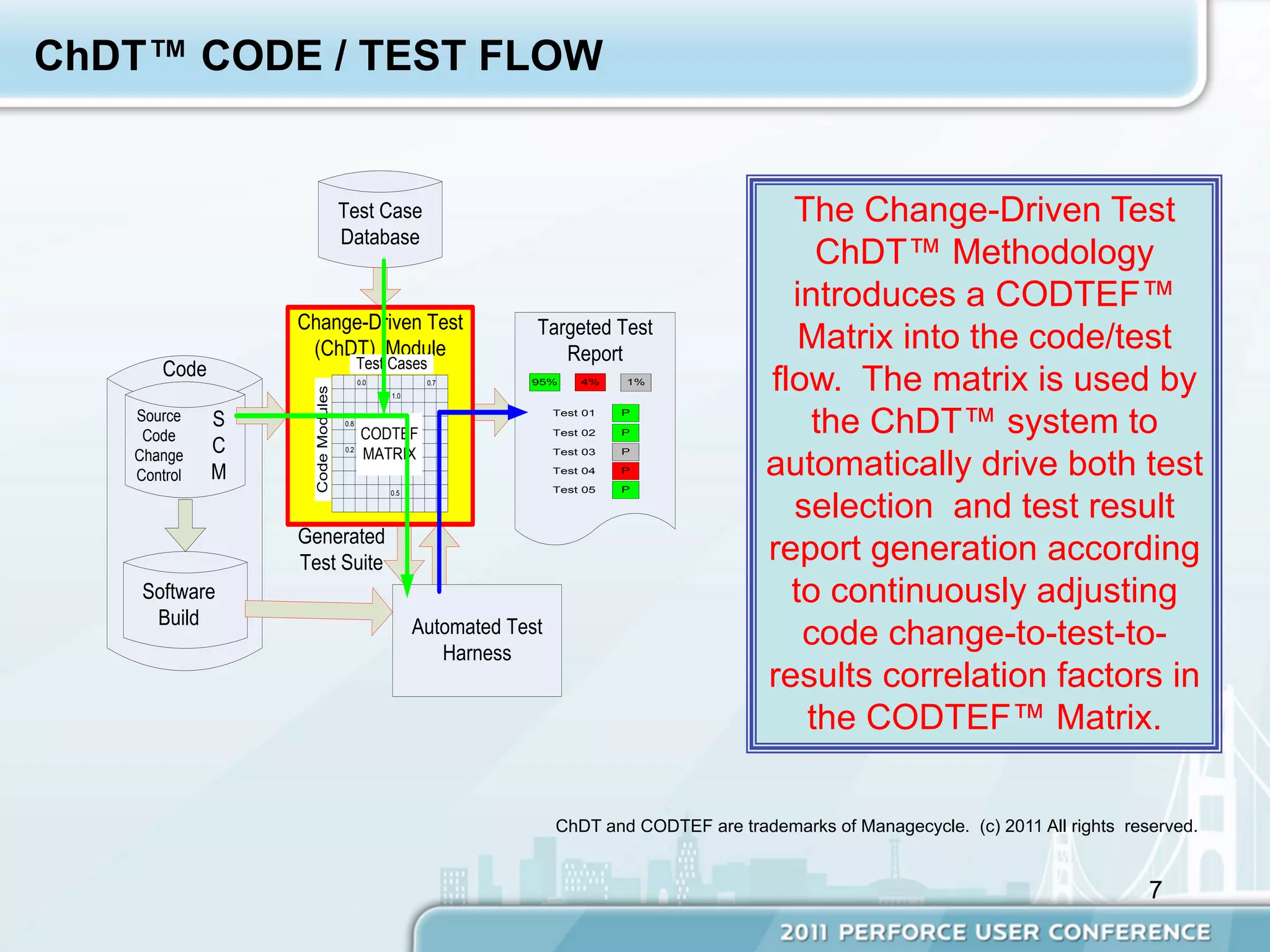

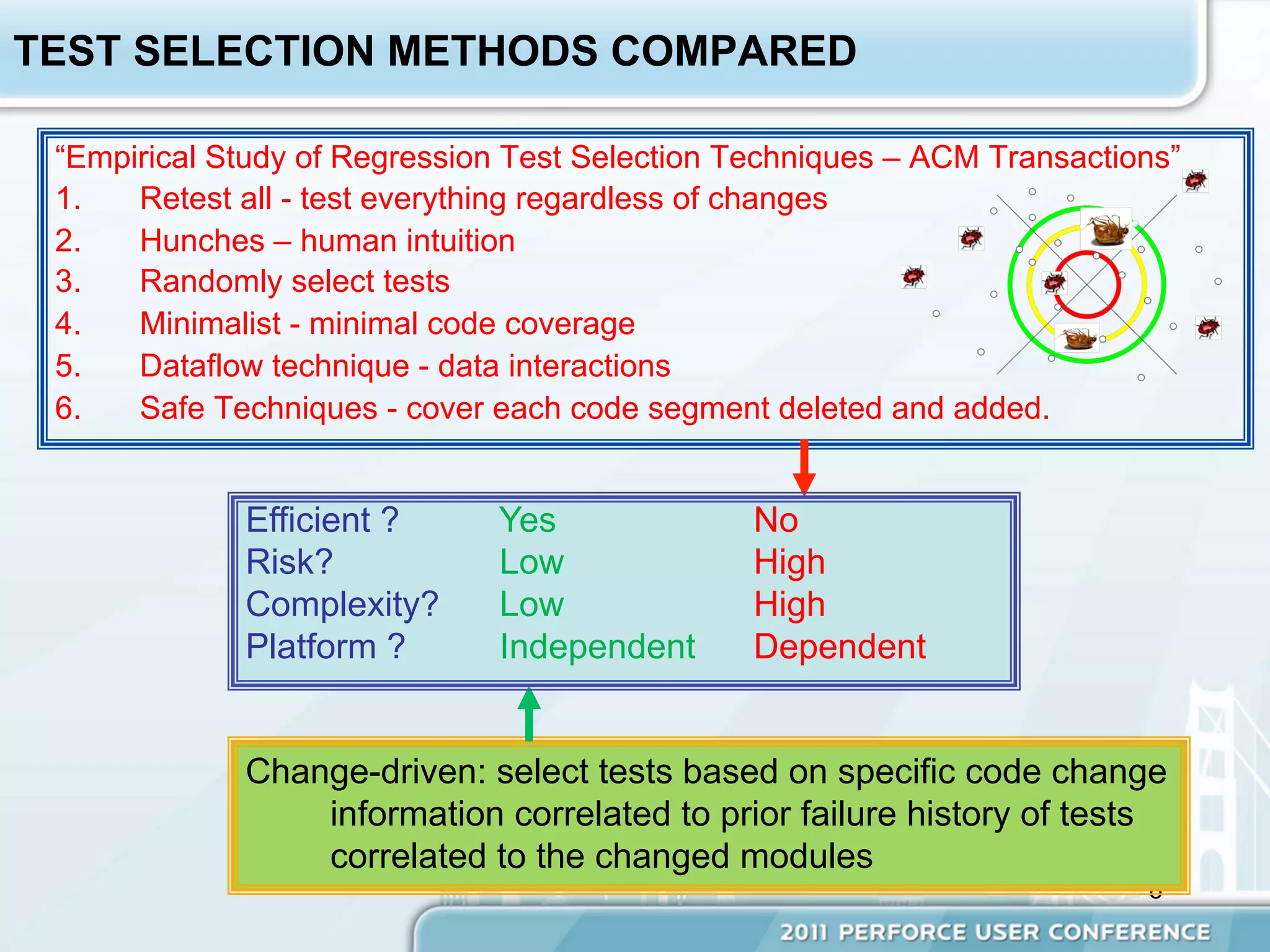

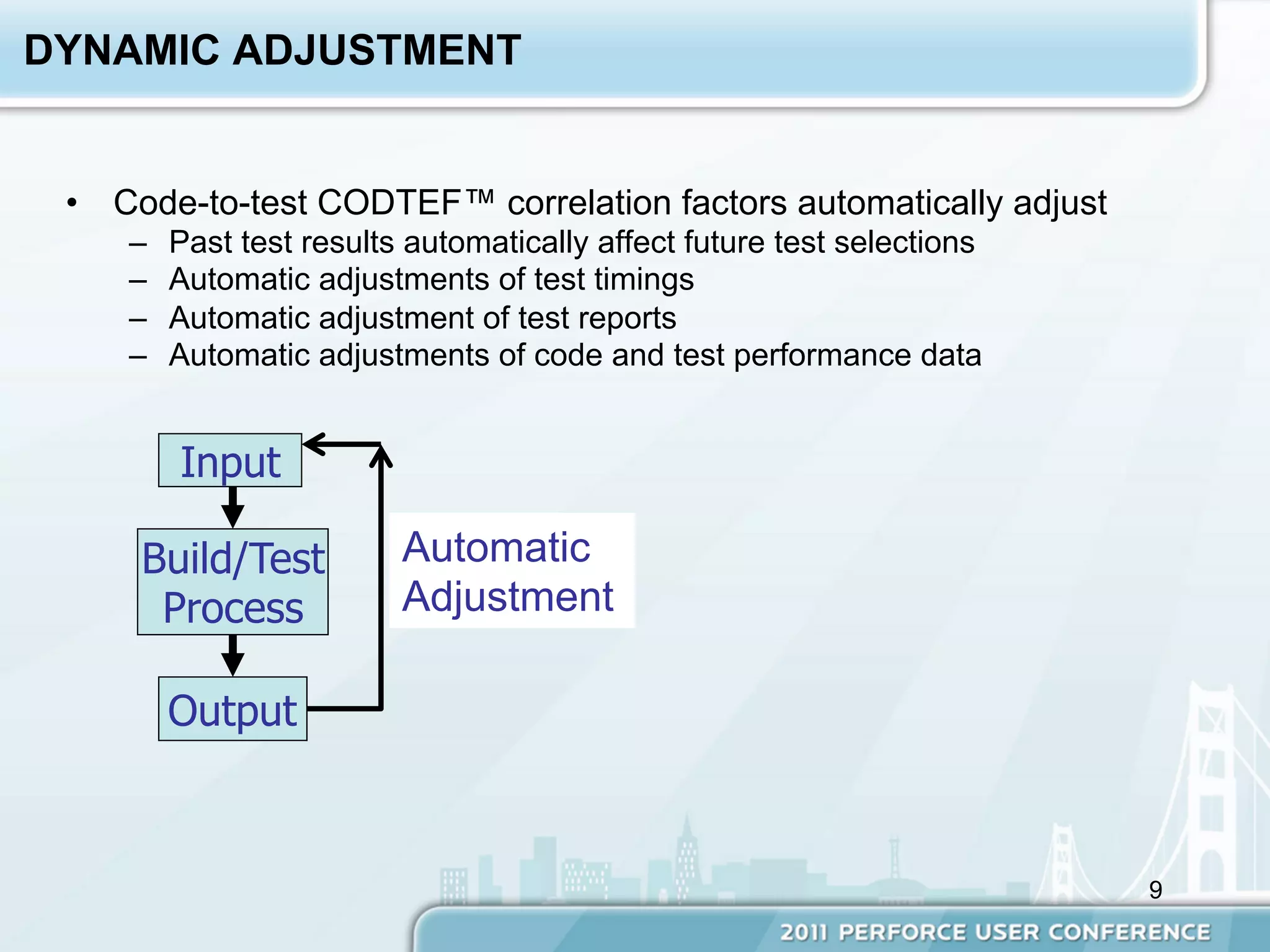

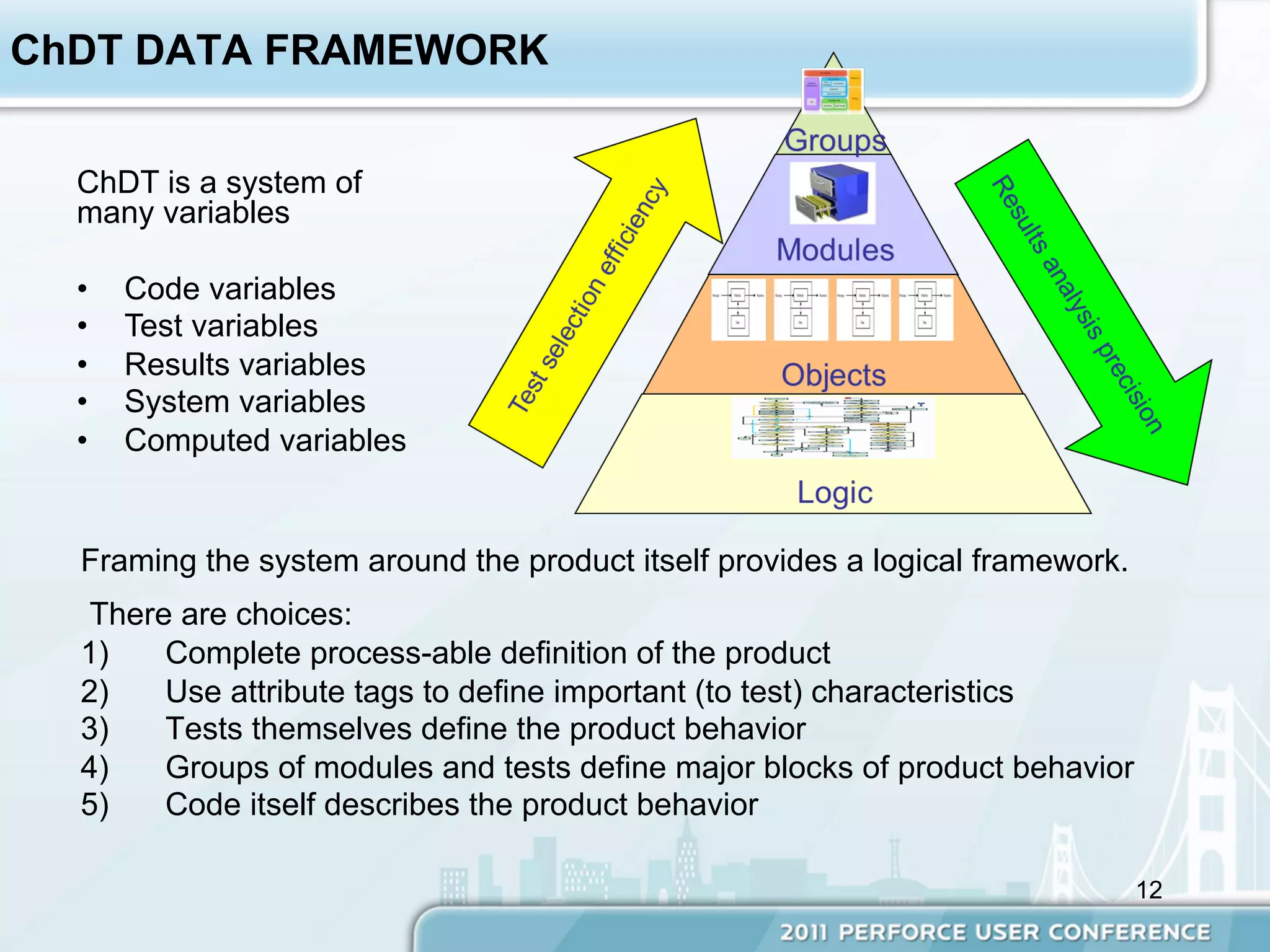

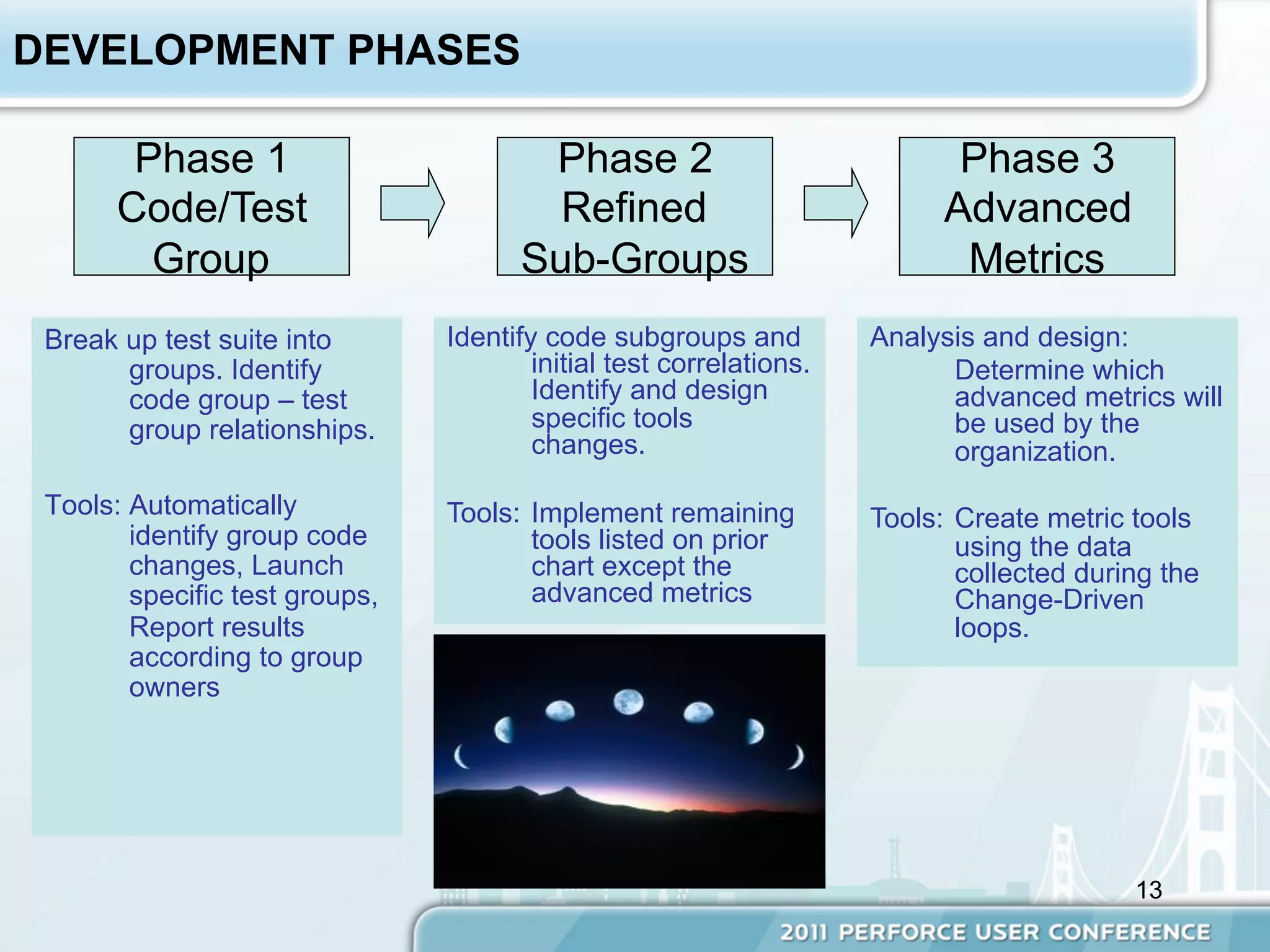

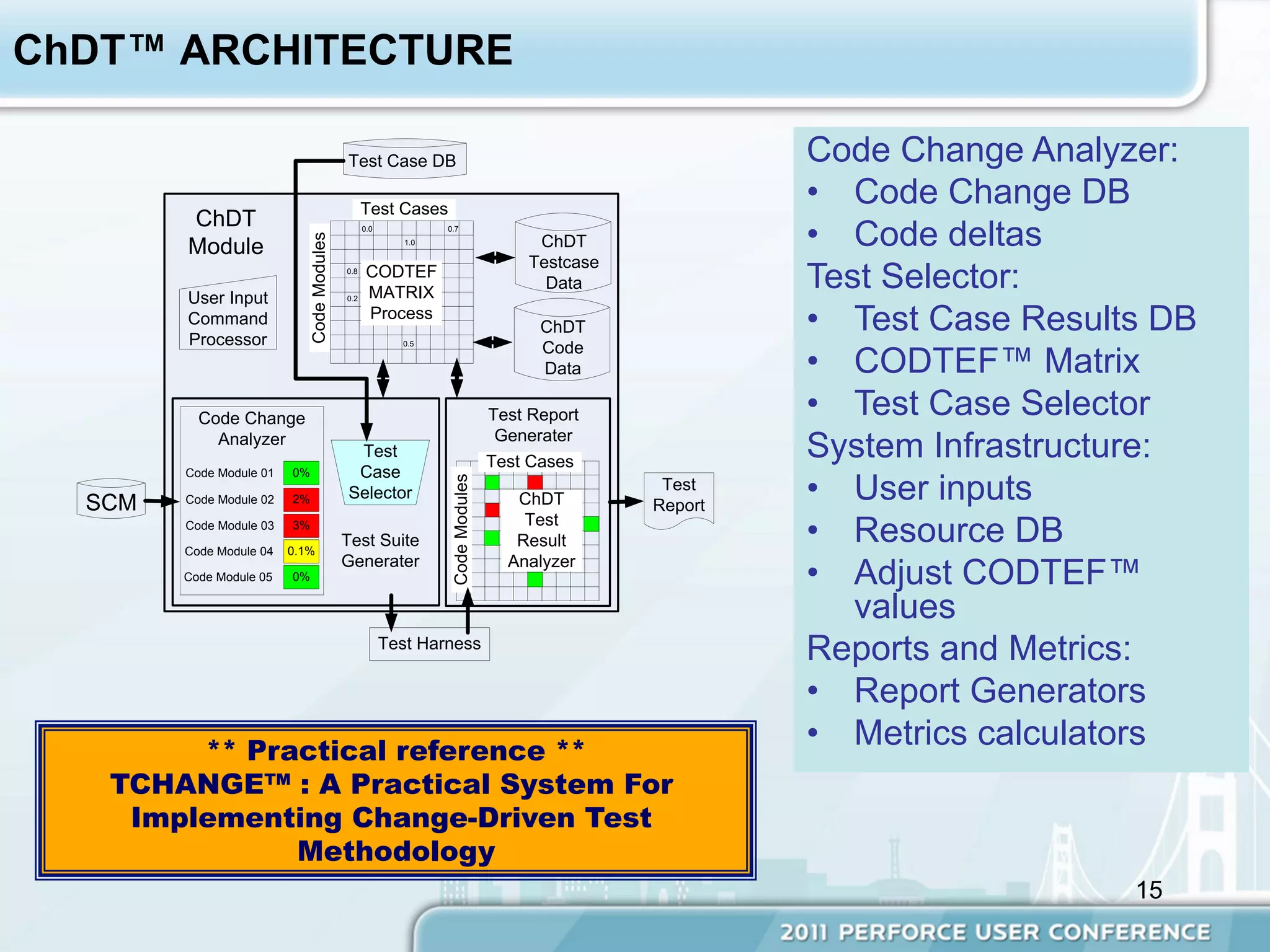

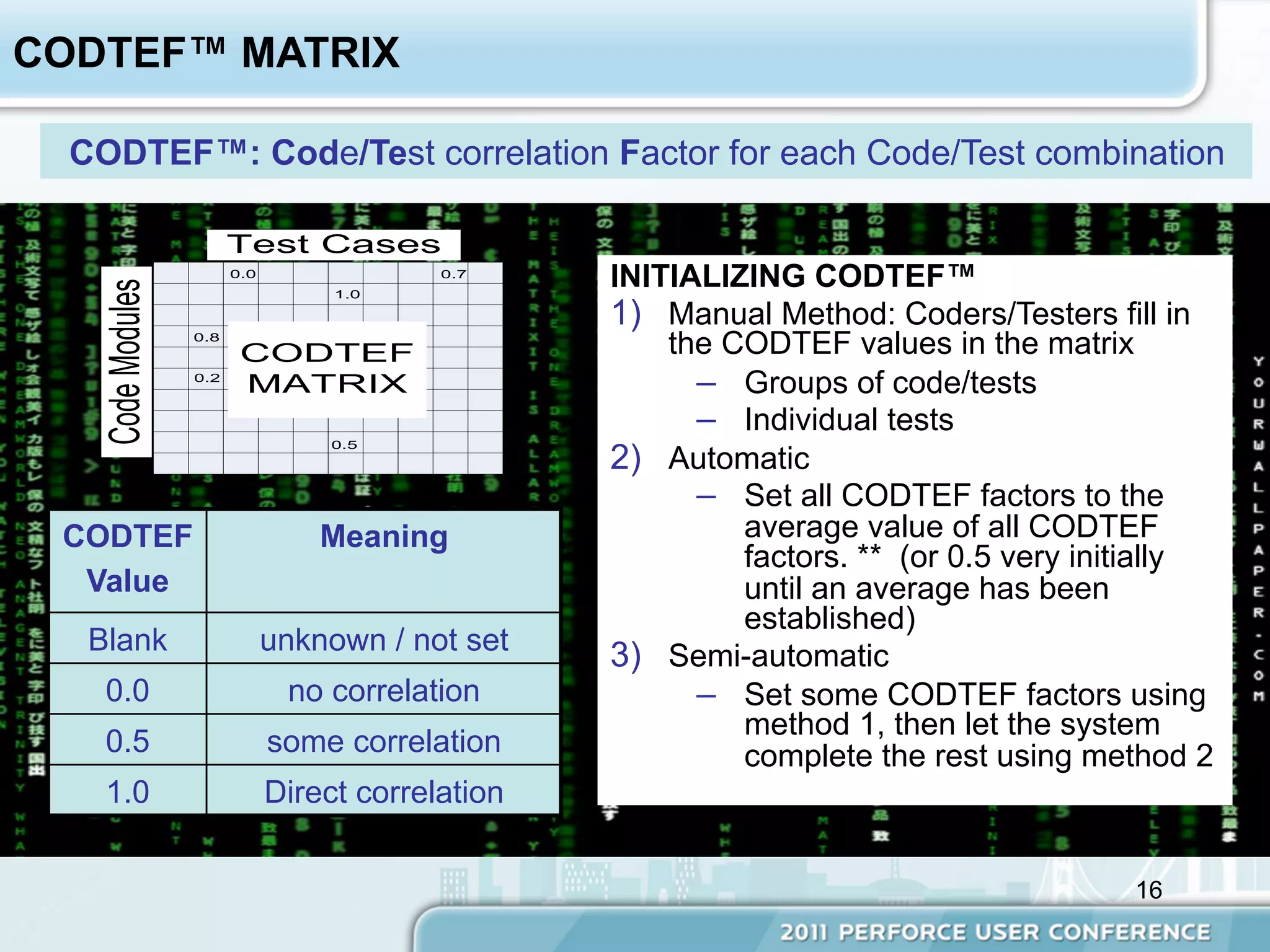

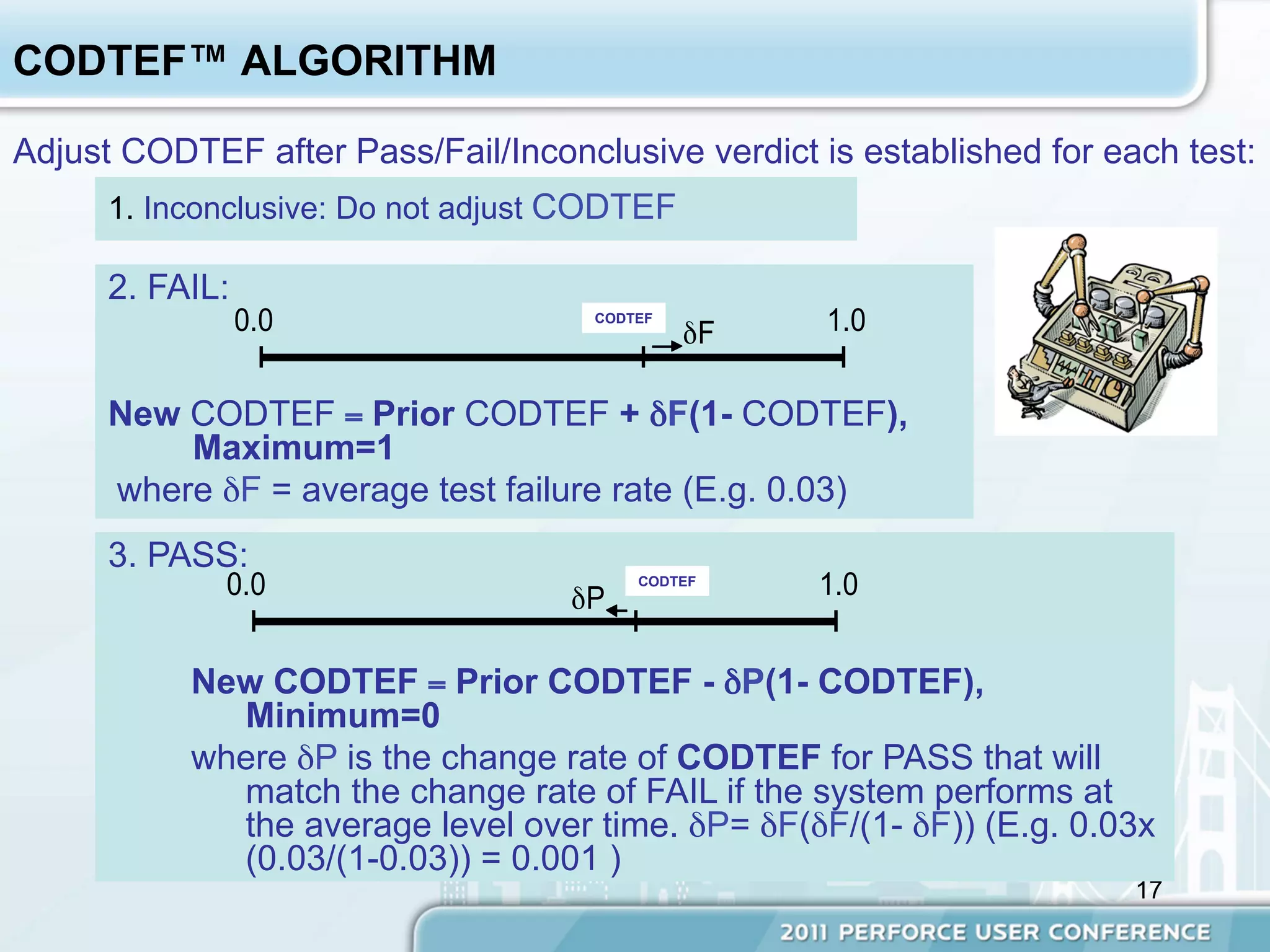

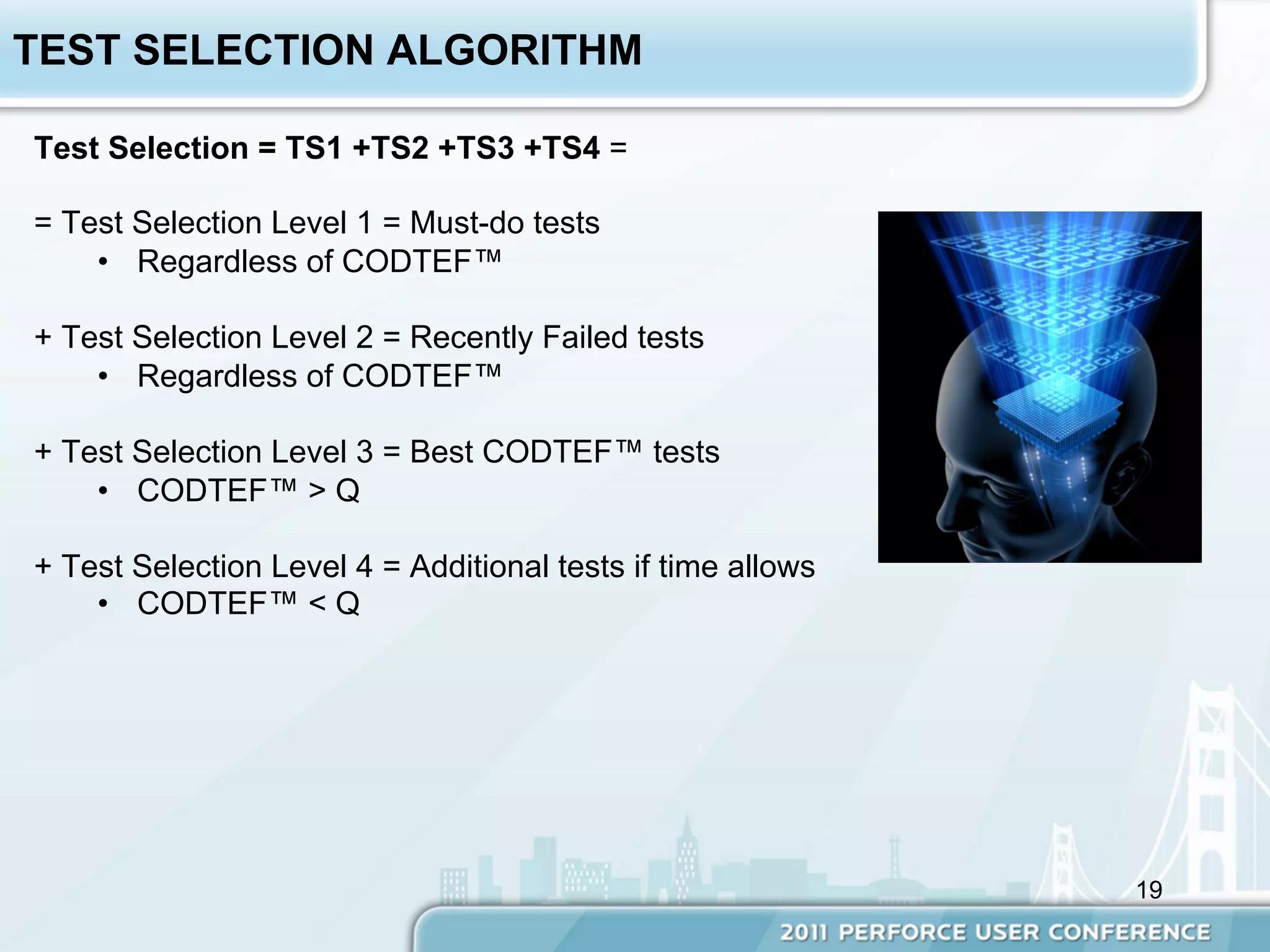

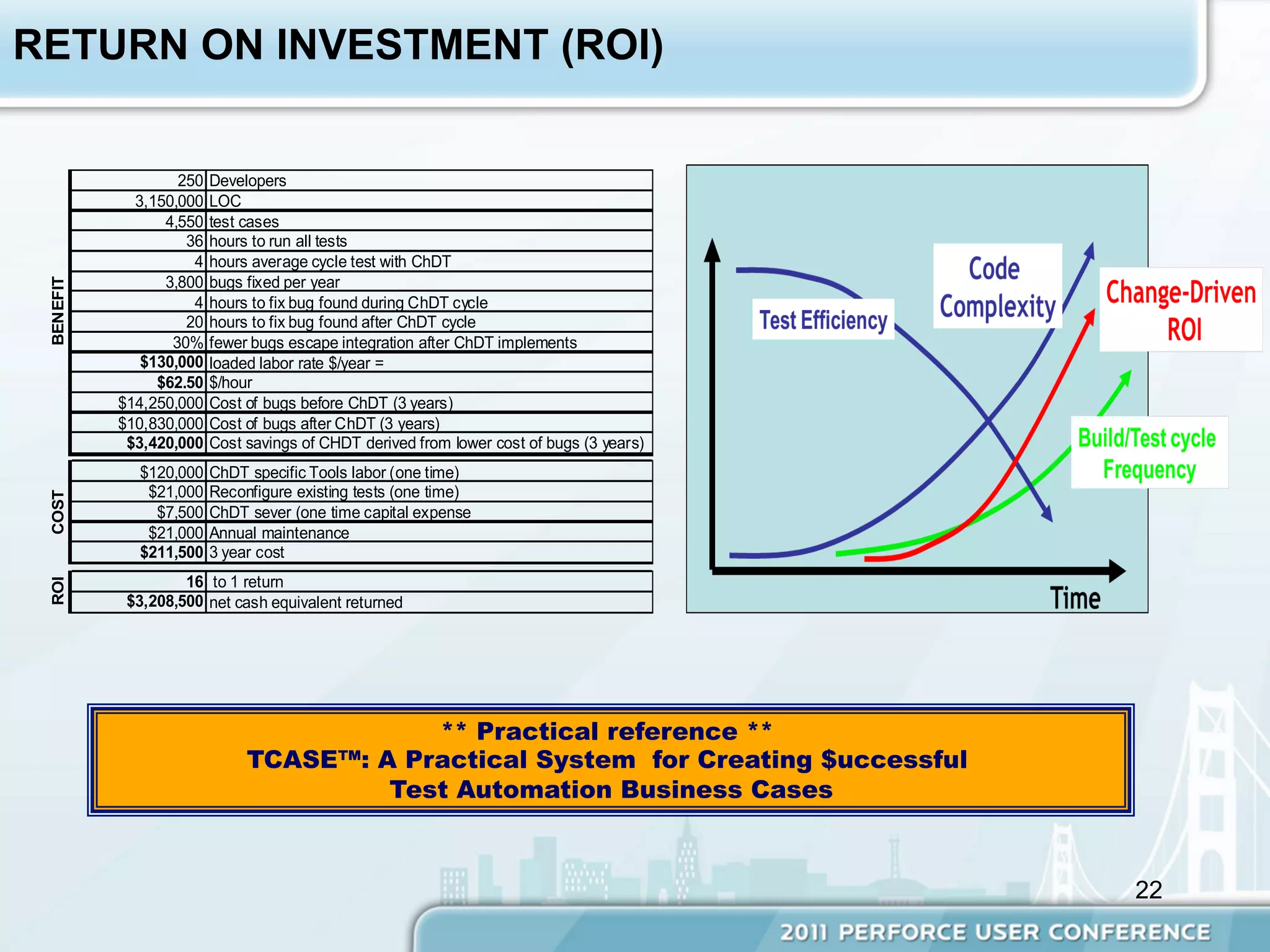

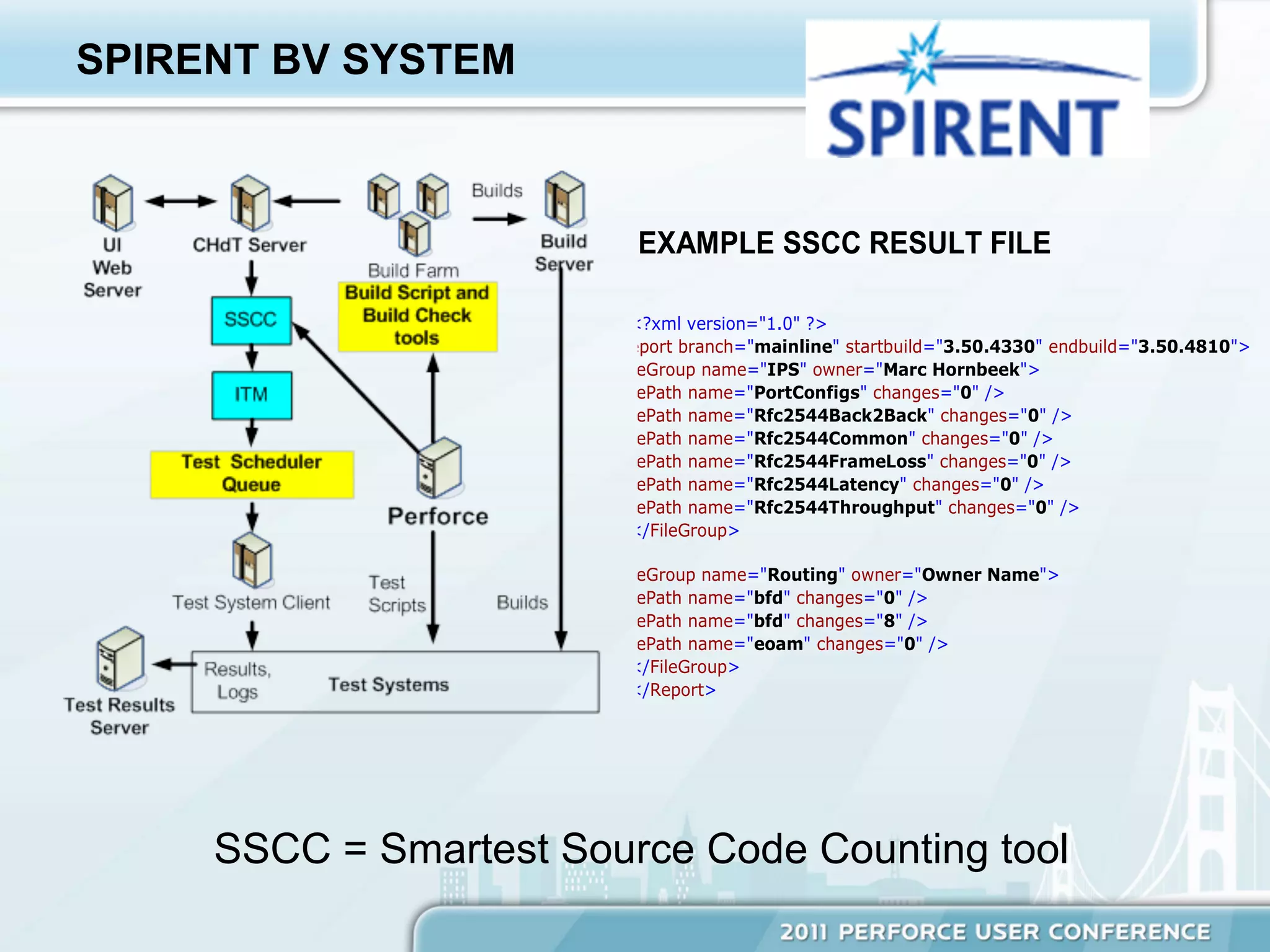

This document outlines the Continuous Change-Driven Build Verification (CHDT) methodology, created by Marc Hornbeek at Spirent Communications, which makes automated build verification more efficient by dynamically selecting tests for each code change using a correlation matrix. The approach reduces testing bottlenecks in large-scale systems by automating both test selection and result analysis on the basis of historical test results. The methodology is reported to be cost-effective, significantly reducing the cost of bugs across the software development process.
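
To make the idea of correlation-driven test selection concrete, here is a minimal Python sketch. It is not Hornbeek's implementation: the class name `CorrelationMatrix`, the starting weight of 0.5, the update step, the selection threshold, and the module and test names are all assumptions chosen for illustration. Each (code module, test) pair carries a weight that rises when the test fails after that module changed and falls when it passes, and a build runs only the tests whose weight for some changed module meets the threshold.

```python
class CorrelationMatrix:
    """Hypothetical code-to-test correlation store (illustrative only)."""

    def __init__(self, tests, initial=0.5, step=0.25):
        self.tests = list(tests)
        self.initial = initial  # assumed weight for pairs with no history
        self.step = step        # assumed learning rate for updates
        self.weights = {}       # (module, test) -> weight in [0, 1]

    def weight(self, module, test):
        # Pairs never seen before fall back to the initial weight.
        return self.weights.get((module, test), self.initial)

    def select_tests(self, changed_modules, threshold=0.5):
        """Return tests whose best weight over the changed modules meets the threshold."""
        selected = []
        for test in self.tests:
            score = max(
                (self.weight(m, test) for m in changed_modules),
                default=0.0,  # no changed modules means nothing to verify
            )
            if score >= threshold:
                selected.append(test)
        return selected

    def record_result(self, changed_modules, test, failed):
        """Move each weight toward 1.0 after a failure and toward 0.0 after a pass."""
        target = 1.0 if failed else 0.0
        for m in changed_modules:
            old = self.weight(m, test)
            self.weights[(m, test)] = old + self.step * (target - old)


# Usage: feed results back after each build, then select for the next change.
matrix = CorrelationMatrix(["t_login", "t_report"])
matrix.record_result(["auth"], "t_login", failed=True)
matrix.record_result(["auth"], "t_report", failed=False)
print(matrix.select_tests(["auth"]))
```

In this sketch a module with no history selects every test, which trades speed for safety until results accumulate; a real system would likely also schedule periodic full runs so that weights for rarely selected tests do not go stale.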