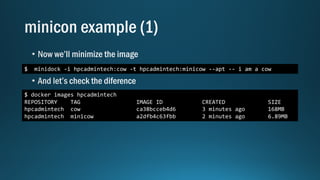

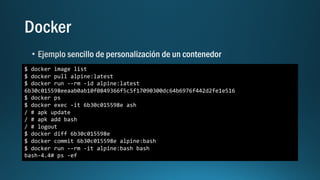

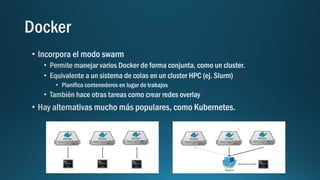

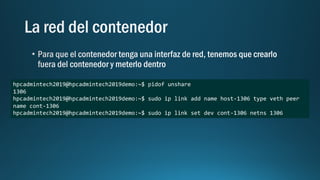

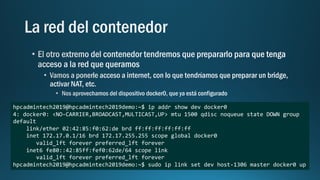

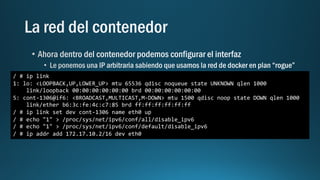

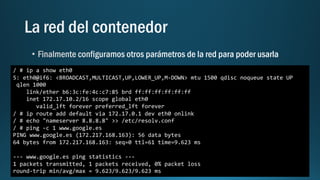

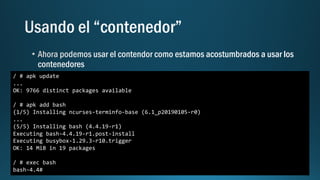

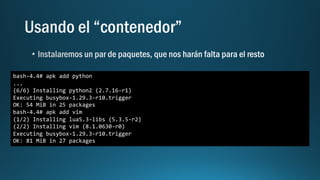

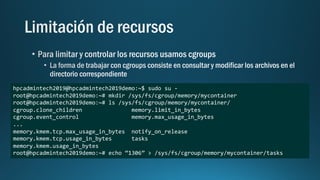

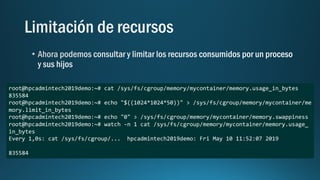

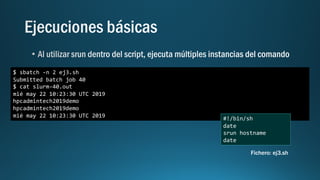

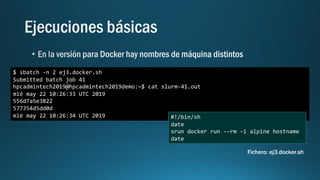

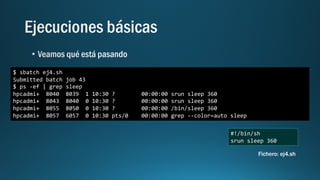

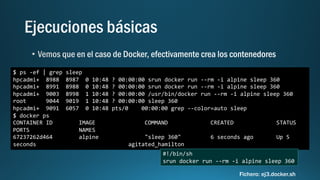

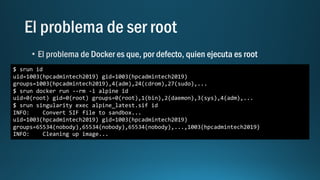

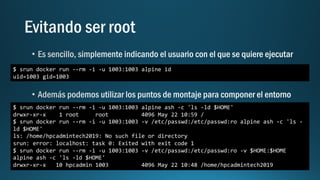

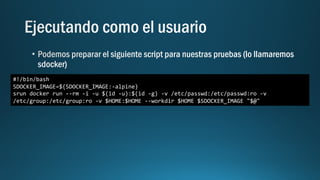

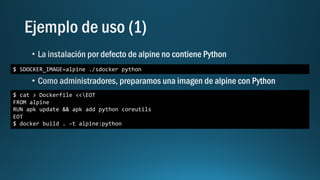

This deck walks through commands and procedures for managing Alpine Linux containers with Docker, LXC, and Singularity, and for configuring container networking. It covers pulling images, installing packages, setting up cgroups, and managing network interfaces, with particular attention to memory management and process control.
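
To make the cgroup memory discussion concrete, here is a minimal, read-only sketch that reports the memory limit applied to the current process. It assumes the usual Linux layout (membership in `/proc/self/cgroup`, cgroup filesystem mounted at `/sys/fs/cgroup`) and handles both the unified cgroup v2 hierarchy (`memory.max`) and the cgroup v1 memory controller (`memory.limit_in_bytes`). The function name `cgroup_mem_limit` is hypothetical, and both paths can be passed as arguments so the logic can be tried against a scratch directory.

```sh
#!/bin/sh
# Hypothetical helper (not from the slides): print the memory limit of the
# cgroup the current process belongs to.
#   $1: cgroup membership file (default /proc/self/cgroup)
#   $2: cgroup filesystem mount point (default /sys/fs/cgroup)
cgroup_mem_limit() {
    proc_cgroup=${1:-/proc/self/cgroup}
    cg_root=${2:-/sys/fs/cgroup}

    # cgroup v2 (unified hierarchy): the membership line is "0::/path"
    # and the limit lives in memory.max ("max" means unlimited).
    v2_path=$(awk -F: '$1 == "0" && $2 == "" { print $3; exit }' "$proc_cgroup")
    if [ -n "$v2_path" ] && [ -f "$cg_root$v2_path/memory.max" ]; then
        cat "$cg_root$v2_path/memory.max"
        return 0
    fi

    # cgroup v1: the memory controller line looks like "4:memory:/path"
    # and the limit lives in memory.limit_in_bytes under the memory mount.
    v1_path=$(awk -F: '$2 ~ /(^|,)memory(,|$)/ { print $3; exit }' "$proc_cgroup")
    if [ -n "$v1_path" ] && [ -f "$cg_root/memory$v1_path/memory.limit_in_bytes" ]; then
        cat "$cg_root/memory$v1_path/memory.limit_in_bytes"
        return 0
    fi

    echo "cgroup_mem_limit: no memory limit file found" >&2
    return 1
}
```

Inside a Docker container started with `--memory 100m`, for example, it should report the limit in bytes; `max` (cgroup v2) or a very large number (cgroup v1) means no limit is set. The true root cgroup has no `memory.max`, so the function reports an error there.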

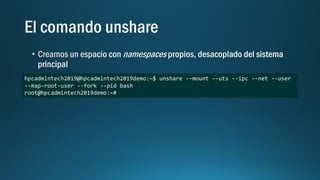

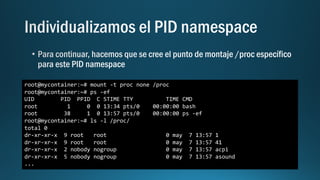

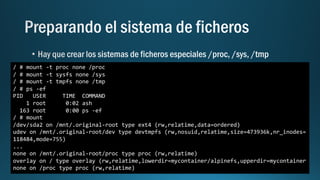

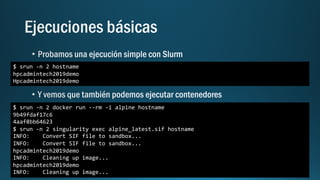

![id and ps -ef inside the unshare session](https://image.slidesharecdn.com/containersforsysadmins-190528140336/85/Containers-for-sysadmins-28-320.jpg)

```
root@mycontainer:~# id
uid=0(root) gid=0(root) groups=0(root),65534(nogroup)
root@mycontainer:~# ps -ef
UID        PID  PPID  C STIME TTY          TIME CMD
nobody       1     0  0 12:38 ?        00:00:01 /sbin/init maybe-ubiquity
nobody       2     0  0 12:38 ?        00:00:00 [kthreadd]
nobody       4     2  0 12:38 ?        00:00:00 [kworker/0:0H]
...
root      1472  1471  0 13:32 pts/0    00:00:00 -bash
root      1505  1472  0 13:34 pts/0    00:00:00 unshare --mount --uts --ipc --net
root      1507  1505  0 13:34 pts/0    00:00:00 bash
nobody    1527     2  0 13:44 ?        00:00:00 [kworker/u2:0]
root      1529  1507  0 13:48 pts/0    00:00:00 ps -ef
root@mycontainer:~#
```
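
The transcript is consistent with a user namespace: the shell is uid 0 inside it, while processes owned by host users that have no entry in the namespace's UID map are displayed with the overflow UID 65534 (`nobody`). The kernel keeps that mapping in `/proc/<pid>/uid_map`, one range per line as `first-uid-inside first-uid-outside length`. As a minimal sketch, the translation applied when `ps` shows host processes can be reproduced in shell; the function name `host_uid_to_ns_uid` is hypothetical, and 65534 is assumed to be the default overflow UID.

```sh
#!/bin/sh
# Hypothetical helper: translate a host (outside) UID into the UID that
# processes inside a user namespace see. The map file uses the kernel's
# uid_map format: "first-uid-inside first-uid-outside range-length".
# UIDs not covered by any range are shown as the overflow UID
# (assumed here to be the default, 65534, i.e. "nobody").
host_uid_to_ns_uid() {
    host_uid=$1
    map_file=${2:-/proc/self/uid_map}
    awk -v uid="$host_uid" '
        BEGIN { uid += 0 }    # force a numeric comparison
        # Does this range of outside UIDs contain the host UID?
        uid >= $2 && uid < $2 + $3 { print uid - $2 + $1; found = 1; exit }
        # No range matched: report the overflow UID
        END { if (!found) print 65534 }
    ' "$map_file"
}
```

For example, after `unshare --user --map-root-user bash` is run by a user with UID 1000, the map is `0 1000 1`: host UID 1000 appears as 0 (`root`) and host UID 0 appears as 65534 (`nobody`), the same pattern as in the `ps -ef` listing.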

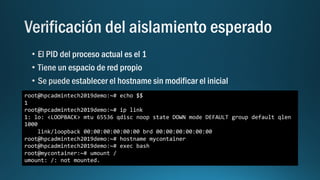

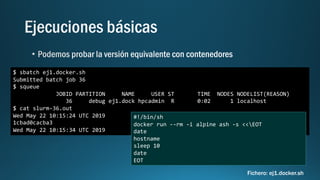

![Running ej2.sh as a Slurm batch job](https://image.slidesharecdn.com/containersforsysadmins-190528140336/85/Containers-for-sysadmins-54-320.jpg)

File: ej2.sh

```sh
#!/bin/sh
cat /etc/issue
ps -ef
```

```
$ sbatch ej2.sh
Submitted batch job 38
$ cat slurm-38.out
Ubuntu 18.04.2 LTS \n \l

UID        PID  PPID  C STIME TTY          TIME CMD
root         1     0  0 08:49 ?        00:00:12 /sbin/init maybe-ubiquity
root         2     0  0 08:49 ?        00:00:00 [kthreadd]
...
```
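
The job output shows the node's Ubuntu 18.04 release and `/sbin/init` as PID 1, which suggests the batch job ran as an ordinary process on the compute node rather than inside a container. A job script can make this visible with a best-effort runtime check like the sketch below. It is a heuristic, not an authoritative test: it assumes the marker files that Docker (`/.dockerenv`) and Podman (`/run/.containerenv`) create, and the `SINGULARITY_CONTAINER` / `APPTAINER_CONTAINER` variables set inside Singularity and Apptainer containers. The function name `container_runtime` is hypothetical.

```sh
#!/bin/sh
# Hypothetical helper: best-effort guess at the container runtime this
# script runs under. It only checks well-known markers, so "none" means
# "no marker found", not "definitely not in a container".
# $1 optionally overrides the filesystem root (default "/"), for testing.
container_runtime() {
    root=${1:-}
    if [ -n "${SINGULARITY_CONTAINER:-}" ] || [ -n "${APPTAINER_CONTAINER:-}" ]; then
        echo singularity    # variables set inside Singularity/Apptainer
    elif [ -f "$root/.dockerenv" ]; then
        echo docker         # marker file created by Docker
    elif [ -f "$root/run/.containerenv" ]; then
        echo podman         # marker file created by Podman
    else
        echo none
    fi
}
```

Adding a line such as `echo "runtime: $(container_runtime)"` to ej2.sh would print `runtime: none` for the plain batch job above, provided none of the markers exist on the node.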

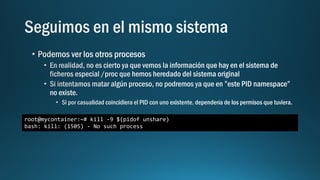

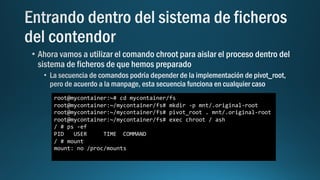

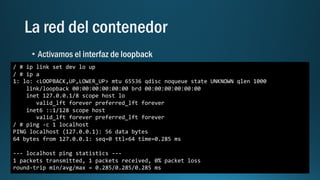

![Building and running a cowsay image](https://image.slidesharecdn.com/containersforsysadmins-190528140336/85/Containers-for-sysadmins-77-320.jpg)

```dockerfile
FROM ubuntu
RUN apt-get update && apt-get install -y cowsay
ENTRYPOINT ["/usr/games/cowsay"]
```

```
$ docker build . -t hpcadmintech:cow
...
$ docker run --rm -it hpcadmintech:cow i am a cow in a fat container
 _______________________________
< i am a cow in a fat container >
 -------------------------------
        \   ^__^
         \  (oo)\_______
            (__)\       )\/\
                ||----w |
                ||     ||
```