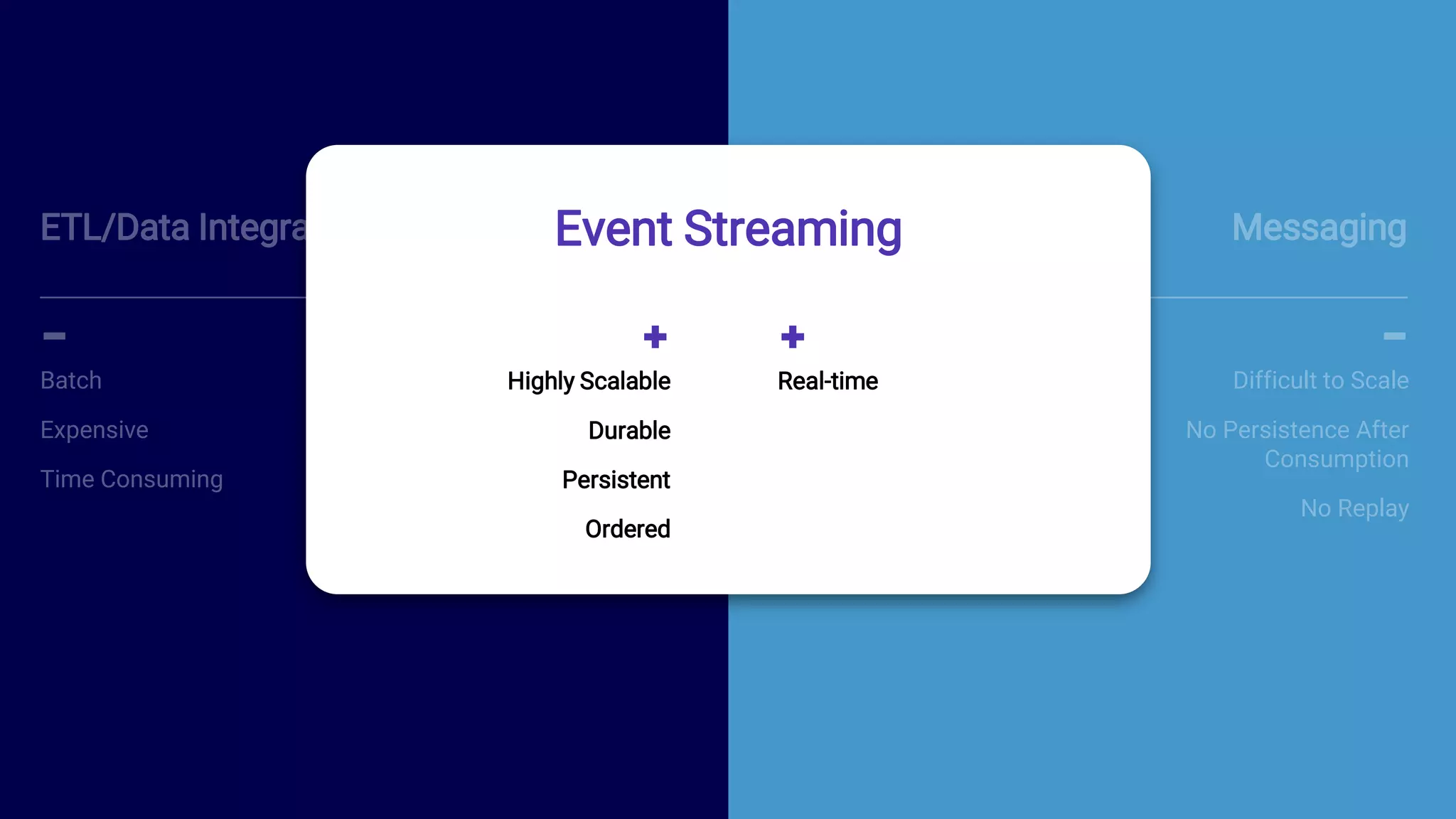

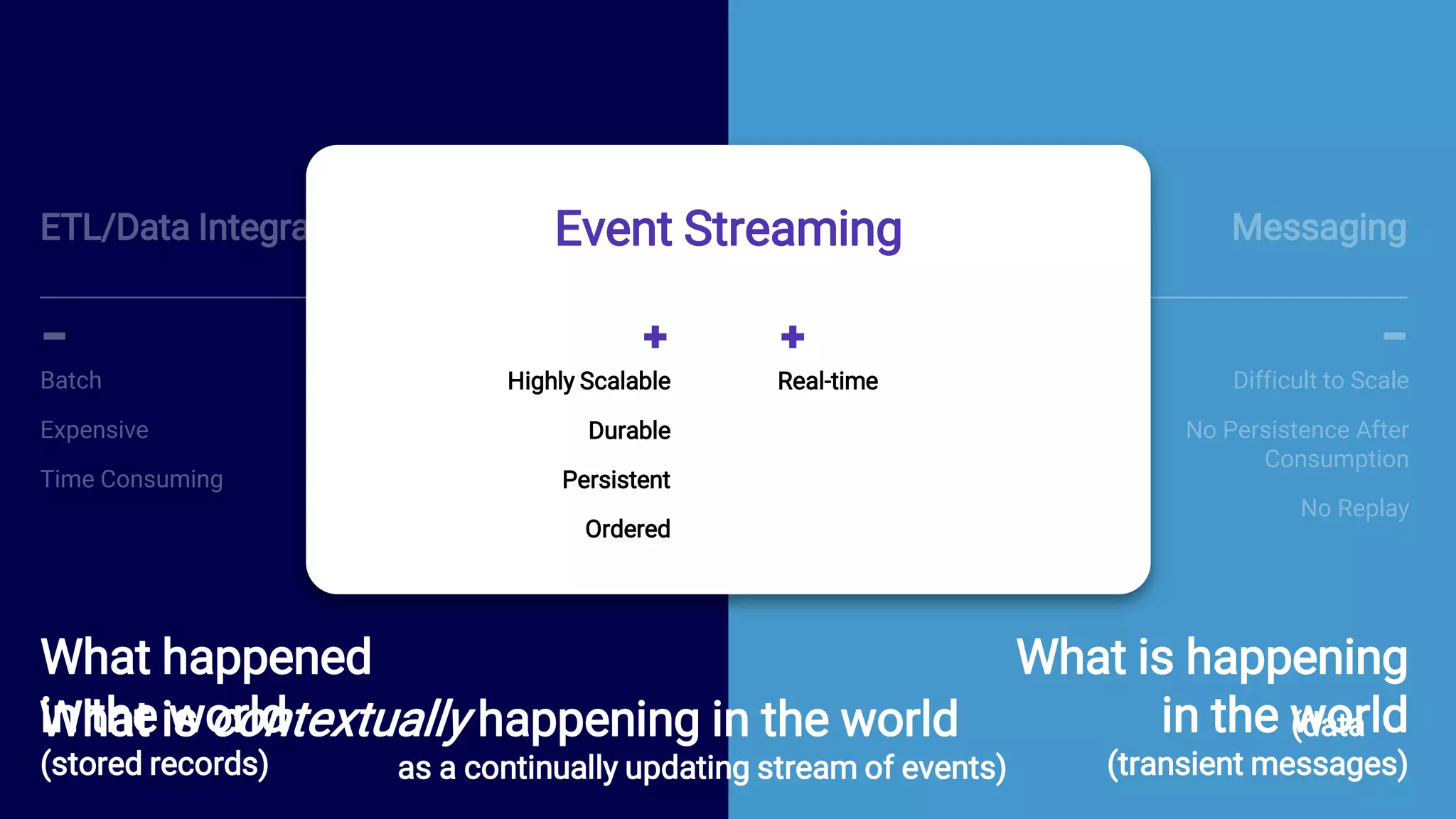

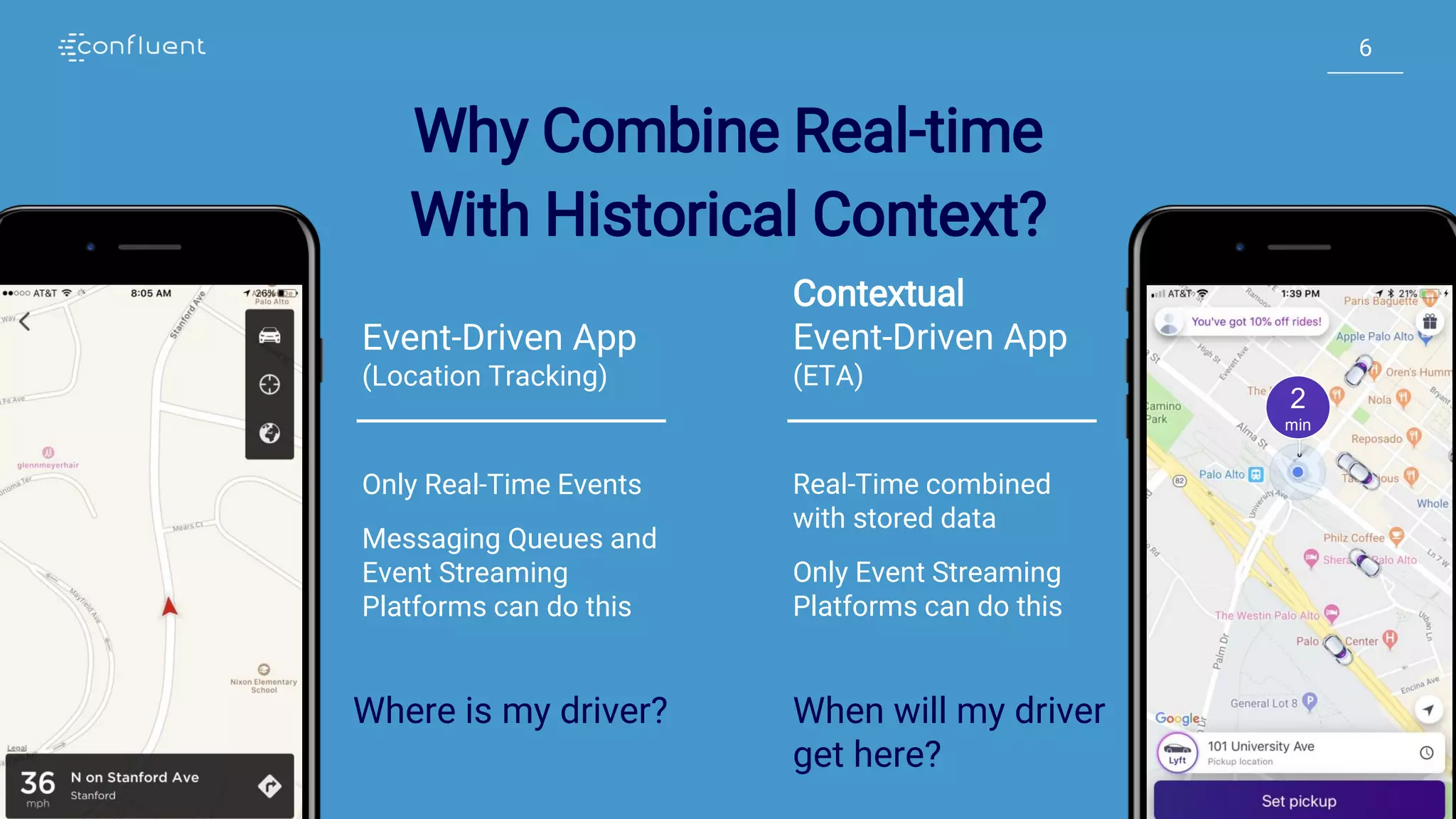

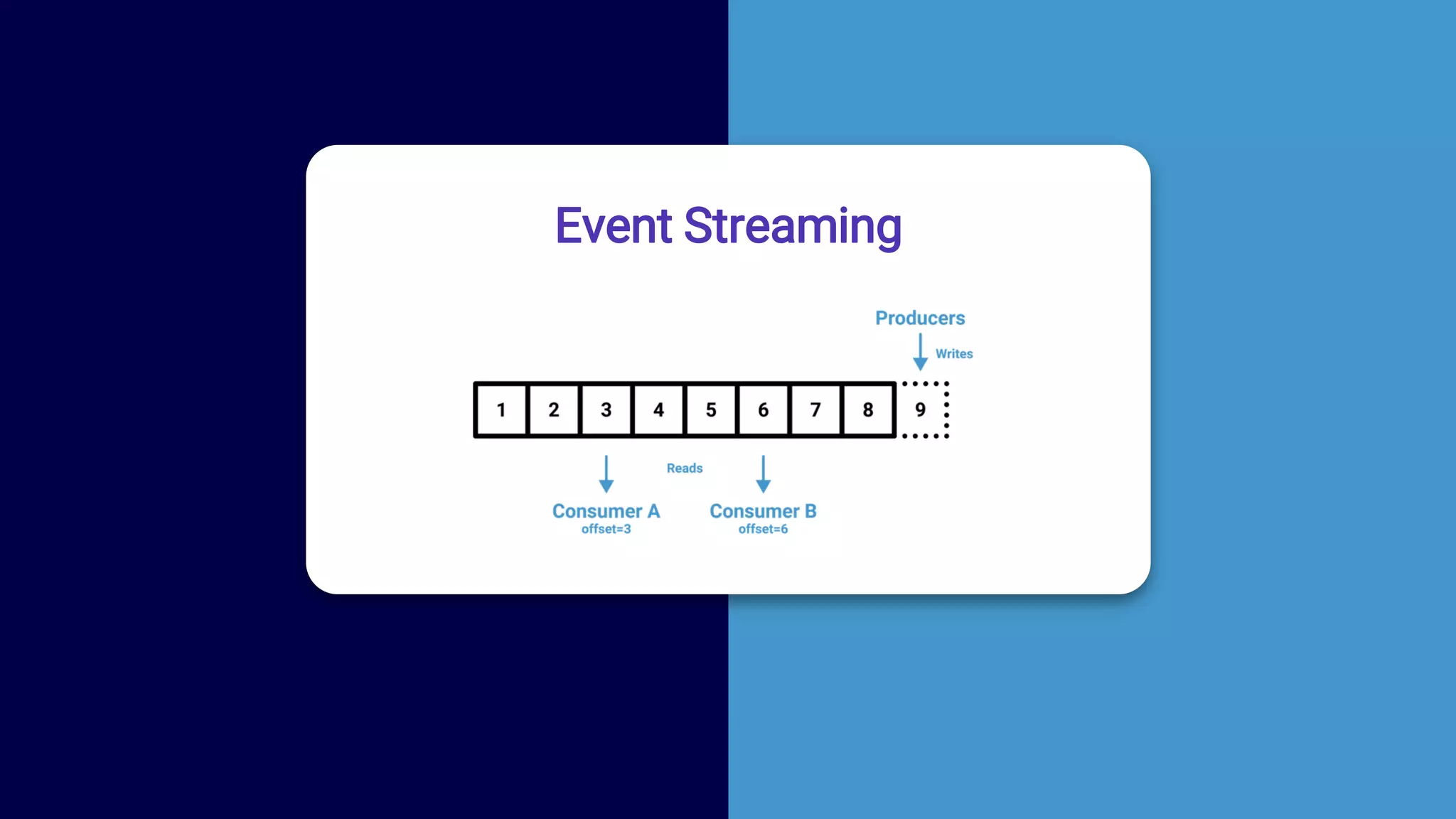

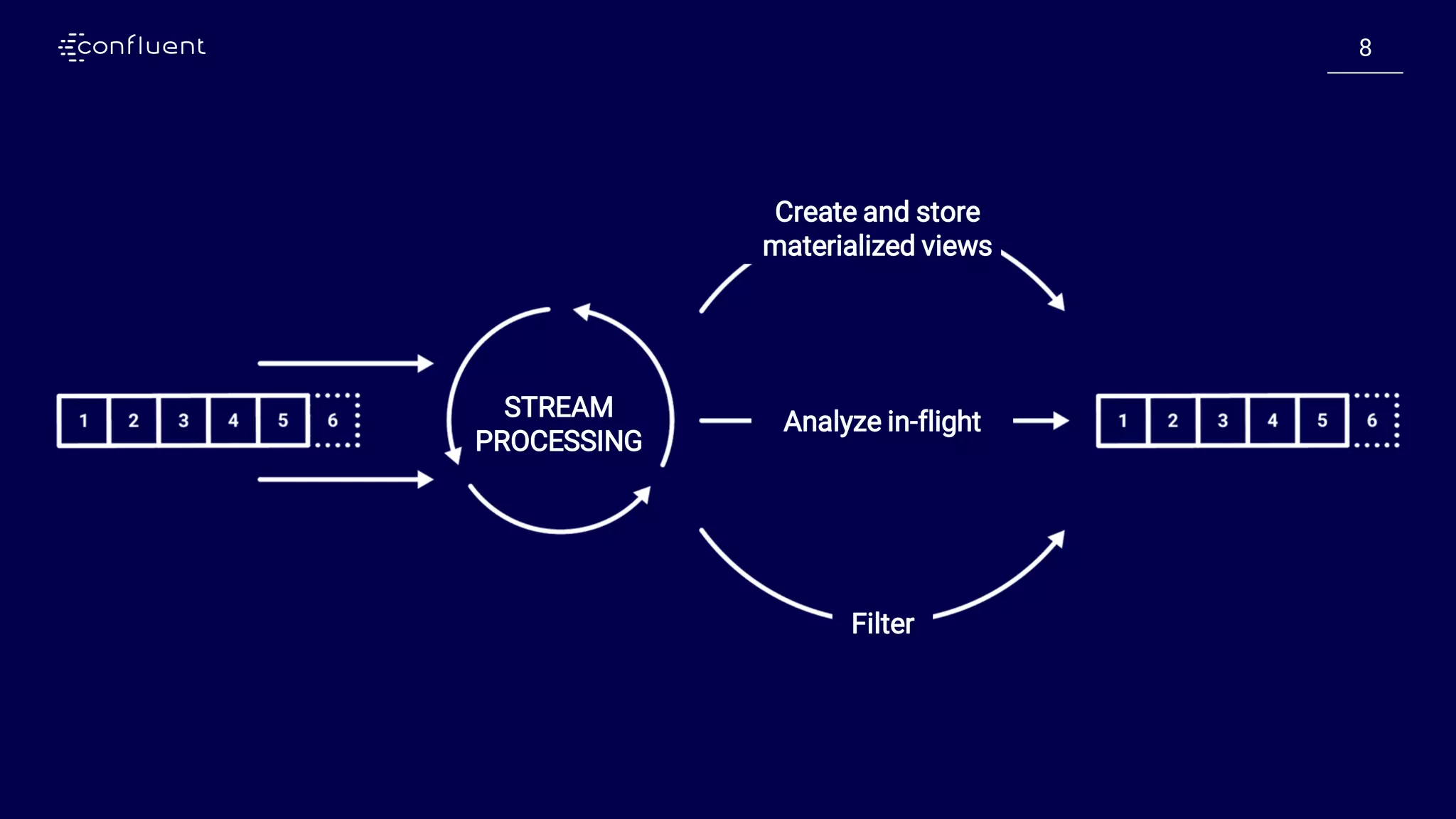

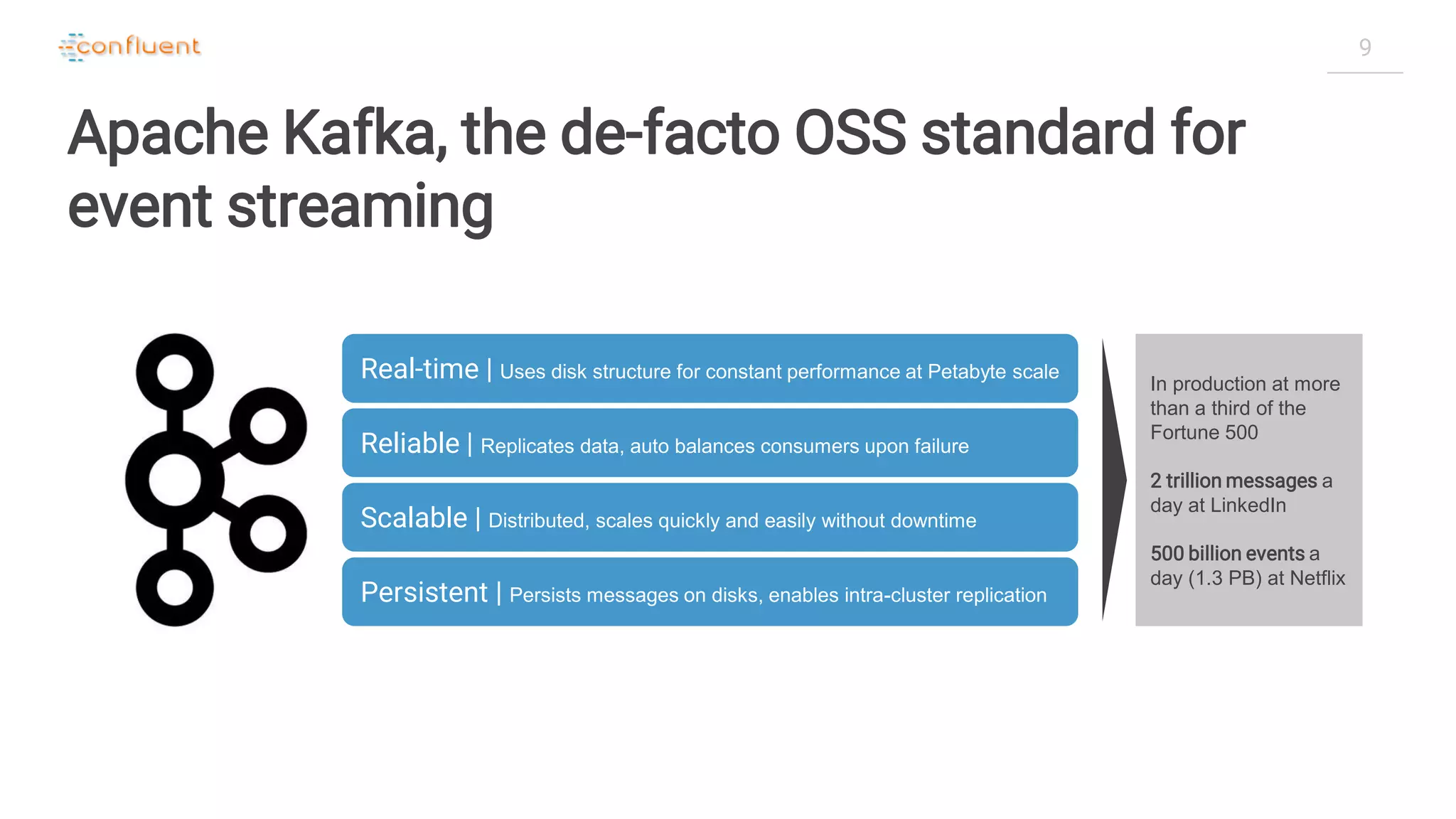

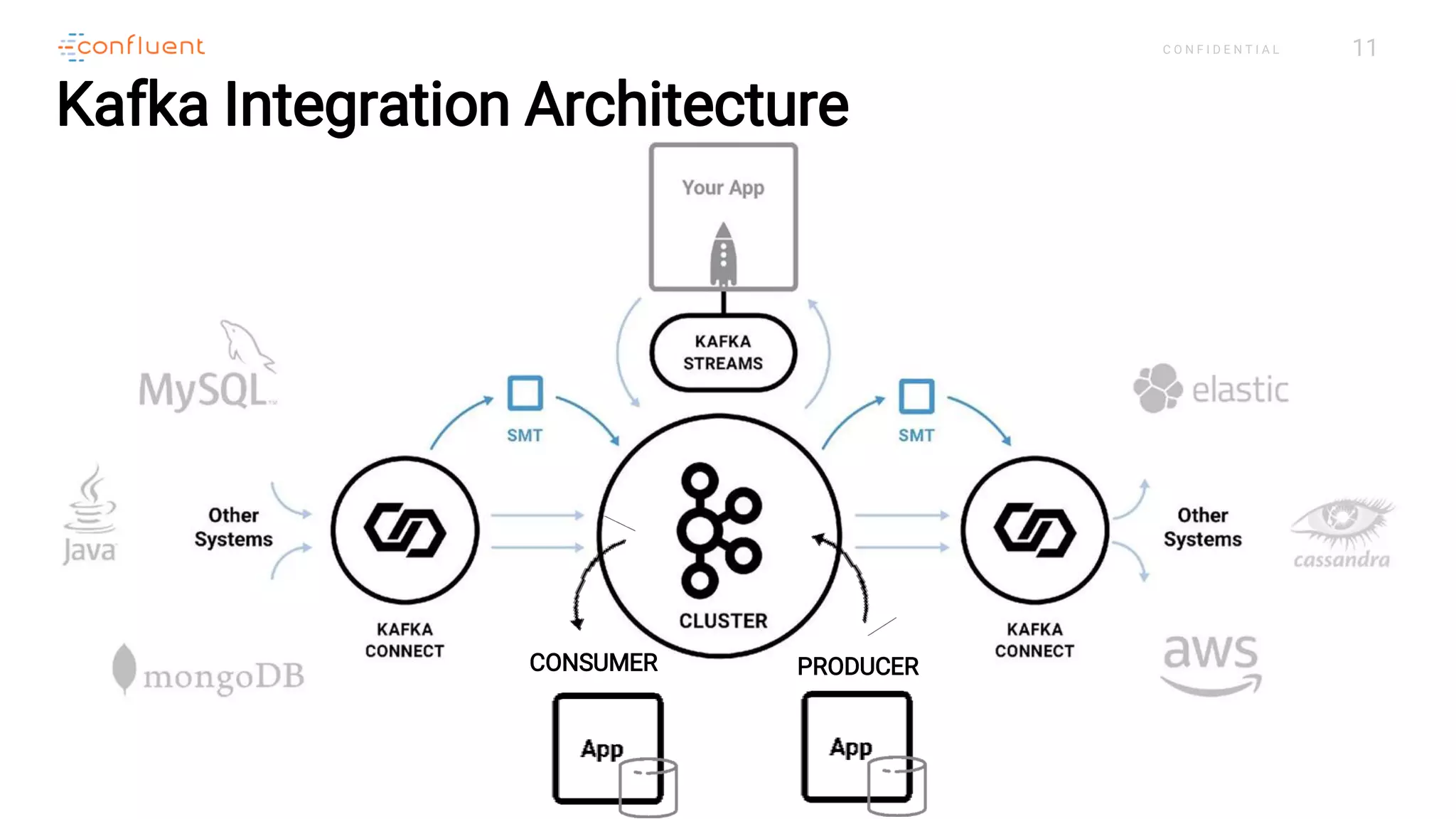

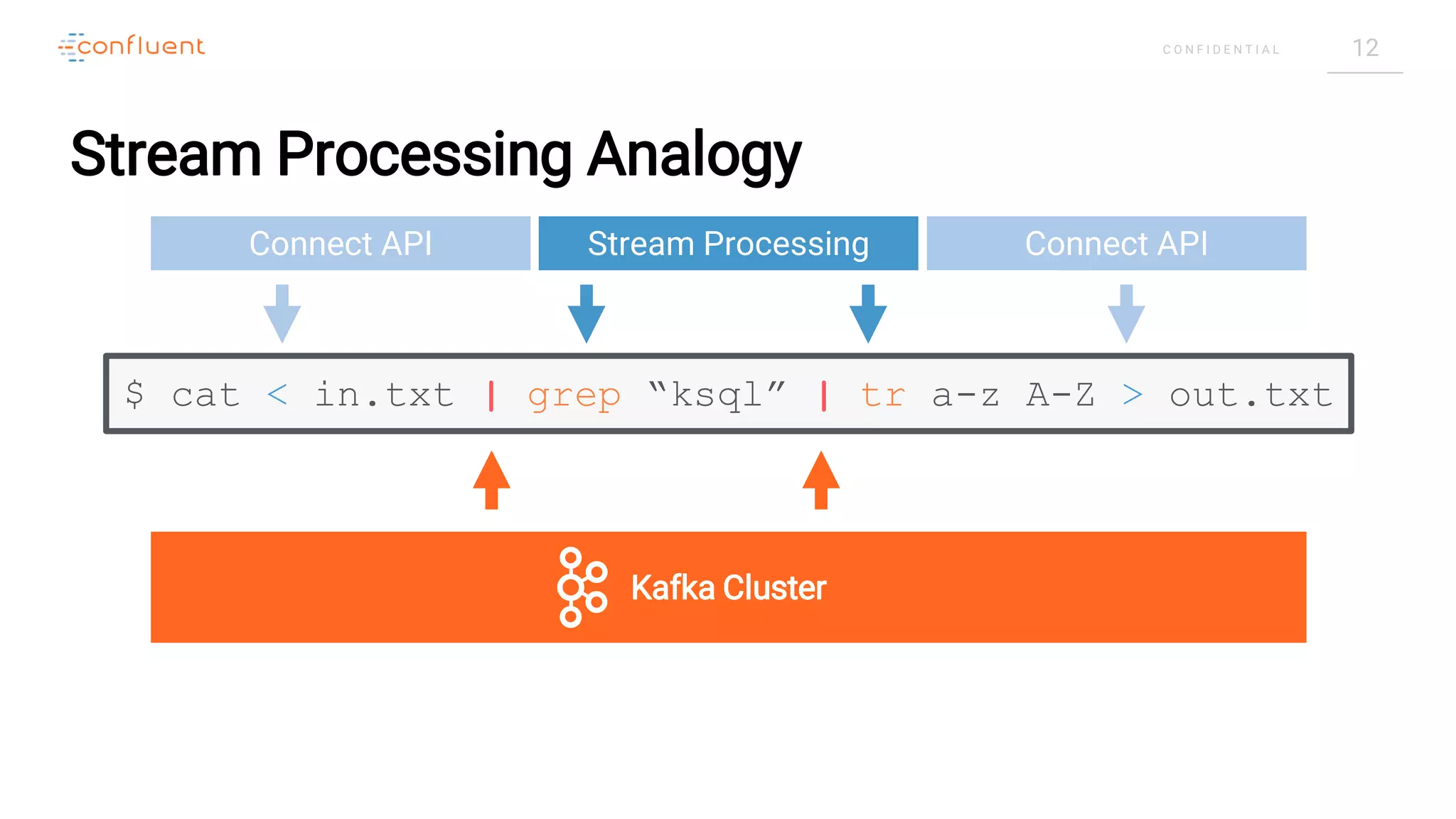

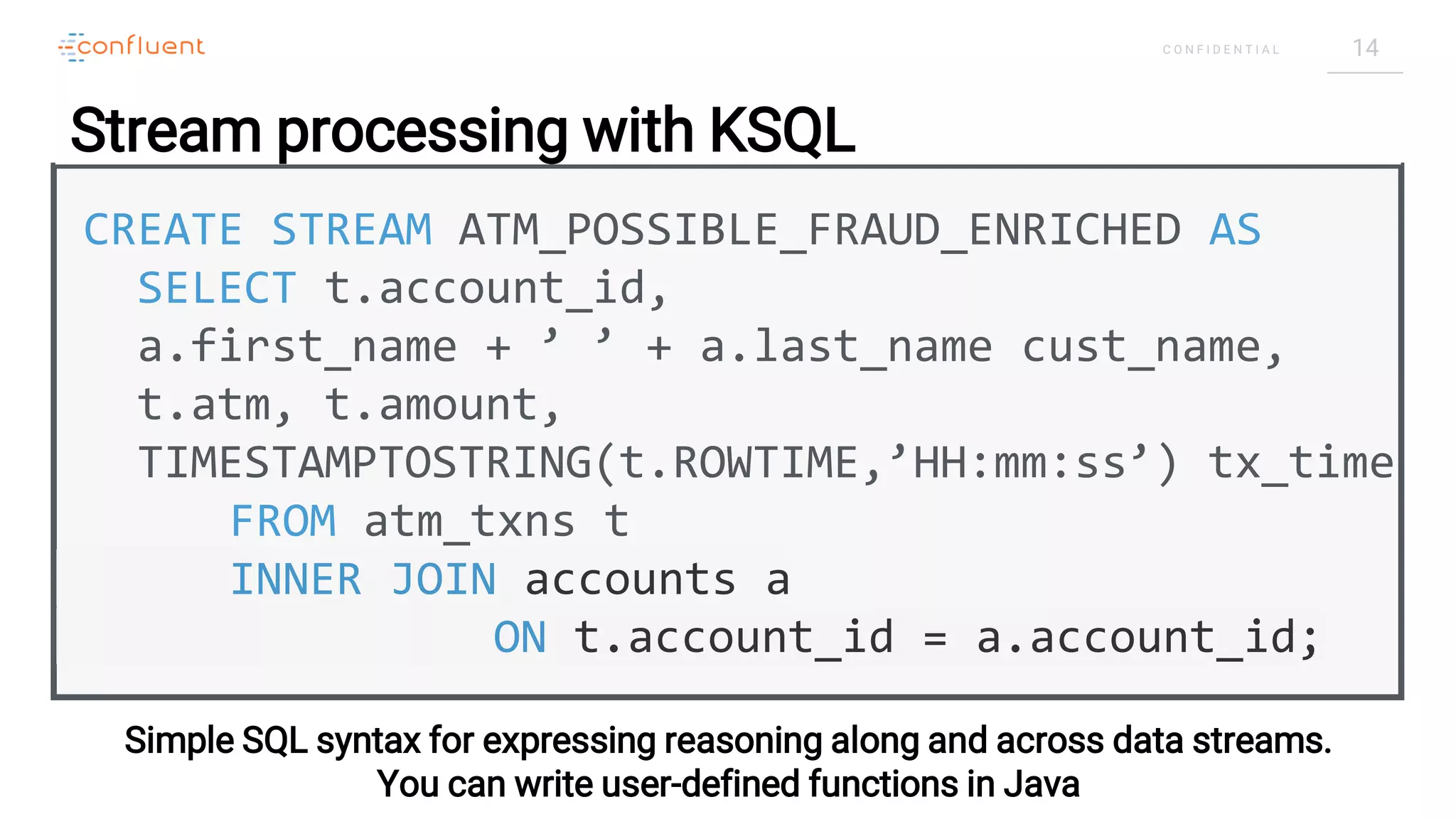

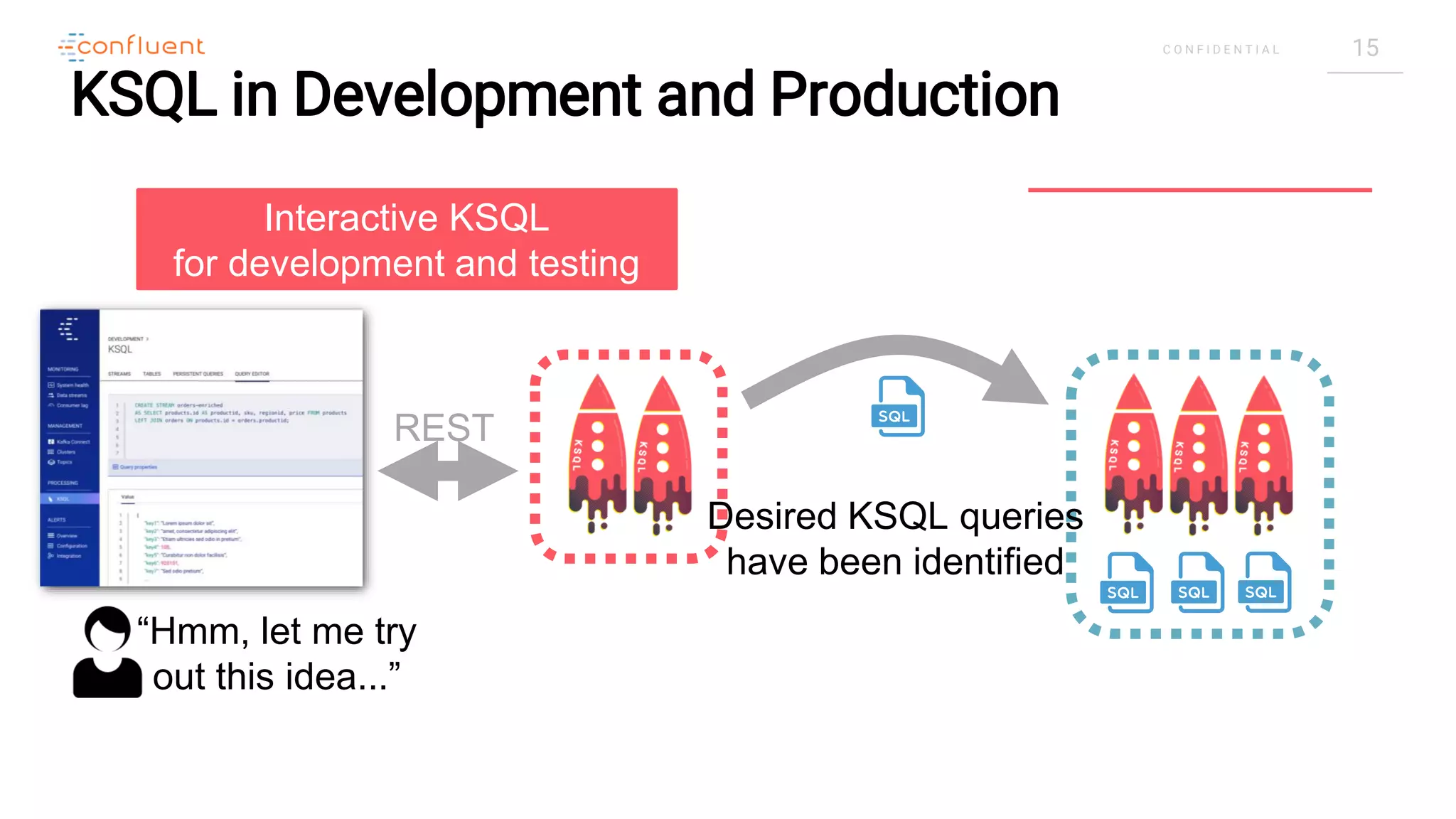

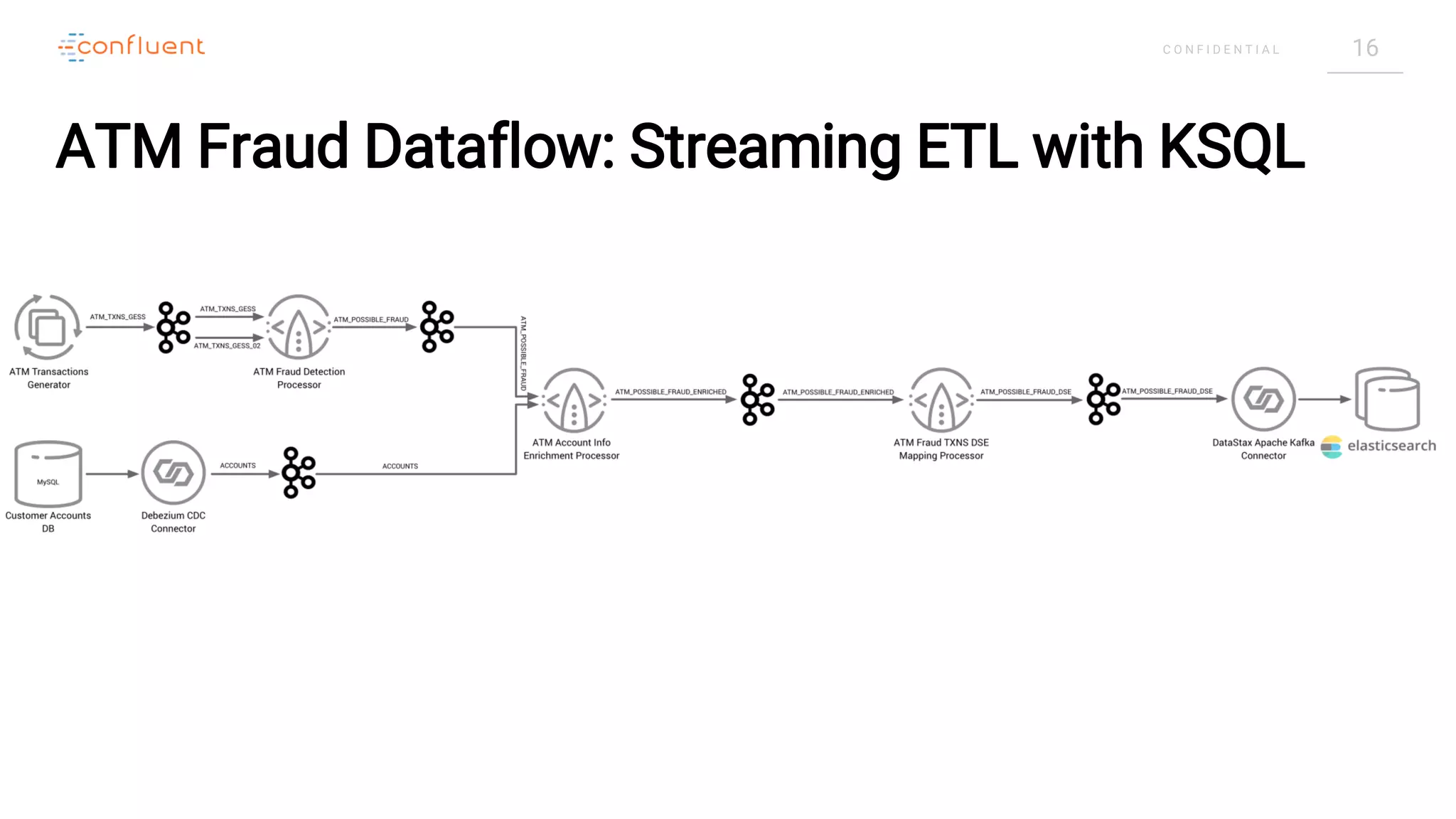

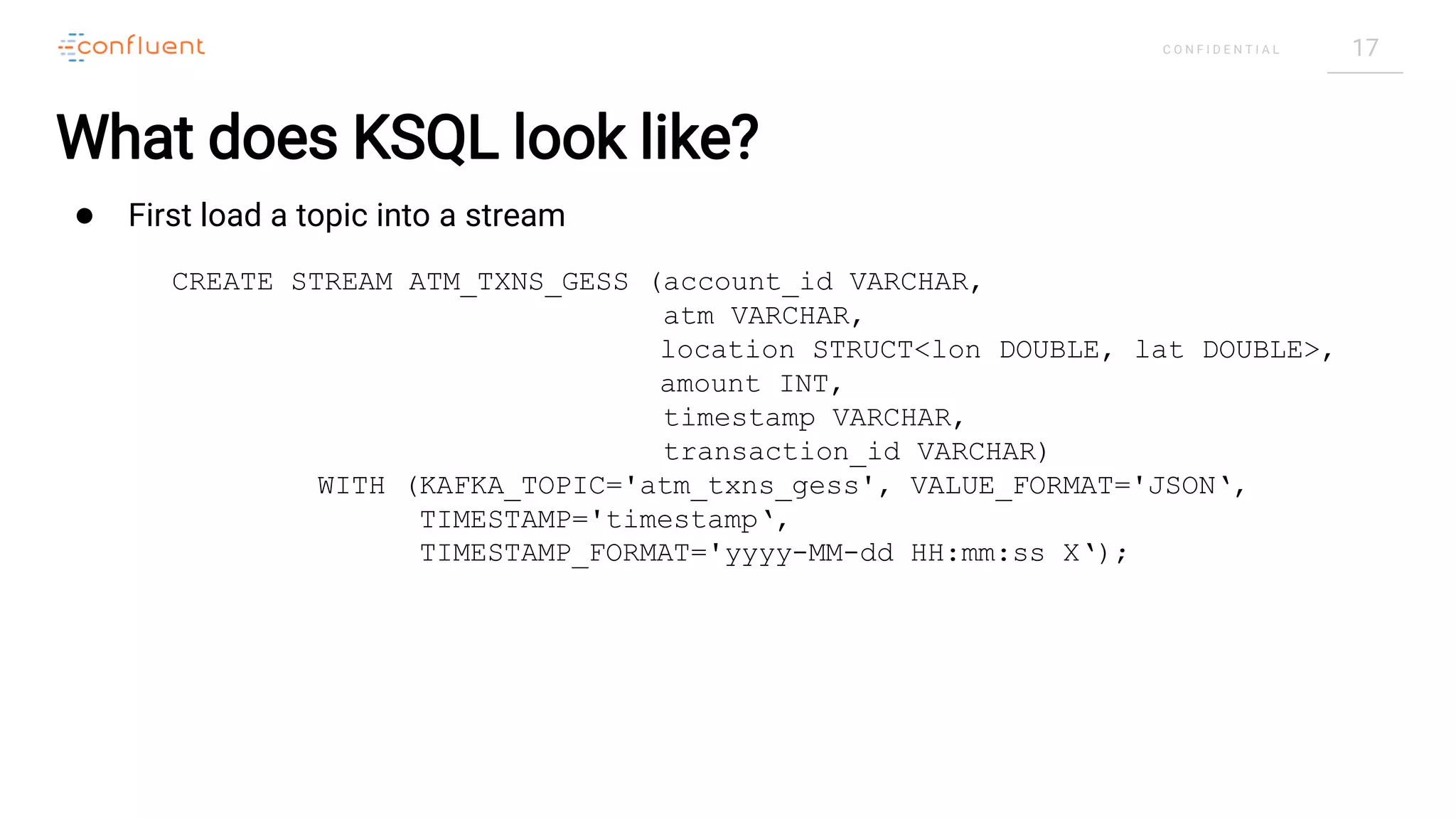

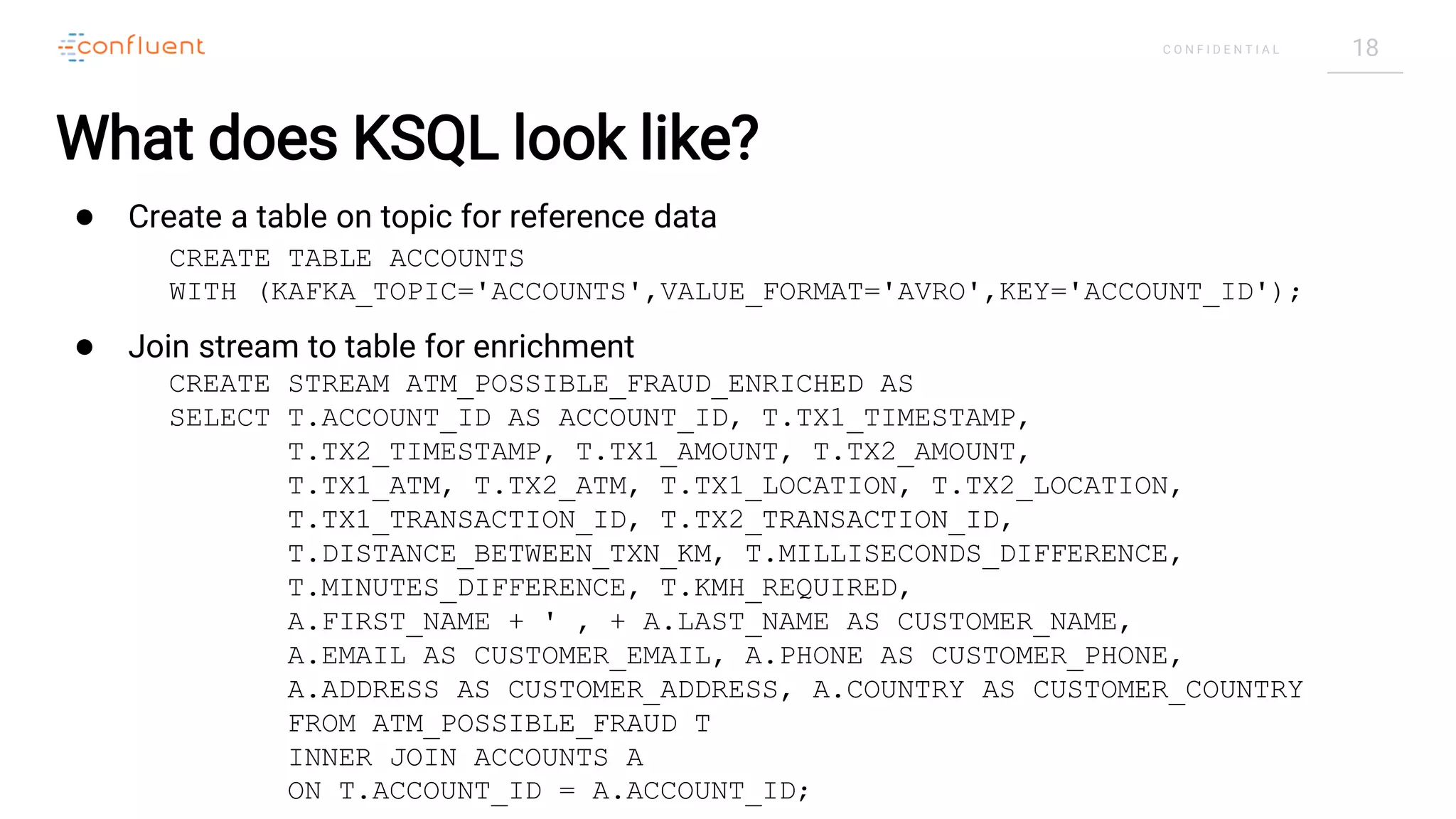

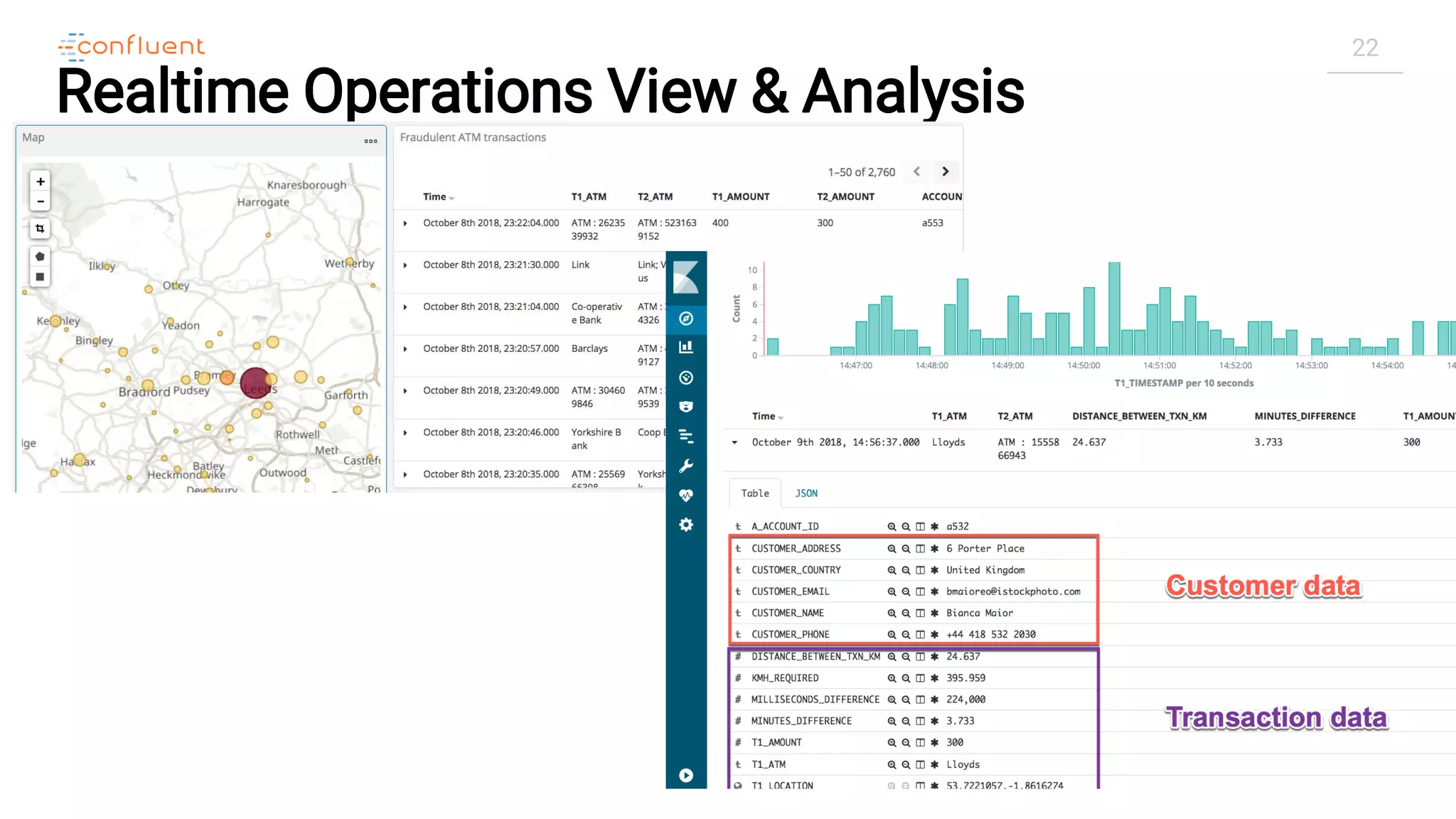

The document discusses Apache Kafka, a leading open-source event streaming platform for real-time data processing and ETL/data integration. It highlights Kafka's scalability, durability, and persistence, and its use at companies such as LinkedIn and Netflix to handle massive message volumes. It also introduces KSQL, a streaming SQL engine for Kafka that simplifies creating and managing streams for applications such as ATM fraud detection.
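ATM fraud detection on a stream is commonly framed as a windowed stream-stream self-join: each new withdrawal is compared with recent withdrawals on the same account. The sketch below illustrates that idea in plain Python. It is not KSQL and does not use a Kafka client. The fraud rule (two withdrawals on the same account at different ATMs within 10 minutes) and the names `AtmTxn`, `account_id`, `atm`, `ts`, and `suspicious_pairs` are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class AtmTxn:
    # Hypothetical event shape; a real topic's schema may differ.
    txn_id: str
    account_id: str
    atm: str
    ts: datetime


def suspicious_pairs(txns, window=timedelta(minutes=10)):
    """Return (earlier_id, later_id) pairs for withdrawals on the same
    account at different ATMs within `window` (an assumed fraud rule).

    Mimics a windowed self-join: per-account state holds recent events,
    and each new event is joined against that state.
    """
    recent = {}  # account_id -> events still inside the join window
    alerts = []
    for txn in sorted(txns, key=lambda t: t.ts):  # process in event-time order
        buf = recent.setdefault(txn.account_id, [])
        # Evict events that have fallen out of the window.
        buf[:] = [t for t in buf if txn.ts - t.ts <= window]
        for earlier in buf:
            if earlier.atm != txn.atm:
                alerts.append((earlier.txn_id, txn.txn_id))
        buf.append(txn)
    return alerts


if __name__ == "__main__":
    t0 = datetime(2024, 1, 1, 12, 0)
    txns = [
        AtmTxn("a1", "acct-1", "ATM-London", t0),
        AtmTxn("a2", "acct-1", "ATM-Paris", t0 + timedelta(minutes=5)),
        AtmTxn("b1", "acct-2", "ATM-Leeds", t0),
        AtmTxn("b2", "acct-2", "ATM-Leeds", t0 + timedelta(minutes=3)),
        AtmTxn("a3", "acct-1", "ATM-Rome", t0 + timedelta(minutes=30)),
    ]
    print(suspicious_pairs(txns))  # [('a1', 'a2')]
```

Only `a1`/`a2` is flagged. `b1`/`b2` share an ATM, and `a3` arrives after the earlier `acct-1` events have left the 10-minute window. A streaming engine would keep this per-key window state durably and process events as they arrive, rather than sorting a finite list.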