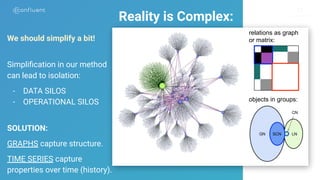

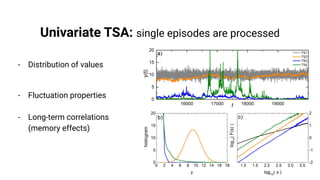

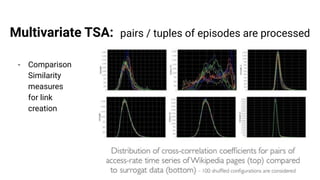

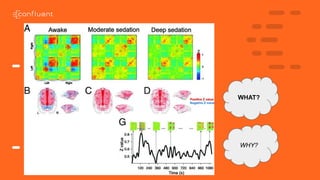

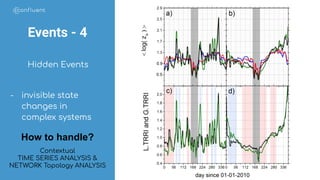

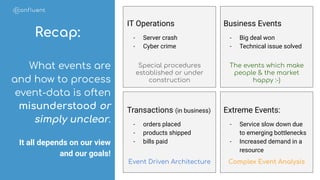

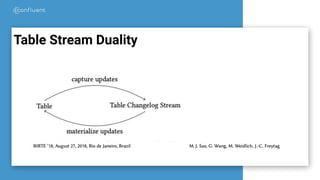

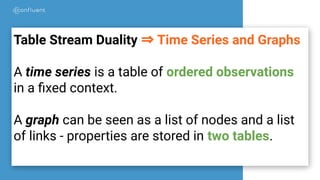

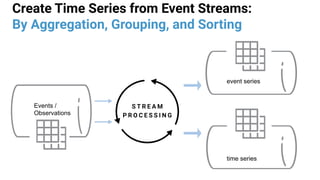

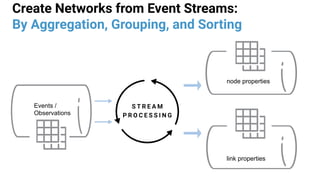

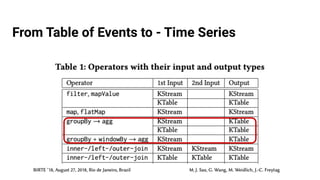

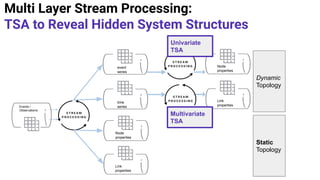

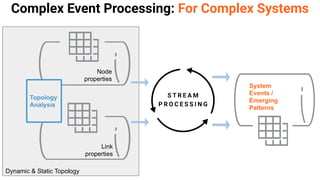

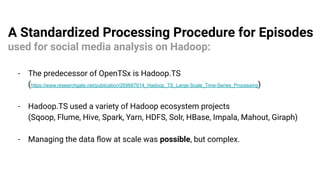

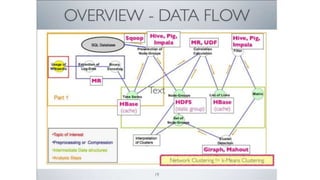

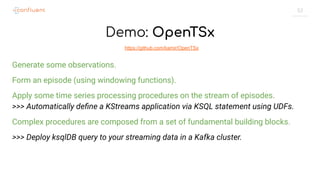

(1) The document discusses using an event streaming platform such as Apache Kafka for advanced time series analysis (TSA). It describes typical processing patterns for converting raw data into time series and for reconstructing graphs and networks from time series data.
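As a rough illustration of the first pattern, turning raw events into time series, the sketch below groups timestamped events into fixed-size windows per key. It is plain in-memory Python rather than Kafka client or Kafka Streams code, and the function name `events_to_series`, the `(timestamp, key, value)` event shape, and the choice of a windowed sum are assumptions made for the example, not details taken from the document.

```python
from collections import defaultdict


def events_to_series(events, window_s):
    """Group (timestamp, key, value) events into per-key windowed sums.

    Hypothetical helper: the event shape and the sum aggregation are
    assumptions for illustration only.
    """
    buckets = defaultdict(lambda: defaultdict(float))
    for ts, key, value in events:
        # Align each event to the start of its fixed-size window.
        window_start = int(ts // window_s) * window_s
        buckets[key][window_start] += value
    # Emit each key's series ordered by window start.
    return {key: sorted(windows.items()) for key, windows in buckets.items()}


# Made-up sample events: (timestamp in seconds, key, value).
events = [
    (0.5, "sensor-a", 1.0),
    (1.2, "sensor-a", 2.0),
    (10.1, "sensor-a", 4.0),
    (3.0, "sensor-b", 5.0),
]
series = events_to_series(events, window_s=10)
print(series)
```

In a streaming setting the same grouping would typically run continuously over an unbounded topic instead of a finite list, which is where windowing semantics and late-arriving events start to matter.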

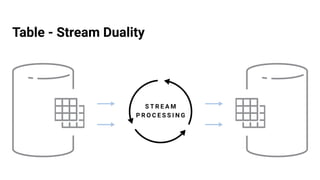

(2) One challenge the document discusses is integrating data streams, experiments, and decision making. It argues that stream processing with Kafka is better suited than batch processing to real-time applications and iterative research projects.

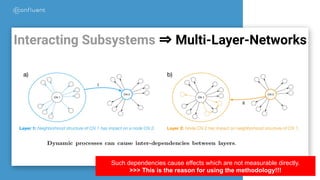

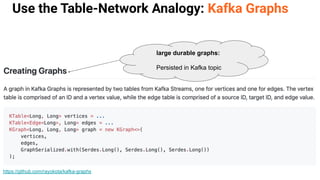

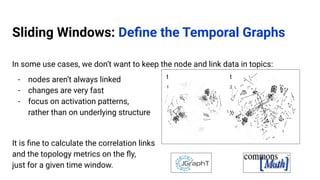

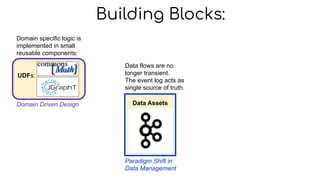

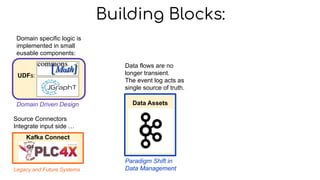

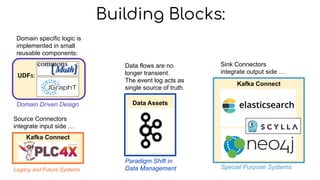

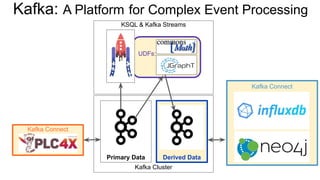

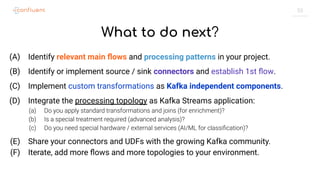

(3) The document then covers approaches to TSA and network analysis with Kafka: creating time series from event streams, creating graphs from pairs of time series, and building architectures for complex stream processing out of reusable building blocks.
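One way to picture the step from pairs of time series to a graph is a thresholded similarity network: compute a similarity score for every pair of series and keep an edge when the score is strong enough. The sketch below uses Pearson correlation as that score, which is an assumption here since the summary does not say which pairwise measure the document uses. The names `pearson` and `correlation_graph`, the threshold, and the sample data are likewise hypothetical.

```python
from itertools import combinations
from math import sqrt


def pearson(x, y):
    """Pearson correlation of two equal-length sequences (0.0 if either is constant)."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    norm_x = sqrt(sum((a - mean_x) ** 2 for a in x))
    norm_y = sqrt(sum((b - mean_y) ** 2 for b in y))
    if norm_x == 0 or norm_y == 0:
        return 0.0
    return cov / (norm_x * norm_y)


def correlation_graph(series, threshold):
    """Return edges (u, v, r) for every pair with |r| >= threshold.

    Assumption: correlation stands in for whatever pairwise measure
    the original document applies.
    """
    edges = []
    for (u, x), (v, y) in combinations(sorted(series.items()), 2):
        r = pearson(x, y)
        if abs(r) >= threshold:
            edges.append((u, v, r))
    return edges


# Made-up series: "a" and "b" move together, "c" oscillates independently.
series = {
    "a": [1, 2, 3, 4],
    "b": [2, 4, 6, 8],
    "c": [1, -1, 1, -1],
}
edges = correlation_graph(series, threshold=0.9)
print(edges)
```

With this sample data only the pair `a` and `b` clears the threshold. Comparing every pair grows quadratically with the number of series, so a streaming version would likely restrict or partition the pairs it evaluates.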