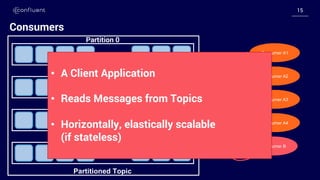

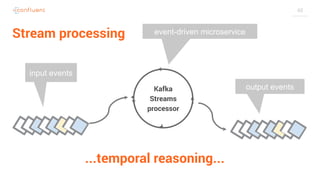

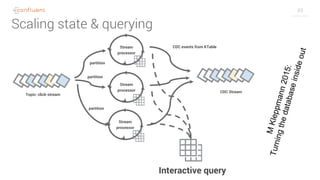

The document covers concepts and patterns for building streaming services with Apache Kafka, presented as an event-driven streaming platform. Topics include Kafka's architecture, the role of partitions and replicas, how Kafka fits into microservices and event-driven architectures, and real-time data processing with KSQL and Kafka Streams. It also points readers to Confluent's community Slack channel and to promotional offers for trying Kafka.

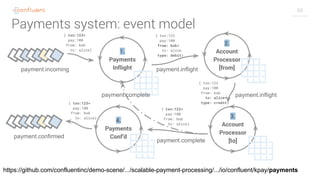

![6161

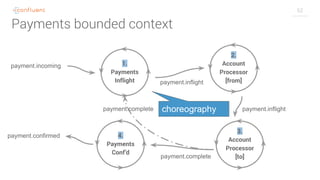

Bounded context

“Payments”

1. Payments inflight

2. Account processing [debit/credit]

3. Payments confirmed](https://image.slidesharecdn.com/concepts-and-patterns-for-streaming-services-with-apache-kafka-200323080016/85/Concepts-and-Patterns-for-Streaming-Services-with-Kafka-61-320.jpg)
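The three stages of the “Payments” bounded context can be read as a small state machine. The following is a minimal Java sketch, assuming a payment moves through the state names that `AccountProcessor` uses (`debit`, `credit`, `complete`), with debit and credit forming the account-processing stage and `complete` corresponding to a confirmed payment. The `PaymentLifecycle` class and its `next` and `walk` methods are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical sketch of the payment lifecycle in the "Payments" bounded context.
// Enum constants are lowercase to mirror Payment.State.debit/credit/complete from AccountProcessor.
public class PaymentLifecycle {

    enum State { debit, credit, complete }

    // Advance a payment one step: debit -> credit -> complete; complete is terminal.
    static State next(State s) {
        switch (s) {
            case debit:
                return State.credit;
            case credit:
                return State.complete;
            default:
                return State.complete;
        }
    }

    // Walk a payment from its starting state until it is confirmed, recording each state.
    static List<State> walk(State start) {
        List<State> path = new ArrayList<>();
        State s = start;
        path.add(s);
        while (s != State.complete) {
            s = next(s);
            path.add(s);
        }
        return path;
    }

    public static void main(String[] args) {
        System.out.println(walk(State.debit)); // [debit, credit, complete]
    }
}
```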

Payments system: bounded context

[1] How much is being processed? Expressed as:

- Count of payments inflight
- Total $ value processed

[2&3] Update the account balance. Expressed as:

- Debit
- Credit

[4] Confirm successful payment. Expressed as:

- Total volume today
- Total $ amount today

![Slide 63](https://image.slidesharecdn.com/concepts-and-patterns-for-streaming-services-with-apache-kafka-200323080016/85/Concepts-and-Patterns-for-Streaming-Services-with-Kafka-63-320.jpg)
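The metrics for stages [1] and [4] are plain aggregations over payment events. This hypothetical sketch computes them over an in-memory list rather than a Kafka topic; the `Event` record, the `INFLIGHT`/`CONFIRMED` stages, and treating "inflight" as "entered but not yet confirmed" are assumptions made for illustration. In Kafka Streams, figures like these would typically be built as stateful aggregations (such as `count()` or `aggregate()` on a grouped stream), with windowing for the per-day totals.

```java
import java.math.BigDecimal;
import java.time.LocalDate;
import java.util.HashSet;
import java.util.List;
import java.util.Set;

// Hypothetical sketch: bounded-context metrics from slide 63, computed over in-memory events.
public class PaymentMetrics {

    enum Stage { INFLIGHT, CONFIRMED }

    // Assumed event shape: one event when a payment enters, one when it is confirmed.
    record Event(String paymentId, Stage stage, BigDecimal amount, LocalDate date) {}

    // [1] Count of payments that have entered the system but are not yet confirmed.
    static long inflightCount(List<Event> events) {
        Set<String> open = new HashSet<>();
        for (Event e : events) {
            if (e.stage() == Stage.INFLIGHT) {
                open.add(e.paymentId());
            } else {
                open.remove(e.paymentId());
            }
        }
        return open.size();
    }

    // [1] Total $ value of all payments that entered the system.
    static BigDecimal totalProcessed(List<Event> events) {
        return events.stream()
            .filter(e -> e.stage() == Stage.INFLIGHT)
            .map(Event::amount)
            .reduce(BigDecimal.ZERO, BigDecimal::add);
    }

    // [4] Number of payments confirmed on the given day.
    static long confirmedVolume(List<Event> events, LocalDate day) {
        return events.stream()
            .filter(e -> e.stage() == Stage.CONFIRMED && e.date().equals(day))
            .count();
    }

    // [4] Total $ amount confirmed on the given day.
    static BigDecimal confirmedAmount(List<Event> events, LocalDate day) {
        return events.stream()
            .filter(e -> e.stage() == Stage.CONFIRMED && e.date().equals(day))
            .map(Event::amount)
            .reduce(BigDecimal.ZERO, BigDecimal::add);
    }

    public static void main(String[] args) {
        LocalDate today = LocalDate.of(2020, 3, 23);
        List<Event> events = List.of(
            new Event("p1", Stage.INFLIGHT, new BigDecimal("100.00"), today),
            new Event("p2", Stage.INFLIGHT, new BigDecimal("50.00"), today),
            new Event("p1", Stage.CONFIRMED, new BigDecimal("100.00"), today));
        System.out.println(inflightCount(events));          // 1
        System.out.println(totalProcessed(events));         // 150.00
        System.out.println(confirmedVolume(events, today)); // 1
        System.out.println(confirmedAmount(events, today)); // 100.00
    }
}
```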

![64

Payments system: AccountProcessor

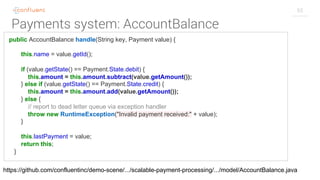

accountBalanceKTable = inflight.groupByKey()

.aggregate(

AccountBalance::new,

(key, value, aggregate) -> aggregate.handle(key, value), accountStore);

KStream<String, Payment>[] branch = inflight

.map((KeyValueMapper<String, Payment, KeyValue<String, Payment>>) (key, value) -> {

if (value.getState() == Payment.State.debit) {

value.setStateAndId(Payment.State.credit);

} else if (value.getState() == Payment.State.credit) {

value.setStateAndId(Payment.State.complete);

}

return new KeyValue<>(value.getId(), value);

})

.branch(isCreditRecord, isCompleteRecord);

branch[0].to(paymentsInflightTopic);

branch[1].to(paymentsCompleteTopic);

https://github.com/confluentinc/demo-scene/blob/master/scalable-payment-processing/.../AccountProcessor.java

KTable state

(Kafka Streams)](https://image.slidesharecdn.com/concepts-and-patterns-for-streaming-services-with-apache-kafka-200323080016/85/Concepts-and-Patterns-for-Streaming-Services-with-Kafka-64-320.jpg)
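To see the per-record logic of `AccountProcessor` without a Kafka cluster, here is a plain-Java simulation of one debit/credit round trip. It is a sketch, not the demo's implementation: the balance rule (a debit subtracts the amount from the keyed account, a credit adds it), the `Payment` record fields, and the hypothetical `accountKey()` re-keying rule stand in for `AccountBalance.handle` and `setStateAndId`, whose actual behavior is not shown on the slide. A `HashMap` plays the role of `accountBalanceKTable`, and two lists stand in for the inflight and complete topics.

```java
import java.math.BigDecimal;
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Plain-Java simulation of AccountProcessor's per-record steps (no Kafka dependency).
// Hypothetical: Payment fields, accountKey() and the debit/credit balance rule are
// assumptions standing in for Payment.setStateAndId and AccountBalance.handle.
public class AccountProcessorSketch {

    enum State { debit, credit, complete }

    record Payment(String txnId, String from, String to, BigDecimal amount, State state) {
        // Assumed re-keying rule: a debit is keyed by the payer, a credit by the payee.
        String accountKey() {
            return state == State.debit ? from : to;
        }
    }

    // Stands in for accountBalanceKTable: account -> running balance.
    final Map<String, BigDecimal> balances = new HashMap<>();
    // Stand-ins for paymentsInflightTopic and paymentsCompleteTopic.
    final List<Payment> inflightOut = new ArrayList<>();
    final List<Payment> completeOut = new ArrayList<>();

    // Processes one record from the inflight stream; assumes its state is debit or credit.
    void process(Payment p) {
        // Aggregate step: a debit subtracts from, a credit adds to, the keyed account's balance.
        BigDecimal delta = p.state() == State.debit ? p.amount().negate() : p.amount();
        balances.merge(p.accountKey(), delta, BigDecimal::add);

        // Map step: debit -> credit, credit -> complete.
        State next = p.state() == State.debit ? State.credit : State.complete;
        Payment advanced = new Payment(p.txnId(), p.from(), p.to(), p.amount(), next);

        // Branch step: credit records go back to inflight; completed ones go to complete.
        if (next == State.credit) {
            inflightOut.add(advanced);
        } else {
            completeOut.add(advanced);
        }
    }

    public static void main(String[] args) {
        AccountProcessorSketch proc = new AccountProcessorSketch();
        proc.process(new Payment("tx1", "alice", "bob", new BigDecimal("25.00"), State.debit));
        // The debit was re-emitted as a credit; feed it back in as the inflight topic would.
        proc.process(proc.inflightOut.remove(0));
        System.out.println(proc.balances.get("alice")); // -25.00
        System.out.println(proc.balances.get("bob"));   // 25.00
        System.out.println(proc.completeOut.size());    // 1
    }
}
```

Routing the credit back through the inflight path means each balance update is applied under the key of the account it touches. In Kafka, records with the same key land in the same partition, which is the usual motivation for this kind of re-keying.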