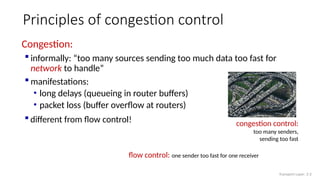

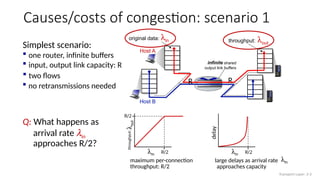

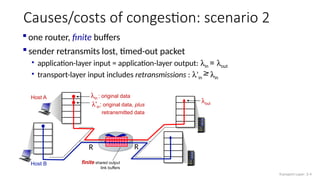

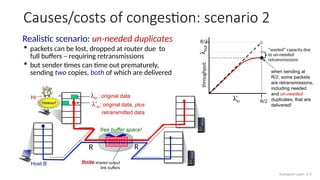

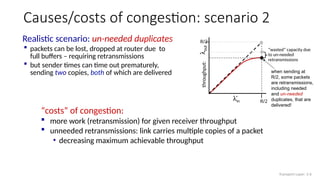

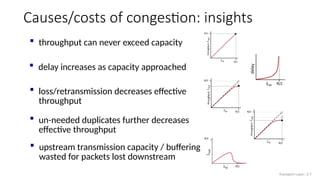

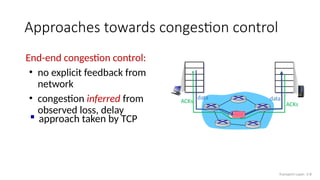

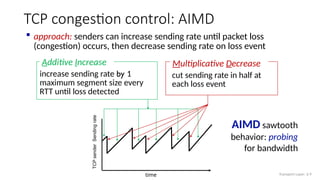

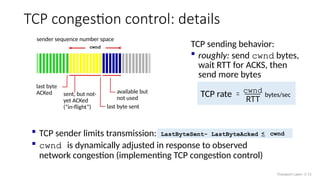

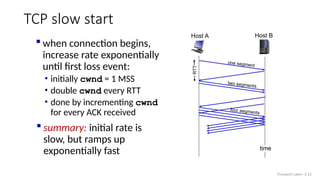

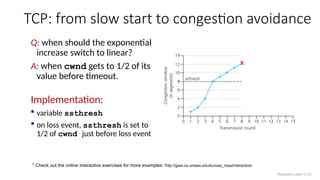

The document discusses TCP congestion control: how it differs from flow control, what causes congestion, and the costs of packet loss and retransmission. It explains the Additive Increase Multiplicative Decrease (AIMD) approach, in which TCP adjusts its sending rate in response to observed network conditions such as delay and packet loss. It also covers the transition from slow start to congestion avoidance and how TCP behaves under different network loads.
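The AIMD behavior and the slow start to congestion avoidance transition summarized above can be illustrated with a small simulation. This is a minimal sketch under simplifying assumptions, not a faithful TCP implementation: the window is counted in whole segments, one loop iteration stands for one round-trip time, every loss is treated like a fast-retransmit event (the window is halved rather than reset to one segment, as a timeout would do), and the names `simulate_cwnd`, `loss_rounds`, and `ssthresh` are hypothetical, chosen for this example.

```python
def simulate_cwnd(rounds, loss_rounds, ssthresh=16.0, initial_cwnd=1.0):
    """Return the congestion window (in segments) at the start of each round.

    rounds       -- number of round-trip times to simulate
    loss_rounds  -- set of round indices in which a loss is detected (assumed input)
    ssthresh     -- initial slow start threshold, in segments
    initial_cwnd -- starting congestion window, in segments
    """
    cwnd = initial_cwnd
    history = []
    for rnd in range(rounds):
        history.append(cwnd)
        if rnd in loss_rounds:
            # Multiplicative decrease: halve the window on loss.
            # The floor of 1 segment is a simplification for this sketch.
            ssthresh = max(cwnd / 2.0, 1.0)
            cwnd = ssthresh
        elif cwnd < ssthresh:
            # Slow start: the window doubles each round trip,
            # capped at ssthresh so the transition is explicit.
            cwnd = min(cwnd * 2.0, ssthresh)
        else:
            # Congestion avoidance (additive increase): one segment per round trip.
            cwnd += 1.0
    return history


if __name__ == "__main__":
    # One loss in round 7, with a small threshold so both phases are visible.
    print(simulate_cwnd(10, {7}, ssthresh=8.0))
```

With these inputs the window grows exponentially until it reaches the threshold, then linearly, and is cut in half at the loss before resuming linear growth, which is the sawtooth pattern usually associated with AIMD.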