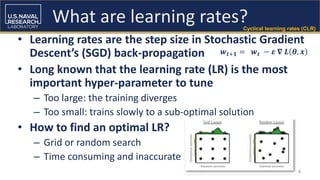

- Leslie Smith discusses research into choosing learning rates for training neural networks. Smith developed cyclical learning rates (CLR), which move the learning rate back and forth between a minimum and a maximum value during training instead of keeping it fixed or only decaying it. Because the schedule regularly reaches large learning rates, networks can train in fewer iterations.
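A minimal sketch of the "triangular" cyclical policy may make the idea concrete. It assumes the learning rate rises linearly from `base_lr` to `max_lr` over `step_size` iterations and then falls linearly back over the next `step_size`, repeating indefinitely. The function name and the example values are illustrative, not taken from the talk.

```python
import math


def triangular_lr(iteration: int, base_lr: float, max_lr: float, step_size: int) -> float:
    """Triangular cyclical learning rate (illustrative sketch).

    One full cycle spans 2 * step_size iterations: a linear ramp from
    base_lr up to max_lr, then a linear ramp back down to base_lr.
    """
    # Which cycle we are in (1-based).
    cycle = math.floor(1 + iteration / (2 * step_size))
    # Distance from the peak of the current cycle: 0 at the peak, 1 at either end.
    x = abs(iteration / step_size - 2 * cycle + 1)
    return base_lr + (max_lr - base_lr) * max(0.0, 1 - x)


if __name__ == "__main__":
    # Hypothetical values: bounds 0.001..0.006, half-cycle of 4 iterations.
    for it in range(9):
        print(it, round(triangular_lr(it, 0.001, 0.006, 4), 6))
```

The printed rates climb to 0.006 at iteration 4 and return to 0.001 at iteration 8. Frameworks ship ready-made versions, for example PyTorch's `torch.optim.lr_scheduler.CyclicLR` with `mode="triangular"`.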

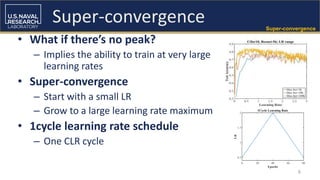

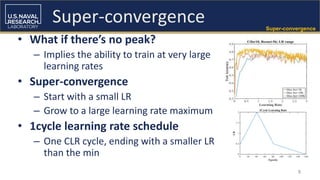

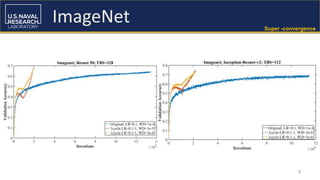

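- Smith describes "super-convergence", a phenomenon in which networks trained with very large learning rates reach good accuracy in far fewer iterations than usual. To exploit it, Smith developed the "1cycle" learning rate schedule: a single cycle in which the learning rate rises from a small value to a large maximum and then falls back, finishing with a short phase at an even smaller learning rate.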
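The 1cycle shape can be sketched as a piecewise-linear function of the training step. This is a simplified illustration under stated assumptions: the cycle occupies the first 90% of training (`cycle_frac`), the starting rate is `max_lr / div`, and the final phase decays by a further factor of `final_div`. These parameter names and defaults are hypothetical choices for the example, not values from the talk.

```python
def one_cycle_lr(step: int, total_steps: int, max_lr: float,
                 div: float = 10.0, final_div: float = 100.0,
                 cycle_frac: float = 0.9) -> float:
    """Piecewise-linear 1cycle-style schedule (illustrative sketch).

    Phase 1: ramp from max_lr / div up to max_lr.
    Phase 2: ramp back down to max_lr / div (same length as phase 1).
    Phase 3: for the remaining steps, decay further to max_lr / (div * final_div).
    """
    if not 0 <= step < total_steps:
        raise ValueError("step must be in [0, total_steps)")
    low = max_lr / div
    cycle_steps = int(total_steps * cycle_frac)
    half = cycle_steps // 2
    if half == 0:
        raise ValueError("total_steps too small for a cycle")
    if step < half:
        # Phase 1: warm up toward the large maximum learning rate.
        return low + (max_lr - low) * step / half
    if step < cycle_steps:
        # Phase 2: come back down to the starting learning rate.
        return max_lr - (max_lr - low) * (step - half) / (cycle_steps - half)
    # Phase 3: "annihilation" phase, ending at low / final_div on the last step.
    tail = total_steps - cycle_steps
    end = low / final_div
    frac = (step - cycle_steps) / max(1, tail - 1)
    return low - (low - end) * frac


if __name__ == "__main__":
    # Hypothetical run: 100 steps with a peak learning rate of 1.0.
    for s in (0, 45, 89, 90, 99):
        print(s, round(one_cycle_lr(s, 100, 1.0), 4))
```

Smith's 1cycle work also cycles momentum inversely to the learning rate; this sketch leaves momentum out. For real training, PyTorch provides `torch.optim.lr_scheduler.OneCycleLR`, whose default annealing is cosine rather than linear.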

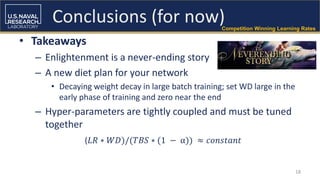

- Smith's learning rate techniques helped teams win competitions such as DAWNBench and Kaggle challenges by letting them train models quickly. Smith's research also showed that tuning weight decay matters, and that decaying it over time can improve large