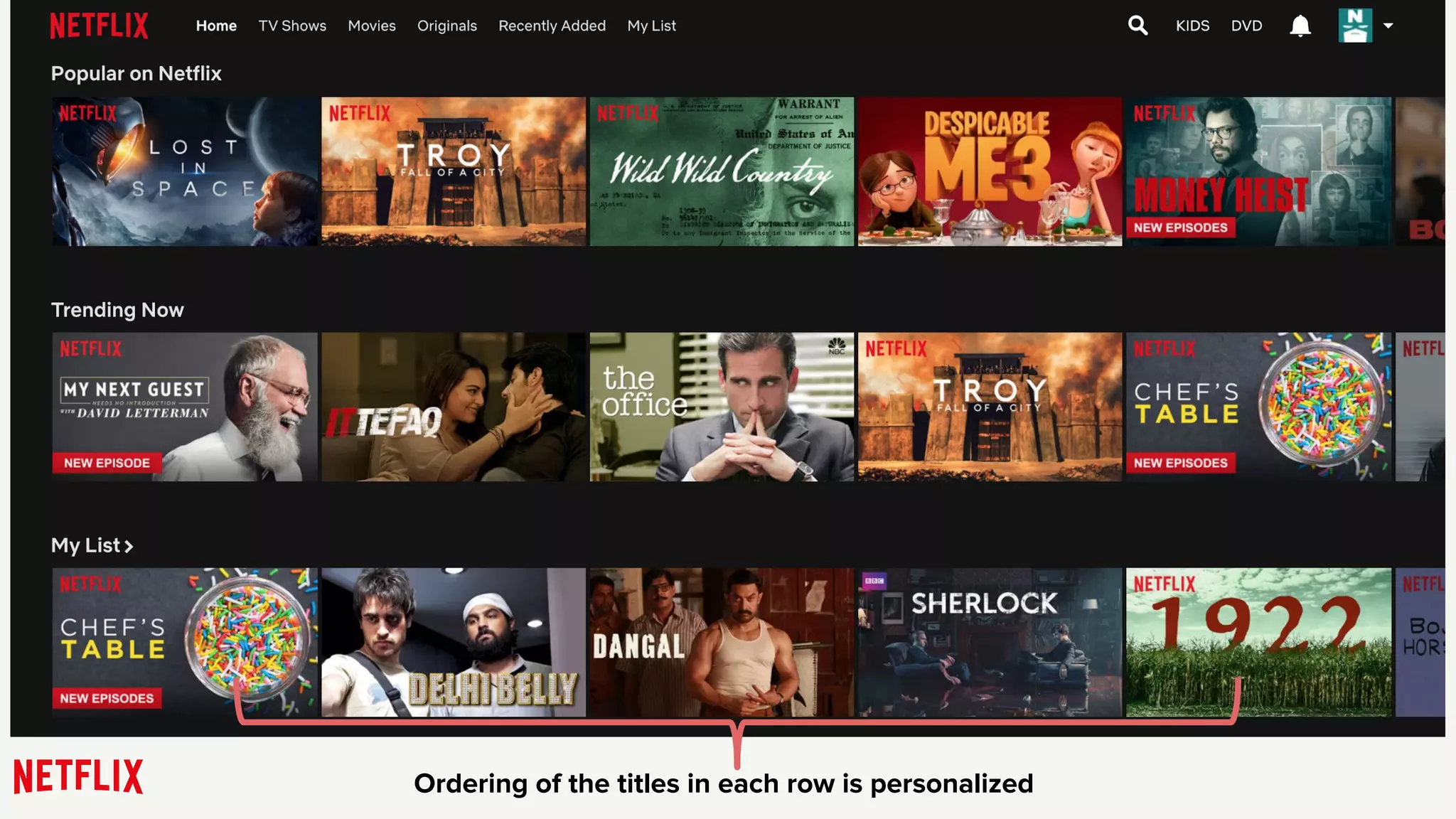

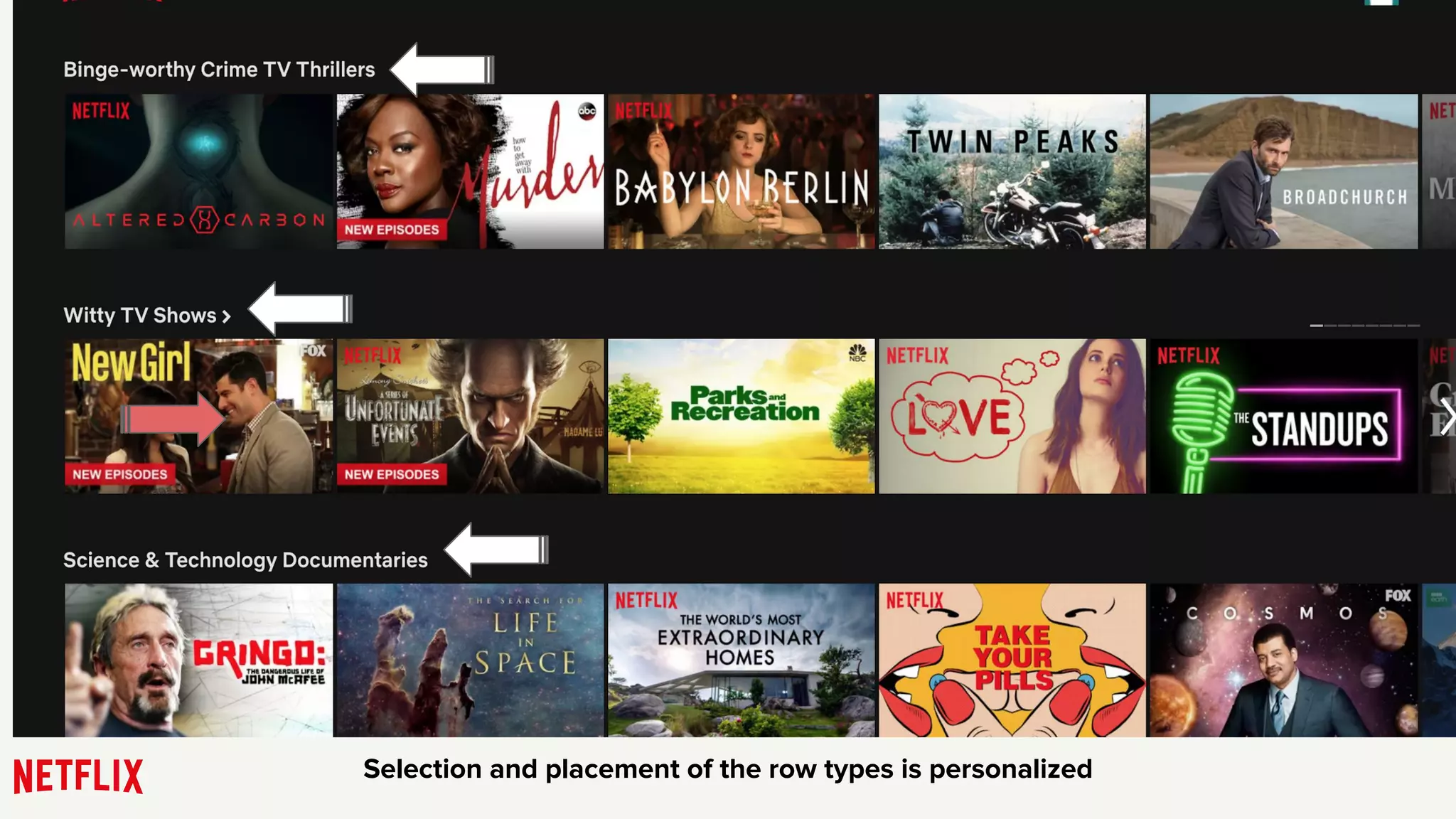

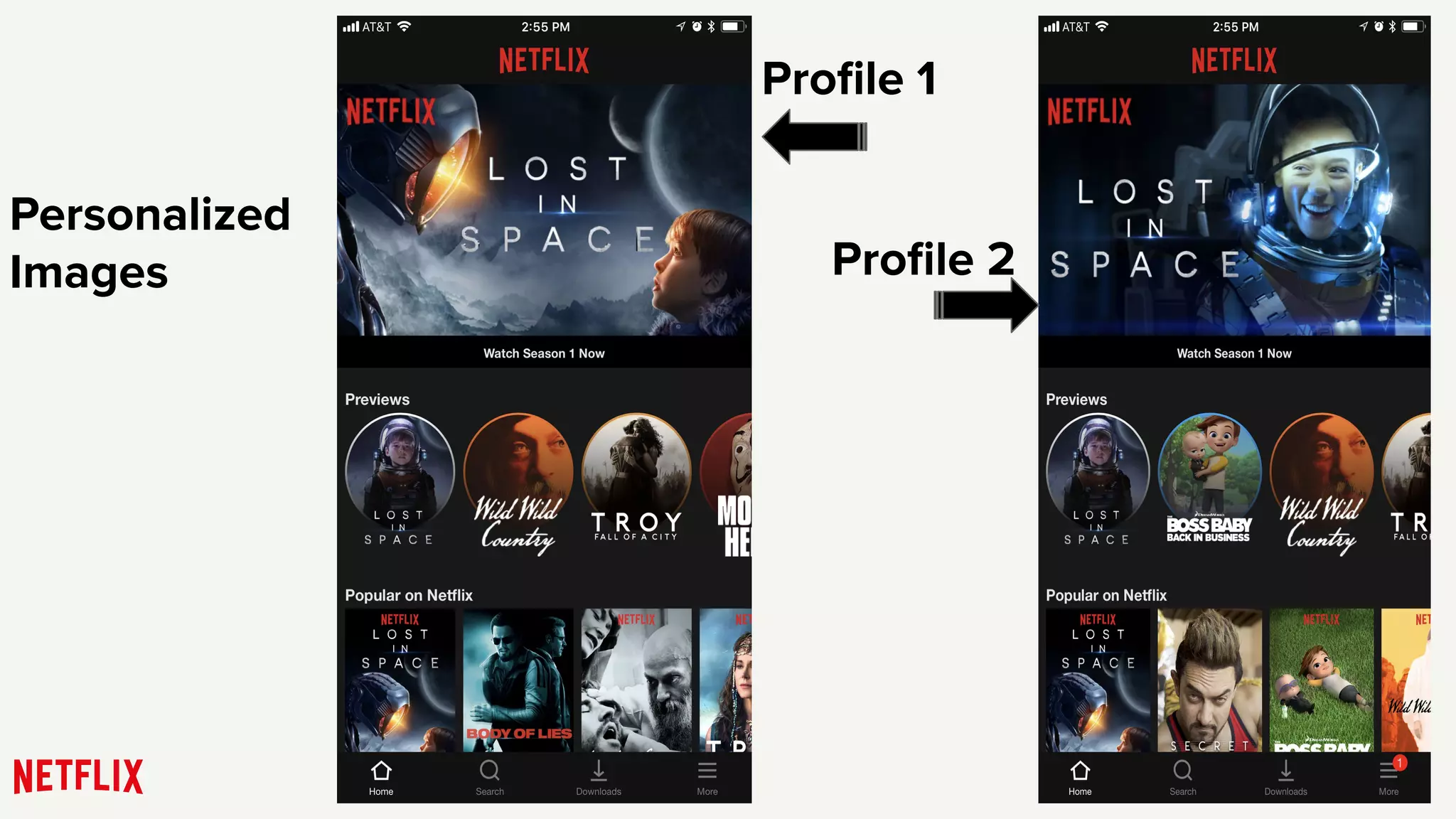

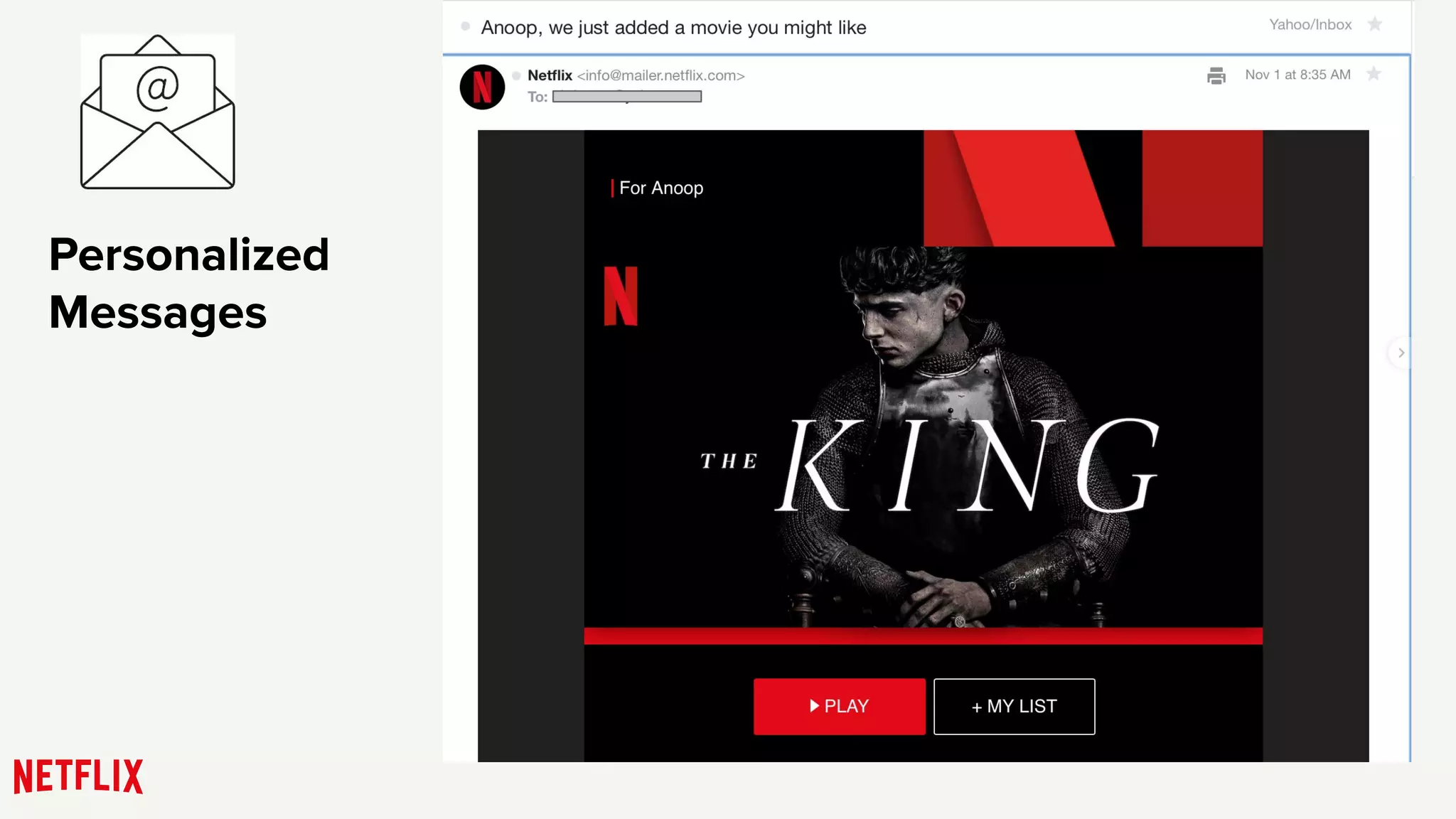

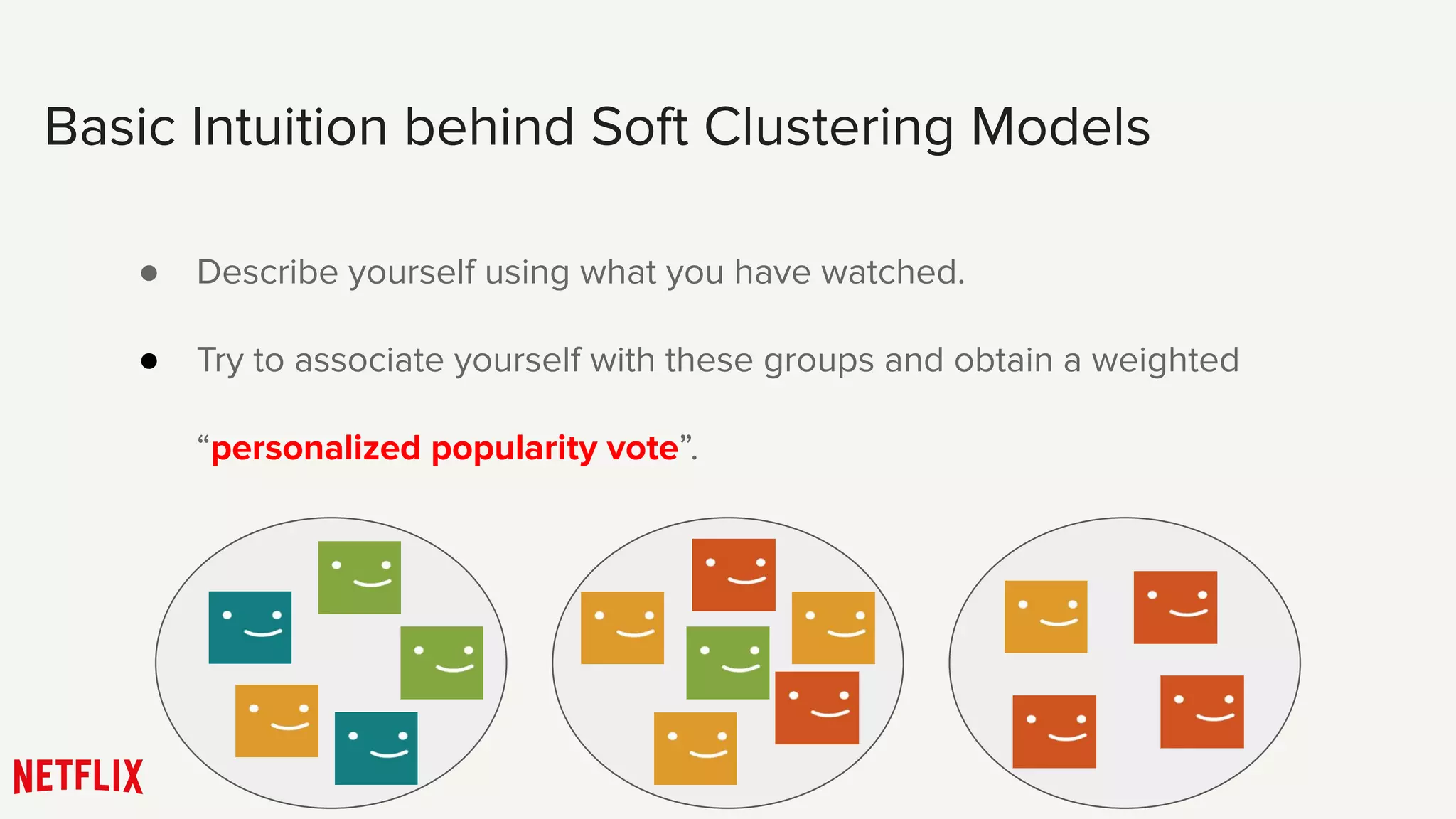

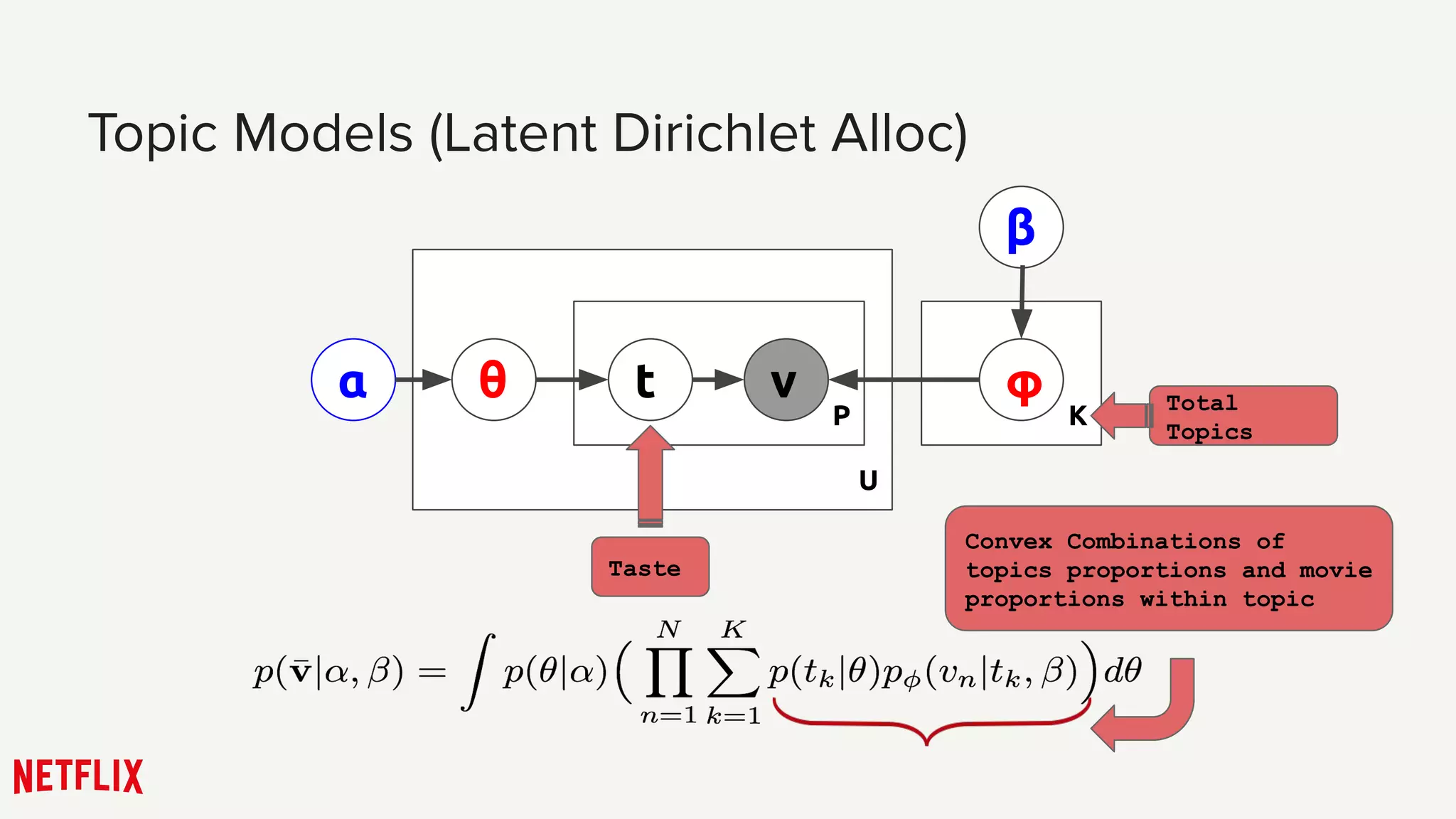

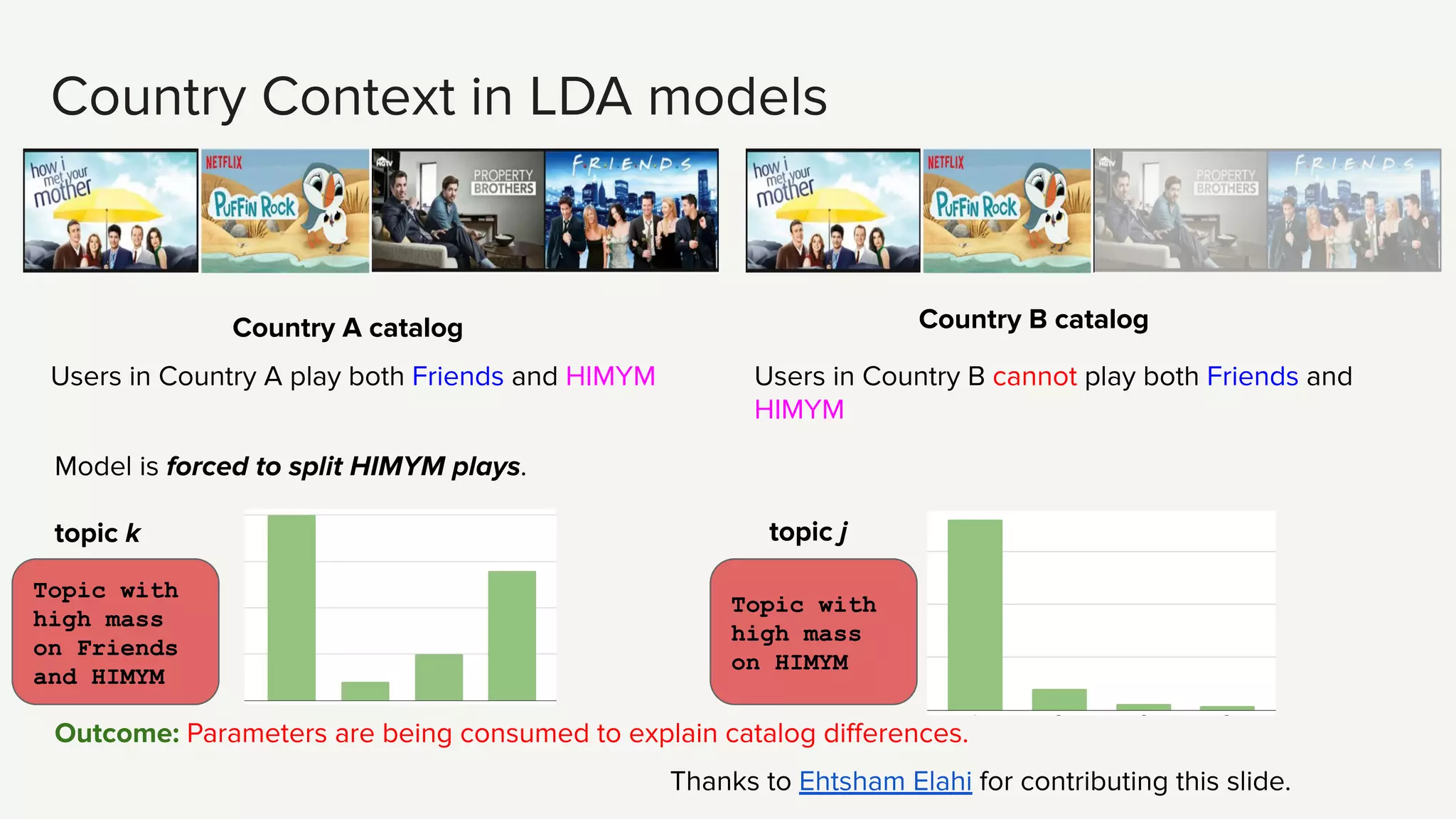

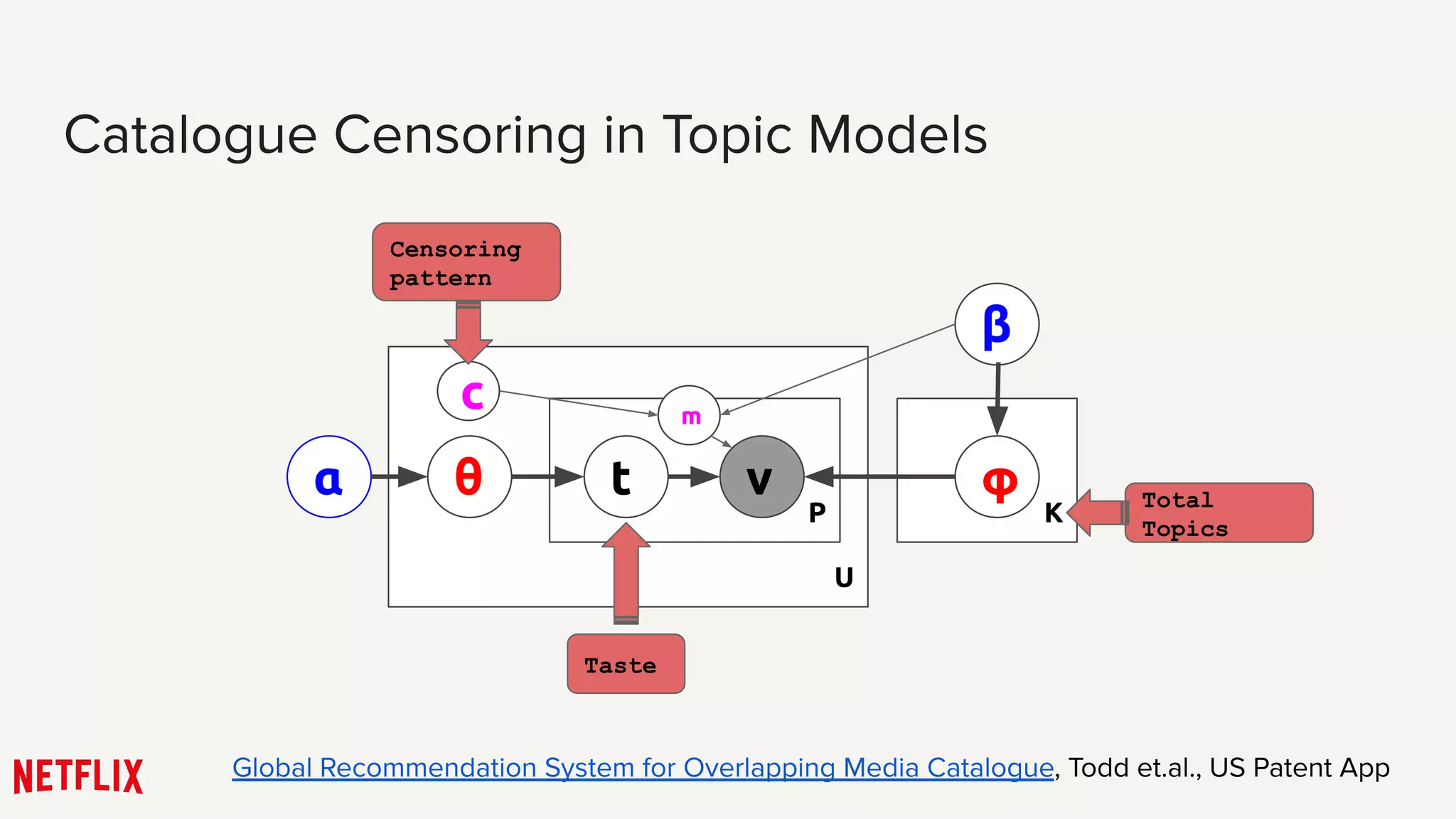

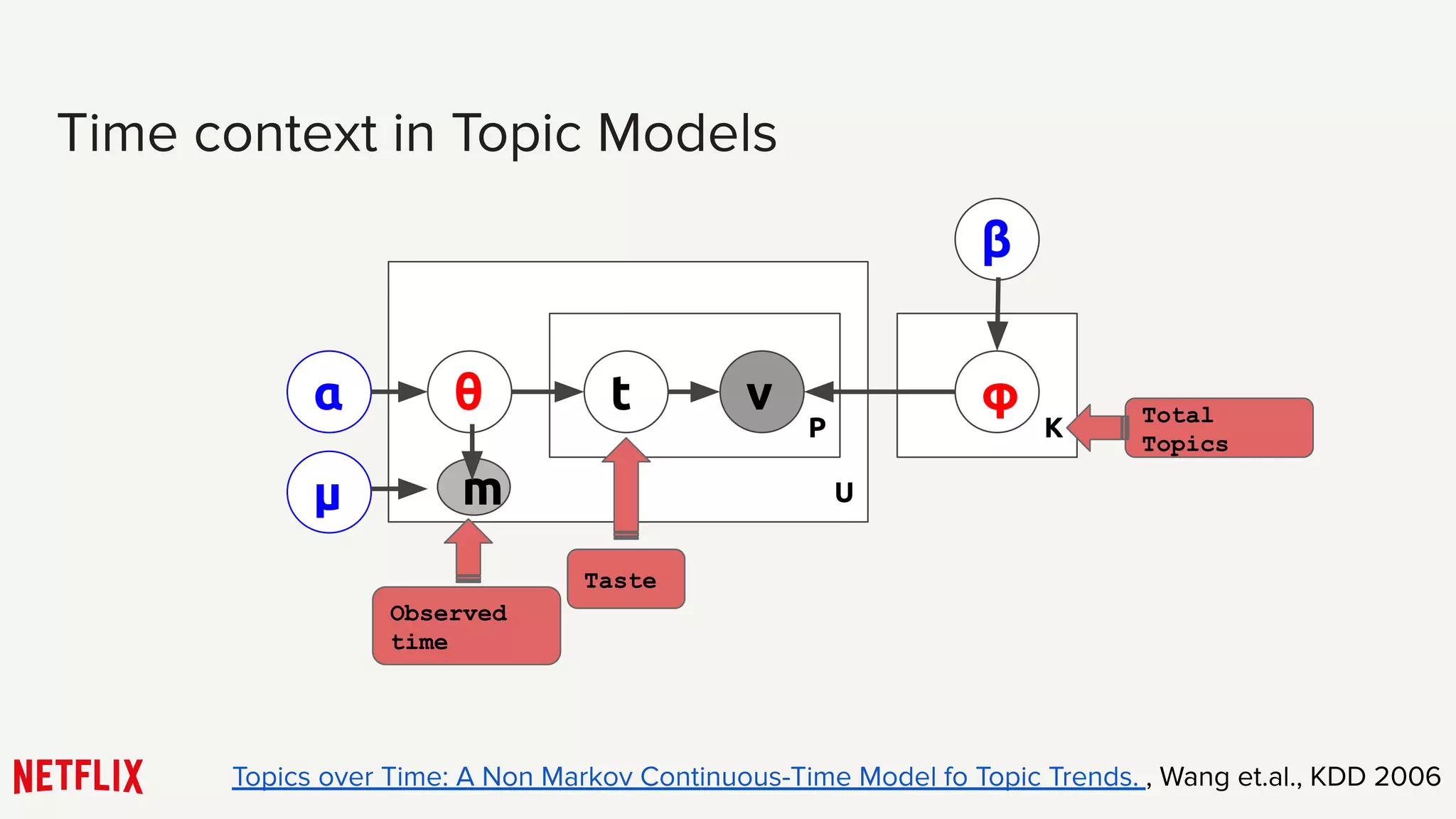

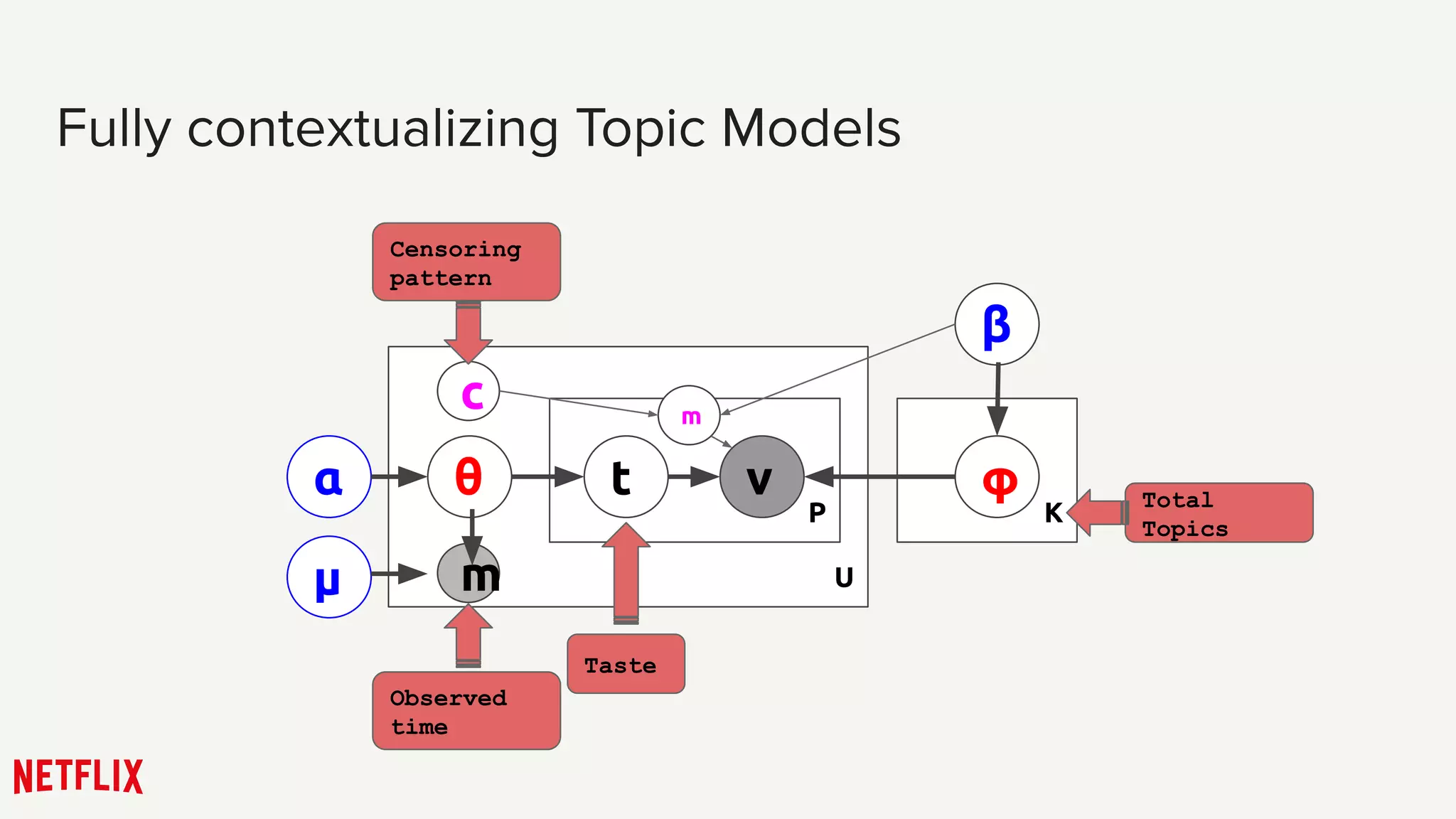

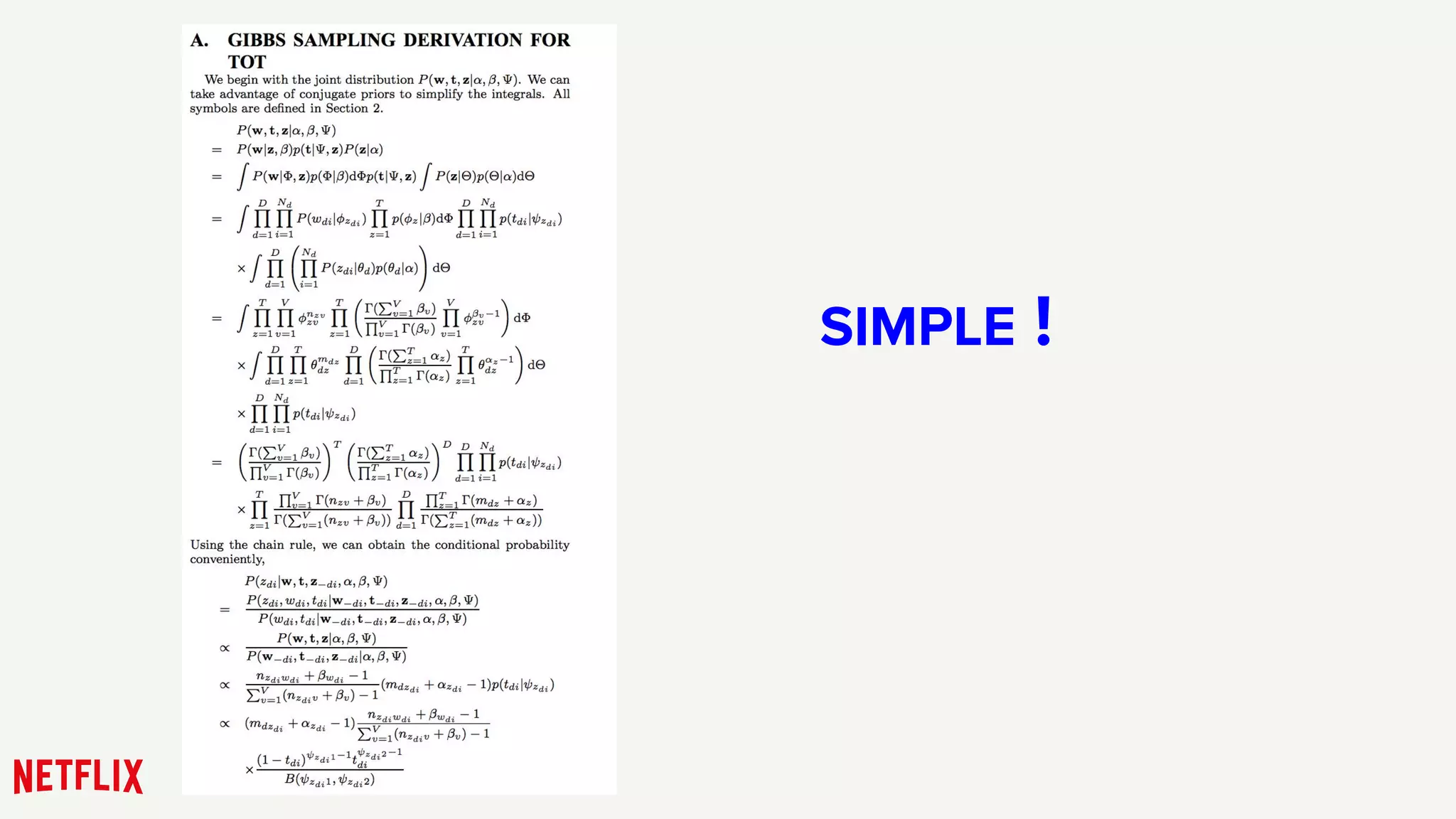

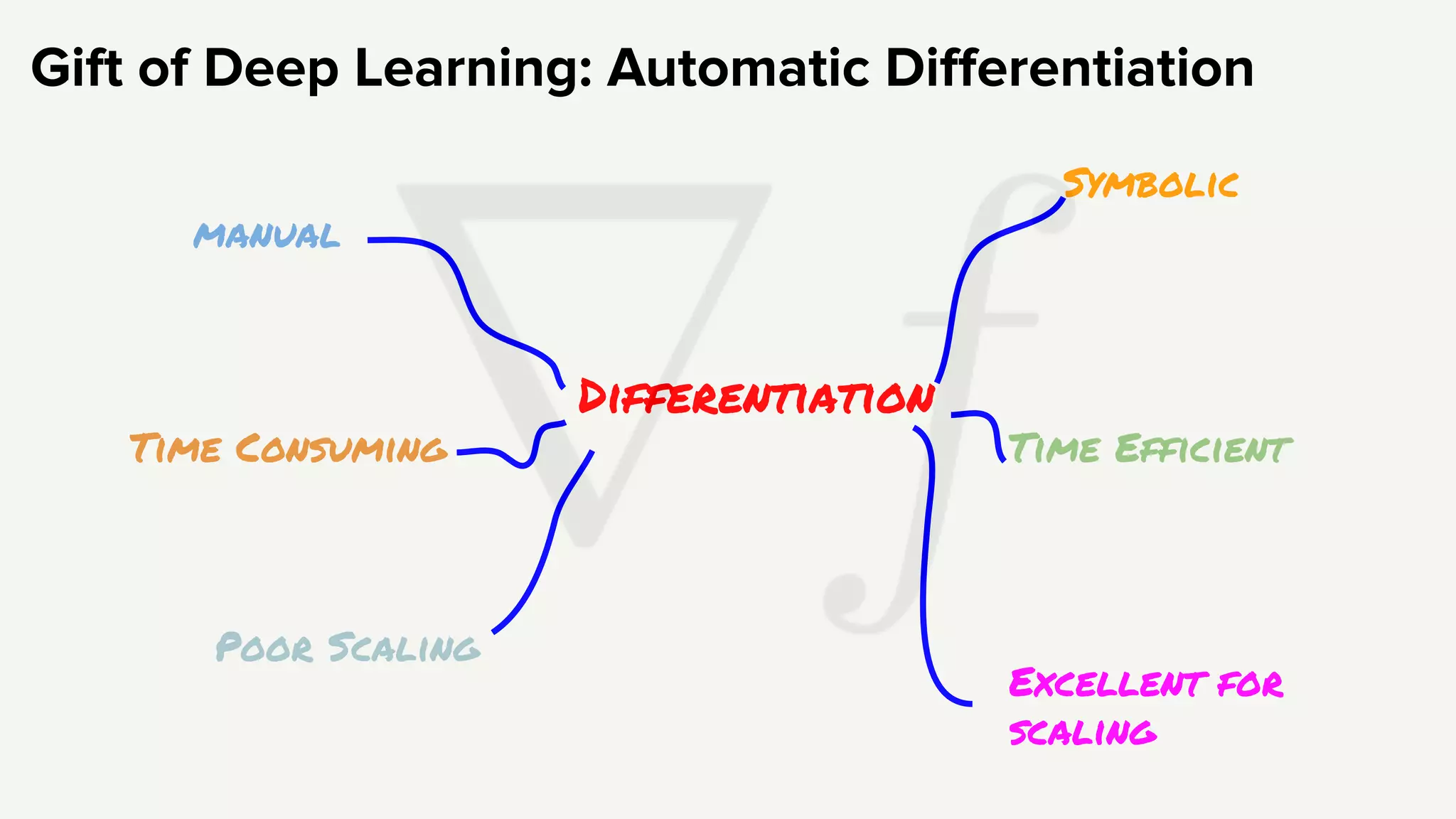

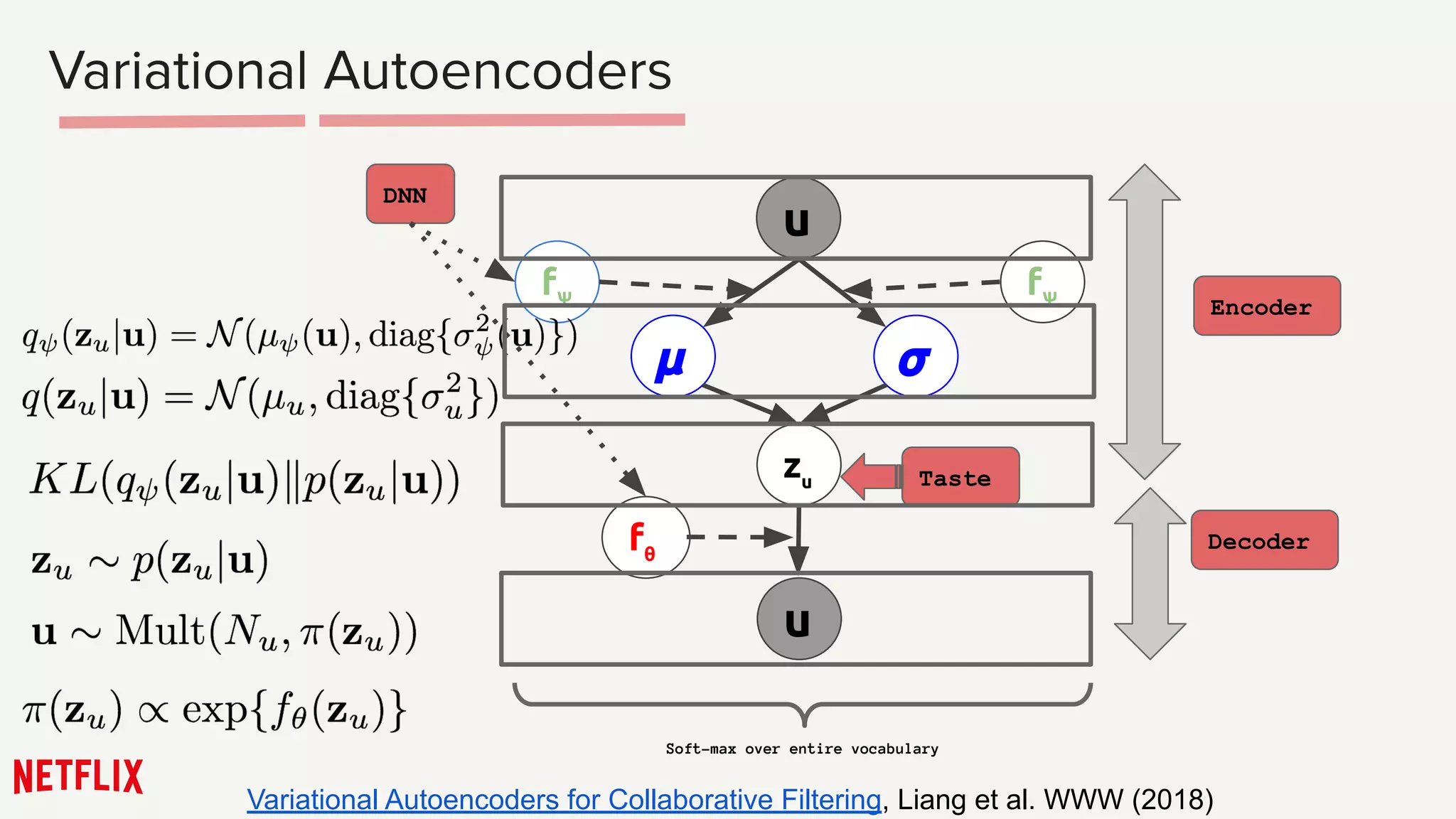

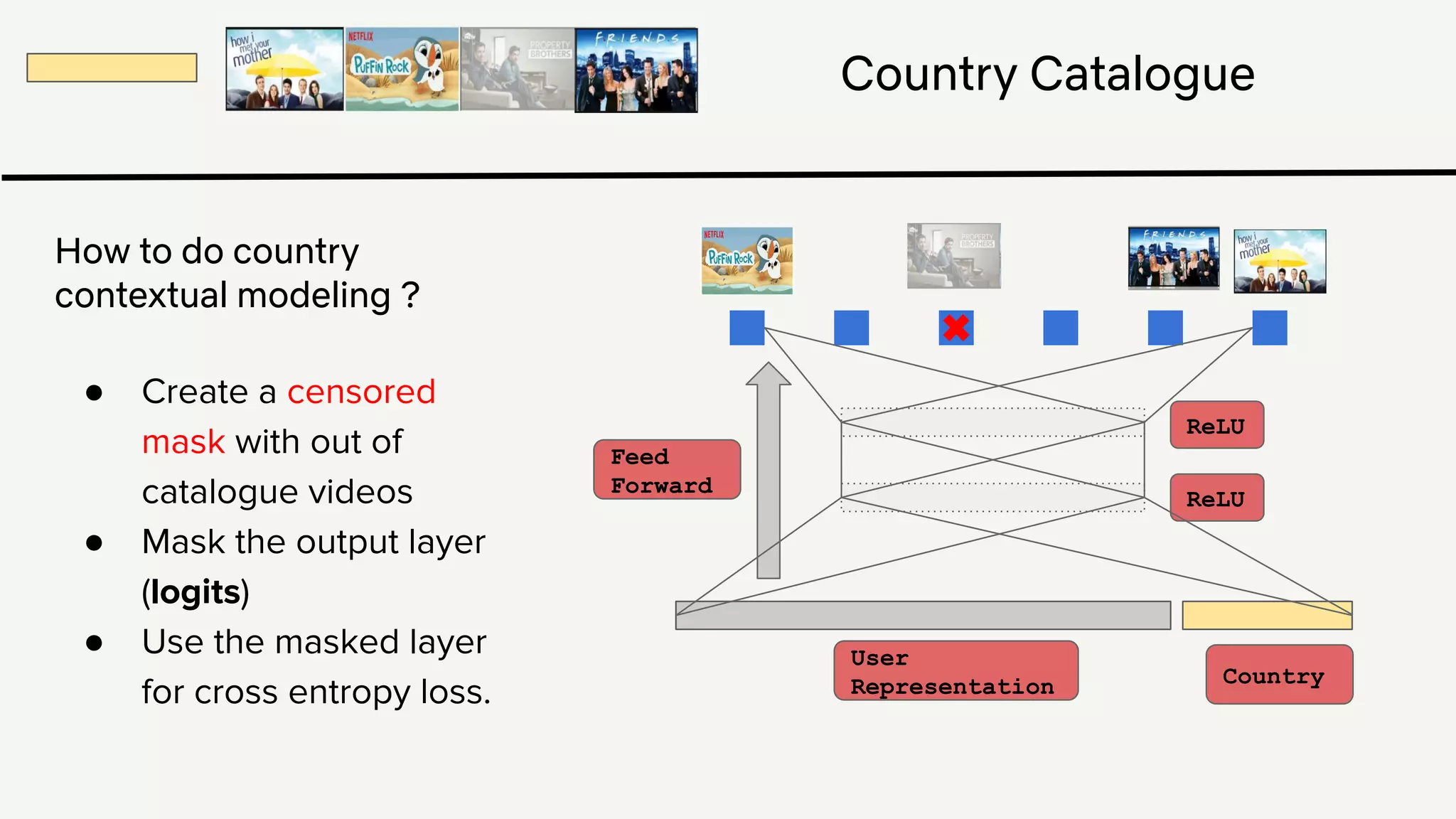

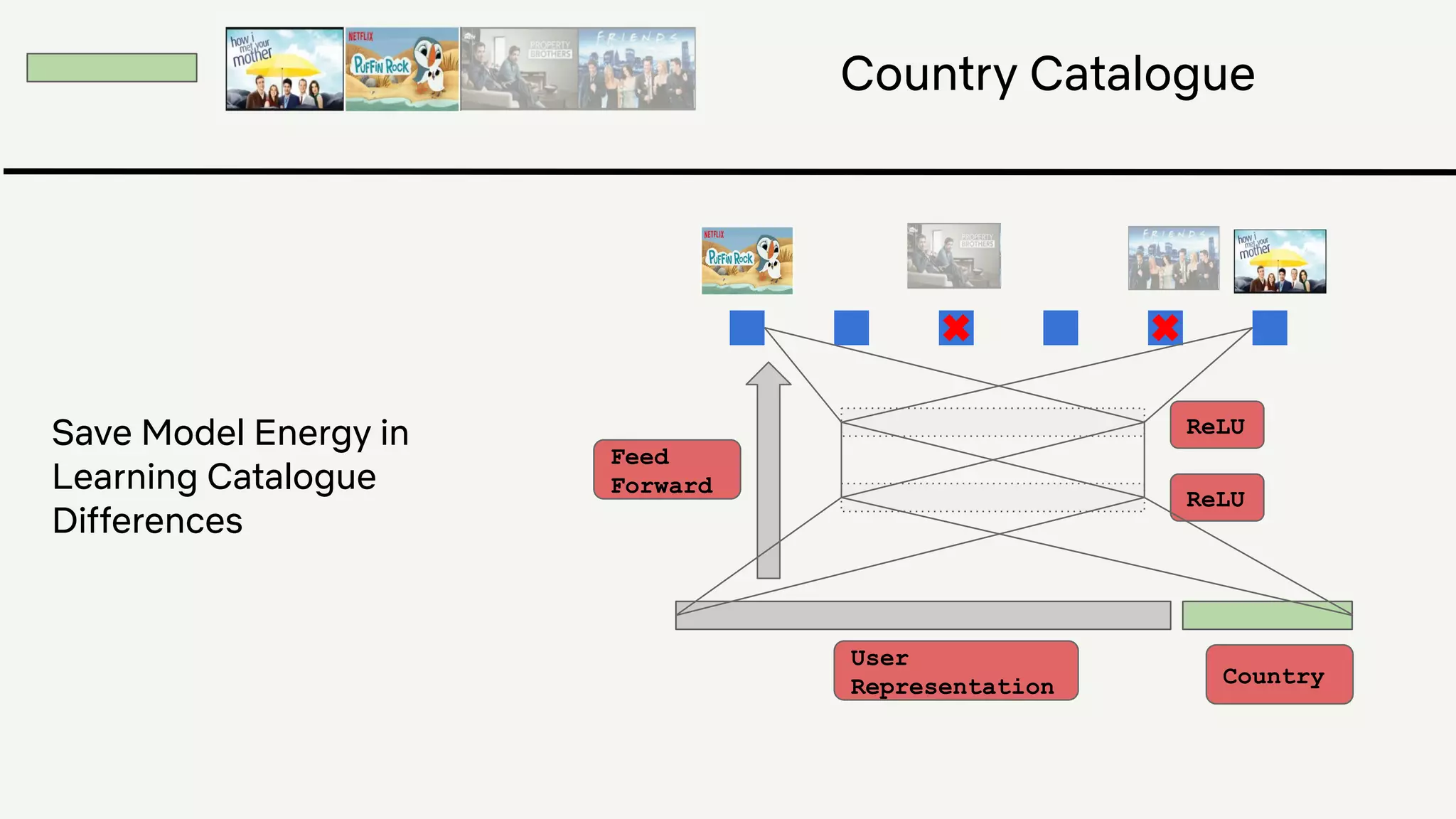

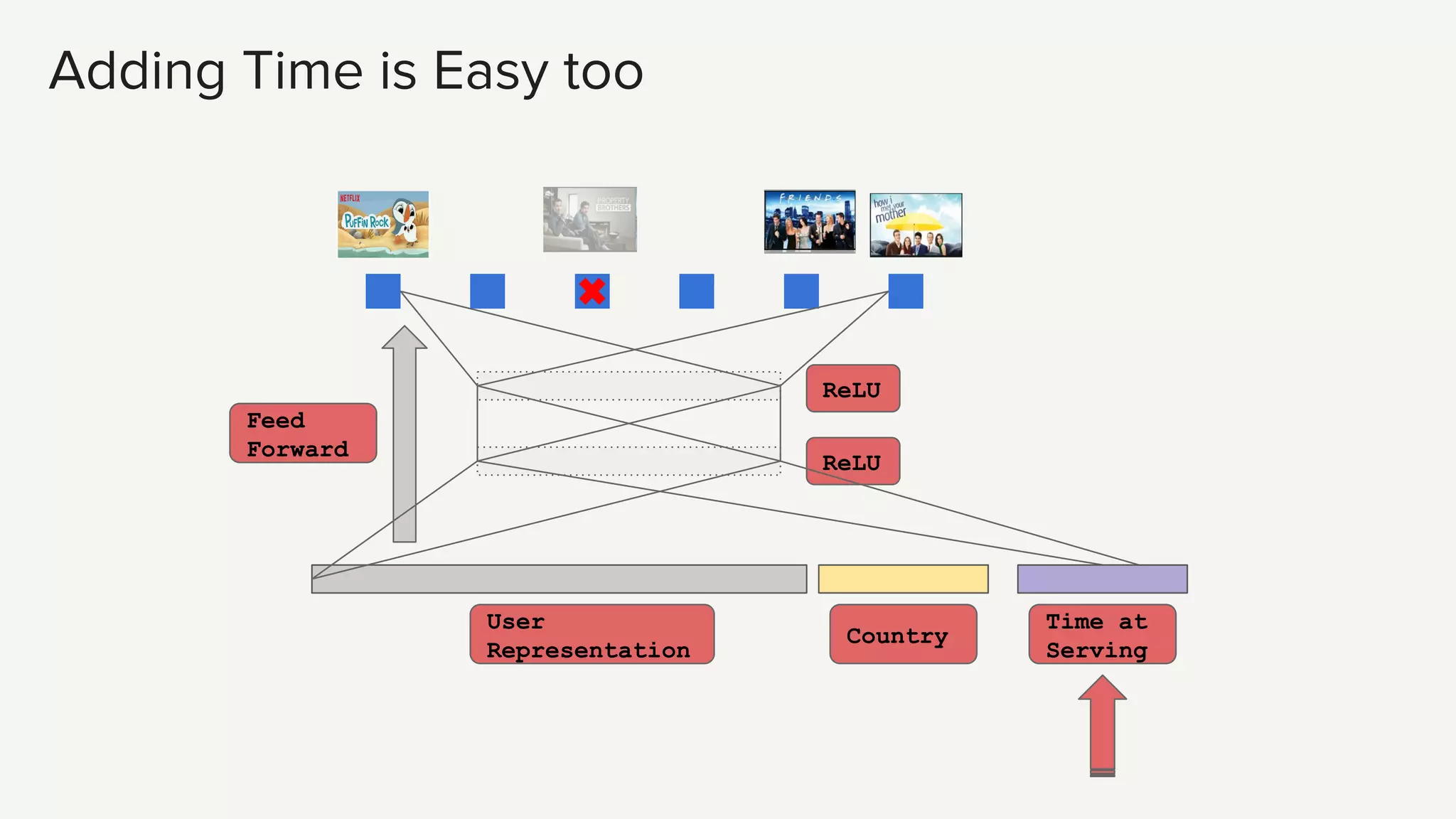

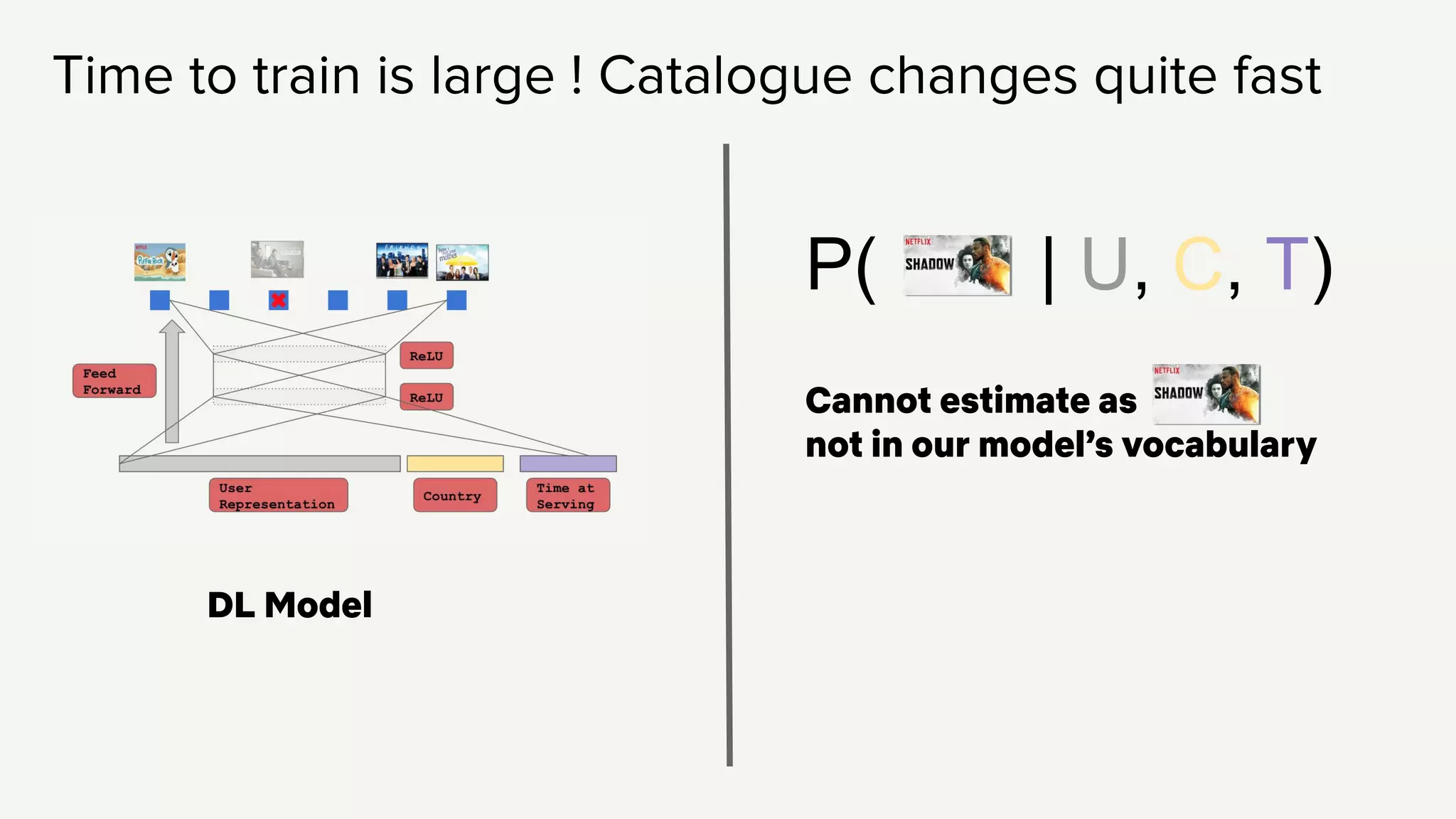

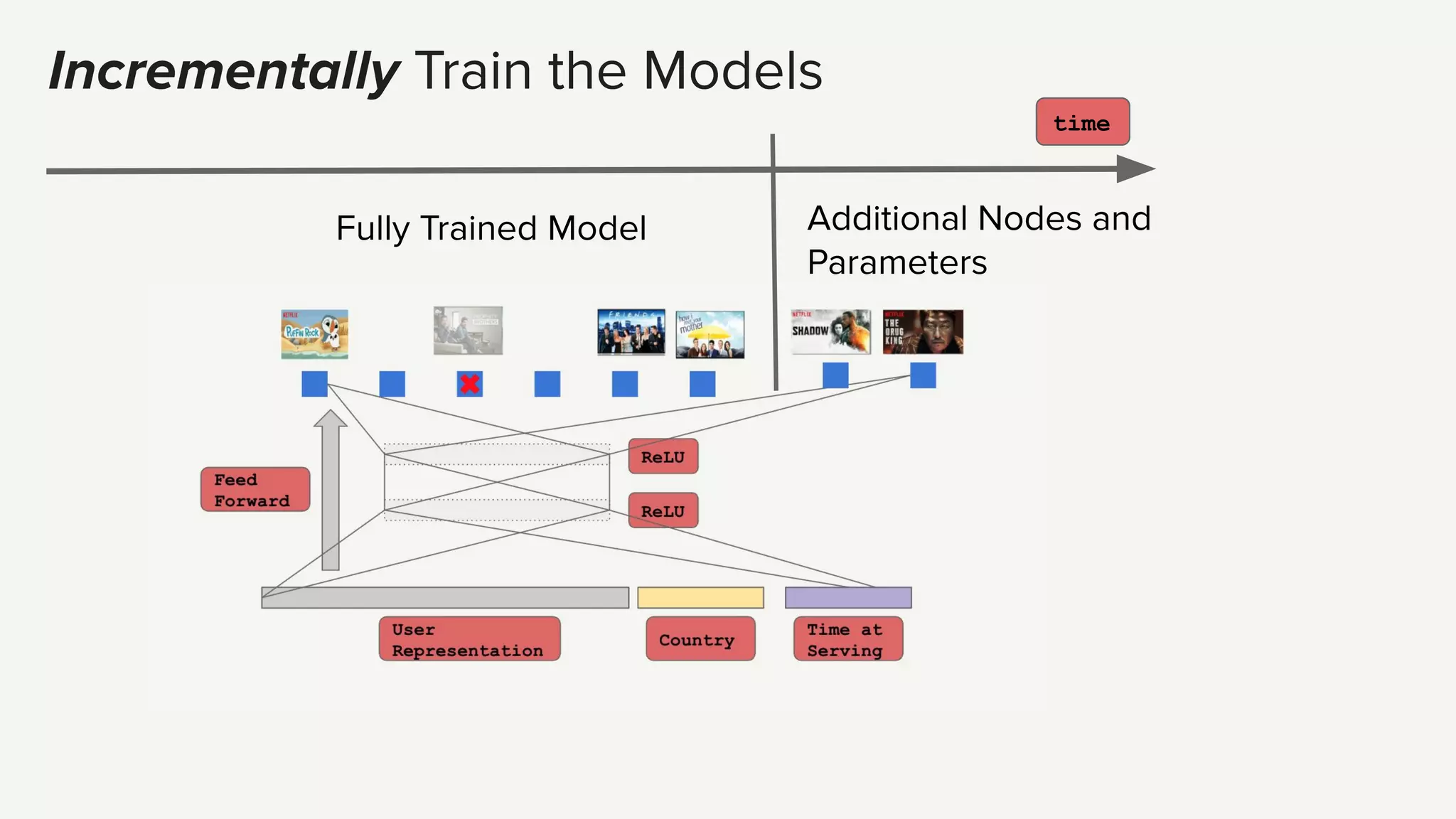

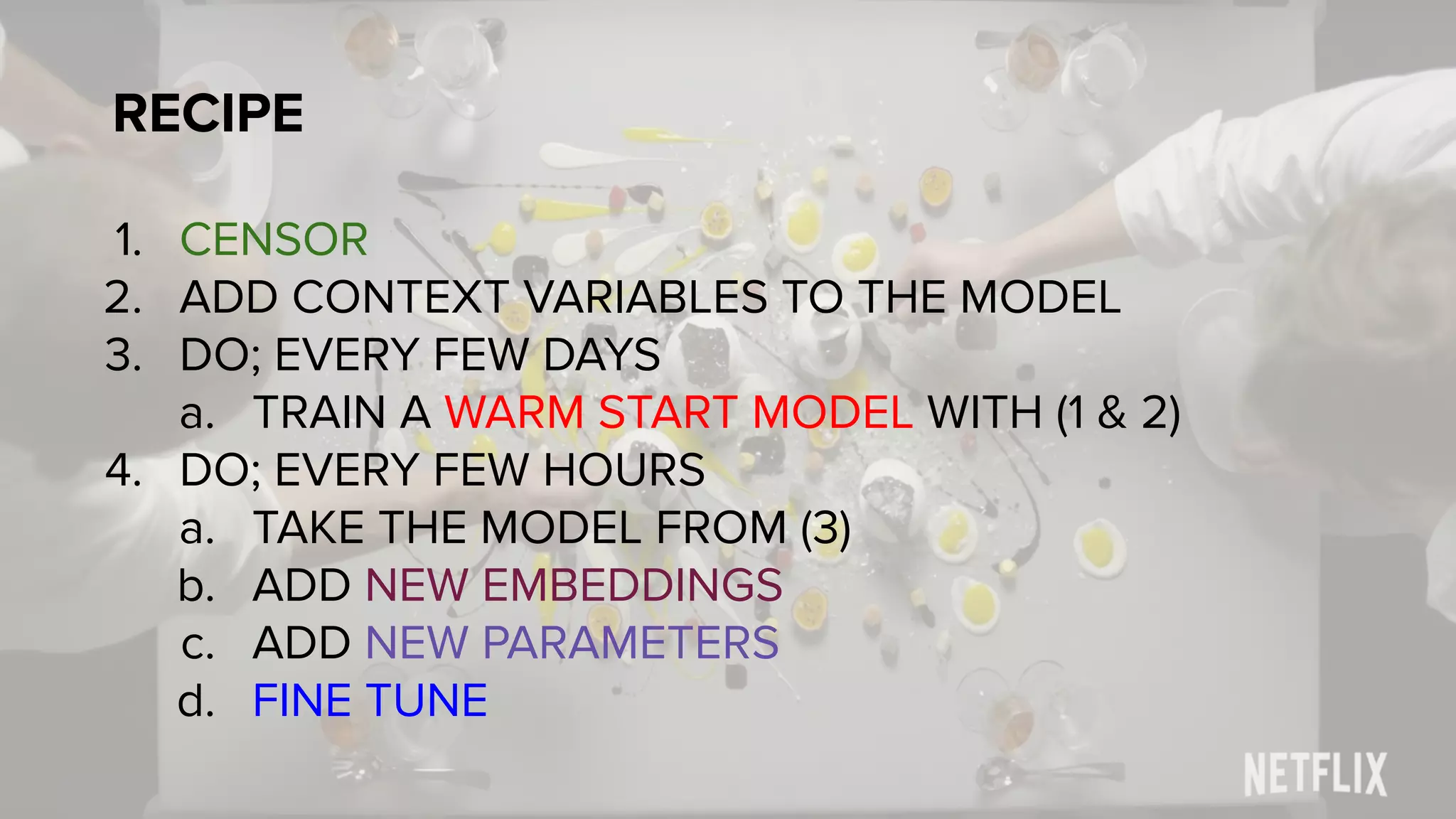

This document discusses Netflix's global deep learning recommender system. It describes how Netflix personalizes recommendations for over 150 million members across 190 countries. The system uses two kinds of collaborative filtering models. Soft clustering models group users with similar tastes and produce weighted popularity votes. Topic models represent each user's taste as a distribution over topics, and each topic as a distribution over content. Scaling these models globally is hard because catalogs differ by country and trends shift over time. The solution presented trains the models incrementally. It first censors content that is unavailable in a given country and adds contextual variables. It then periodically trains warm-start models with new embeddings and parameters, so the models can be updated efficiently at scale.
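The soft clustering "weighted popularity vote" and the censoring of unavailable titles can be illustrated together. The sketch below is a minimal, hypothetical version and not Netflix's implementation. It assumes each user has a soft membership `p(cluster | user)` and each cluster has a popularity distribution `p(title | cluster)`. Censoring is modeled here as dropping titles missing from the user's country catalog and renormalizing each cluster's popularity over the titles that remain. The function name `soft_cluster_scores` and its parameters are illustrative.

```python
from typing import Dict, List, Set


def soft_cluster_scores(
    membership: List[float],                     # hypothetical p(cluster k | user); sums to 1
    cluster_popularity: List[Dict[str, float]],  # hypothetical p(title | cluster k)
    available: Set[str],                         # titles in the user's country catalog
) -> Dict[str, float]:
    """Weighted popularity vote over clusters, censored to available titles."""
    scores: Dict[str, float] = {}
    for weight, popularity in zip(membership, cluster_popularity):
        # Censoring (assumed form): keep only titles the user could actually watch
        # and renormalize so the cluster's vote still sums to 1.
        mass = sum(p for title, p in popularity.items() if title in available)
        if mass == 0.0:
            continue  # this cluster has nothing available to vote for
        for title, p in popularity.items():
            if title in available:
                # Each cluster votes with strength equal to the user's membership in it.
                scores[title] = scores.get(title, 0.0) + weight * p / mass
    return scores


if __name__ == "__main__":
    membership = [0.7, 0.3]
    clusters = [
        {"A": 0.5, "B": 0.3, "C": 0.2},
        {"A": 0.1, "B": 0.1, "C": 0.8},
    ]
    # Title "B" is not licensed in this user's country, so it is censored.
    scores = soft_cluster_scores(membership, clusters, available={"A", "C"})
    for title, score in sorted(scores.items(), key=lambda kv: -kv[1]):
        print(title, round(score, 4))
```

In this toy example, "B" receives no score, and "A" (about 0.5333) ranks above "C" (about 0.4667). Renormalizing per cluster keeps a cluster's vote from shrinking just because some of its popular titles happen to be unavailable locally. That is one plausible reading of why censoring matters for country-specific catalogs.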