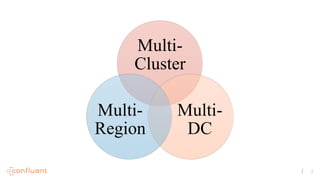

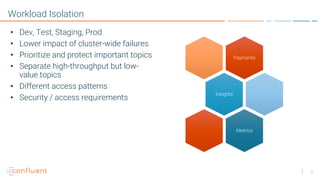

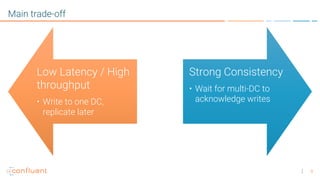

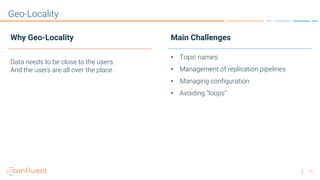

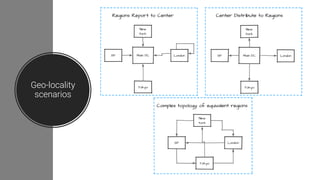

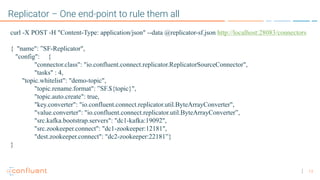

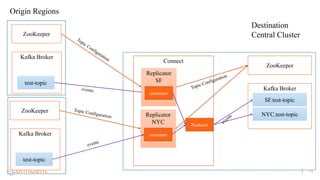

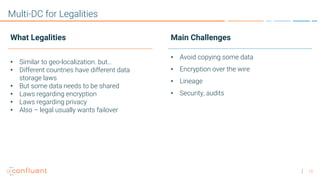

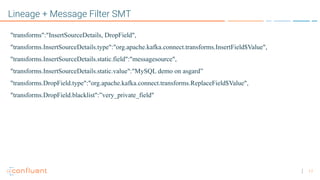

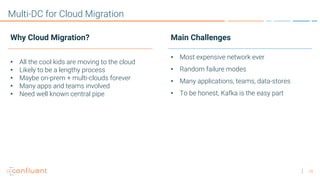

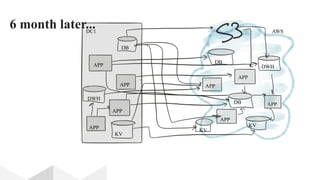

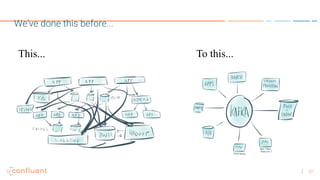

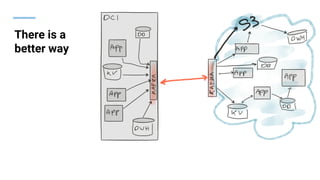

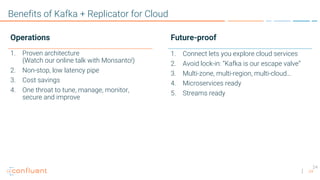

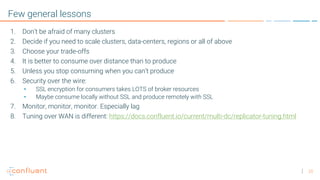

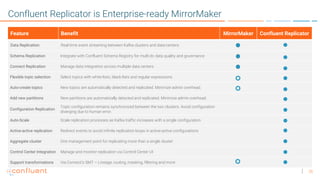

The document discusses why and how to run Kafka across multiple clusters and data centers. It cites reasons such as workload isolation, geo-locality, and legal compliance. It then weighs trade-offs such as latency versus consistency and covers the operational challenges involved, including the need for effective monitoring and configuration management. Finally, it presents tools such as Confluent Replicator that streamline replicating and integrating data across data centers.