The document discusses using knowledge base semantics in context-aware entity linking. It proposes a collective entity linking approach that identifies all entities mentioned in a text at once by leveraging an RDF knowledge base. It introduces a weighted semantic relatedness measure to compute the collective coherence score between candidate entities. Experimental results show the approach outperforms state-of-the-art methods on several benchmark datasets.

![10/18

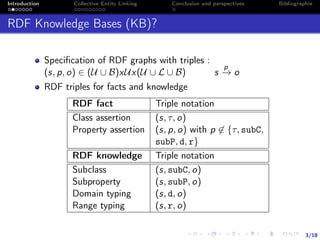

Introduction Collective Entity Linking Conclusion and perspectives Bibliographie

Candidate Entities Generation + Local score

Candidate Entities Generation :

Dictionary : Cross-Wiki

cross-wiki["stevejobs"] => [[’7412236’, 0.99],[’5042765’,0.01]]

Search engine : Wikipedia search

Local score (φ) :

Cosine similarity : cosine(Vmention, Vcandidate entity)

Wikipedia popularity(mention, candidate entity)

pop(m, e) =

n(m, e)

e ∈W

n(m, e )

(1)

n(m, e) = number of time m occurs as anchor of e](https://image.slidesharecdn.com/doceng2019-190926080124/85/Collective-entity-linking-with-WSRM-DocEng-19-13-320.jpg)
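The popularity score in Eq. (1) is a normalised anchor count, which a few lines of Python can illustrate. This is a minimal sketch, not the authors' implementation: the `anchor_counts` table and the `popularity` helper are hypothetical, and the counts are made up so that the result happens to mirror the 0.99 / 0.01 values of the dictionary example. The slides do not say whether the Cross-Wiki scores are computed this way.

```python
from collections import Counter

# Hypothetical anchor statistics: (mention, entity id) -> n(m, e), i.e. how
# often the mention occurs as the anchor text of a link to that entity.
# The counts below are invented for illustration only.
anchor_counts = Counter({
    ("stevejobs", "7412236"): 99,
    ("stevejobs", "5042765"): 1,
})


def popularity(mention, counts):
    """Return {entity: pop(m, e)} for every entity linked from this mention."""
    per_entity = {e: n for (m, e), n in counts.items() if m == mention}
    total = sum(per_entity.values())  # denominator of Eq. (1)
    if total == 0:
        return {}  # unseen mention: no candidate entities
    return {e: n / total for e, n in per_entity.items()}


scores = popularity("stevejobs", anchor_counts)
# Candidates ranked by decreasing popularity.
ranked = sorted(scores.items(), key=lambda item: item[1], reverse=True)
```

By construction the scores of all candidates of one mention sum to 1, so they can be compared directly across candidates of that mention.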

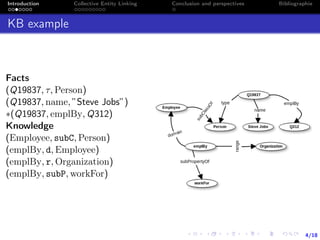

![Slide 11/18](https://image.slidesharecdn.com/doceng2019-190926080124/85/Collective-entity-linking-with-WSRM-DocEng-19-14-320.jpg)

Word2vec computes φ()

Learns word representations based on their context.

Two models:

Continuous Bag-of-Words (CBOW): predicts a word from its context

Skip-Gram: predicts the context of a given word

Example:

dataset: the cat sits on the mat.

half-window: 1

CBOW: ([the,sits],cat), ([cat,on],sits), ([sits,the],on), ([on,mat],the)

Skip-Gram: (cat,[the,sits]), (sits,[cat,on]), (on,[sits,the]), (the,[on,mat])

Reflects semantic proximity

Computes cosine similarity
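The training pairs of the example can be reproduced with a short sketch. The helper names `context_pairs` and `cosine` are hypothetical, and the sketch assumes, as the slide's example suggests, that words without a full window on both sides (the first "the" and "mat") are not used as centre words. It only builds the pairs and a plain cosine function; it does not train a Word2vec model.

```python
import math


def context_pairs(tokens, half_window=1):
    """Return (center word, context words) for positions with a full window."""
    pairs = []
    for i in range(half_window, len(tokens) - half_window):
        context = tokens[i - half_window:i] + tokens[i + 1:i + 1 + half_window]
        pairs.append((tokens[i], context))
    return pairs


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0 or norm_v == 0:
        return 0.0  # convention chosen here for zero vectors
    return dot / (norm_u * norm_v)


tokens = "the cat sits on the mat".split()

# Skip-Gram: the word is the input, its context is the target.
skip_gram = context_pairs(tokens, half_window=1)

# CBOW: the same pairs, with the context as input and the word as target.
cbow = [(context, word) for word, context in skip_gram]
```

Once vectors have been learned, a function like `cosine` would be applied to the mention vector and the candidate entity vector to obtain the local score.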

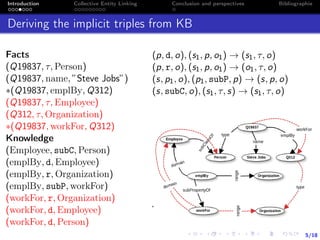

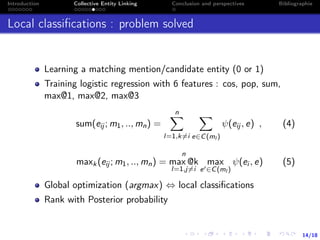

![15/18

Introduction Collective Entity Linking Conclusion and perspectives Bibliographie

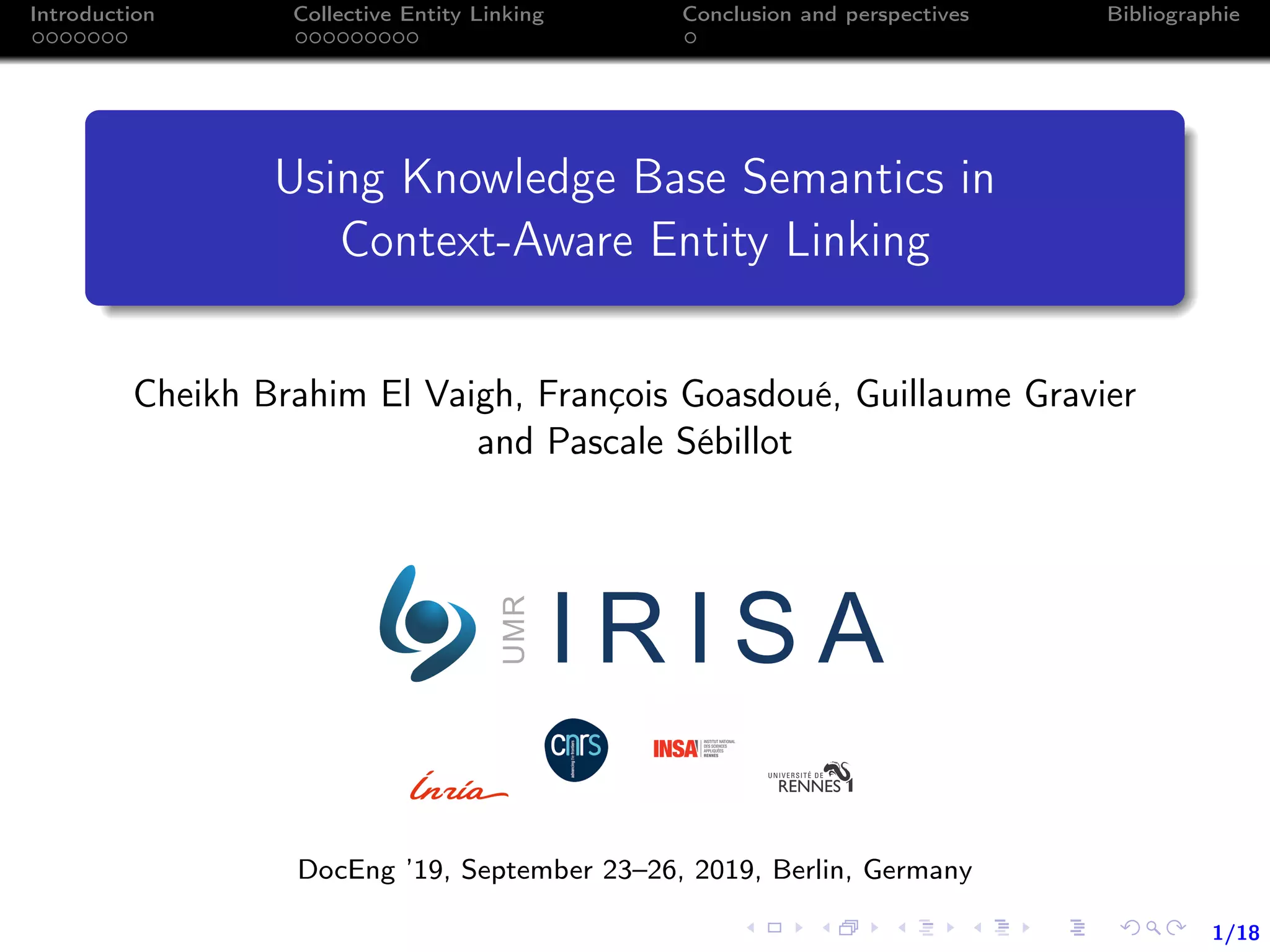

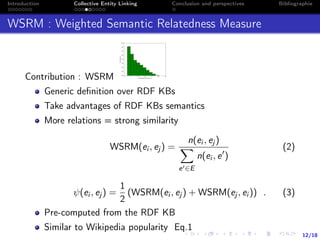

Datasets

AIDA [4] : entity annotated corpus of Reuters news

documents

Reuters128 [9] : Economic news articles

RSS500 [9] : RSS feeds including all major worldwide

newspapers

TAC-KBP 2016-2017 datasets [5, 6] : Newswire and

forum-discussion documents

Dataset Nb. docs Nb. mentions Avg nb. mentions/doc

TAC-KBP 2016 eval 169 9231 54.6

TAC-KBP 2017 eval 167 6915 41.4

AIDA-train 846 18519 21.9

AIDA-valid 216 4784 22.1

AIDA-test 231 4479 19.4

Reuters128 128 881 6.9

RSS-500 500 1000 2

Table: Statistics on the used datasets.](https://image.slidesharecdn.com/doceng2019-190926080124/85/Collective-entity-linking-with-WSRM-DocEng-19-18-320.jpg)
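The last column of the table is the number of mentions divided by the number of documents. As a quick sanity check, the sketch below recomputes it from the first two columns; the `datasets` dictionary simply restates the table, and rounding to one decimal is an assumption about how the slide's values were obtained.

```python
# (number of documents, number of mentions), copied from the table.
datasets = {
    "TAC-KBP 2016 eval": (169, 9231),
    "TAC-KBP 2017 eval": (167, 6915),
    "AIDA-train": (846, 18519),
    "AIDA-valid": (216, 4784),
    "AIDA-test": (231, 4479),
    "Reuters128": (128, 881),
    "RSS-500": (500, 1000),
}

# Average number of mentions per document, rounded to one decimal.
averages = {
    name: round(mentions / docs, 1)
    for name, (docs, mentions) in datasets.items()
}

for name, avg in averages.items():
    print(f"{name}: {avg}")
```

The averages span more than an order of magnitude, from about 2 mentions per document (RSS-500) to more than 50 (TAC-KBP 2016), which matters for a collective approach that relies on the other mentions in the same document.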

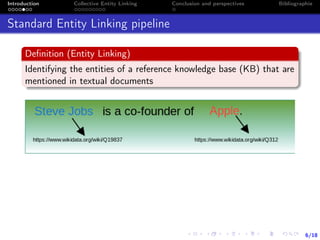

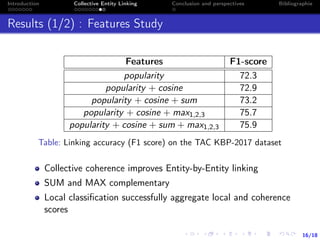

![17/18

Introduction Collective Entity Linking Conclusion and perspectives Bibliographie

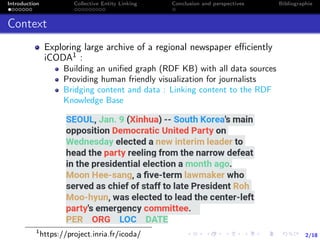

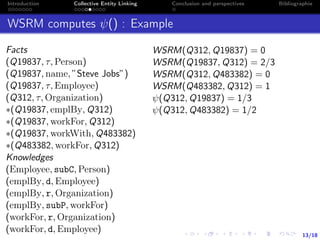

Results (2/2) : Comparison to State-of-the-Art Approaches

Approach AIDA-A AIDA-B Reuters128 RSS-500

CNN [2] (Entity-by-Entity) - 85.5 - -

End-to-End [7] 89.4 82.4 54.6 42.2

NCEL [1] 79.0 80.0 - -

AGDISTIS [10] 57.5 57.8 68.9 54.2

Babelfy [8] 71.9 75.5 54.8 64.1

AIDA [4] 74.3 76.5 56.6 65.5

PBoH [3] 79.4 80.0 68.3 55.3

WSRM 90.6 87.7 79.9 79.3

Table: Micro-averaged F1 score for different methods on the four

datasets

WSRM stable for different datasets](https://image.slidesharecdn.com/doceng2019-190926080124/85/Collective-entity-linking-with-WSRM-DocEng-19-20-320.jpg)
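For readers unfamiliar with the metric, micro-averaged F1 pools the true positives, false positives and false negatives over all documents before computing precision and recall. The sketch below follows that standard definition; the `micro_f1` helper and the toy gold and predicted links are hypothetical, and the exact evaluation protocol behind the table (for example how unlinkable mentions are handled) is not stated in the slides.

```python
def micro_f1(gold_by_doc, pred_by_doc):
    """Micro-averaged F1 over documents; links are (mention, entity) pairs."""
    tp = fp = fn = 0
    for doc in gold_by_doc.keys() | pred_by_doc.keys():
        gold = gold_by_doc.get(doc, set())
        pred = pred_by_doc.get(doc, set())
        tp += len(gold & pred)   # correct links
        fp += len(pred - gold)   # predicted links that are wrong
        fn += len(gold - pred)   # gold links that were missed
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Toy data: entity ids are invented for illustration.
gold = {
    "doc1": {("m1", "e1"), ("m2", "e2"), ("m3", "e3")},
    "doc2": {("m1", "e4")},
}
pred = {
    "doc1": {("m1", "e1"), ("m2", "eX"), ("m3", "e3")},
    "doc2": {("m1", "e4")},
}

score = micro_f1(gold, pred)
```

Because counts are pooled, documents with many mentions weigh more than short ones, unlike a macro average that would first compute F1 per document.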