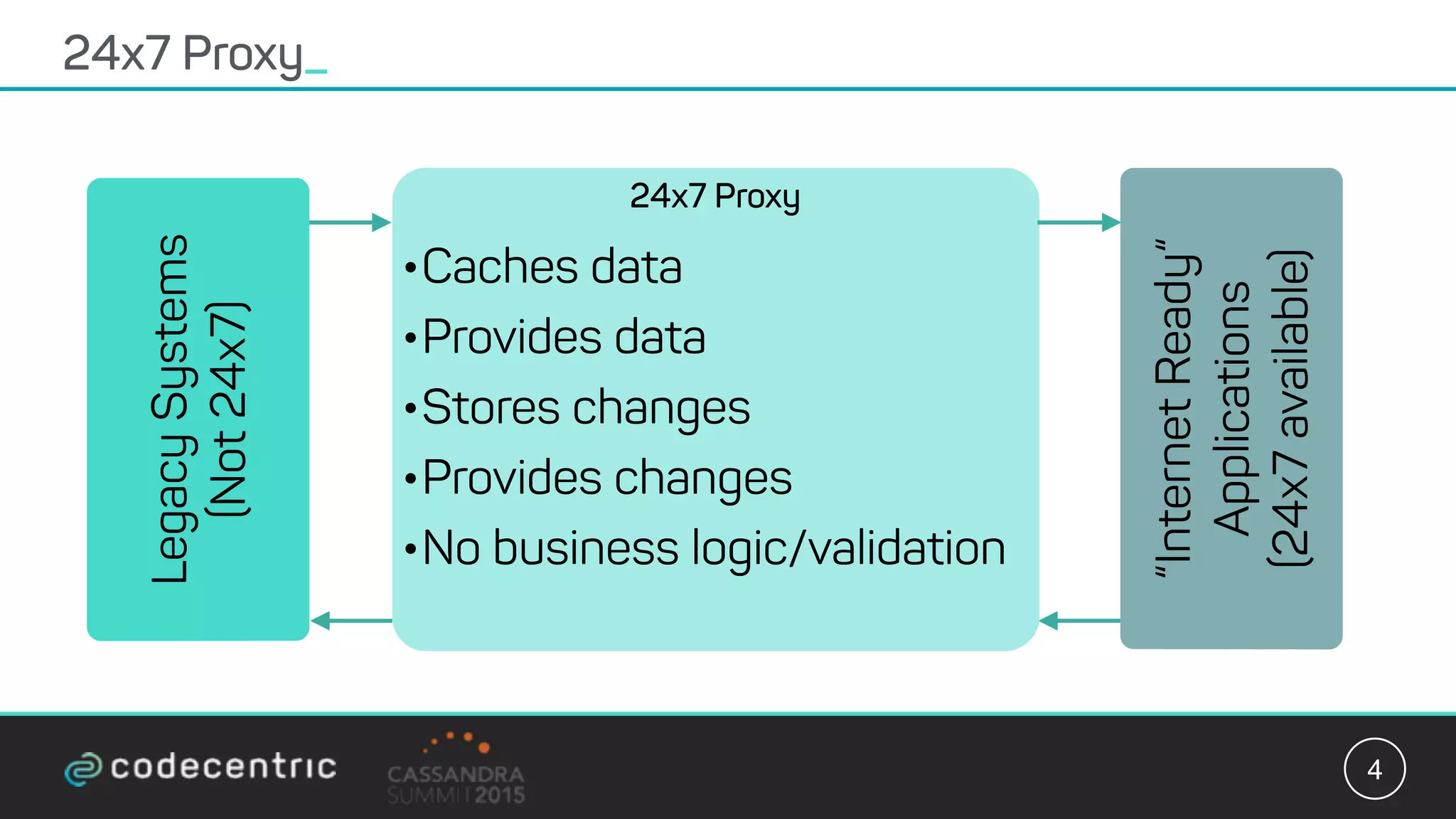

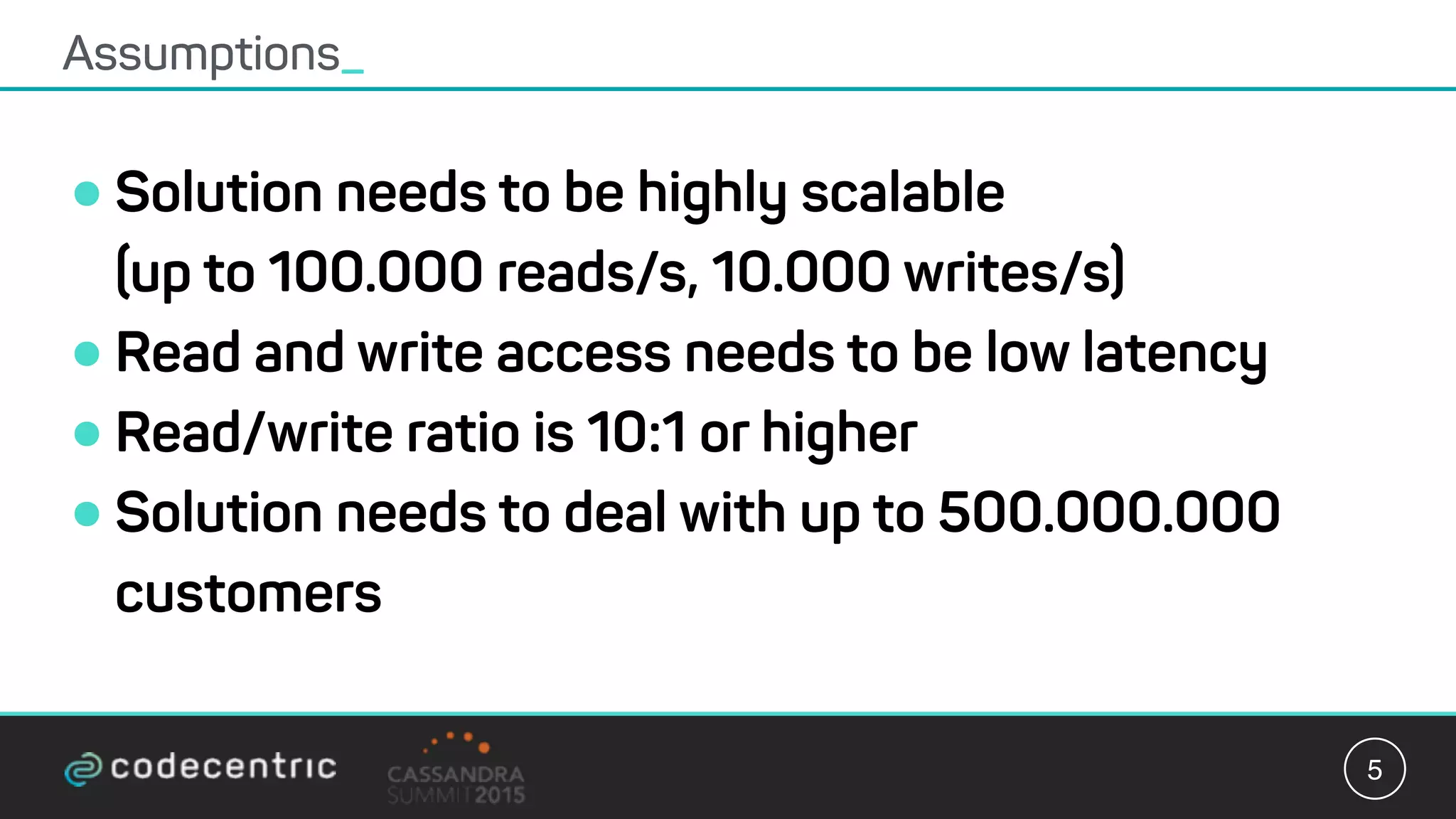

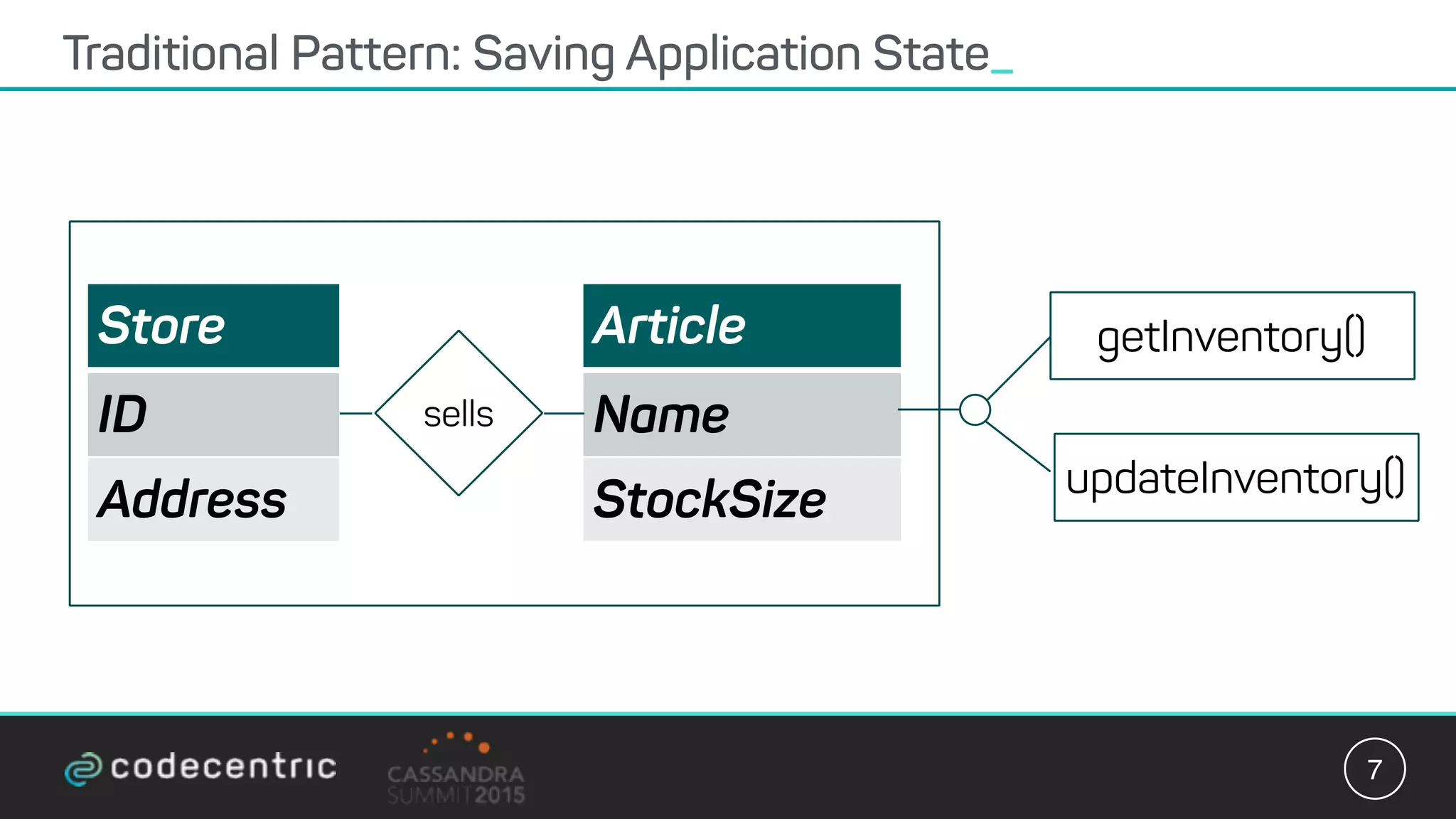

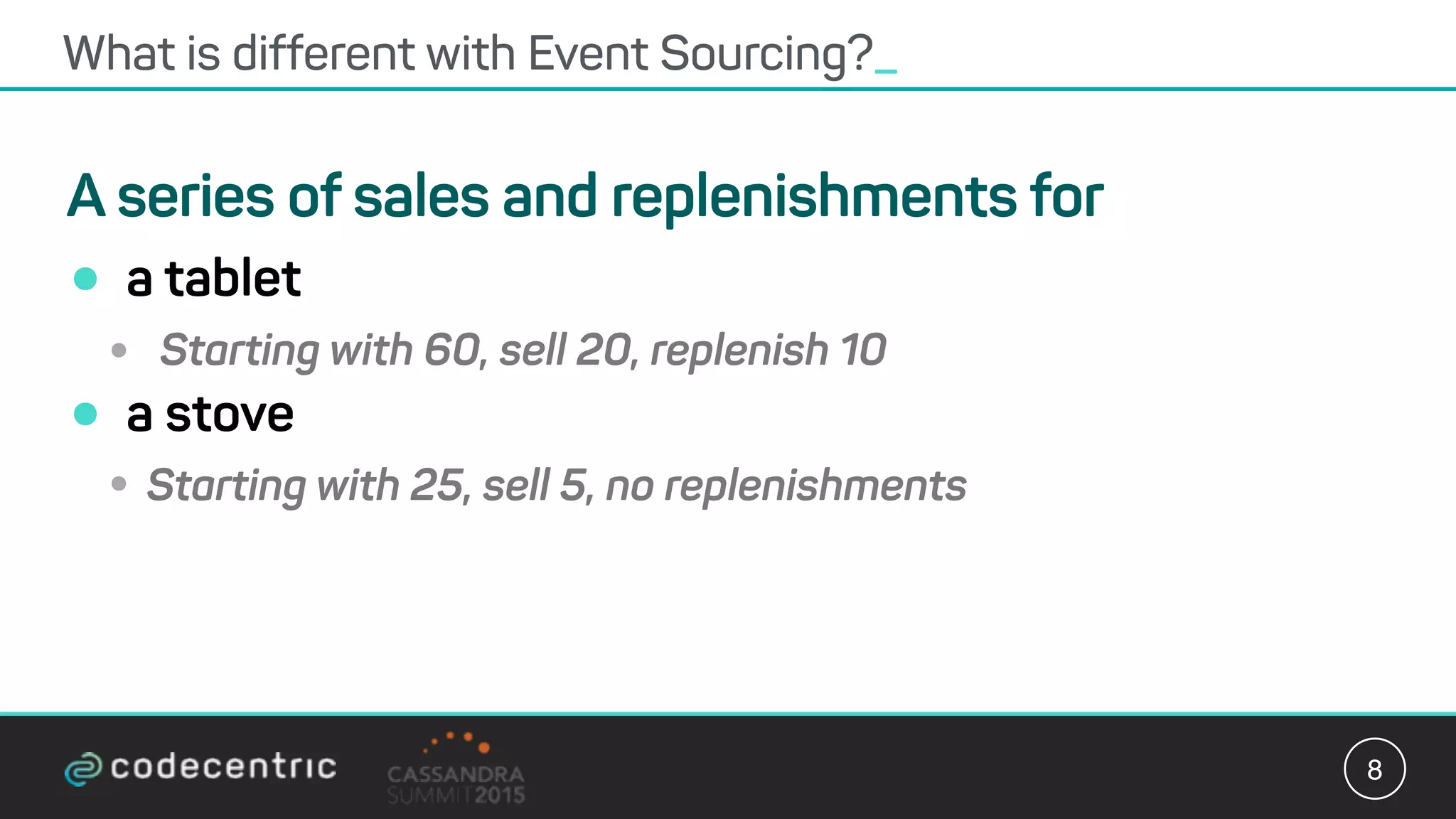

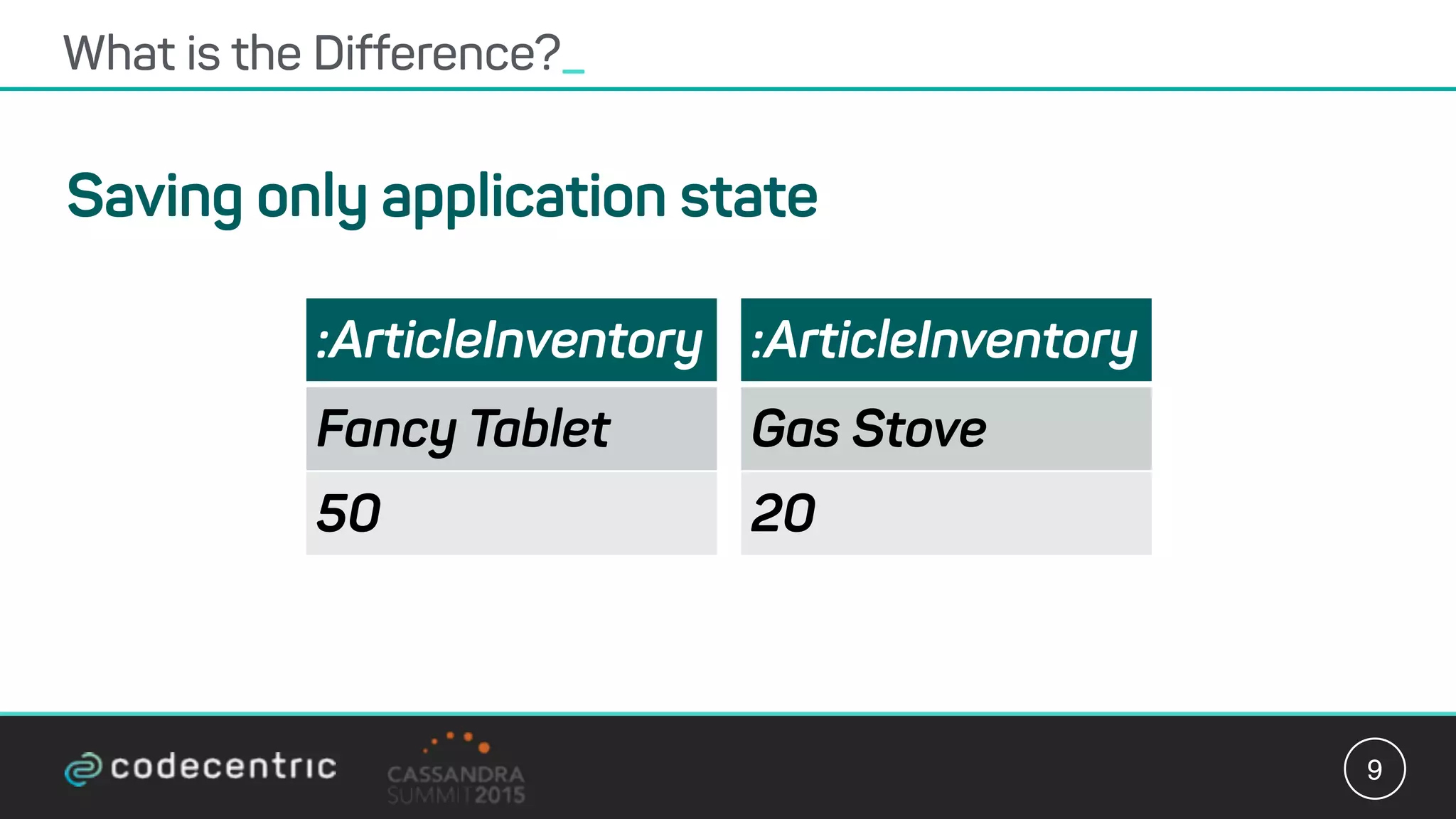

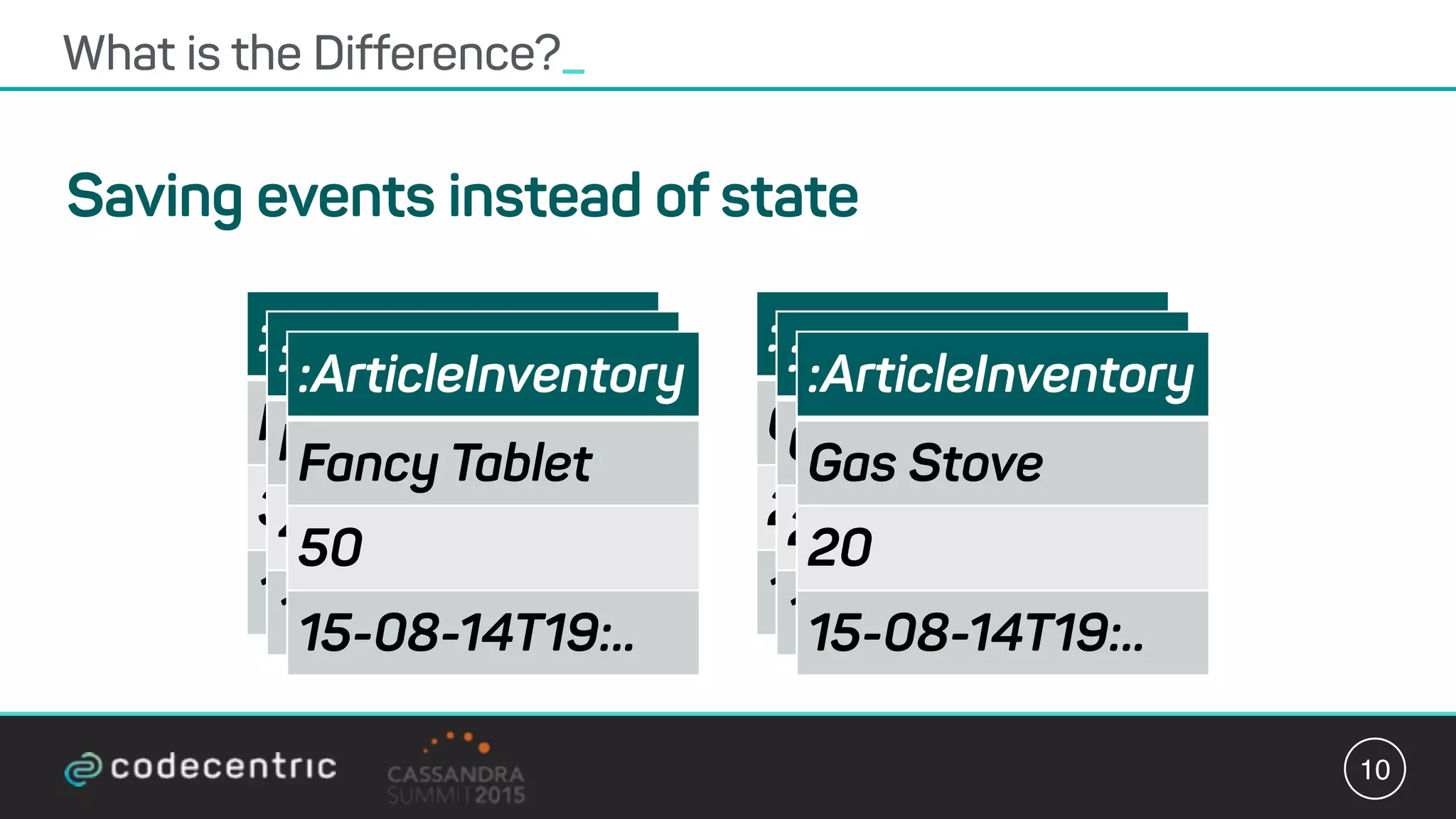

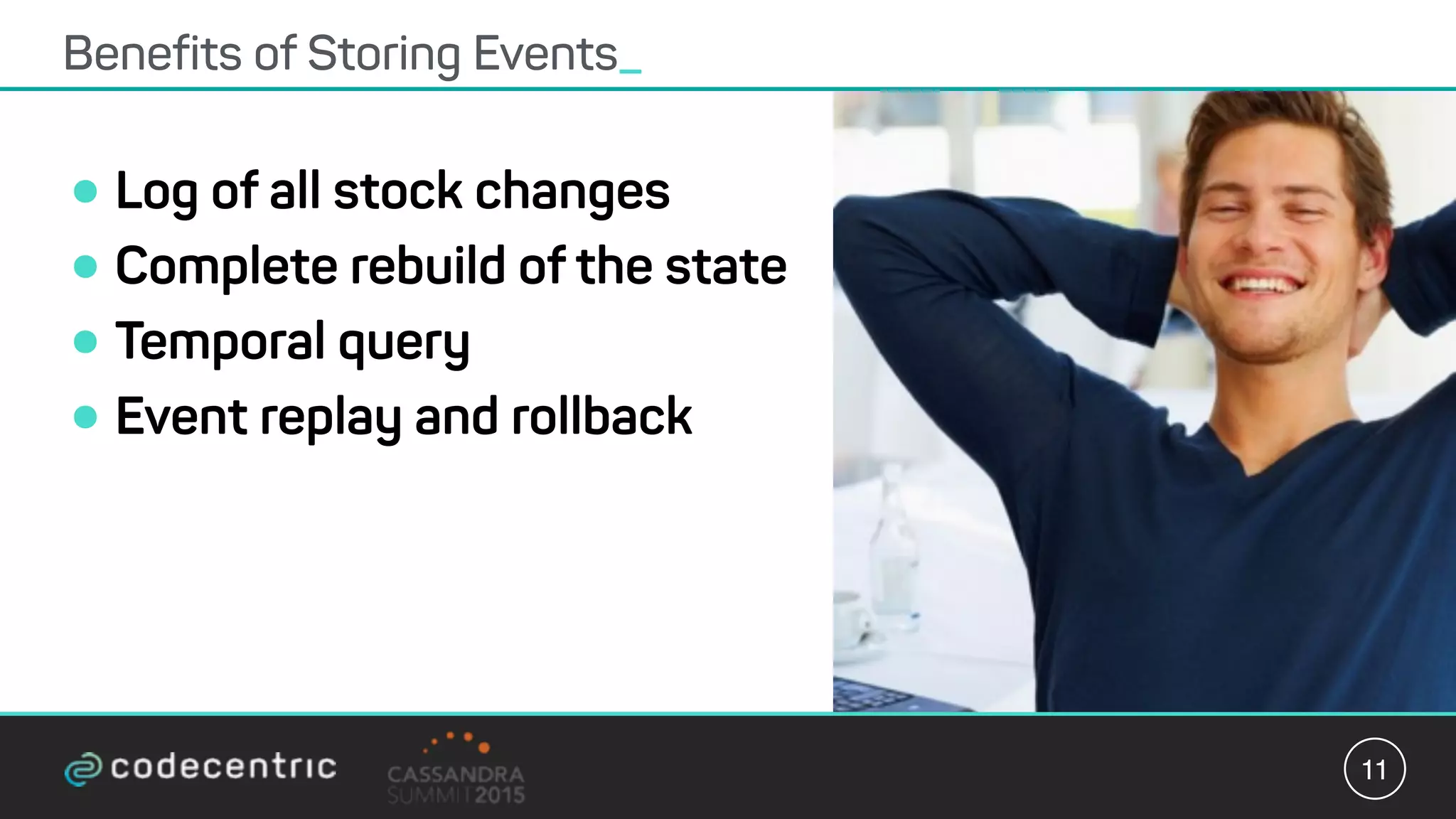

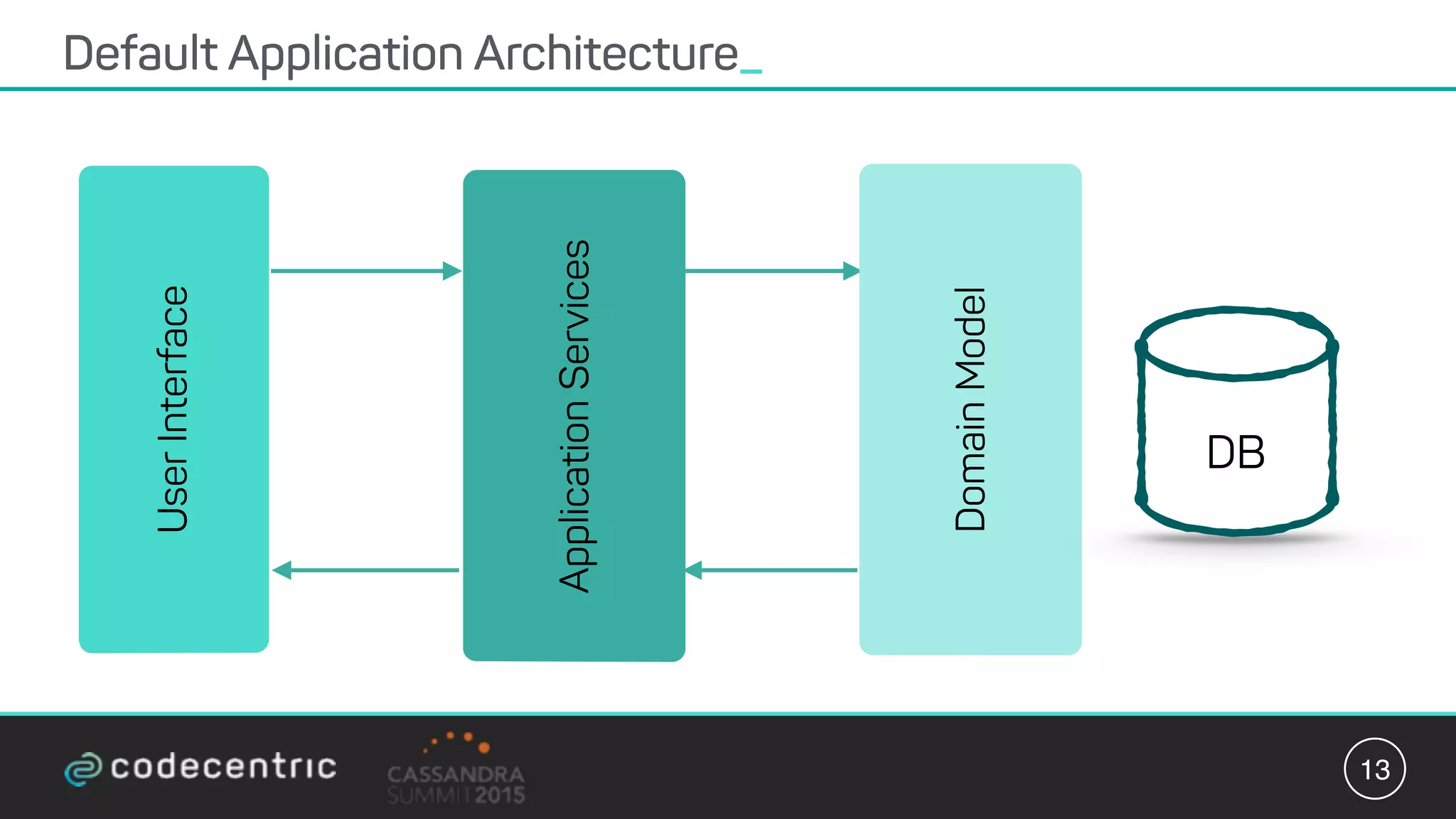

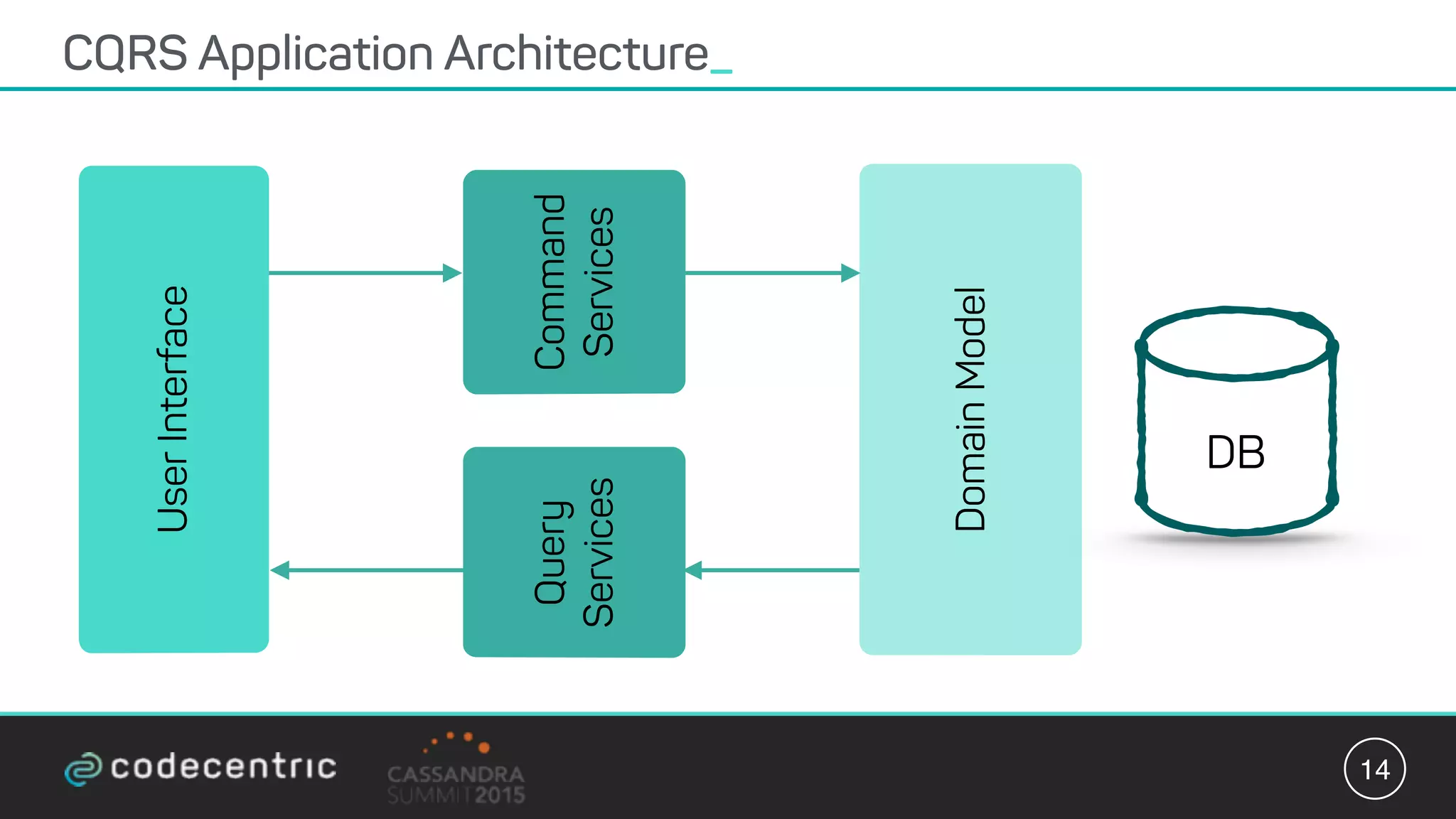

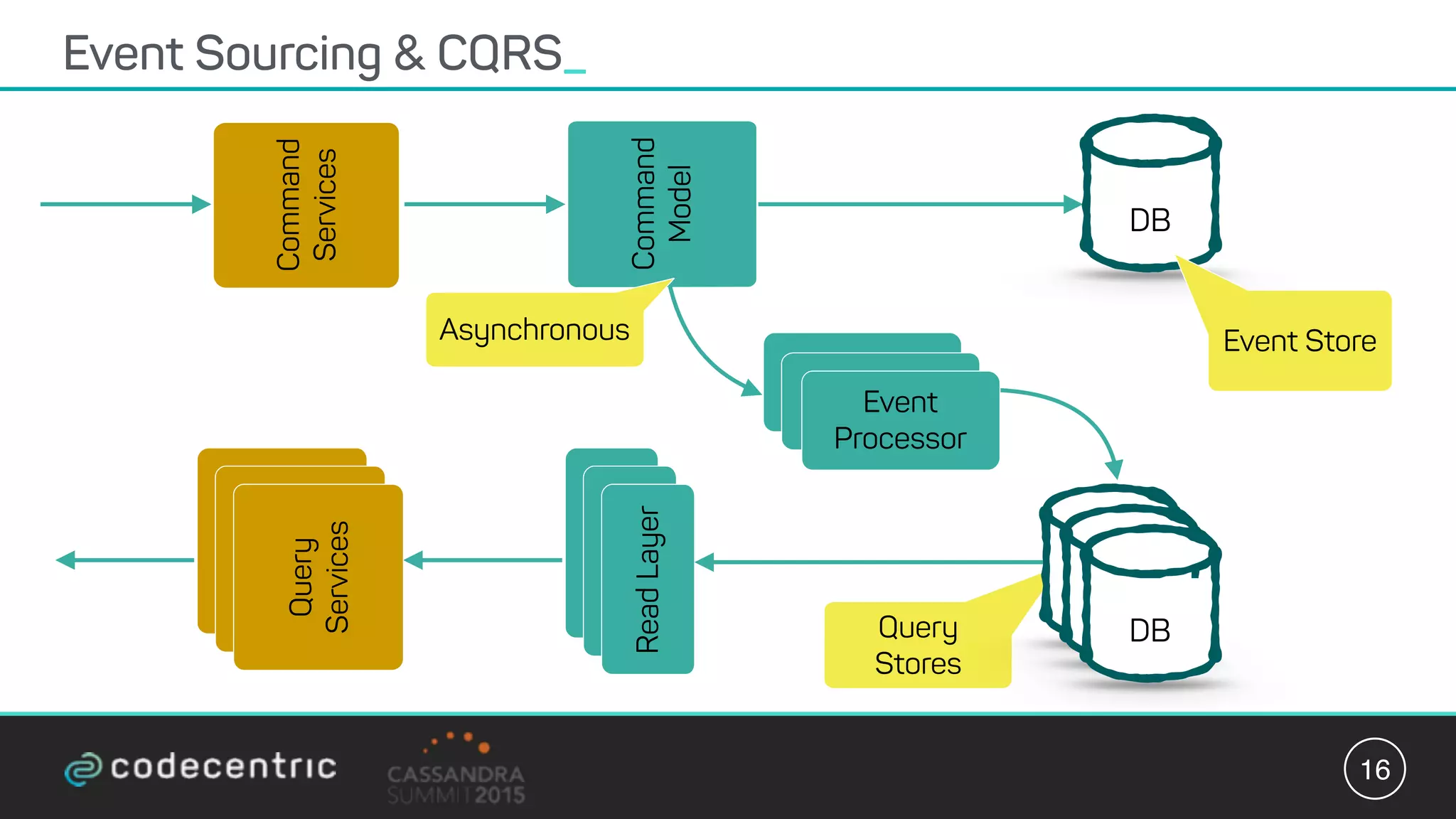

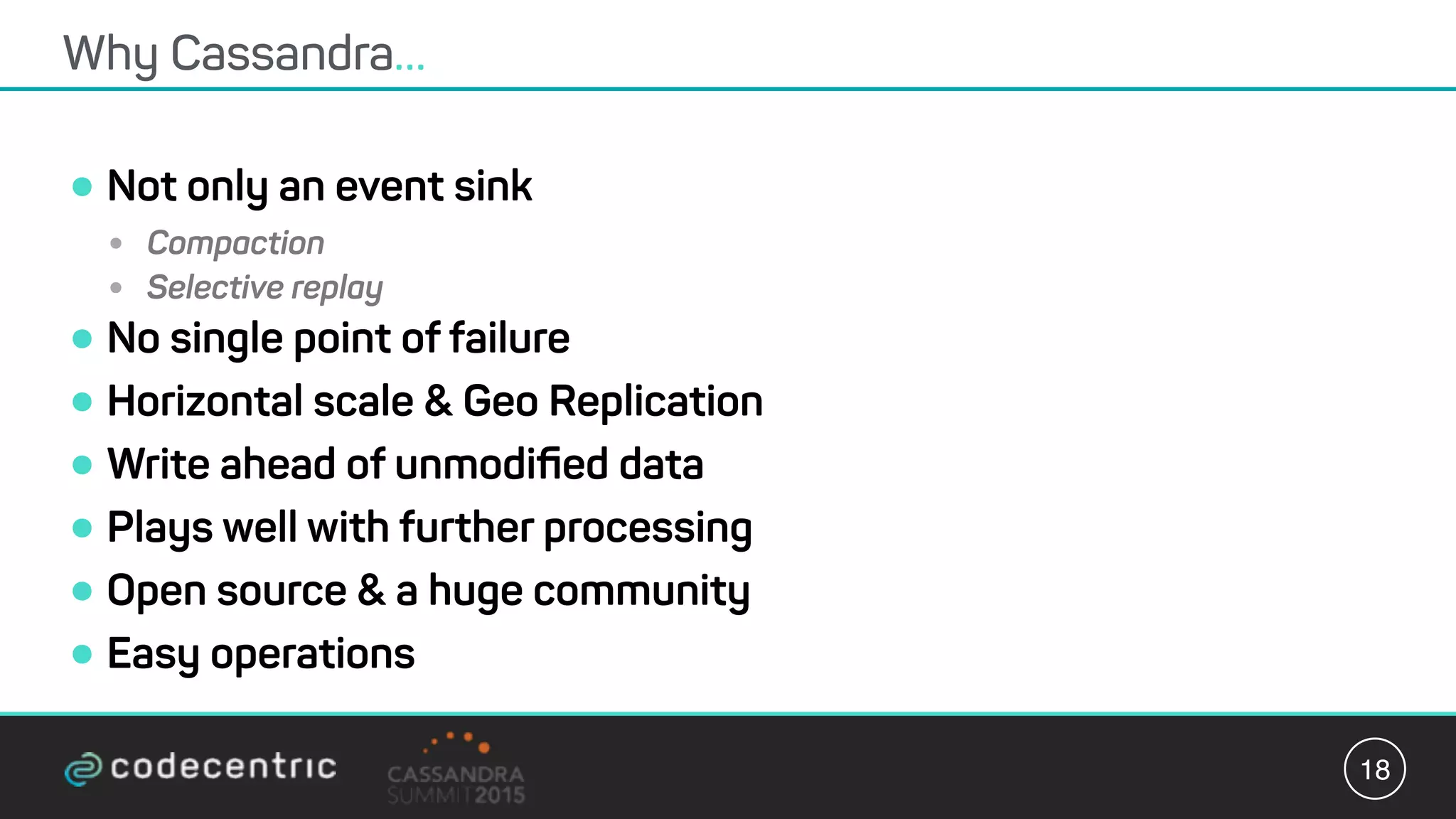

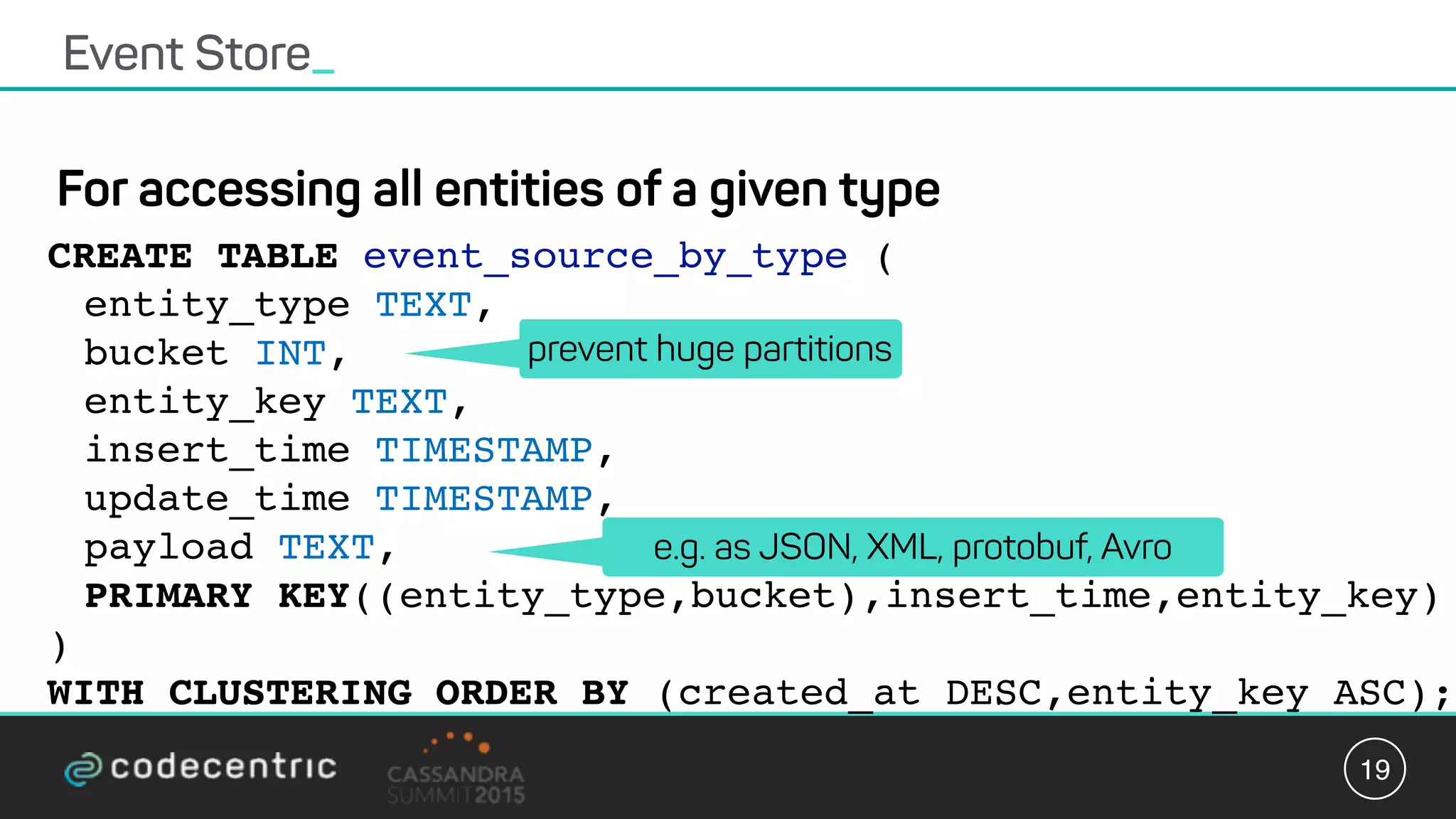

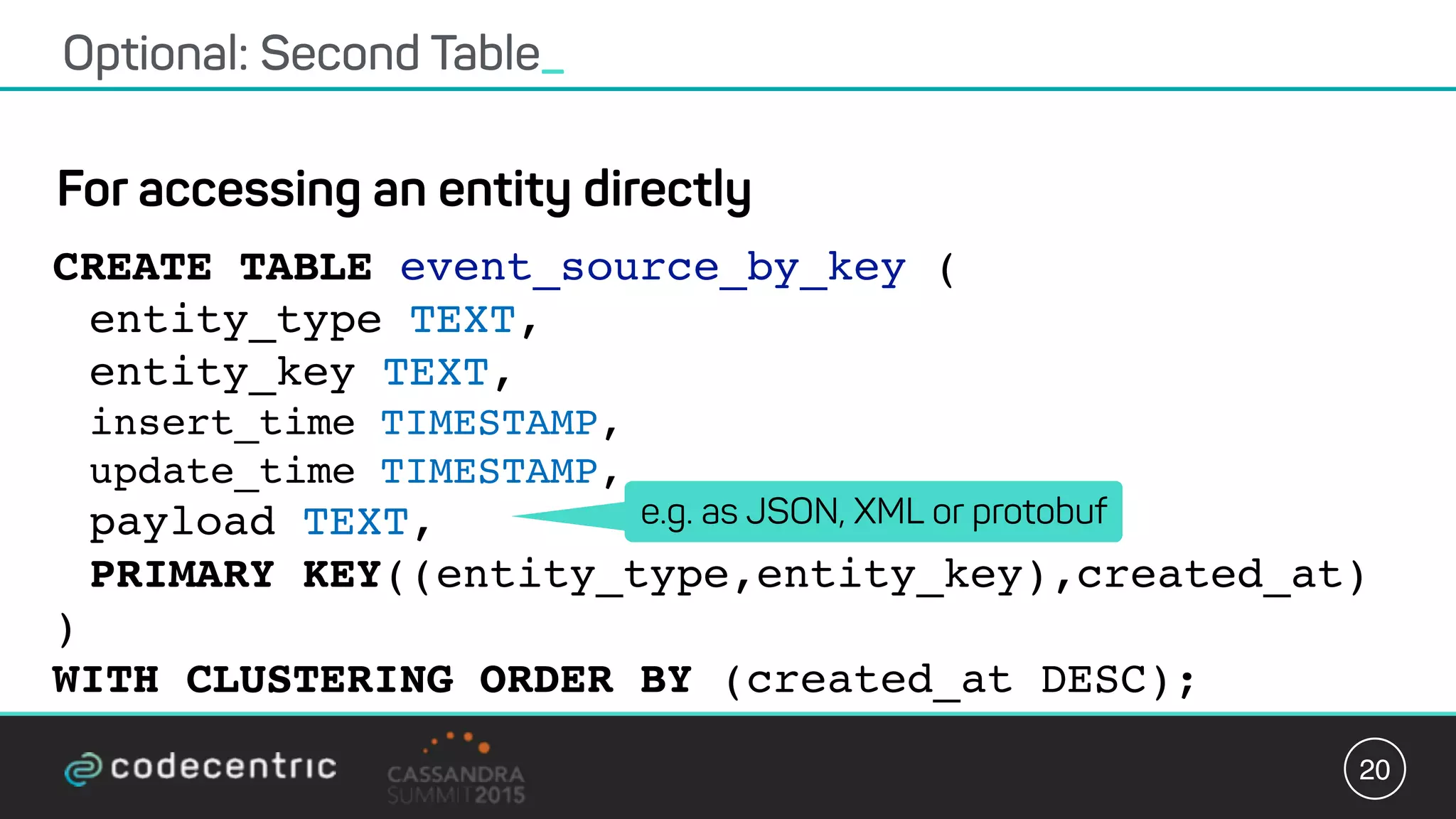

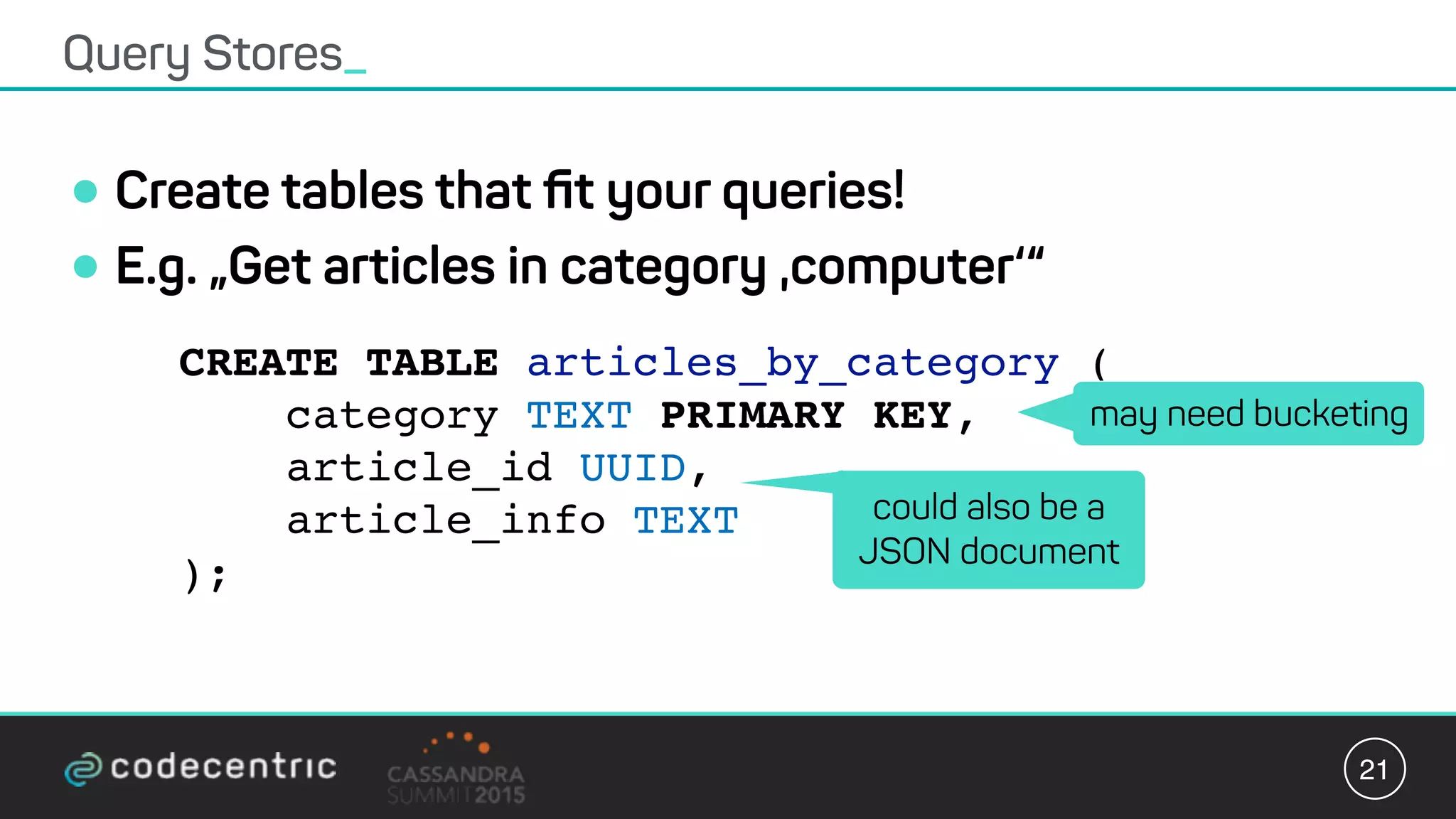

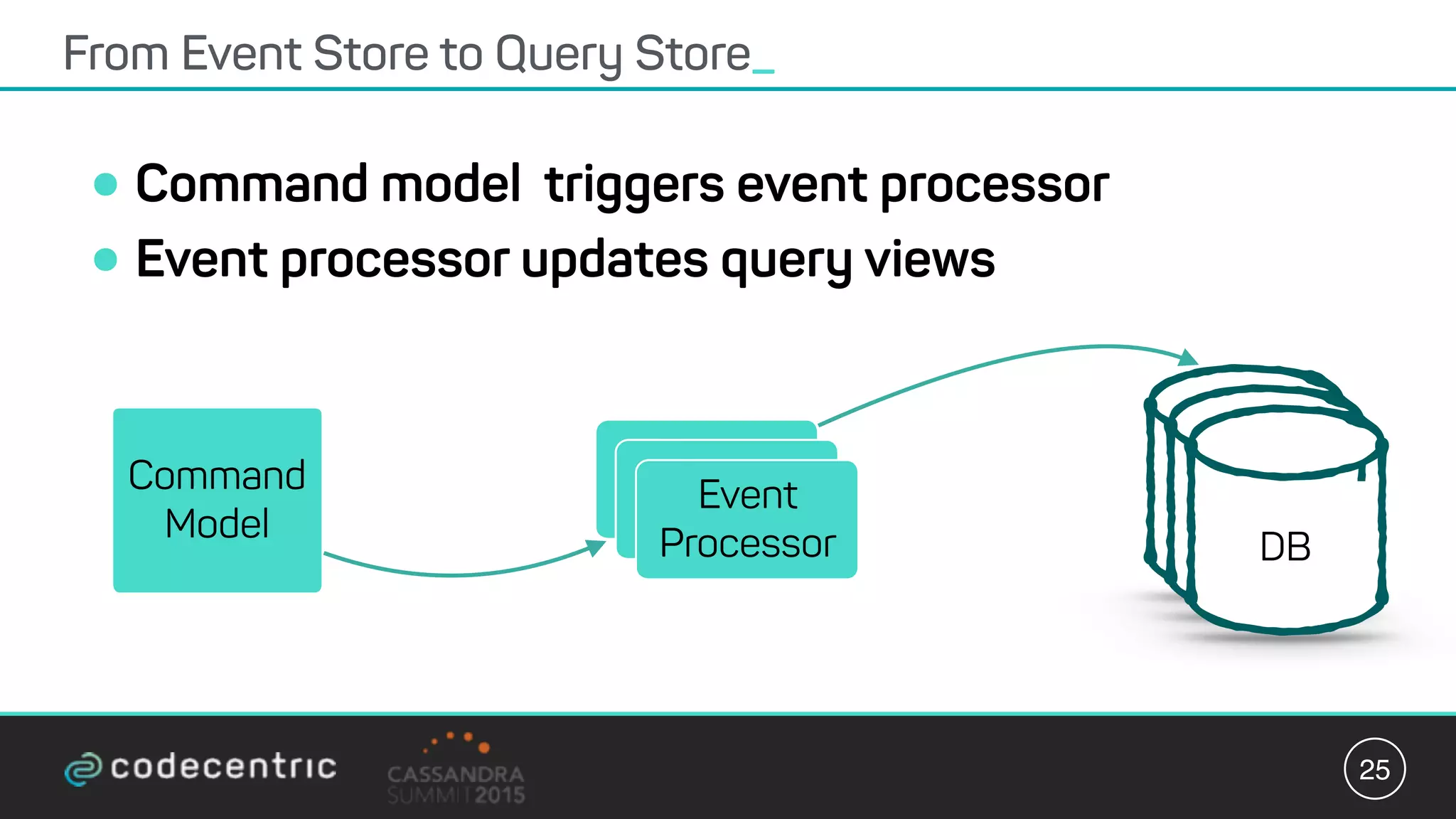

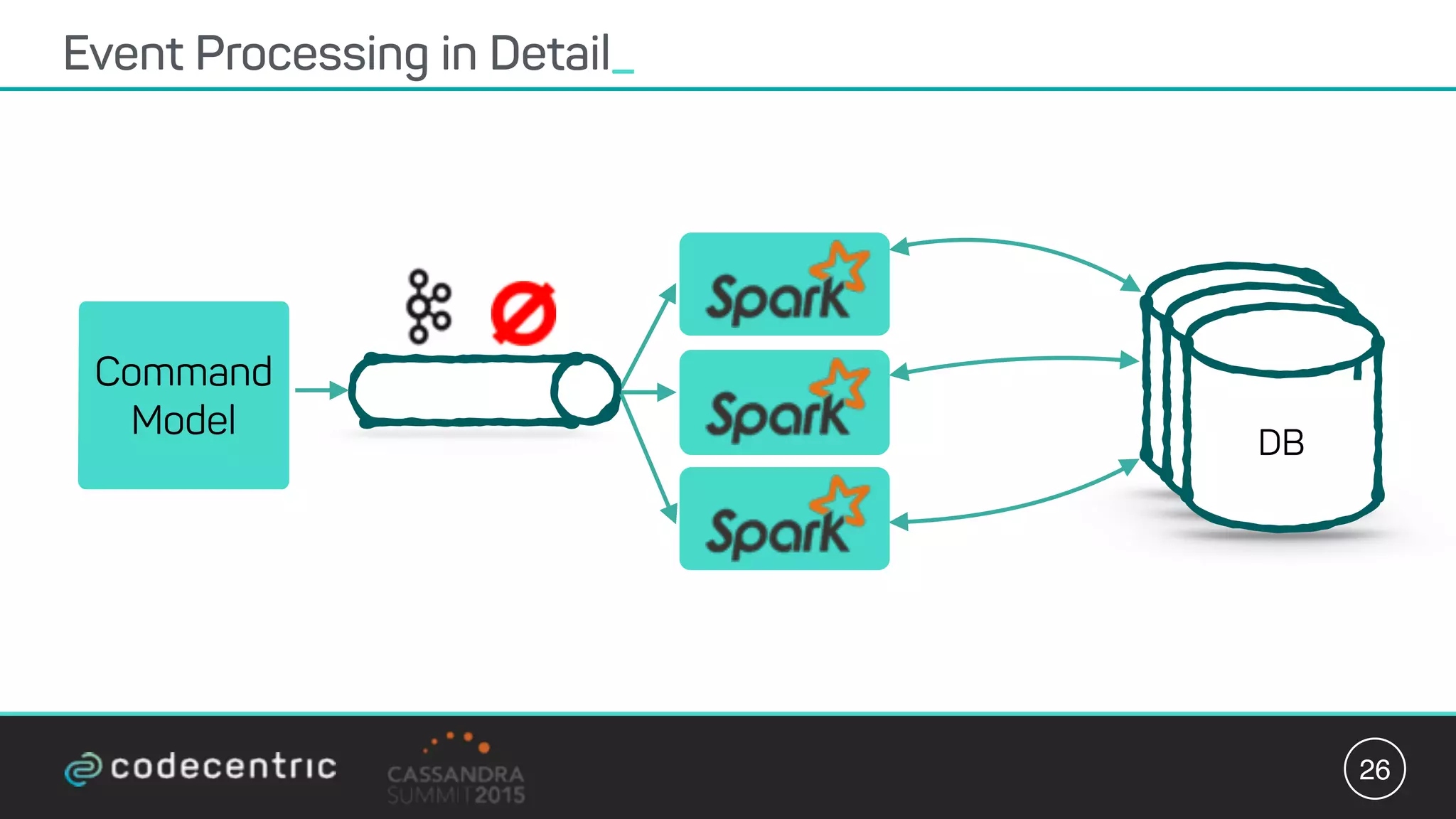

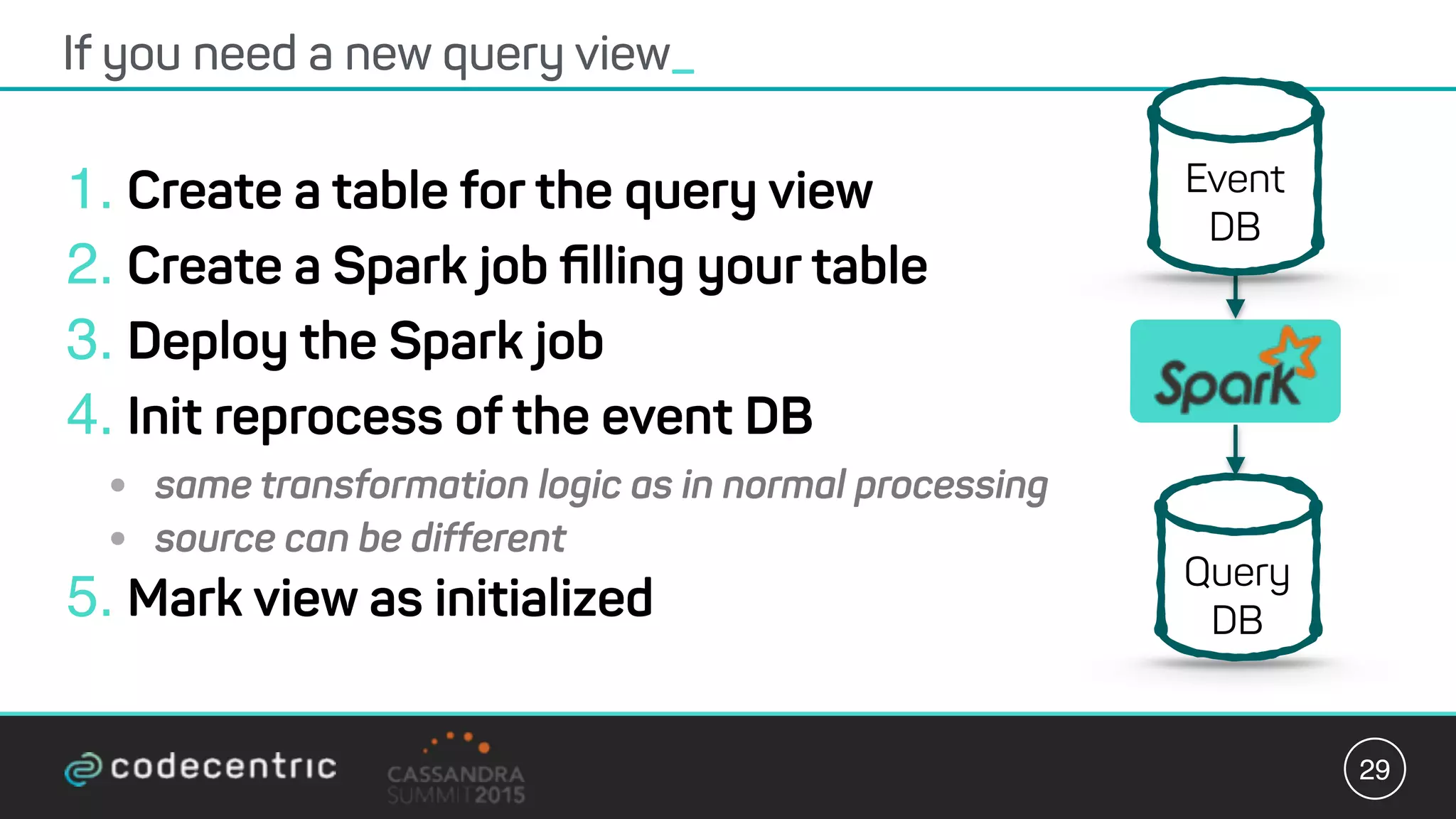

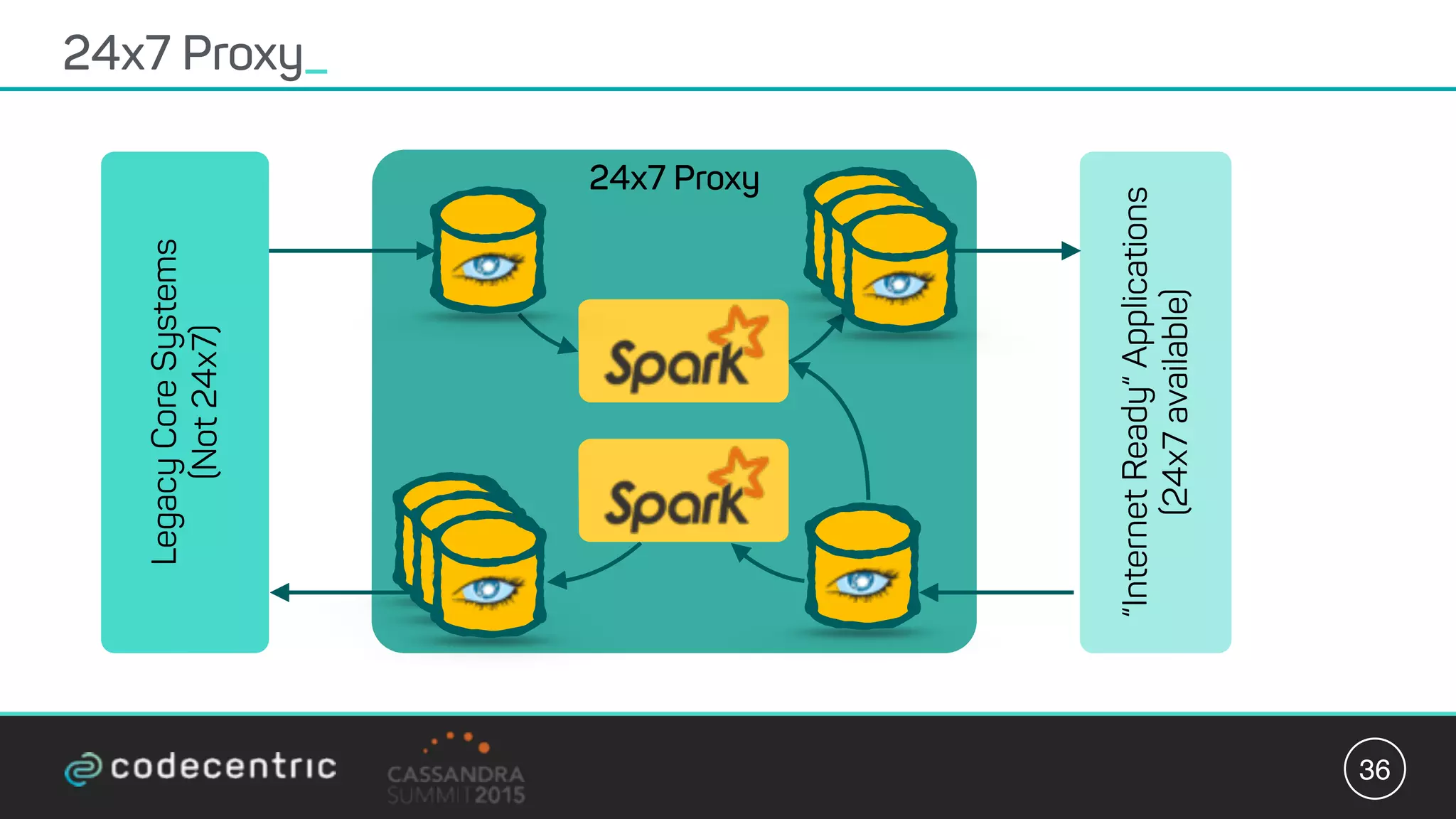

Matthias Niehoff's presentation discusses combining CQRS and event sourcing with Cassandra to build scalable applications that run 24/7. The approach stores the events that lead to a state rather than only the current state, which allows improved querying and processing of the event history with frameworks like Spark. Niehoff outlines both the advantages of this architecture, such as scalability, and its challenges, such as the complexity of handling concurrent writes.
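
To make the idea of storing events instead of state concrete, here is a minimal in-memory sketch in Python. It is not code from the presentation: the `Event`, `InMemoryEventStore`, `ConcurrentWriteError`, and `balance` names, the bank-account example, and the version-check strategy are all assumptions chosen for illustration. A plain dictionary stands in for a Cassandra table.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class Event:
    """An immutable fact about one aggregate (hypothetical schema)."""
    aggregate_id: str
    version: int   # position of the event in its stream, starting at 1
    kind: str      # "deposited" or "withdrawn"
    amount: int


class ConcurrentWriteError(Exception):
    """Raised when a writer's view of the stream is out of date."""


class InMemoryEventStore:
    """Append-only store; a dict stands in for a Cassandra table here."""

    def __init__(self) -> None:
        self._streams: Dict[str, List[Event]] = {}

    def append(self, aggregate_id: str, expected_version: int,
               kind: str, amount: int) -> Event:
        stream = self._streams.setdefault(aggregate_id, [])
        # Optimistic check: reject the write if another writer appended first.
        if len(stream) != expected_version:
            raise ConcurrentWriteError(
                f"expected version {expected_version}, found {len(stream)}"
            )
        event = Event(aggregate_id, expected_version + 1, kind, amount)
        stream.append(event)
        return event

    def load(self, aggregate_id: str) -> List[Event]:
        # Return a copy so callers cannot mutate the stored history.
        return list(self._streams.get(aggregate_id, []))


def balance(events: List[Event]) -> int:
    """Read side: derive the current state by replaying the events."""
    total = 0
    for event in events:
        if event.kind == "deposited":
            total += event.amount
        elif event.kind == "withdrawn":
            total -= event.amount
    return total


store = InMemoryEventStore()
store.append("acct-1", 0, "deposited", 100)
store.append("acct-1", 1, "withdrawn", 30)
print(balance(store.load("acct-1")))  # 70
```

The current balance is never stored; it is recomputed from the event history, which is also what makes the history available for later batch processing. The version check illustrates one possible way to handle concurrent writes: a writer that still assumes version 1 after the second append is rejected instead of silently overwriting. In Cassandra, a comparable guard could be built with a lightweight transaction (`INSERT ... IF NOT EXISTS`), though this summary does not say which strategy the talk recommends.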