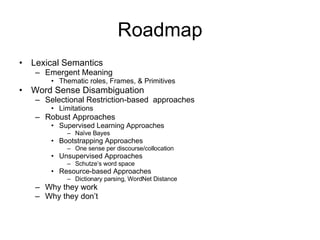

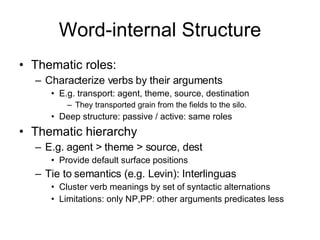

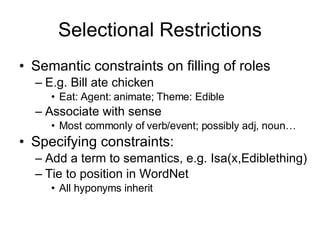

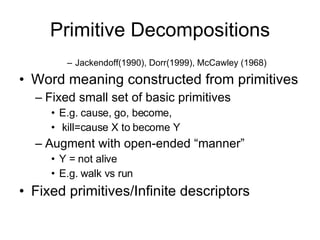

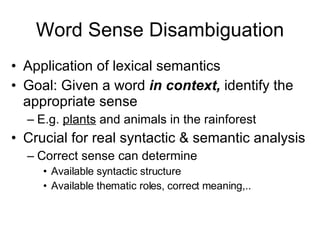

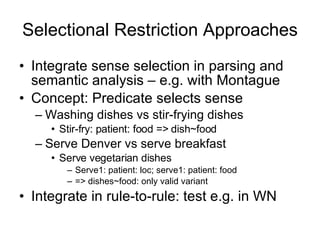

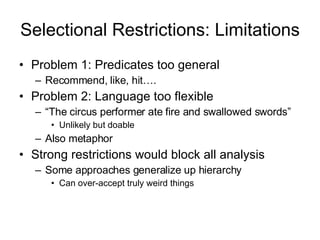

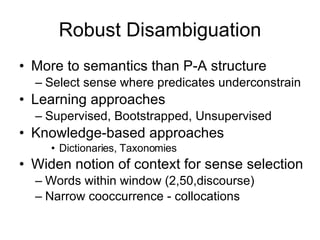

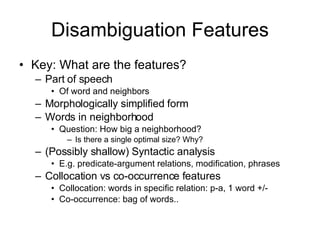

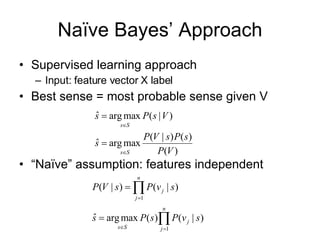

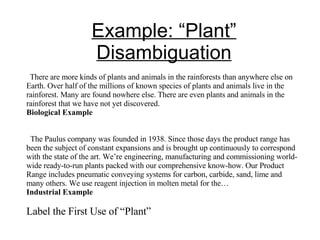

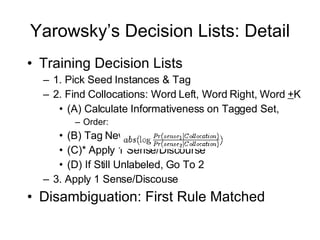

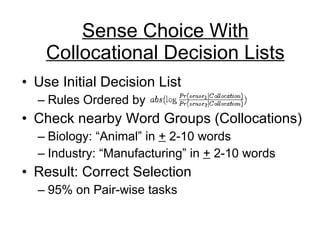

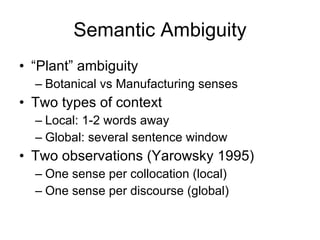

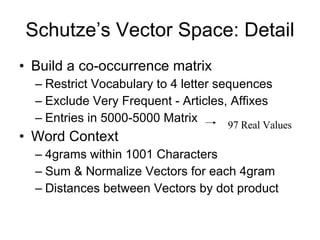

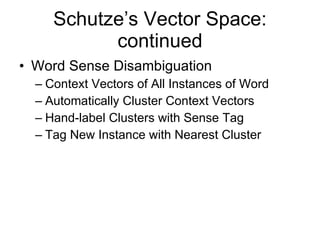

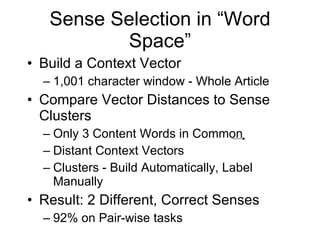

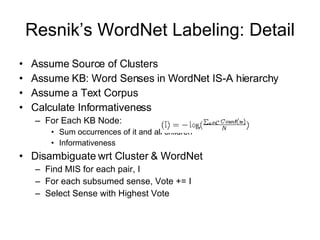

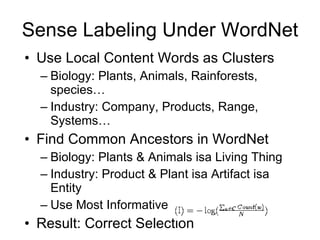

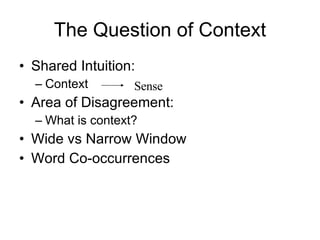

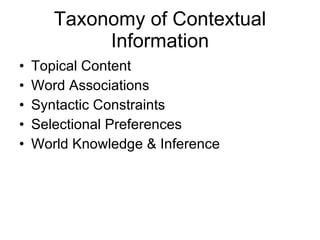

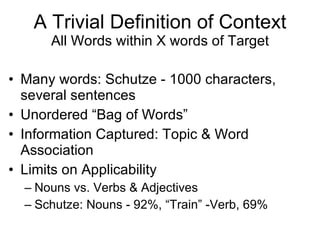

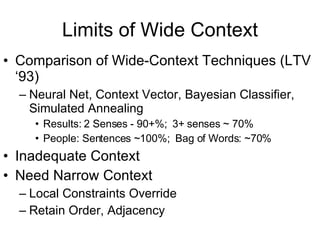

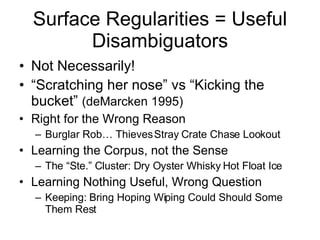

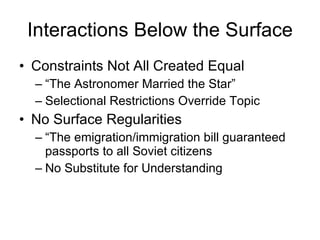

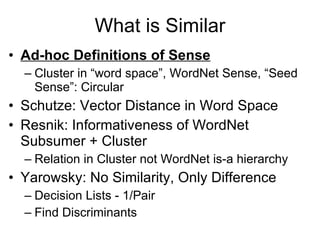

The document surveys approaches to word sense disambiguation (WSD): supervised learning approaches such as Naive Bayes classifiers, bootstrapping approaches that rely on heuristics such as one sense per discourse, and unsupervised approaches such as Schütze's word space model. It also discusses how lexical semantic information, including thematic roles, selectional restrictions, and WordNet, can be used to disambiguate word senses in context.
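
To make the supervised approach concrete, here is a minimal sketch of a Naive Bayes sense classifier over bag-of-words context features. It is not taken from the slides: the function names (`train_naive_bayes`, `disambiguate`), the sense labels, the toy training examples, and the choice of add-one smoothing are all assumptions made for illustration.

```python
import math
from collections import Counter, defaultdict


def train_naive_bayes(examples):
    """Collect counts from (sense, context_words) pairs.

    `examples` is a hypothetical sense-tagged training set for one
    ambiguous target word.
    """
    sense_counts = Counter()            # how often each sense occurs
    word_counts = defaultdict(Counter)  # context word counts per sense
    vocab = set()
    for sense, words in examples:
        sense_counts[sense] += 1
        for w in words:
            word_counts[sense][w] += 1
            vocab.add(w)
    return sense_counts, word_counts, vocab


def disambiguate(context_words, model):
    """Return the sense maximizing log P(sense) + sum of log P(word | sense)."""
    sense_counts, word_counts, vocab = model
    total = sum(sense_counts.values())
    best_sense, best_score = None, float("-inf")
    for sense, count in sense_counts.items():
        score = math.log(count / total)
        # Add-one smoothing (an assumption of this sketch).
        denom = sum(word_counts[sense].values()) + len(vocab)
        for w in context_words:
            if w in vocab:  # ignore words never seen in training
                score += math.log((word_counts[sense][w] + 1) / denom)
        if score > best_score:
            best_sense, best_score = sense, score
    return best_sense


# Toy, made-up training data for the ambiguous word "bank".
TOY_EXAMPLES = [
    ("bank/finance", ["deposit", "money", "loan"]),
    ("bank/finance", ["account", "money", "interest"]),
    ("bank/river", ["river", "water", "shore"]),
    ("bank/river", ["fishing", "river", "mud"]),
]
model = train_naive_bayes(TOY_EXAMPLES)
```

Calling `disambiguate(["money", "loan"], model)` picks the sense whose training contexts share the most vocabulary with the new context. A realistic system would need a sense-tagged corpus and richer features such as collocations and part-of-speech tags.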