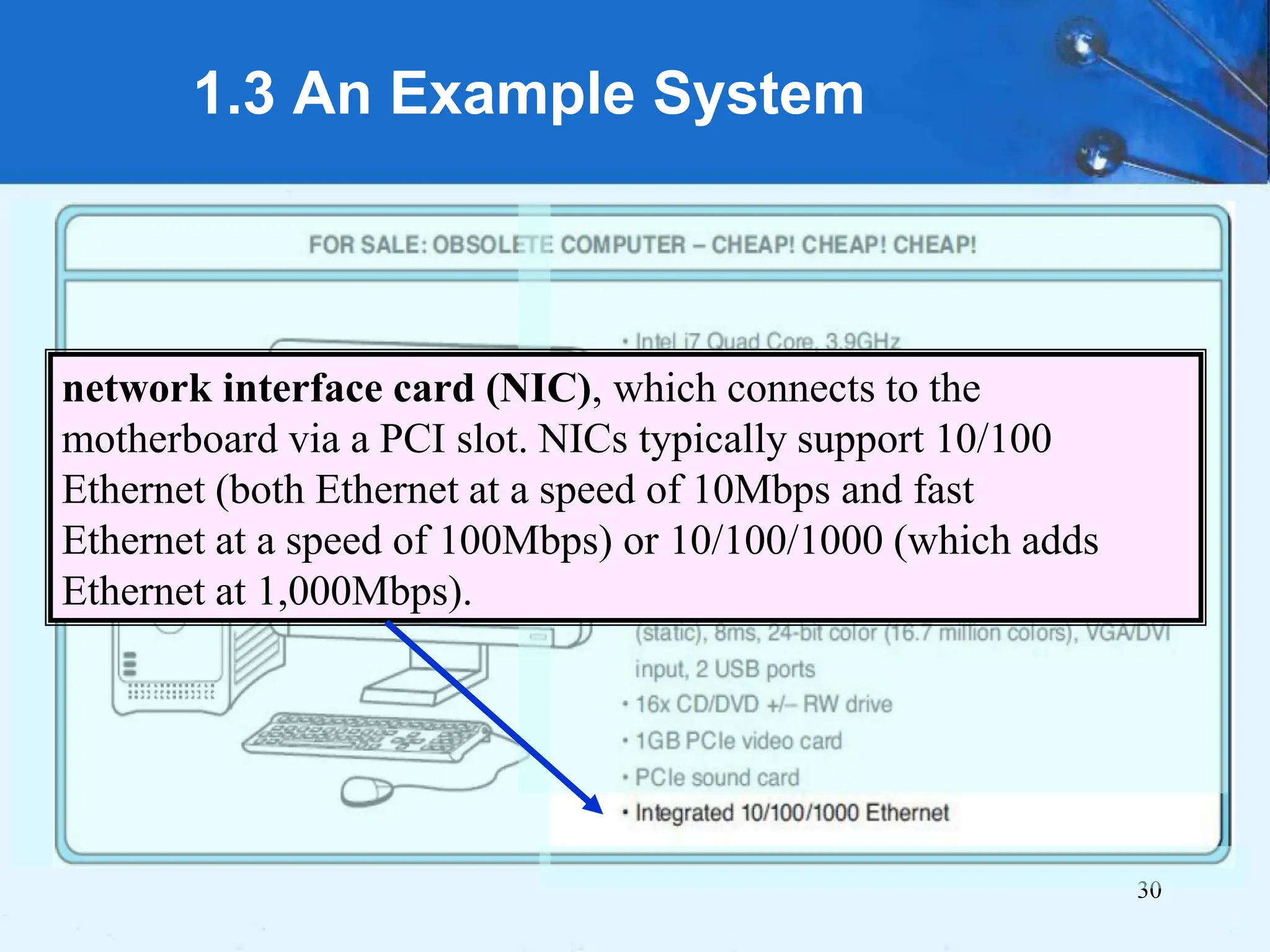

This document covers key concepts in computer organization and architecture. It first distinguishes the two: computer organization concerns the physical components and control signals, while computer architecture concerns the logical, abstract aspects visible to programmers. It then illustrates these ideas with examples of components such as processors, memory, and I/O devices. The document also traces the historical development of computing from mechanical devices to modern machines, describes the hierarchy of abstraction layers in computer systems, and introduces concepts such as cloud computing.