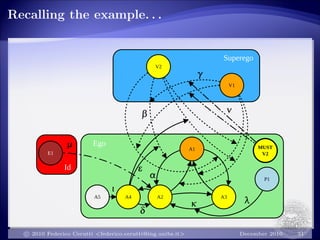

1. The document discusses an argumentation-based framework for decision support that uses structural models of personality.

2. It presents an argumentation framework that represents practical arguments, preferences, emotions, values, facts, and other elements to model decision making.

3. The framework translates decision problems into an Argumentation Framework with Recursive Attacks (AFRA), on which it defines concepts such as defeat and computes preferred extensions to recommend decisions.

![“Good Advice”

The advice should be presented in a form which can be readily understood by decision makers

There should be ready access to both information and reasoning underpinning the advice

If decision support involves details which are unusual to the decision maker, it is of primary importance that s/he can discuss these details with his advisor [Girle et al., 2003]

© 2010 Federico Cerutti <federico.cerutti@ing.unibs.it>, December 2010

Practical Reasoning about “What to do”

Knowledge Representation; Computation of Outcomes](https://image.slidesharecdn.com/ceruttiargaip10-130518091037-phpapp01/85/Cerutti-ARGAIP-2010-2-320.jpg)

![Argument (and Attack) schemes

Argument schemes for knowledge representation [Walton, 1996]

Structure which contains the information supporting a given conclusion

Modelling conflicts by “attack scheme”

Structure which contains the information supporting a given conflict

](https://image.slidesharecdn.com/ceruttiargaip10-130518091037-phpapp01/85/Cerutti-ARGAIP-2010-4-320.jpg)

![The main concepts

Circumstance: a state of the world

Fact: a particular circumstance assumed to be true

Goal: a state of the world we want to achieve

Action: support for the achievement of a goal

Emotion: “a strong feeling deriving from one’s circumstances, [or] mood [...]”

Preference: “[...] a greater liking for one alternative over another or others [...]”

Value: “Worth or worthiness [...] in respect of rank or personal qualities”

Must Value: a value that we are committed to promote

](https://image.slidesharecdn.com/ceruttiargaip10-130518091037-phpapp01/85/Cerutti-ARGAIP-2010-5-320.jpg)

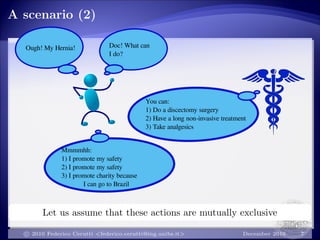

![A scenario (2): practical arguments

A2: circumstance: in my situation,

action: discectomy surgery,

goal: reducing disc herniation,

value: safety,

sign: +.

[Greenwood et al., 2003]

](https://image.slidesharecdn.com/ceruttiargaip10-130518091037-phpapp01/85/Cerutti-ARGAIP-2010-8-320.jpg)

![A scenario (2): practical arguments

α [PAtS1]: source: A1,

target: A2,

conditions: A1.action and A2.action are incompatible.

](https://image.slidesharecdn.com/ceruttiargaip10-130518091037-phpapp01/85/Cerutti-ARGAIP-2010-9-320.jpg)
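The practical argument A2 and the attack scheme PAtS1 from the two slides above can be sketched as plain data structures. This is a minimal sketch, not the author's implementation: the class names, the `pats1` helper, and the explicit set of incompatible action pairs are assumptions made for illustration, and every field of A1 is a placeholder because the slides show A1 only as an image.

```python
from dataclasses import dataclass
from typing import FrozenSet, Optional, Set


@dataclass(frozen=True)
class PracticalArgument:
    name: str
    circumstance: str
    action: str
    goal: str
    value: str
    sign: str  # "+" or "-", as written on the slides


@dataclass(frozen=True)
class Attack:
    scheme: str
    source: PracticalArgument
    target: PracticalArgument


def pats1(source: PracticalArgument,
          target: PracticalArgument,
          incompatible: Set[FrozenSet[str]]) -> Optional[Attack]:
    """Instantiate PAtS1 when the two actions are declared incompatible.

    `incompatible` is an assumed representation: a set of unordered
    pairs of action names that cannot be performed together.
    """
    if frozenset({source.action, target.action}) in incompatible:
        return Attack("PAtS1", source, target)
    return None


# A2 exactly as given on the slide.
a2 = PracticalArgument("A2", "in my situation", "discectomy surgery",
                       "reducing disc herniation", "safety", "+")

# A1 is hypothetical: these field values are placeholders, not slide content.
a1 = PracticalArgument("A1", "in my situation", "hypothetical alternative action",
                       "hypothetical goal", "hypothetical value", "+")

incompatible = {frozenset({a1.action, a2.action})}
alpha = pats1(a1, a2, incompatible)
print(alpha.scheme, alpha.source.name, "->", alpha.target.name)
```

Storing incompatibility as unordered pairs means the check does not depend on which argument is taken as source; the direction of the attack comes only from the argument order passed to `pats1`.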

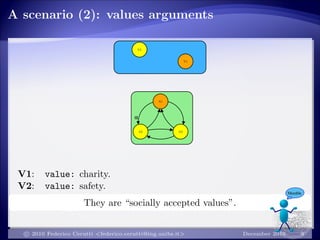

![A scenario (2): values defence (i)

β [VDes1]: source: V2,

target: α,

conditions: α.target.value = V2.value and α.source.value = A2.value.

Each value is committed to protect each practical argument that promotes it! And...

](https://image.slidesharecdn.com/ceruttiargaip10-130518091037-phpapp01/85/Cerutti-ARGAIP-2010-11-320.jpg)

![A scenario (2): values defence (ii)

γ [VDes2]: source: V1,

target: β,

conditions: β.source = V1 and β.target.source.value = V1.value.

Each value is committed to protect each attack grounded on each practical argument that promotes it!

](https://image.slidesharecdn.com/ceruttiargaip10-130518091037-phpapp01/85/Cerutti-ARGAIP-2010-12-320.jpg)

![A scenario (2): values defence (ii)

β [VDefence]: defending: A2,

defended: V2,

conditions: defending.value = defended.value and defended.sign = +.

](https://image.slidesharecdn.com/ceruttiargaip10-130518091037-phpapp01/85/Cerutti-ARGAIP-2010-13-320.jpg)

![A scenario (2): values defence (ii)

γ [VDefence]: defending: A1,

defended: V1,

conditions: defending.value = defended.value and defended.sign = +.

](https://image.slidesharecdn.com/ceruttiargaip10-130518091037-phpapp01/85/Cerutti-ARGAIP-2010-14-320.jpg)

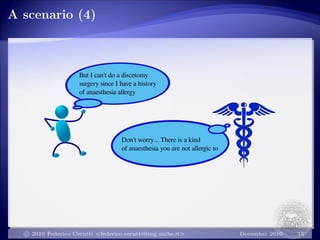

![A scenario (3): contradictory pract. arg. (i)

δ [PAtS2]: source: A4,

target: A2,

conditions: A4.action = ¬ A2.action.

](https://image.slidesharecdn.com/ceruttiargaip10-130518091037-phpapp01/85/Cerutti-ARGAIP-2010-17-320.jpg)

![A scenario (3): contradictory pract. arg. (ii)

[VAAtS]: source: A4,

target: β,

conditions: A4.action = ¬ β.defended.action and A4.value = β.defended.value and A4.sign = − and β.defended.sign = +.

](https://image.slidesharecdn.com/ceruttiargaip10-130518091037-phpapp01/85/Cerutti-ARGAIP-2010-18-320.jpg)

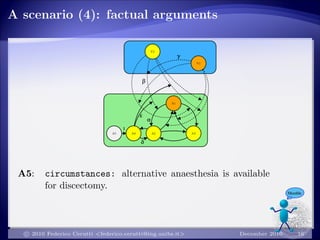

![A scenario (4): factual arguments

ι [FAtS1]: source: A5,

target: A4,

conditions: A5.circumstances = ¬ A4.circumstances.

](https://image.slidesharecdn.com/ceruttiargaip10-130518091037-phpapp01/85/Cerutti-ARGAIP-2010-21-320.jpg)

![A scenario (5): preferences. . .

λ [PRAtS]: source: P1,

target: κ,

conditions: P1.preferred = κ.target and P1.notpreferred = κ.source.

](https://image.slidesharecdn.com/ceruttiargaip10-130518091037-phpapp01/85/Cerutti-ARGAIP-2010-24-320.jpg)
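The PRAtS conditions above amount to a simple check: a preference attacks an attack whose target is the preferred alternative and whose source is the non-preferred one. The sketch below is an illustration under stated assumptions, not the author's code: the class names and the `prats` helper are invented here, and the endpoints of κ (`"X"` and `"Y"`) are hypothetical placeholders, since the slide defining κ is available only as an image.

```python
from dataclasses import dataclass
from typing import Optional, Union


@dataclass(frozen=True)
class Attack:
    label: str
    source: str
    target: Union[str, "Attack"]  # an attack may target an argument or another attack


@dataclass(frozen=True)
class Preference:
    name: str
    preferred: str
    notpreferred: str


def prats(p: Preference, kappa: Attack, label: str) -> Optional[Attack]:
    """Return the PRAtS attack from p to kappa if the slide's conditions hold."""
    if p.preferred == kappa.target and p.notpreferred == kappa.source:
        return Attack(label, p.name, kappa)
    return None


# Hypothetical κ: "X" attacks "Y" (placeholder names, not from the slides).
kappa = Attack("κ", "X", "Y")
# P1 prefers "Y" over "X", so it attacks κ.
p1 = Preference("P1", preferred="Y", notpreferred="X")
lam = prats(p1, kappa, "λ")
print(lam.label, lam.source, "->", lam.target.label)
```

Because the target of λ is itself an attack, this scheme is one of the places where the recursive-attack formalism is needed.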

![A scenario (5): . . . and emotions

µ [EAtS1]: source: E1,

target: A2,

conditions: e(E1,A2) = −.

with e(E1, A2) = −

](https://image.slidesharecdn.com/ceruttiargaip10-130518091037-phpapp01/85/Cerutti-ARGAIP-2010-26-320.jpg)

![A scenario (6): Must Value

ν [MAtS2]: source: MUST V2,

target: γ,

conditions: MUST V2.value = γ.target.source.value and MUST V2.value = γ.source.value.

](https://image.slidesharecdn.com/ceruttiargaip10-130518091037-phpapp01/85/Cerutti-ARGAIP-2010-29-320.jpg)

![Argumentation Framework with Recursive Attacks (AFRA)

An Argumentation Framework with Recursive Attacks (AFRA) is a pair $\langle \mathcal{A}, \mathcal{R} \rangle$ where $\mathcal{A}$ is a set of arguments and $\mathcal{R}$ is a set of attacks, namely pairs $(A, X)$ s.t. $A \in \mathcal{A}$ and ($X \in \mathcal{R}$ or $X \in \mathcal{A}$).

Given an attack $\alpha = (A, X) \in \mathcal{R}$, we will say that $A$ is the source of $\alpha$, denoted as $\mathrm{src}(\alpha) = A$, and $X$ is the target of $\alpha$, denoted as $\mathrm{trg}(\alpha) = X$. [Baroni et al., 2009b]

](https://image.slidesharecdn.com/ceruttiargaip10-130518091037-phpapp01/85/Cerutti-ARGAIP-2010-36-320.jpg)
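The AFRA definition above can be checked mechanically: every attack must start from an argument and end at either an argument or another attack in the same framework. The following is a minimal sketch under stated assumptions, not the implementation from [Baroni et al., 2009b]: arguments are represented as strings, and the names `Attack`, `src`, `trg` and `is_afra` are chosen here for illustration. The example reuses the labels α, β and γ from the value-defence slides (α from A1 to A2, β from V2 to α, γ from V1 to β).

```python
from dataclasses import dataclass
from typing import Set, Union


@dataclass(frozen=True)
class Attack:
    source: str                    # must be an argument
    target: Union[str, "Attack"]   # an argument or another attack


def src(attack: Attack) -> str:
    return attack.source


def trg(attack: Attack) -> Union[str, Attack]:
    return attack.target


def is_afra(arguments: Set[str], attacks: Set[Attack]) -> bool:
    """Check that (arguments, attacks) satisfies the AFRA definition."""
    for attack in attacks:
        if src(attack) not in arguments:
            return False
        target = trg(attack)
        if isinstance(target, Attack):
            if target not in attacks:
                return False
        elif target not in arguments:
            return False
    return True


arguments = {"A1", "A2", "V1", "V2"}
alpha = Attack("A1", "A2")   # attack on an argument
beta = Attack("V2", alpha)   # attack on an attack
gamma = Attack("V1", beta)   # attack on an attack on an attack
attacks = {alpha, beta, gamma}

print(is_afra(arguments, attacks))
print(src(gamma), trg(gamma) is beta)
```

Making `Attack` a frozen dataclass keeps attacks hashable, so they can be stored in sets and nested as targets of other attacks to any depth.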

![Conclusions

Extension of a previous proposal [Baroni et al., 2009a]

Human emotions in the decision-making process

Main contribution: a preliminary description of a mapping between the argumentation-based decision process and Freud’s three-entity model of personality

](https://image.slidesharecdn.com/ceruttiargaip10-130518091037-phpapp01/85/Cerutti-ARGAIP-2010-44-320.jpg)

![References

[Baroni et al., 2009a] Baroni, P., Cerutti, F., Giacomin, M., and Guida, G. (2009a). An argumentation-based approach to modeling decision support contexts with what-if capabilities. In AAAI Fall Symposium. Technical Report SS-09-06, pages 2–7. AAAI Press.

[Baroni et al., 2009b] Baroni, P., Cerutti, F., Giacomin, M., and Guida, G. (2009b). Encompassing attacks to attacks in abstract argumentation frameworks. In Proc. of ECSQARU 2009, pages 83–94, Verona, I.

[Girle et al., 2003] Girle, R., Hitchcock, D. L., McBurney, P., and Verheij, B. (2003). Decision support for practical reasoning: A theoretical and computational perspective. In Reed, C. and Norman, T. J., editors, Argumentation Machines. New Frontiers in Argument and Computation, pages 55–84. Kluwer.](https://image.slidesharecdn.com/ceruttiargaip10-130518091037-phpapp01/85/Cerutti-ARGAIP-2010-47-320.jpg)