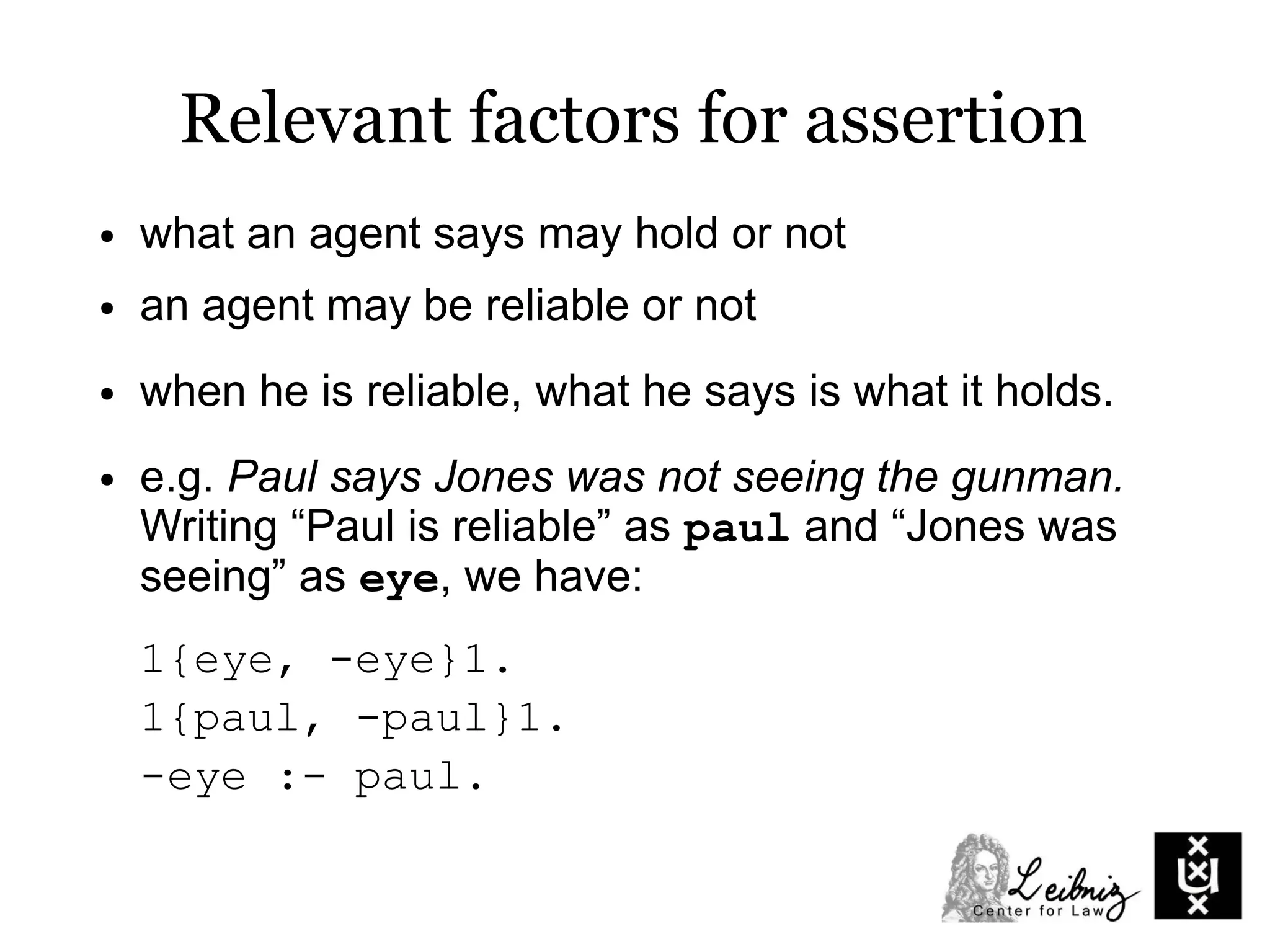

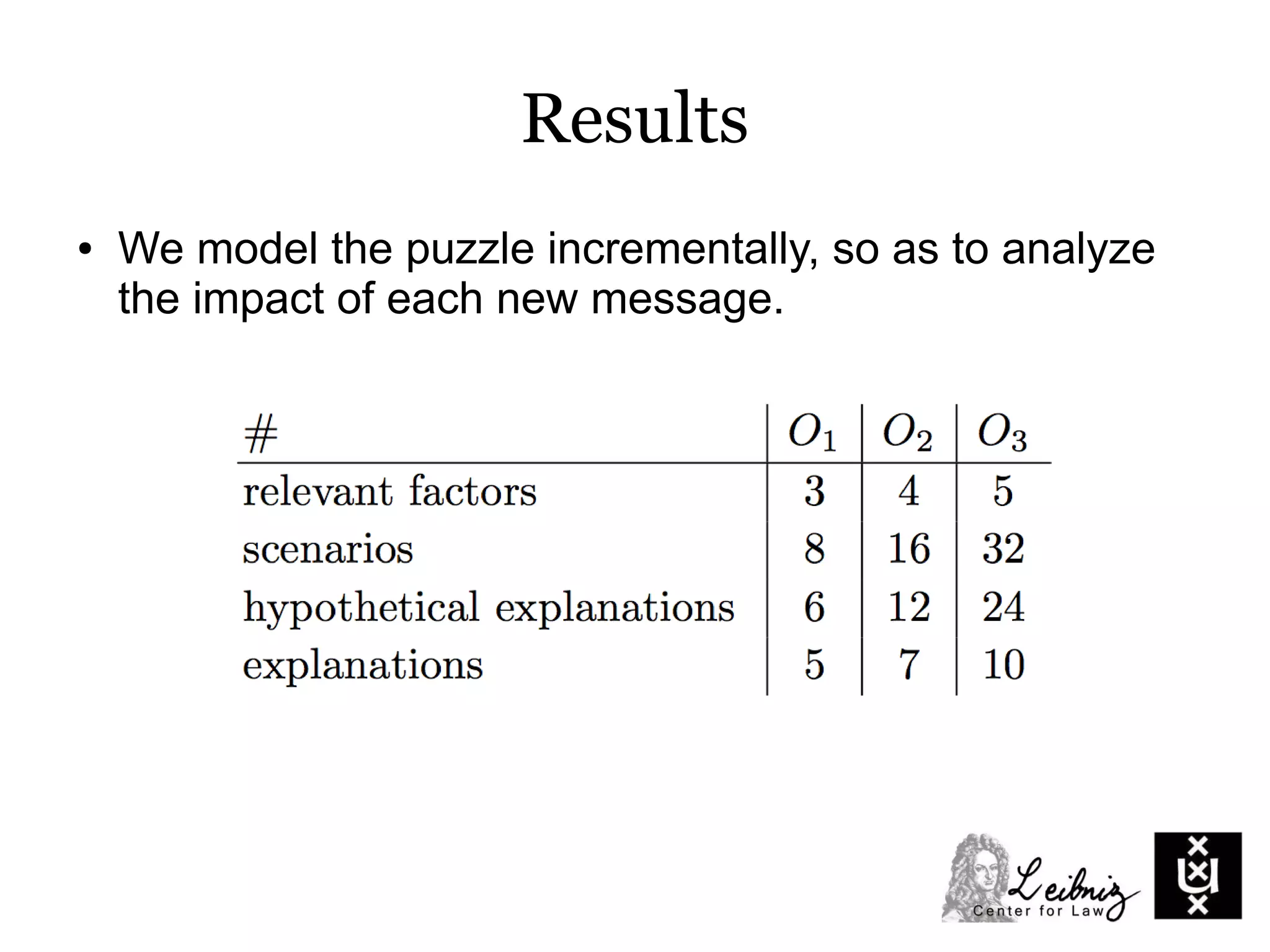

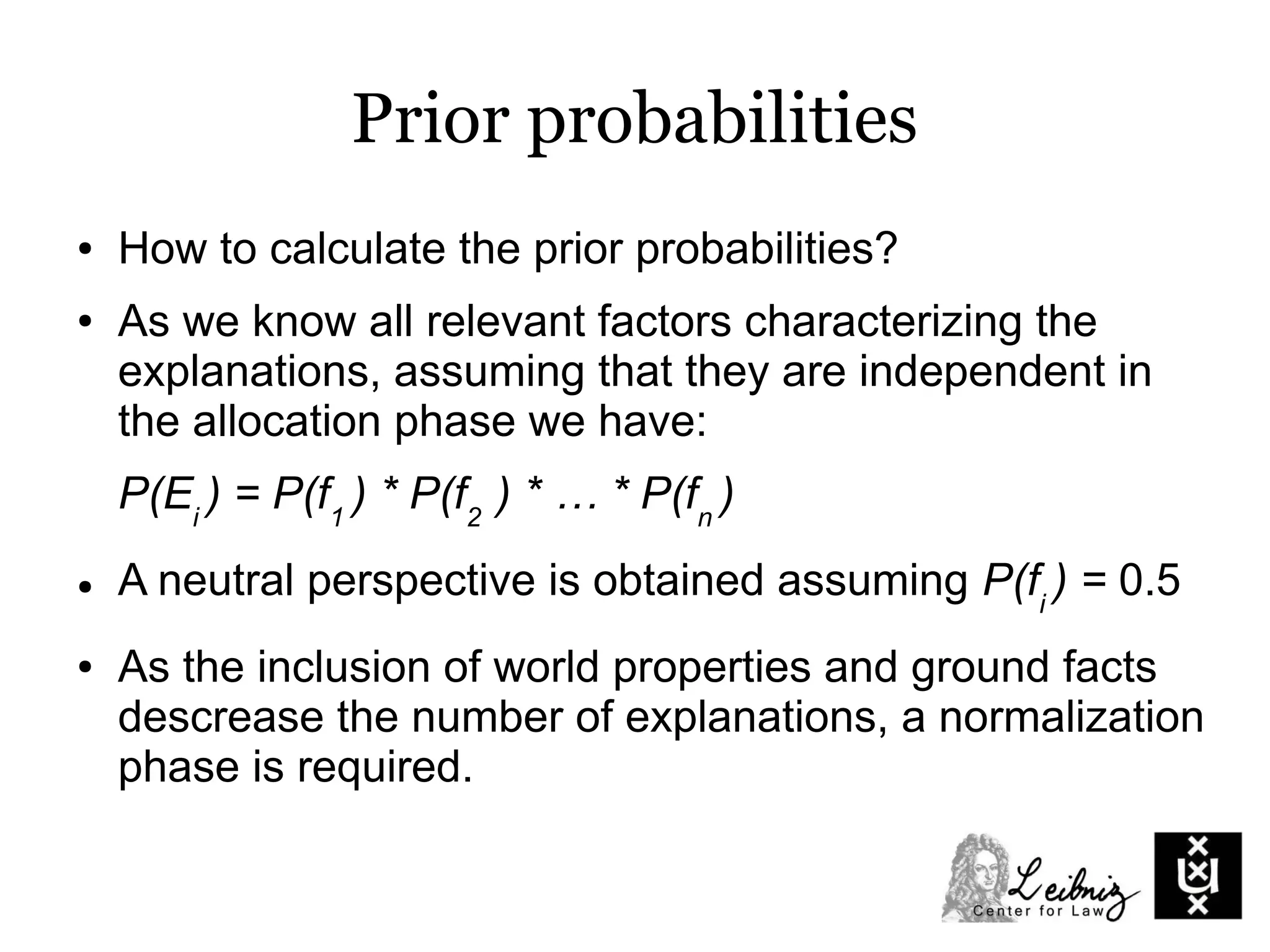

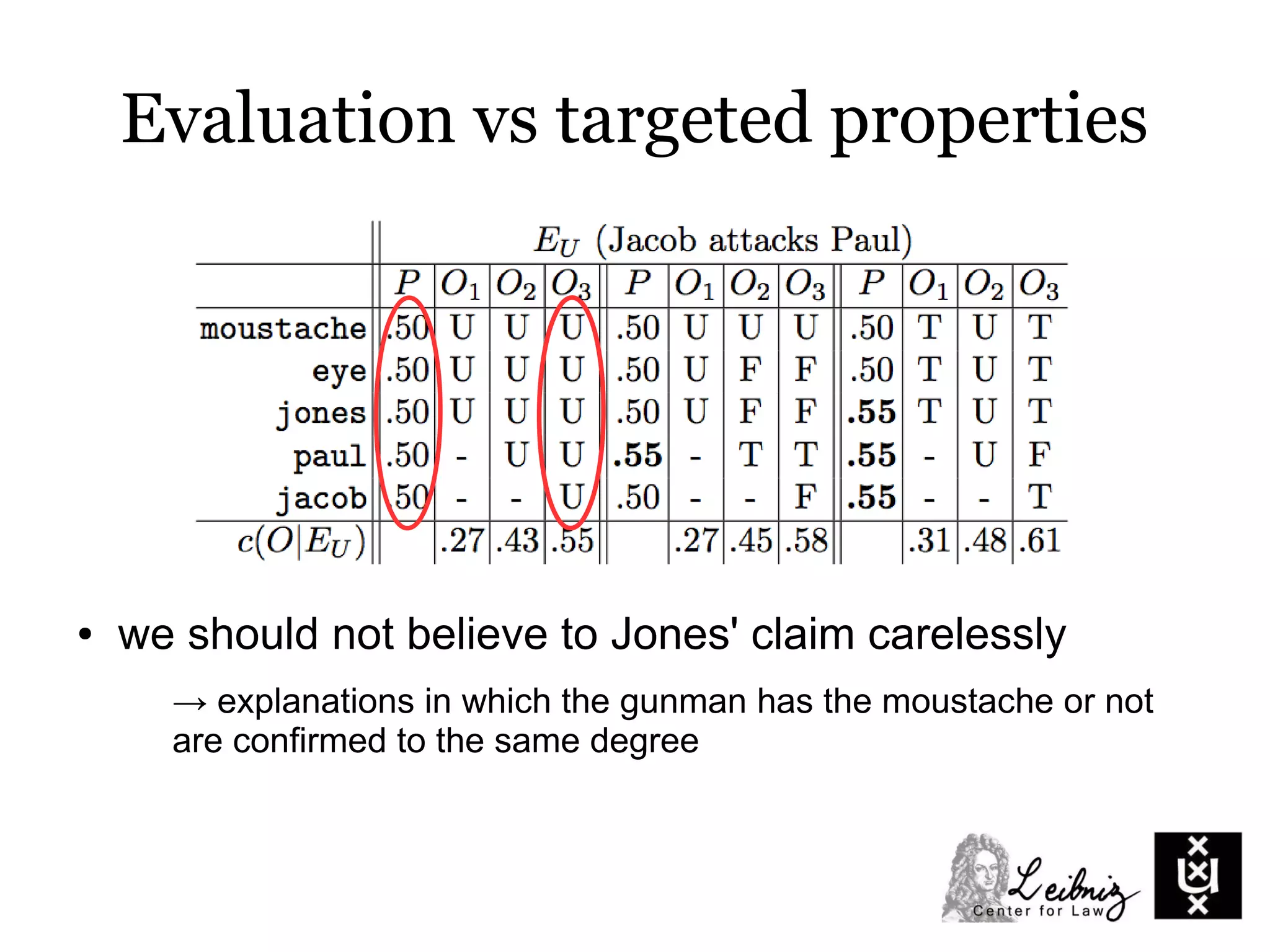

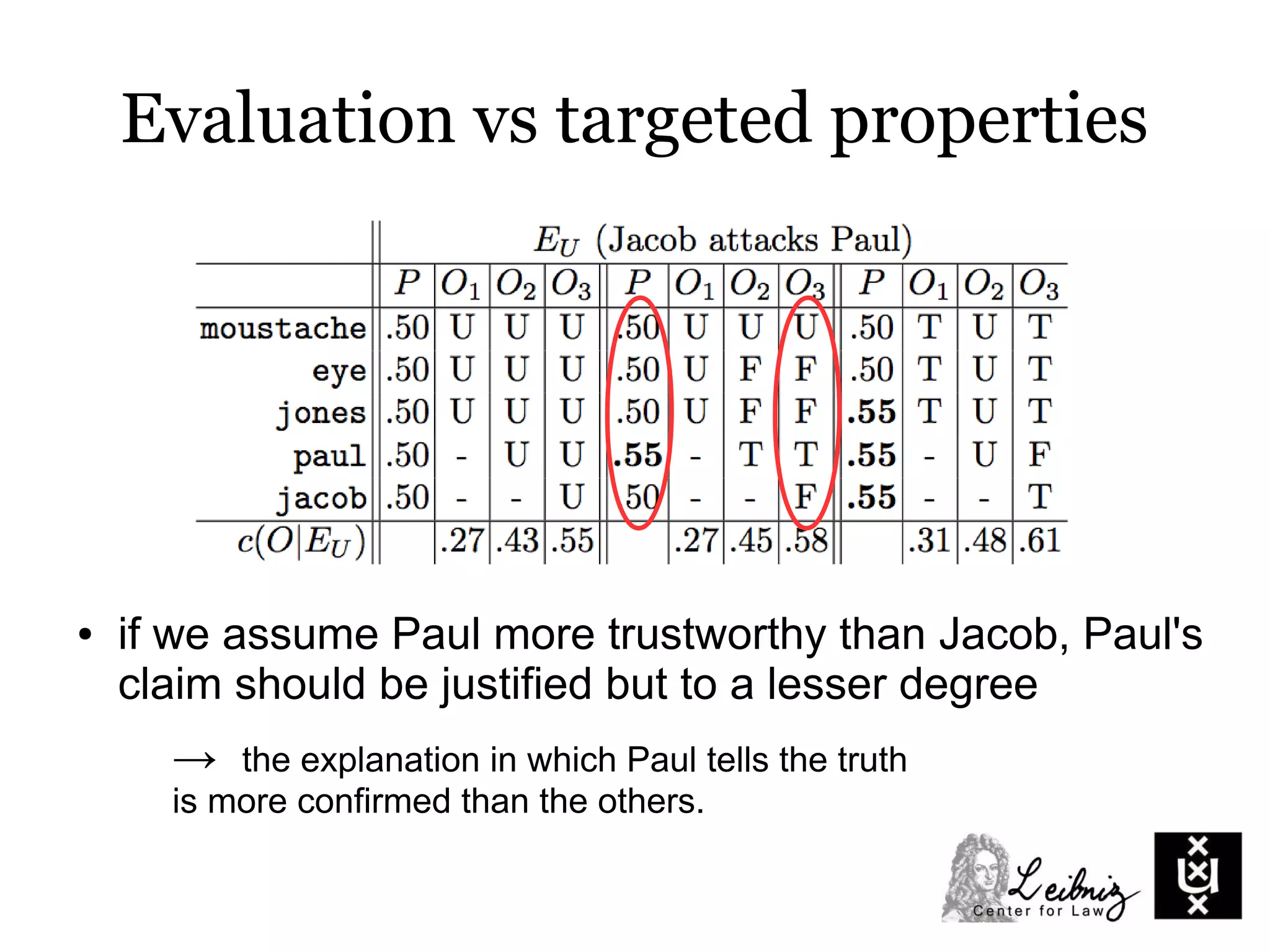

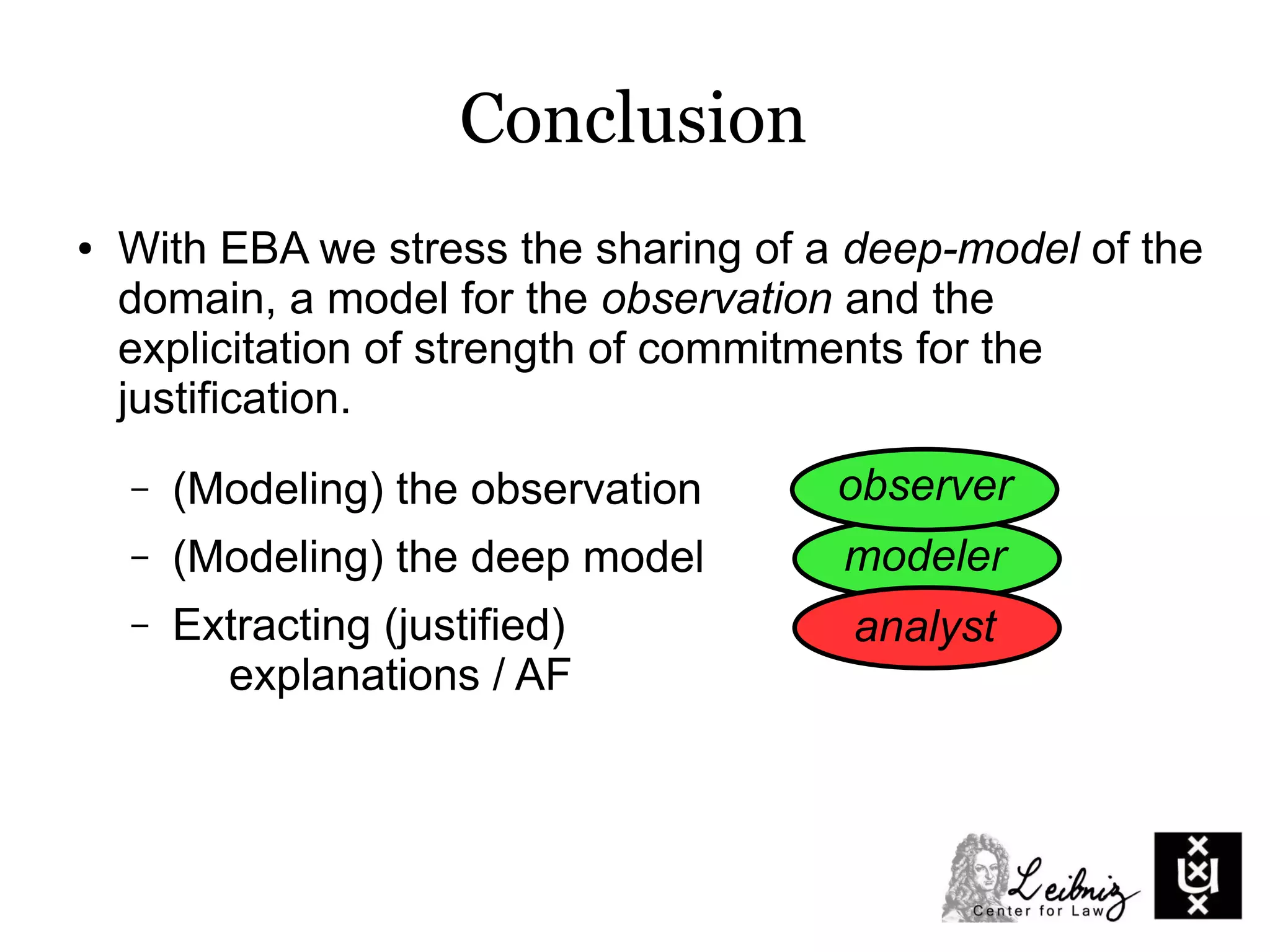

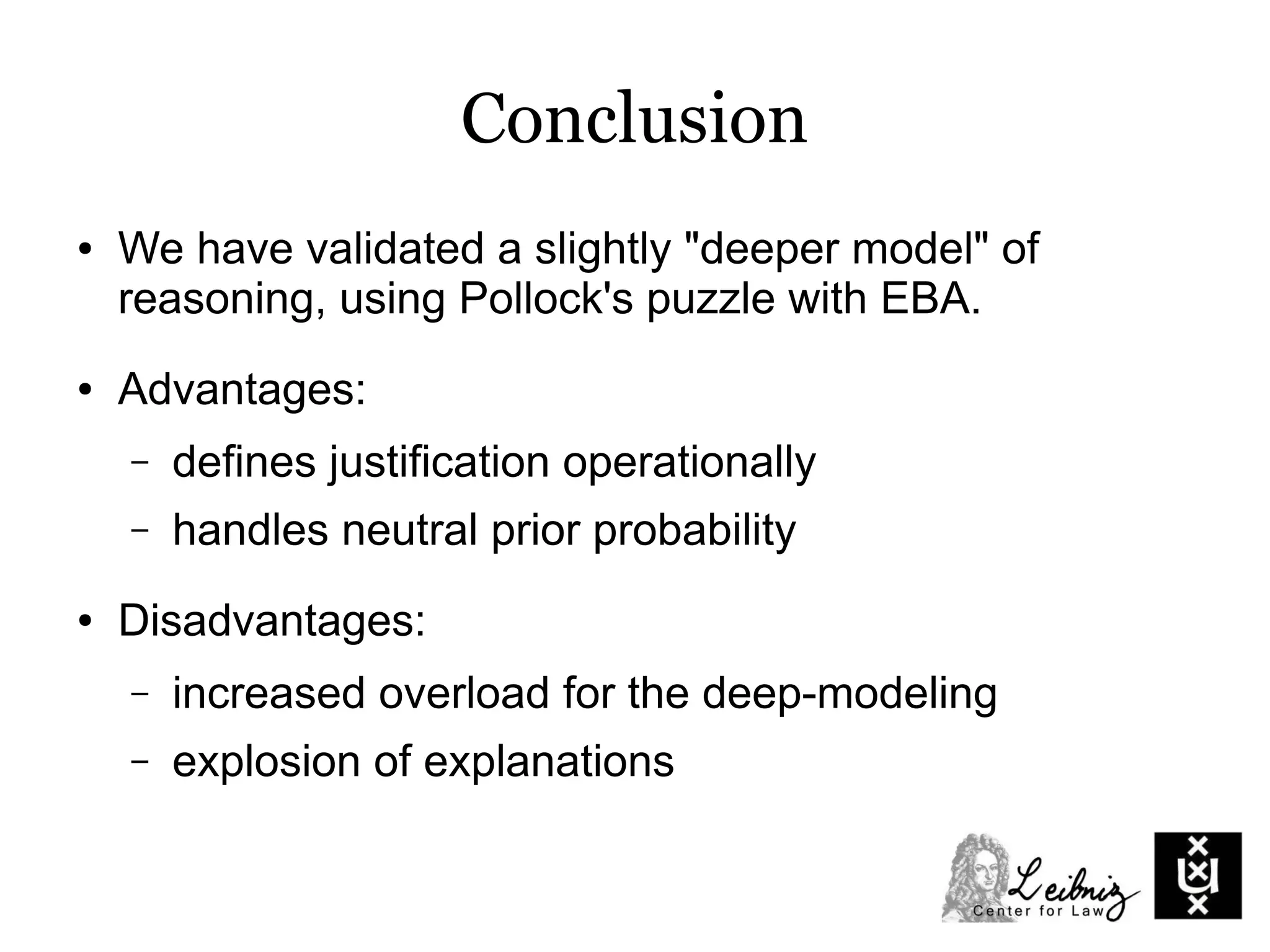

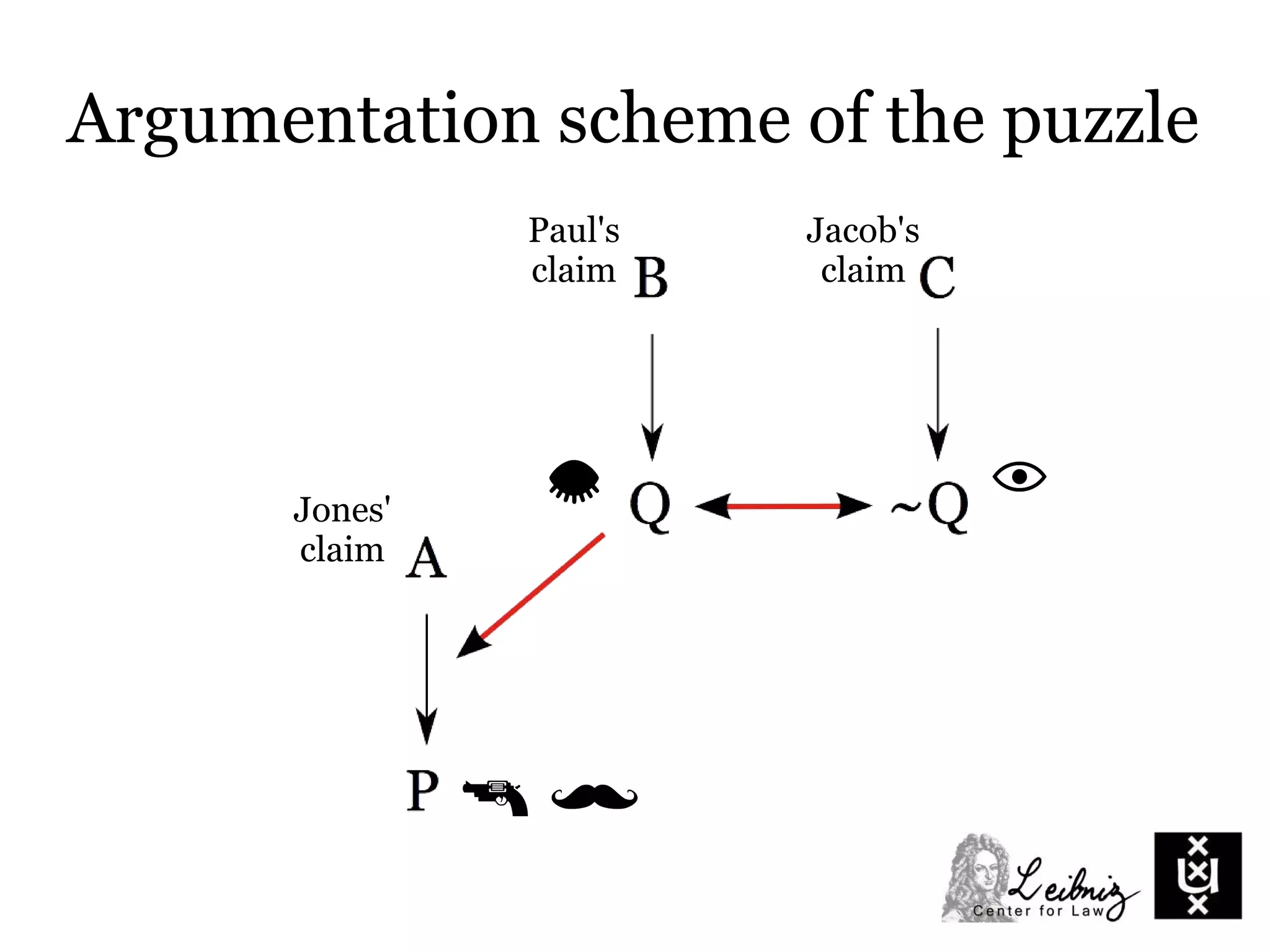

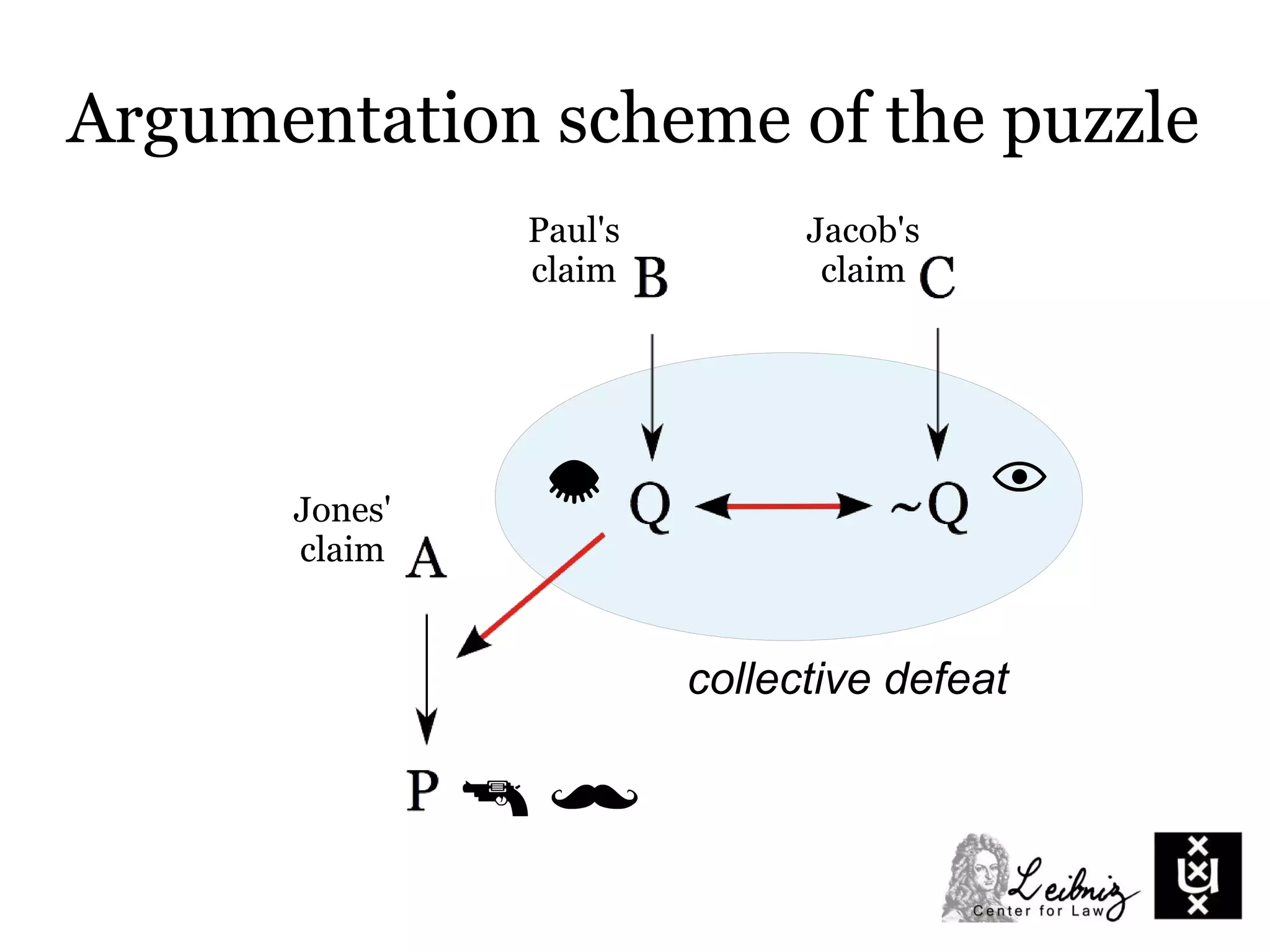

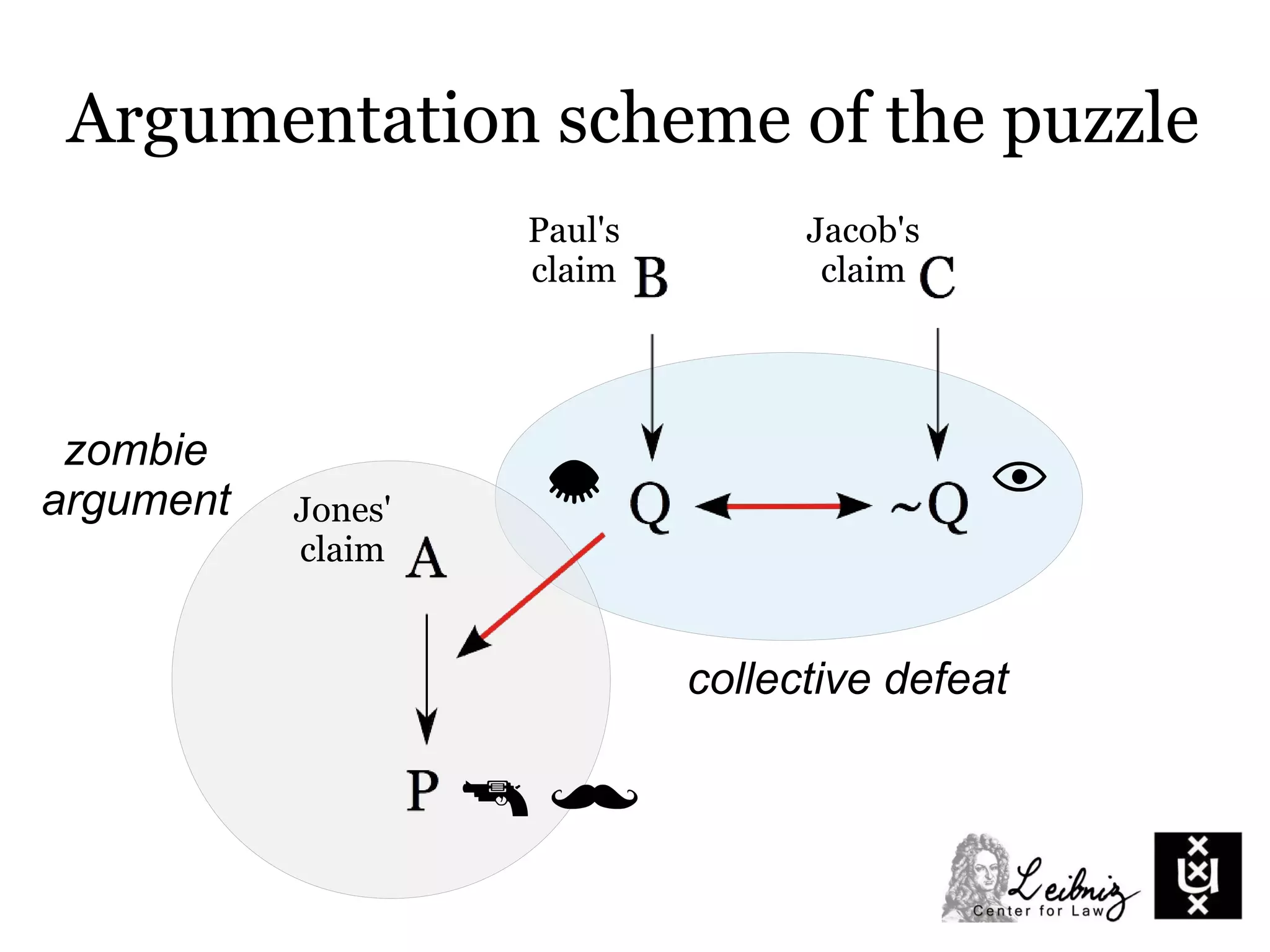

This document discusses implementing explanation-based argumentation using answer set programming. It begins with background on argumentation frameworks and their limitations in modeling real arguments. It then presents Pollock's puzzle as an example where current frameworks struggle. The document proposes an approach called explanation-based argumentation that models arguments as explanations for observations. These explanations can be generated, deleted if impossible, and evaluated based on how well they fit observations. The document concludes that answer set programming is well-suited to implement this approach due to its declarative problem modeling and search capabilities.
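As a rough illustration of the generate, delete, and evaluate steps named above, here is a minimal Python sketch. It is not the authors' answer set programming encoding: the `Explanation` class, the `impossible` constraint list, and the fraction-based `fit` score are all hypothetical names and choices introduced only for this example.

```python
from dataclasses import dataclass
from typing import FrozenSet, List, Sequence


@dataclass(frozen=True)
class Explanation:
    """Hypothetical candidate explanation (not taken from the slides)."""
    name: str
    assumptions: FrozenSet[str]  # facts the explanation takes to be true
    predicts: FrozenSet[str]     # messages the explanation would account for


def is_possible(e: Explanation, impossible: Sequence[FrozenSet[str]]) -> bool:
    # Assumed deletion rule: an explanation is impossible if it contains
    # every fact of some forbidden combination.
    return not any(combo <= e.assumptions for combo in impossible)


def fit(e: Explanation, observation: Sequence[str]) -> float:
    # Assumed scoring rule: fraction of observed messages the explanation predicts.
    if not observation:
        return 0.0
    return len(e.predicts & set(observation)) / len(observation)


def evaluate(candidates: List[Explanation],
             impossible: Sequence[FrozenSet[str]],
             observation: Sequence[str]) -> List[Explanation]:
    # Delete impossible candidates, then rank the survivors by fit (best first).
    survivors = [e for e in candidates if is_possible(e, impossible)]
    return sorted(survivors, key=lambda e: fit(e, observation), reverse=True)


# Generation is stubbed here with a hand-written candidate list.
observation = ["m1", "m2"]
candidates = [
    Explanation("e1", frozenset({"x"}), frozenset({"m1", "m2"})),
    Explanation("e2", frozenset({"x", "not_x"}), frozenset({"m1", "m2"})),
    Explanation("e3", frozenset({"y"}), frozenset({"m1"})),
]
impossible = [frozenset({"x", "not_x"})]
ranked = evaluate(candidates, impossible, observation)
ranked_names = [e.name for e in ranked]
```

In an answer set programming setting, the generation and deletion steps would more naturally be written as choice rules and integrity constraints; the Python version only mirrors the control flow.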

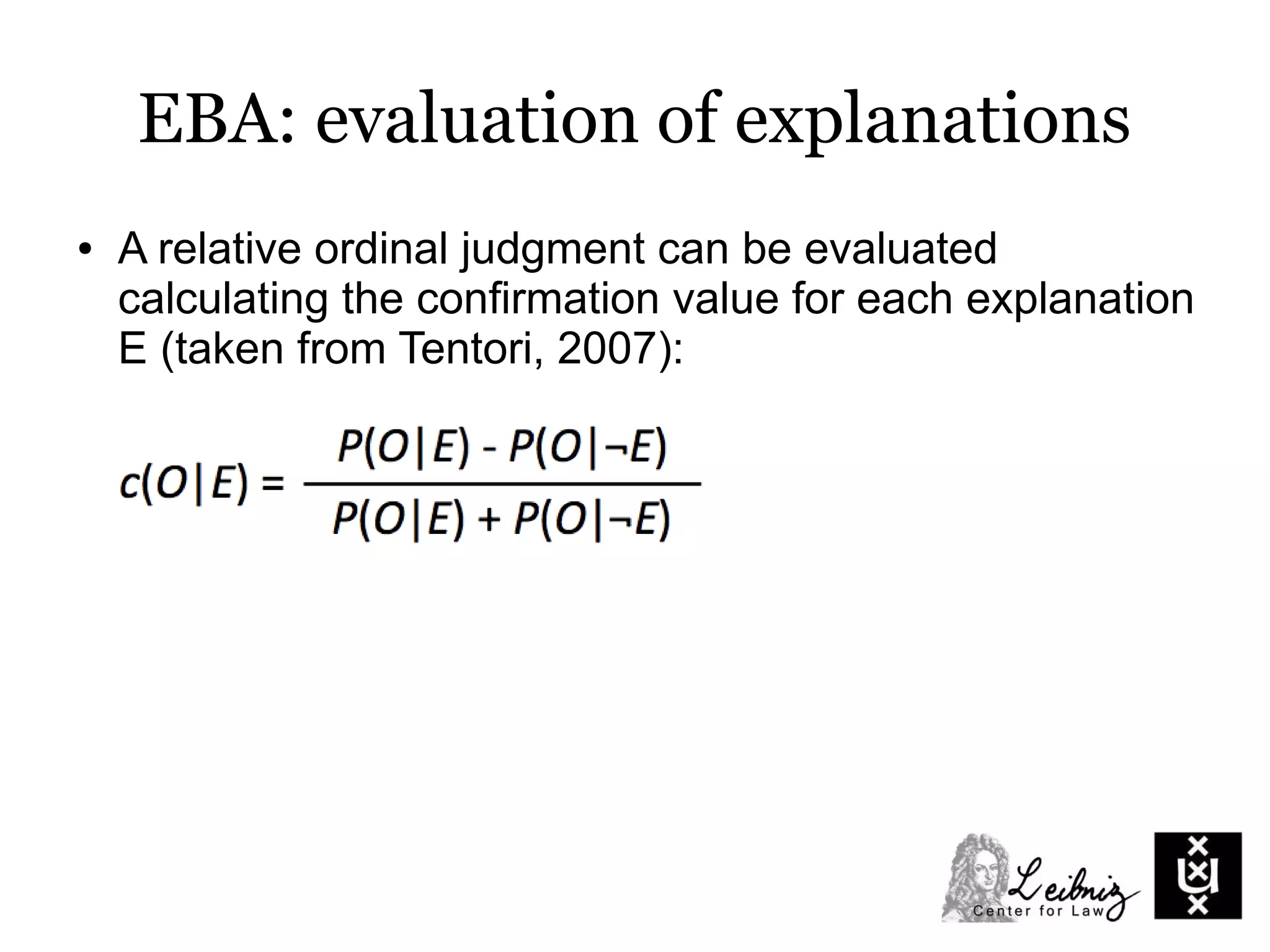

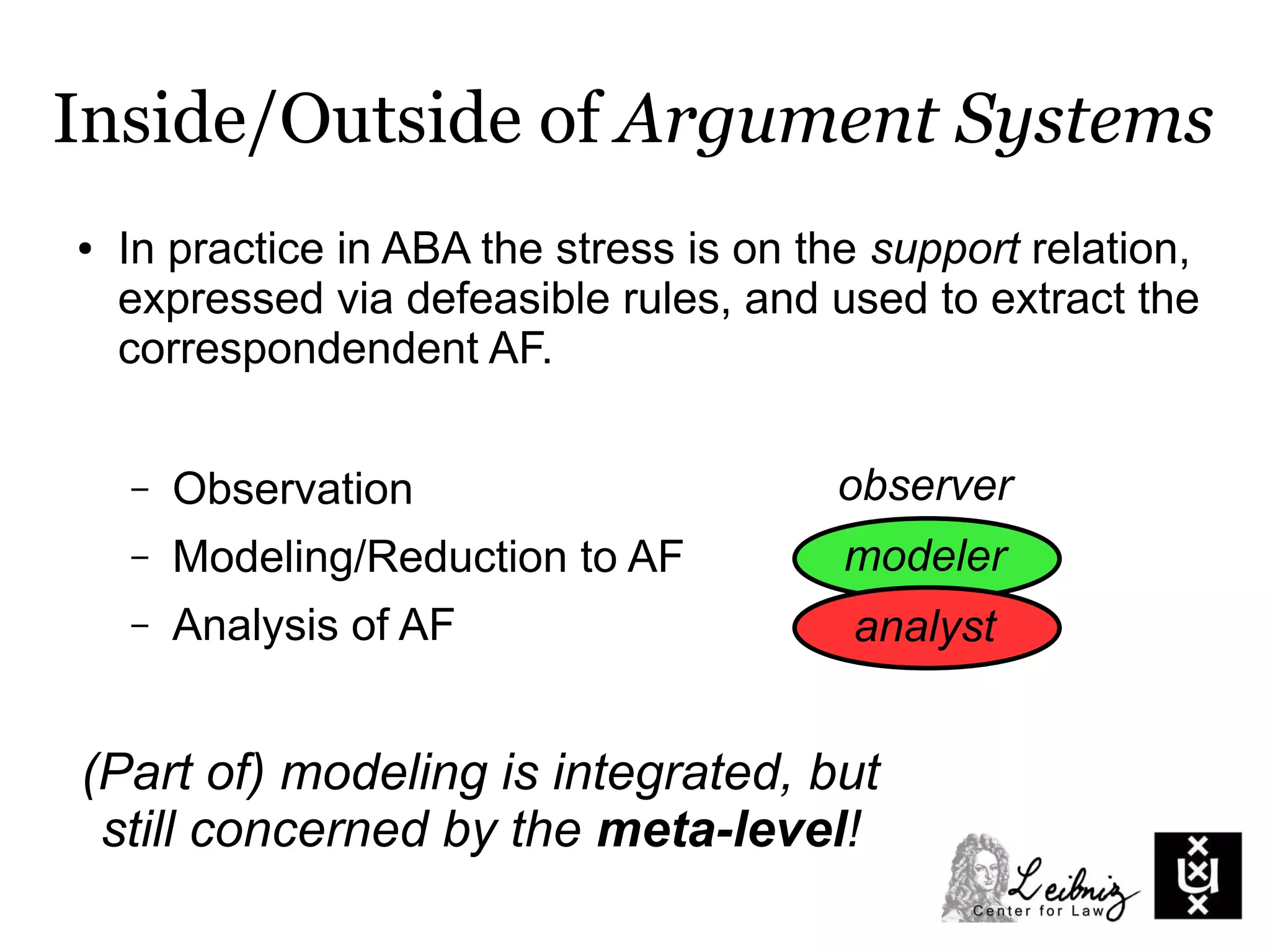

![Formal Argumentation. An argumentation framework (AF) [Dung] consists of: a set of arguments; attack relations between arguments. Formal argumentation frameworks essentially target this meta-level.](https://image.slidesharecdn.com/argmas-150115103716-conversion-gate02/75/Implementing-Explanation-Based-Argumentation-using-Answer-Set-Programming-6-2048.jpg)
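The definition on this slide (a set of arguments plus attack relations) can be sketched in a few lines of Python. The slide itself does not go further than the definition; the conflict-freeness check and the grounded extension below are standard notions from Dung's framework, added here only as an illustration, and the three-argument example is made up.

```python
from typing import FrozenSet, Set, Tuple

Argument = str
Attack = Tuple[Argument, Argument]  # (attacker, target)


def is_conflict_free(subset: Set[Argument], attacks: Set[Attack]) -> bool:
    """True if no argument in `subset` attacks another argument in `subset`."""
    return not any(a in subset and b in subset for a, b in attacks)


def grounded_extension(arguments: Set[Argument],
                       attacks: Set[Attack]) -> FrozenSet[Argument]:
    """Iterate the characteristic function from the empty set to a fixed point."""
    accepted: Set[Argument] = set()
    while True:
        # An argument is defended if every one of its attackers is itself
        # attacked by some currently accepted argument.
        defended = {
            x for x in arguments
            if all(
                any((d, attacker) in attacks for d in accepted)
                for attacker, target in attacks
                if target == x
            )
        }
        if defended == accepted:
            return frozenset(accepted)
        accepted = defended


# Illustrative framework: a attacks b, and b attacks c.
arguments = {"a", "b", "c"}
attacks = {("a", "b"), ("b", "c")}
grounded = grounded_extension(arguments, attacks)
```

Here `a` is unattacked, so it is accepted; `a` defeats `b`, which in turn reinstates `c`.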

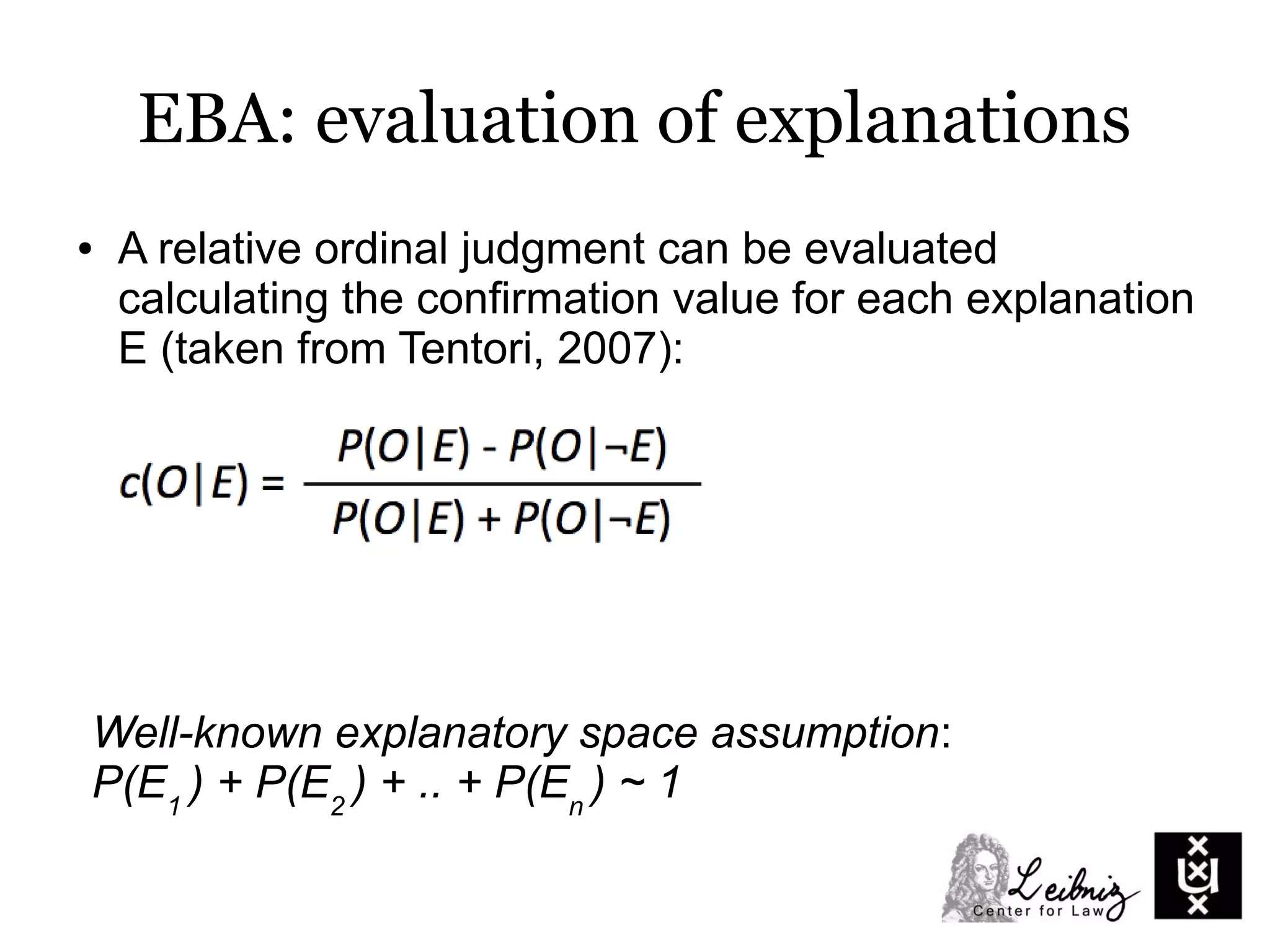

![EBA: observations. The sequence of collected messages constitutes the observation. Sometimes the observation is collected by a third-party adjudicator, entitled to interpret the case from a neutral position. Picture caption: The Trial of Bill Burn under Martin's Act [1838].](https://image.slidesharecdn.com/argmas-150115103716-conversion-gate02/75/Implementing-Explanation-Based-Argumentation-using-Answer-Set-Programming-28-2048.jpg)