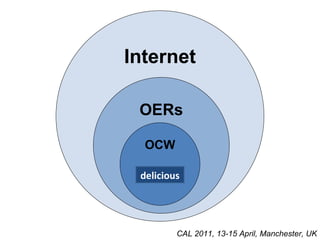

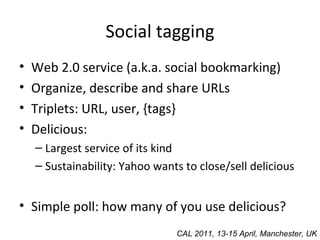

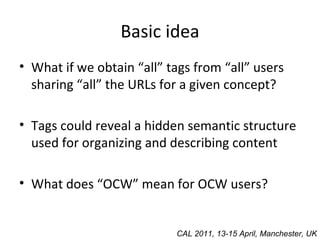

1) The document analyzes how users tag OpenCourseWare resources on the social bookmarking site Delicious in order to understand how these resources are organized and described.

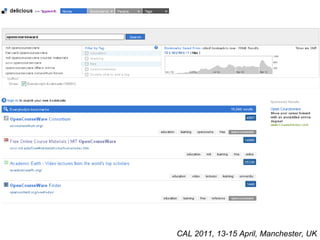

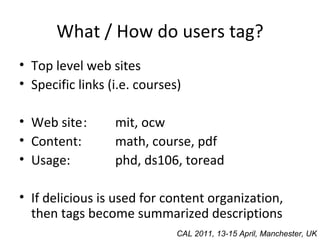

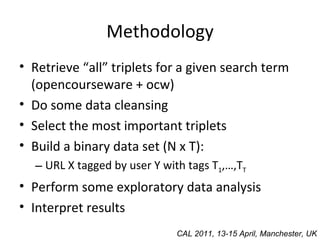

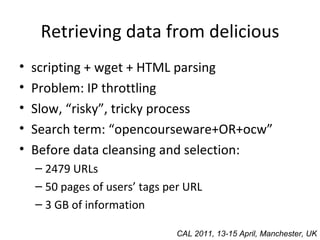

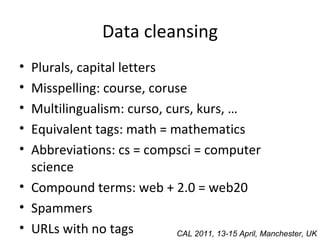

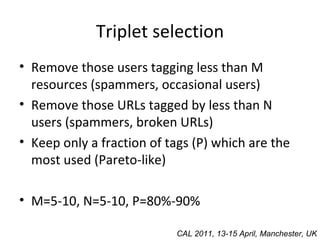

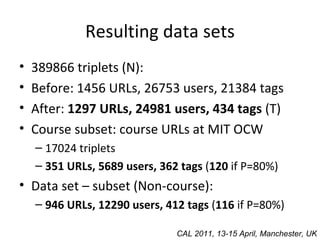

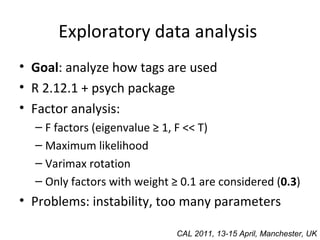

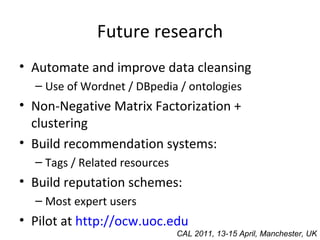

2) The researchers collected tagging data for OpenCourseWare URLs from Delicious and performed data analysis to identify themes in how URLs and their content were categorized.

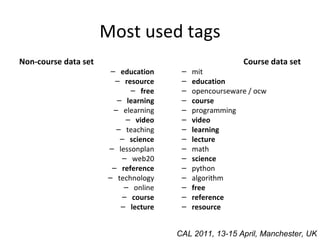

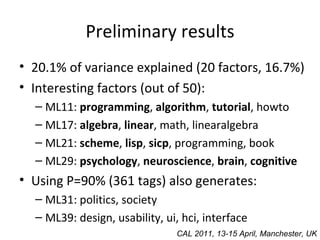

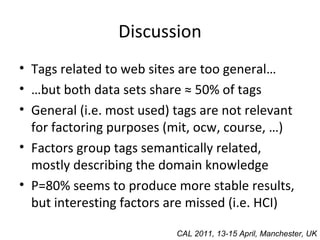

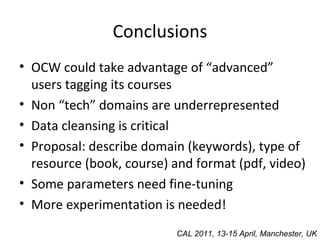

3) Preliminary results identified factors that grouped tags by semantic relationships, showing potential to describe course domains, resource types, and formats to improve searching of OpenCourseWare content.

![Thank you! Julià Minguillón [email_address] CAL 2011, 13-15 April, Manchester, UK](https://image.slidesharecdn.com/cal2011-jminguillon-110415070629-phpapp02/85/Analyzing-OpenCourseWare-usage-by-means-of-social-tagging-22-320.jpg)