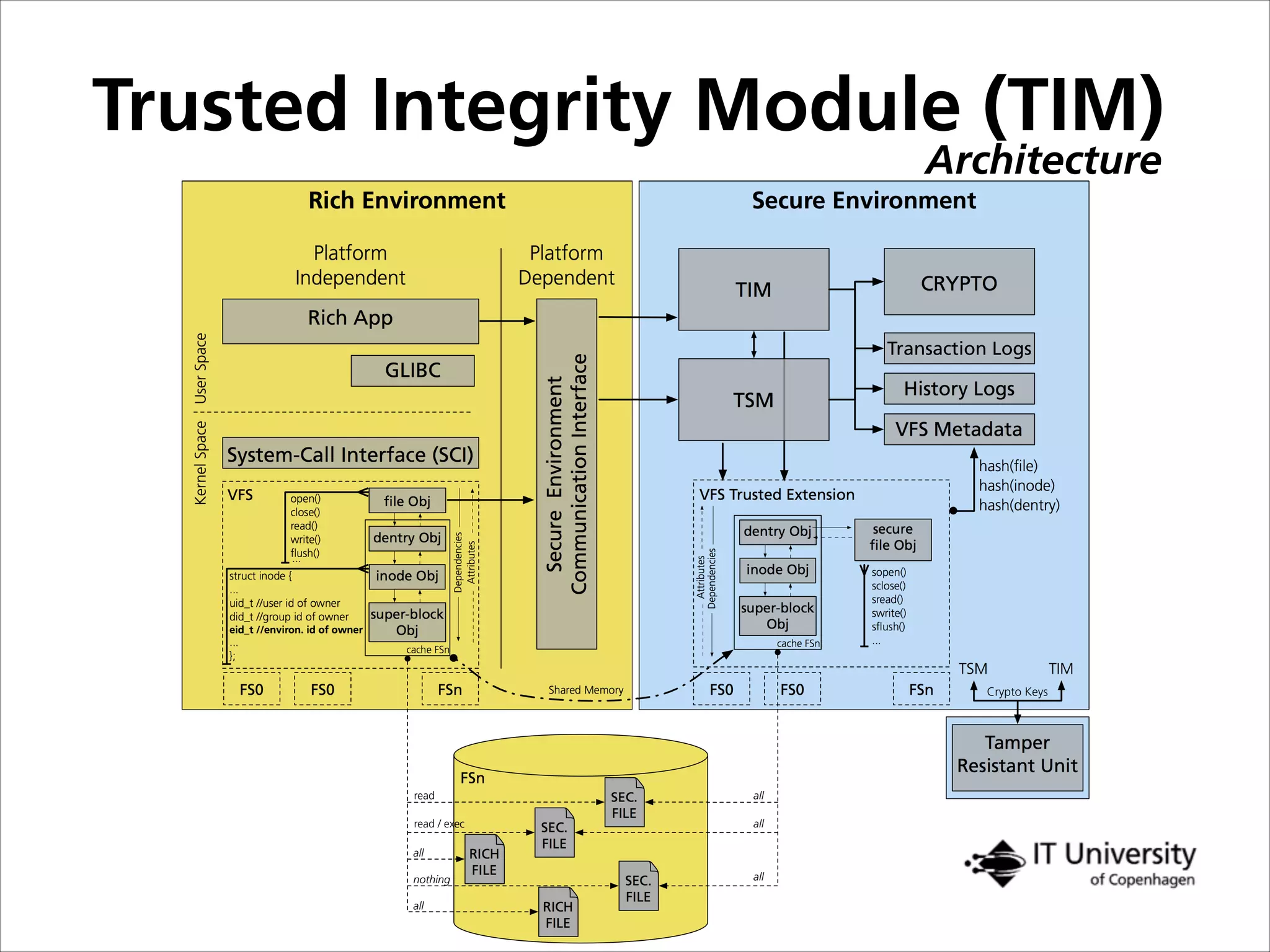

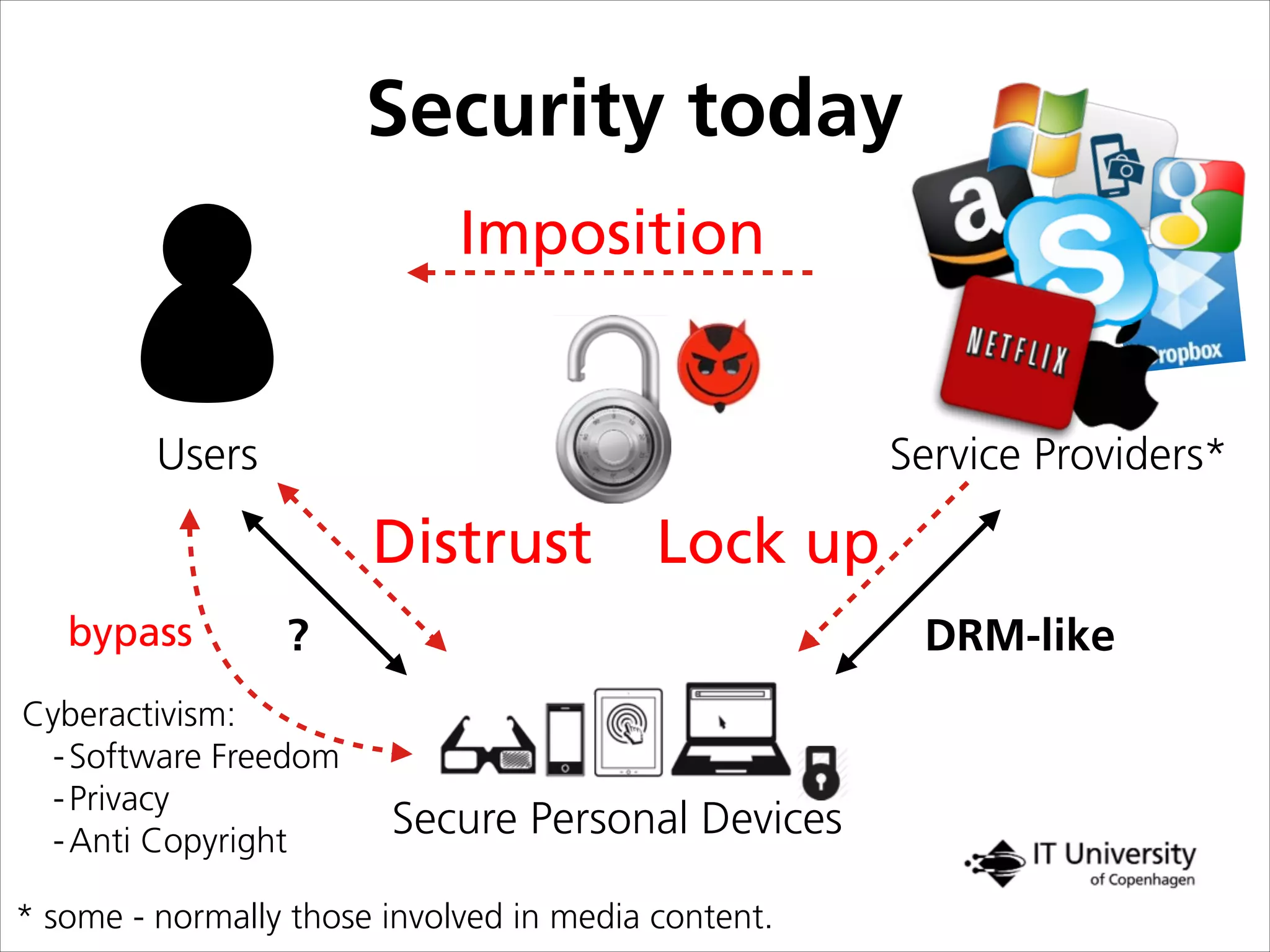

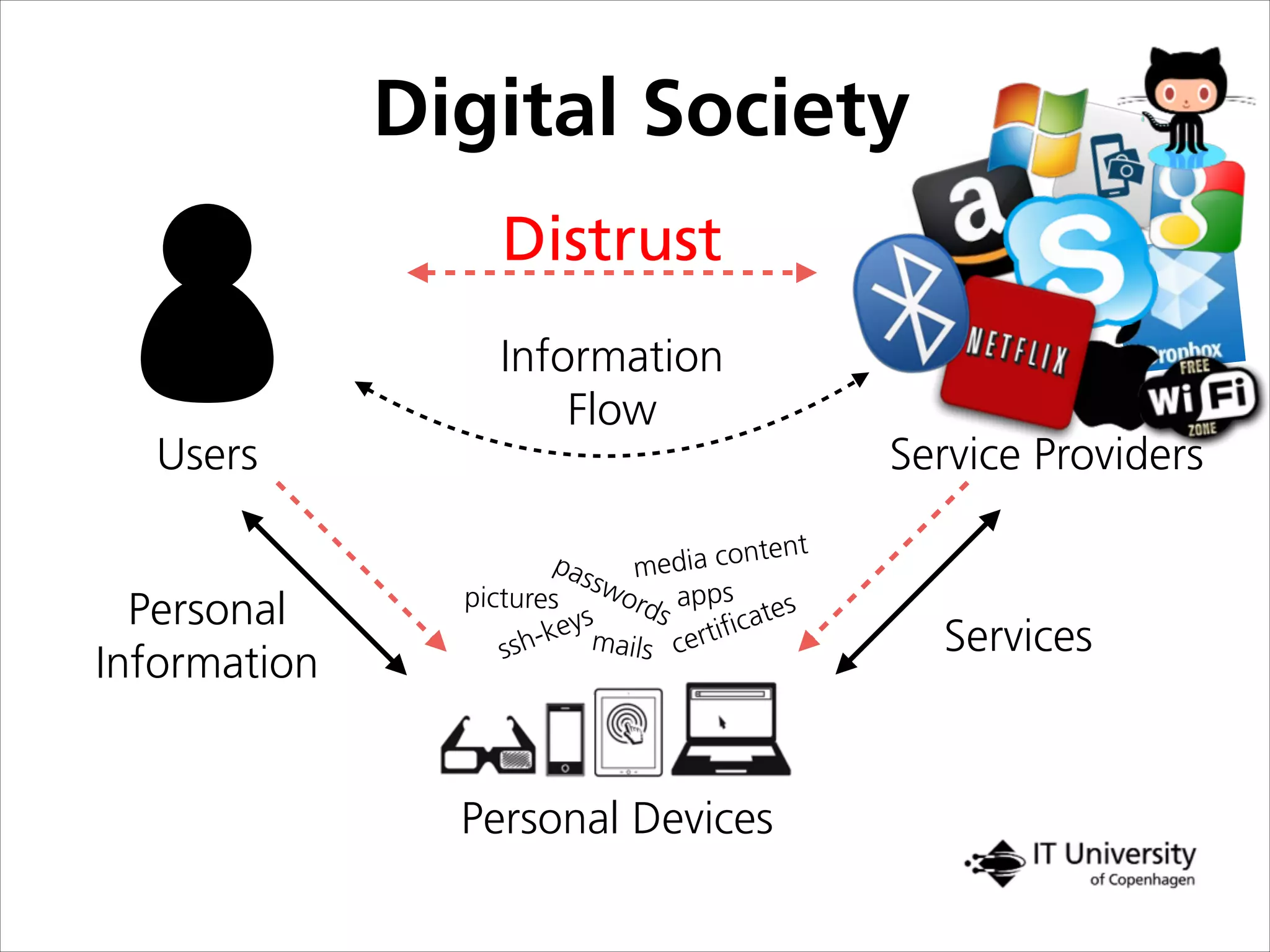

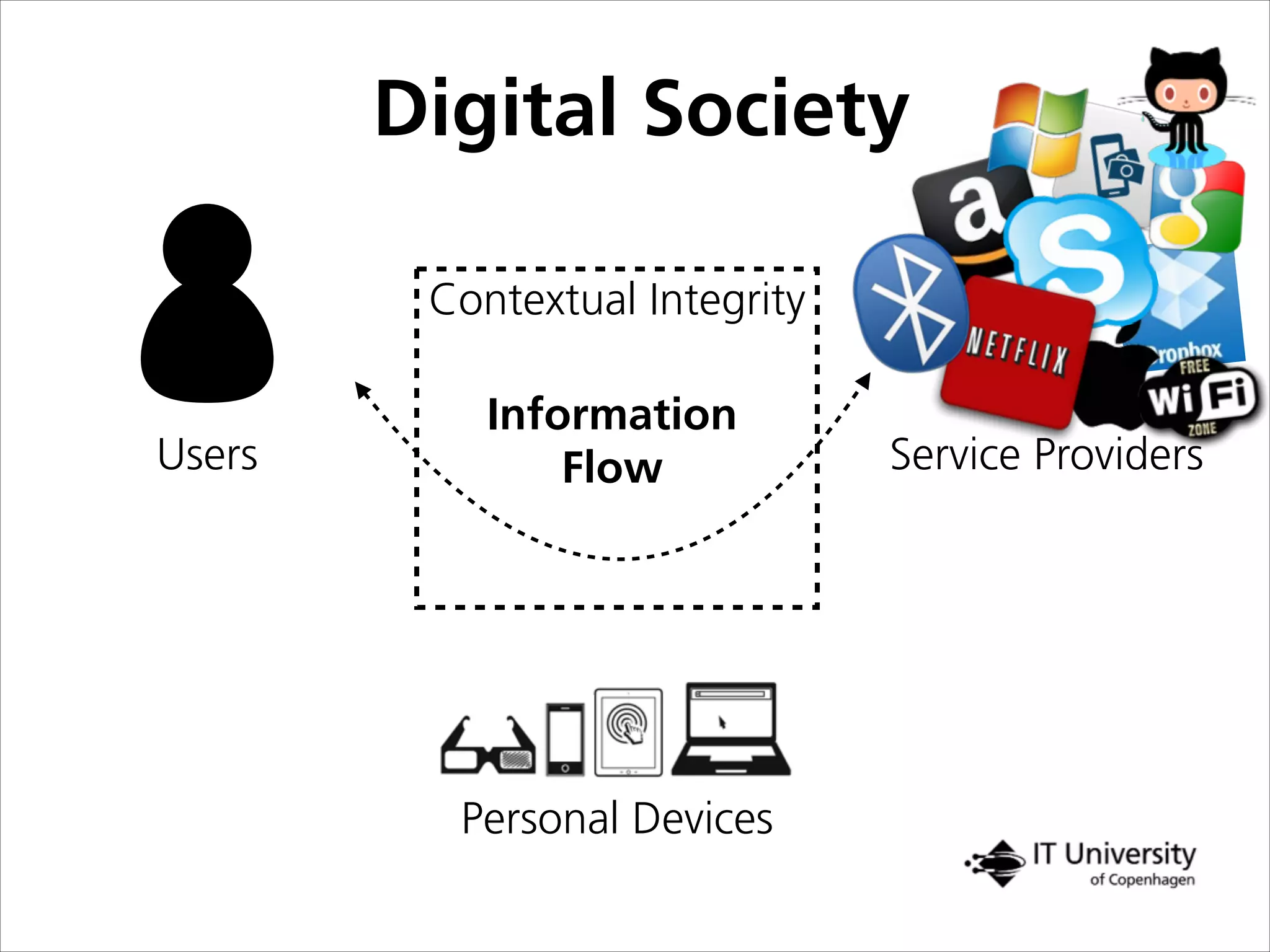

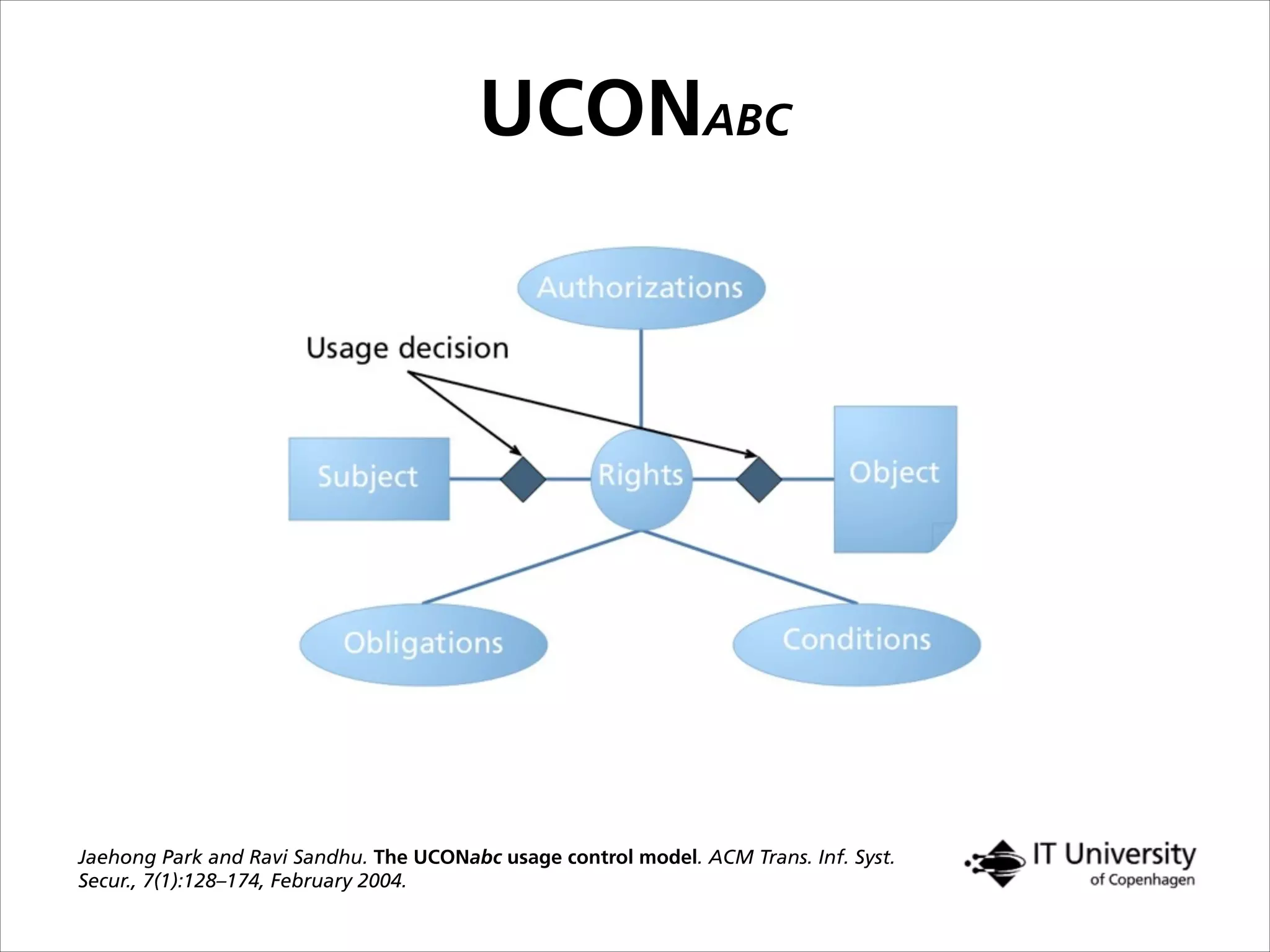

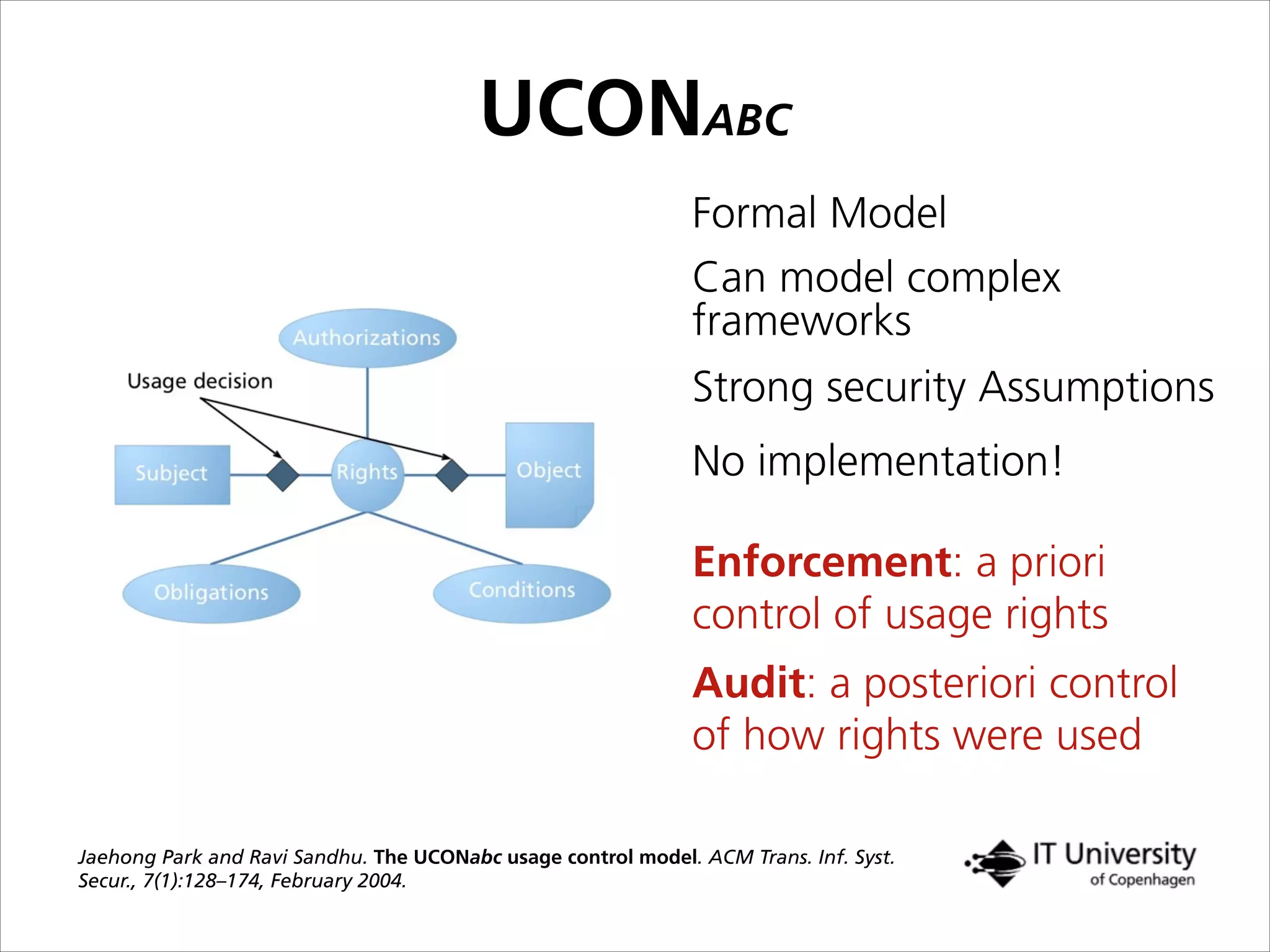

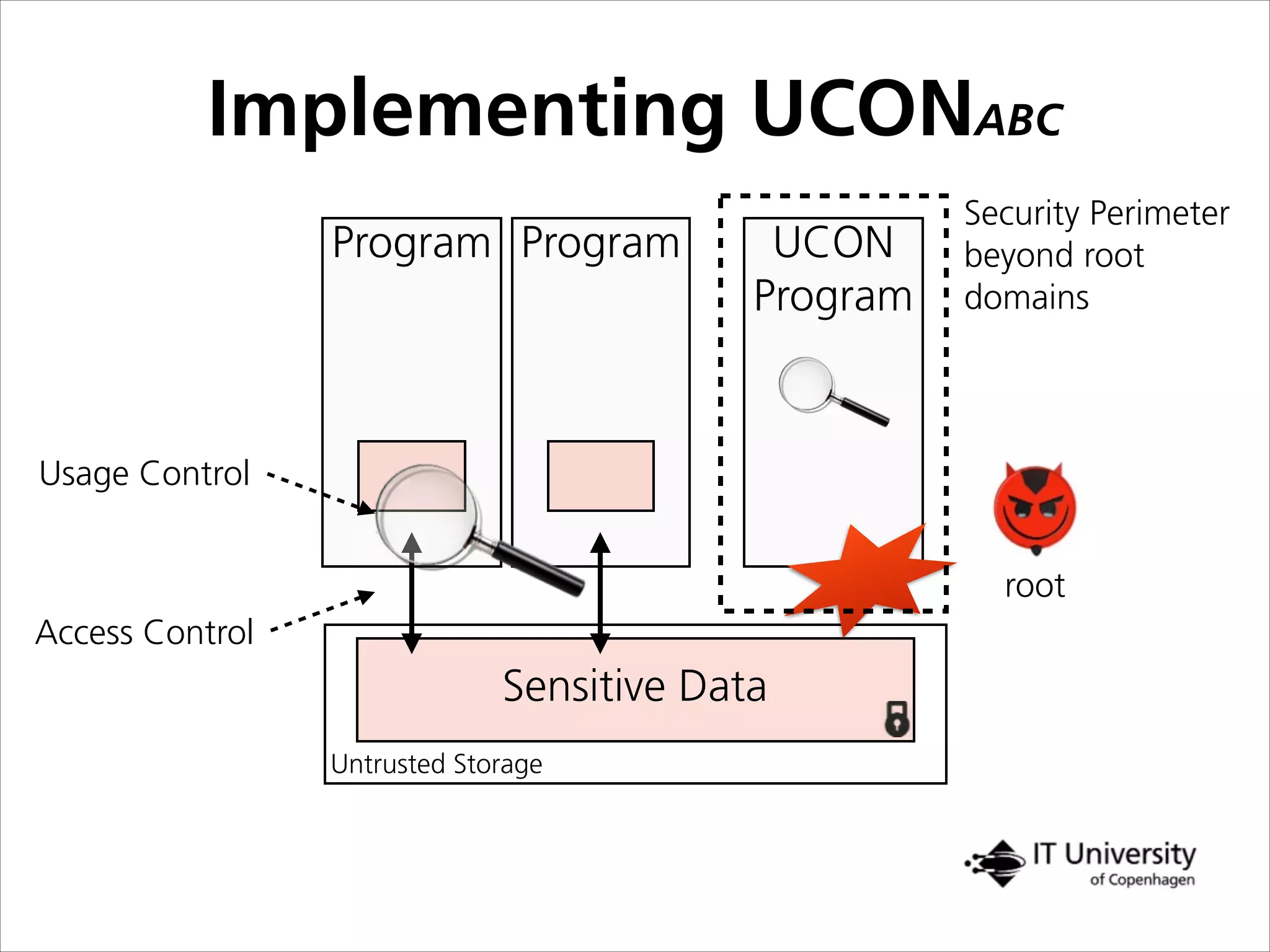

The document discusses building trust in digital interactions through the lens of privacy and personal data processing within various social contexts. It emphasizes the importance of contextual integrity and proposes a framework using trusted storage and integrity modules to ensure secure management of sensitive information. Additionally, it highlights the ongoing challenges and solutions in maintaining user and service provider trust amid the evolution of technology and privacy norms.

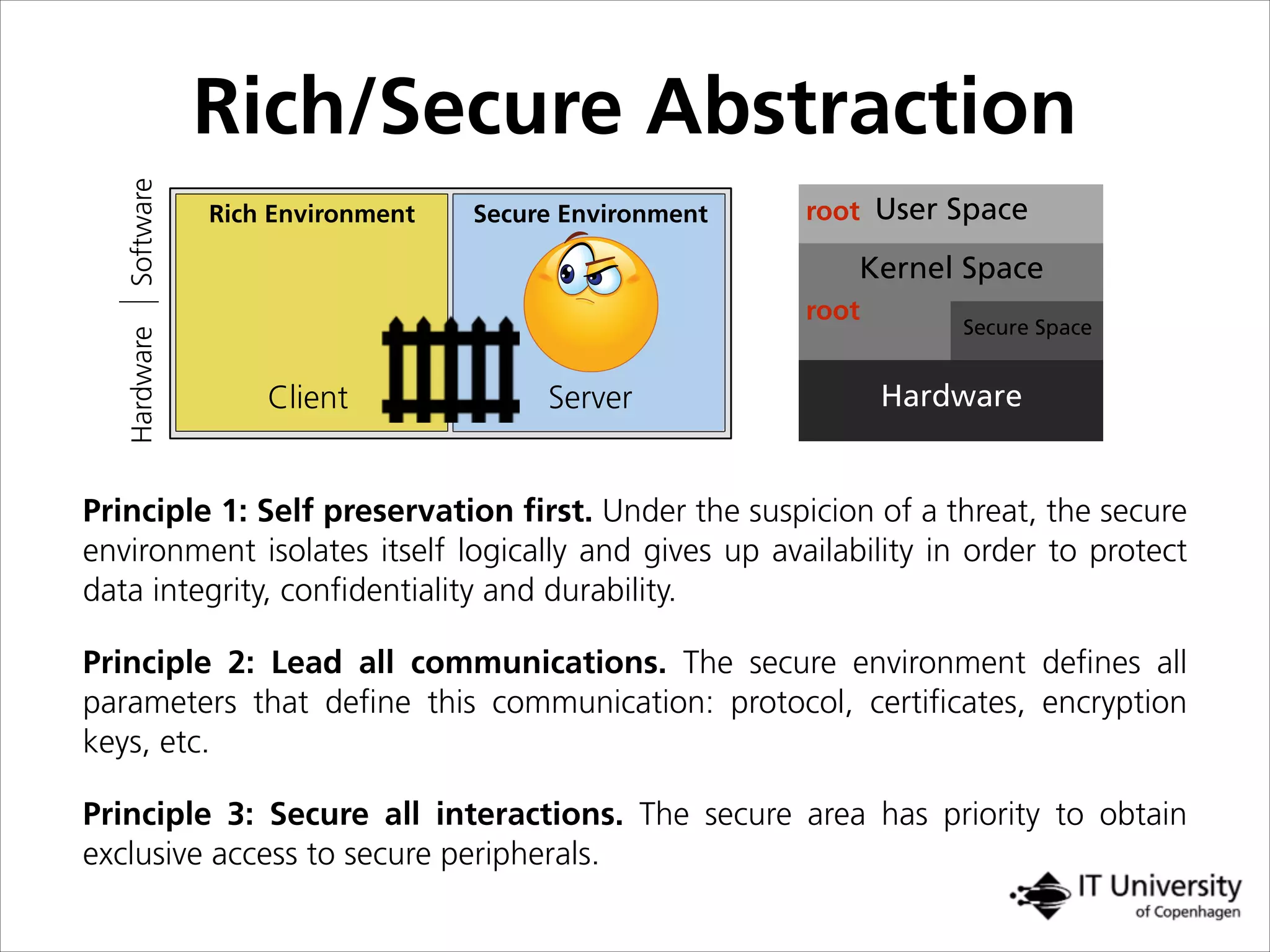

![Trusted Storage Module (TSM) architecture. Diagram labels: Rich Environment, Secure Environment, data producer app, platform-independent and platform-dependent layers, TSM CI, Secure Environment CI, TSM, secure module, tamper-resistant unit, crypto module, storage, PDS. Steps (i) to (v), repeated for data chunks i..n, involve encrypted data, encrypted keys, and metadata.](https://image.slidesharecdn.com/openitjavier-151011161026-lva1-app6891/75/Building-Trust-Despite-Digital-Personal-Devices-21-2048.jpg)

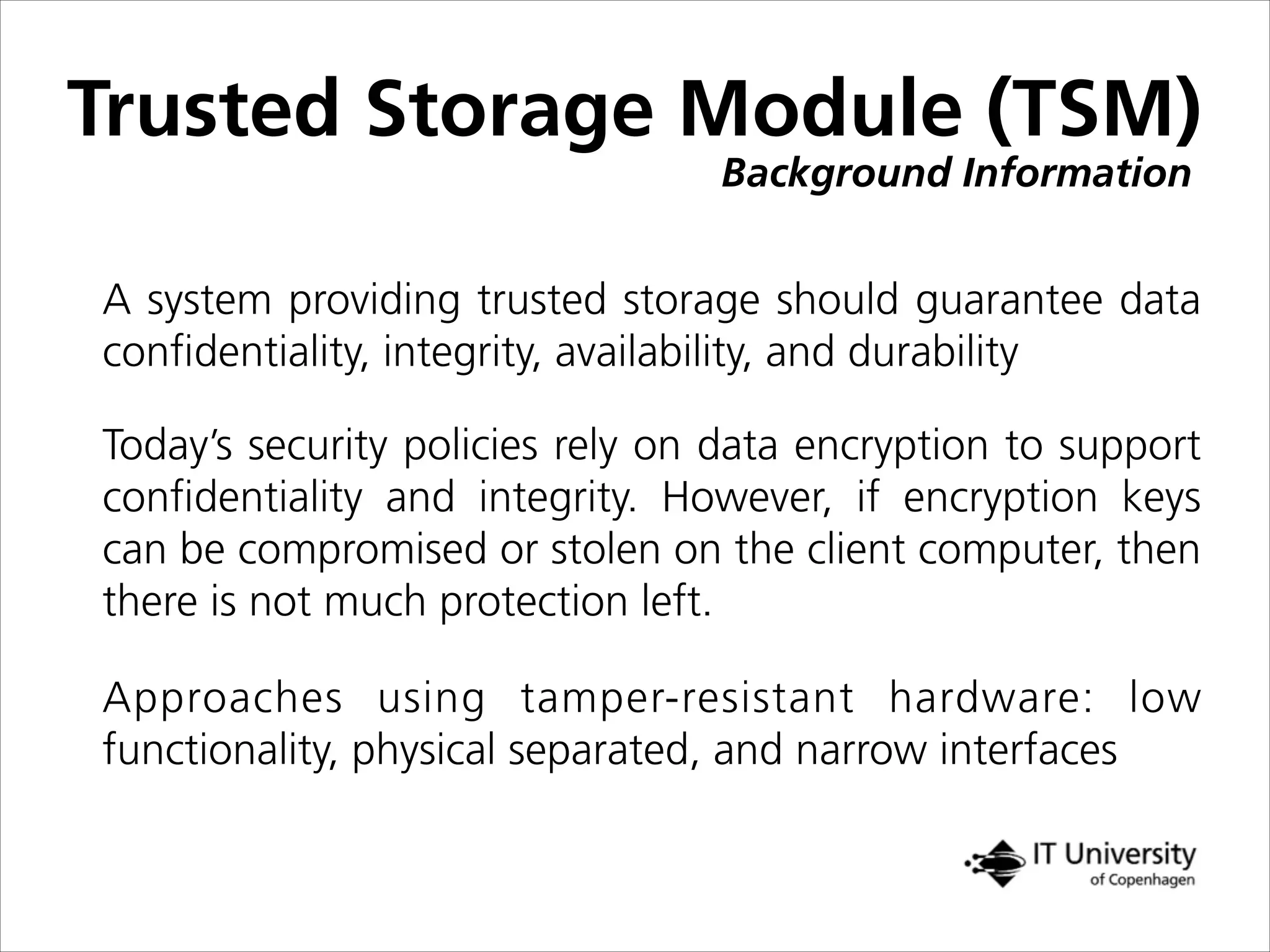

![Trusted Storage Module (TSM) overhead. Chart of time in seconds (axis from 0 to 0.015) to store an object in the rich environment versus the secure environment, for object sizes of 1KB, 10KB, 20KB, 50KB, and 100KB.](https://image.slidesharecdn.com/openitjavier-151011161026-lva1-app6891/75/Building-Trust-Despite-Digital-Personal-Devices-22-2048.jpg)