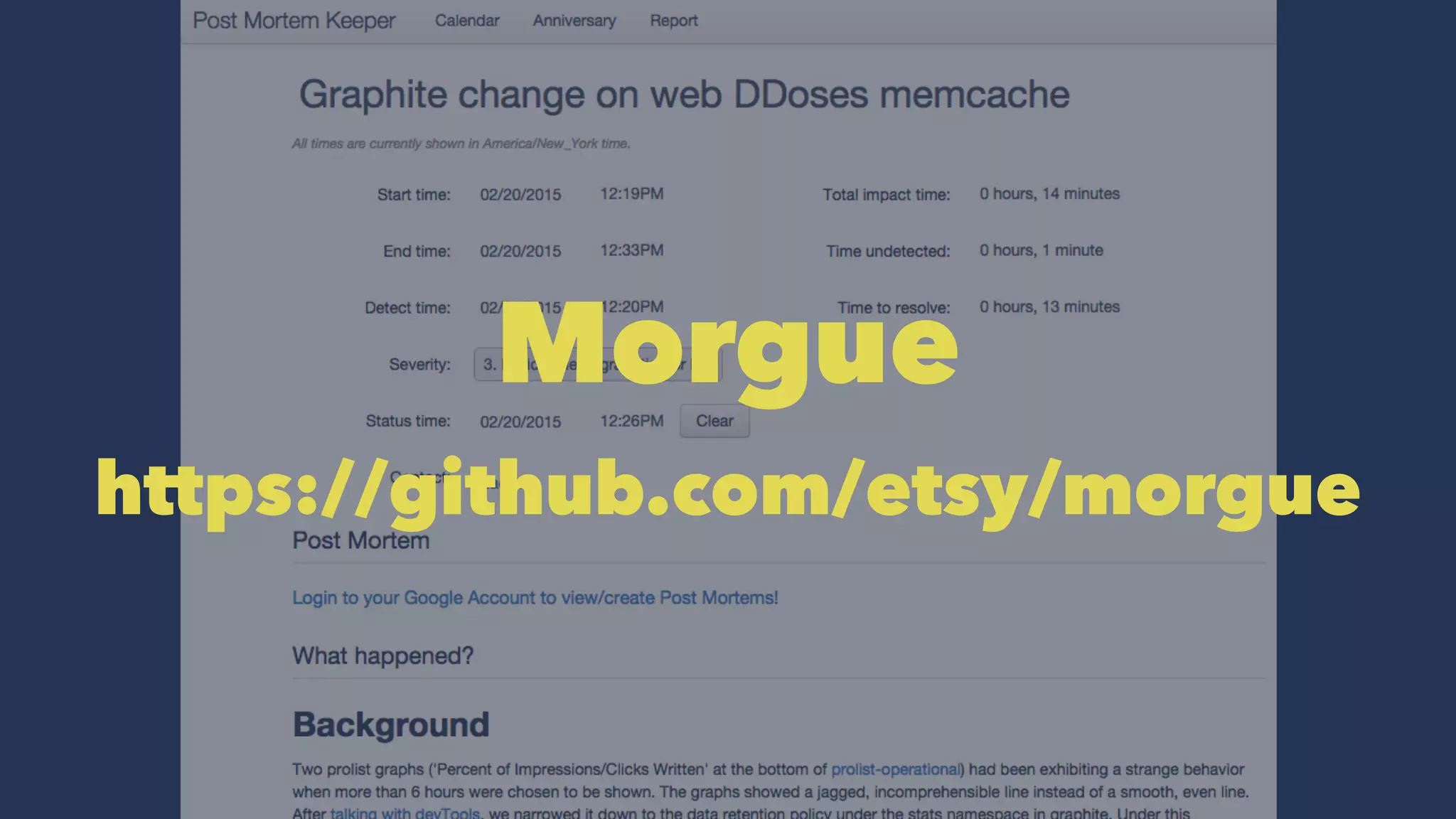

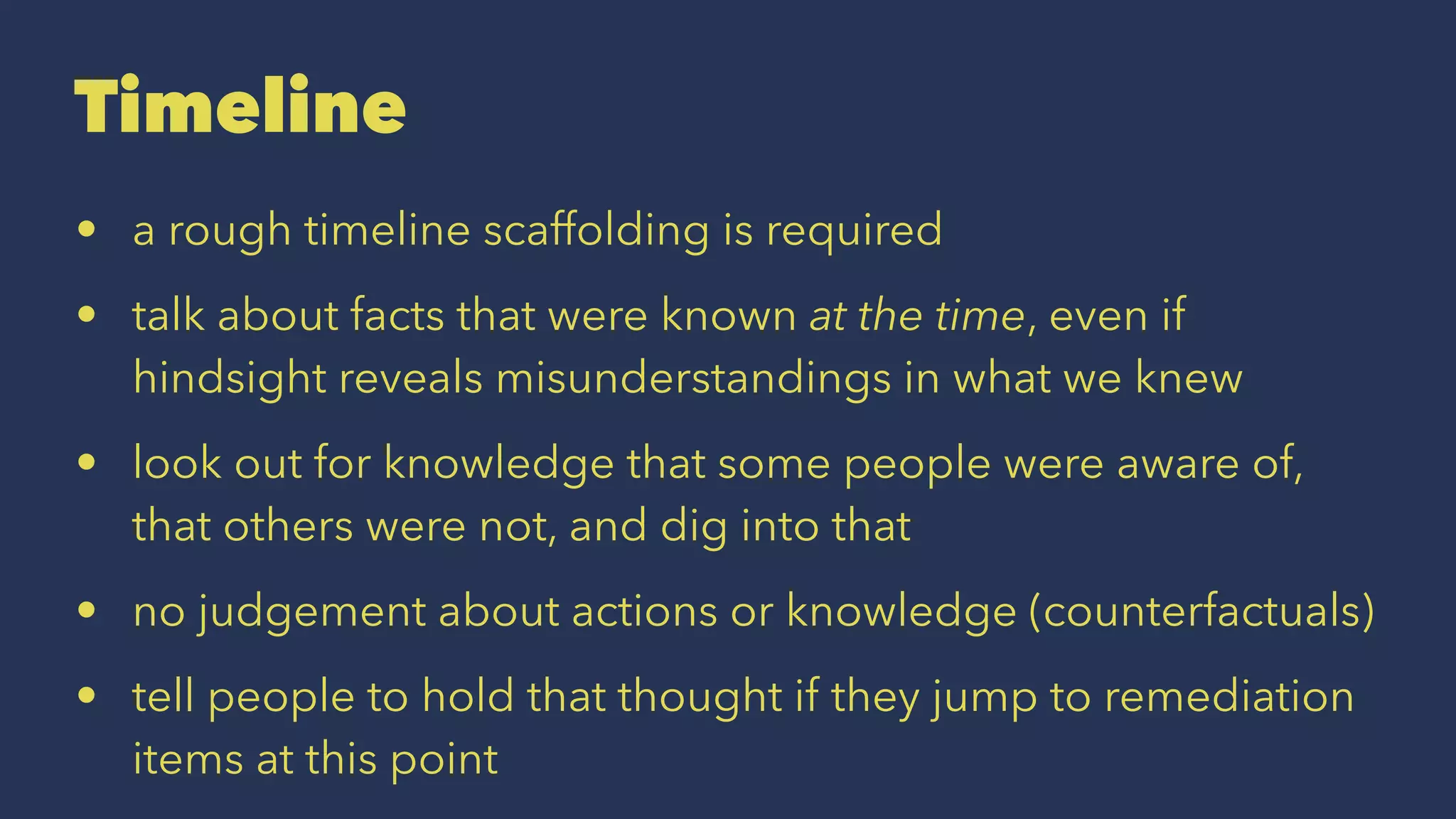

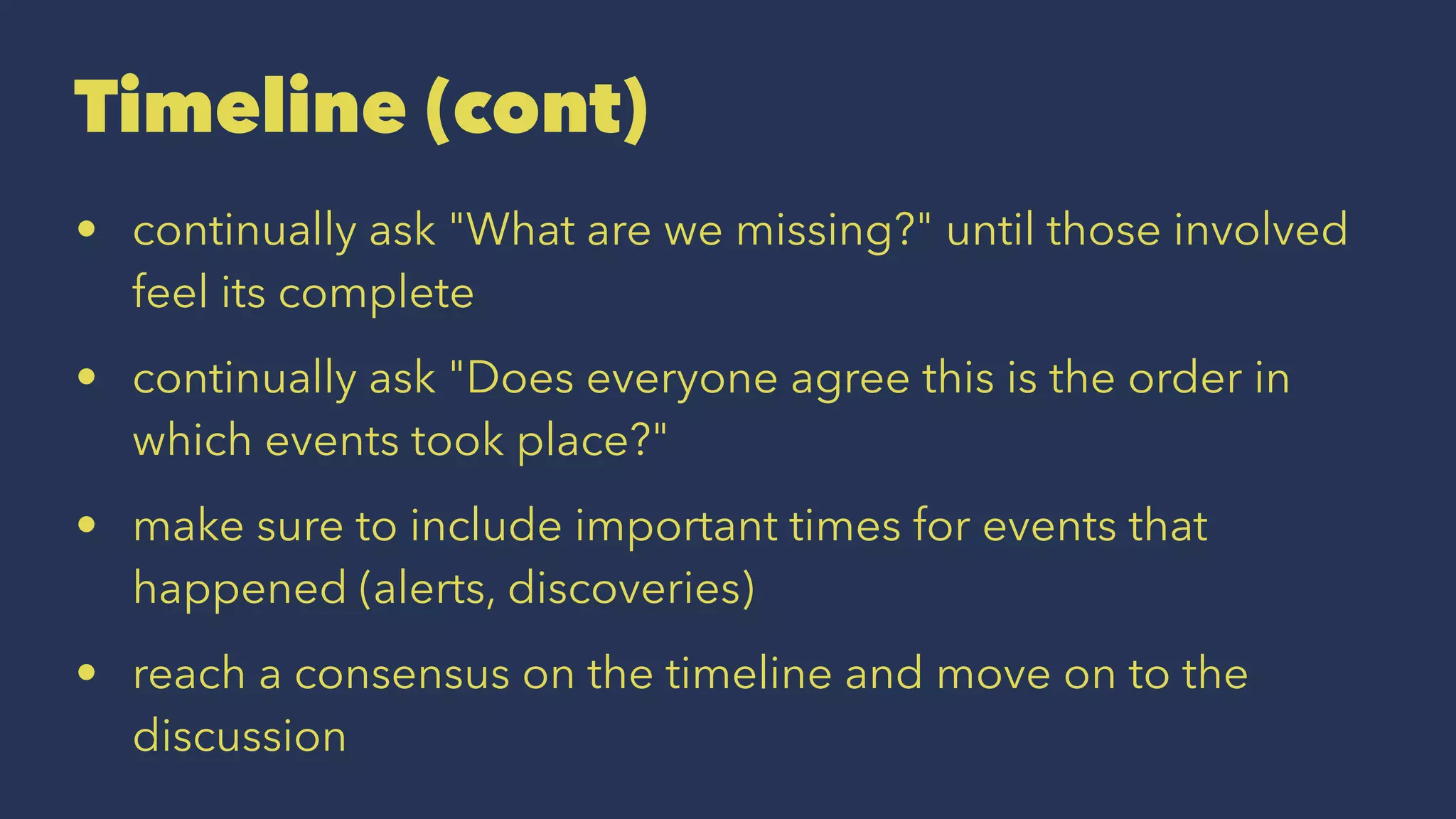

The document outlines Etsy's practices and values for building a successful learning organization. It emphasizes mastering failure through architecture and operability reviews and through blameless post-mortems. It also discusses how systems thinking, personal mastery, and team learning support continuous improvement and transformation. Finally, it describes ways to address failures without assigning blame, focusing on understanding how systems behave and on mitigating risk during project launches.