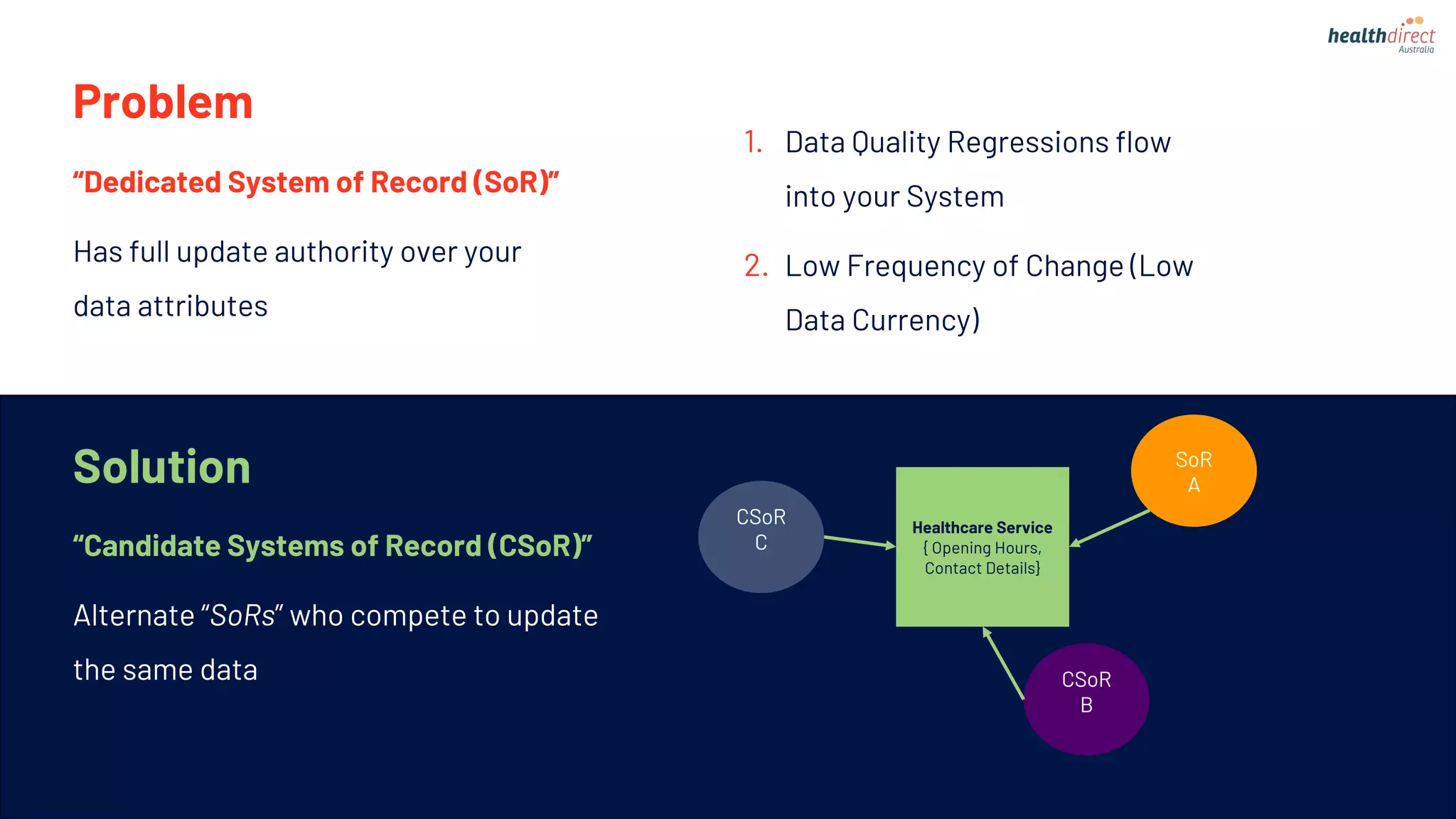

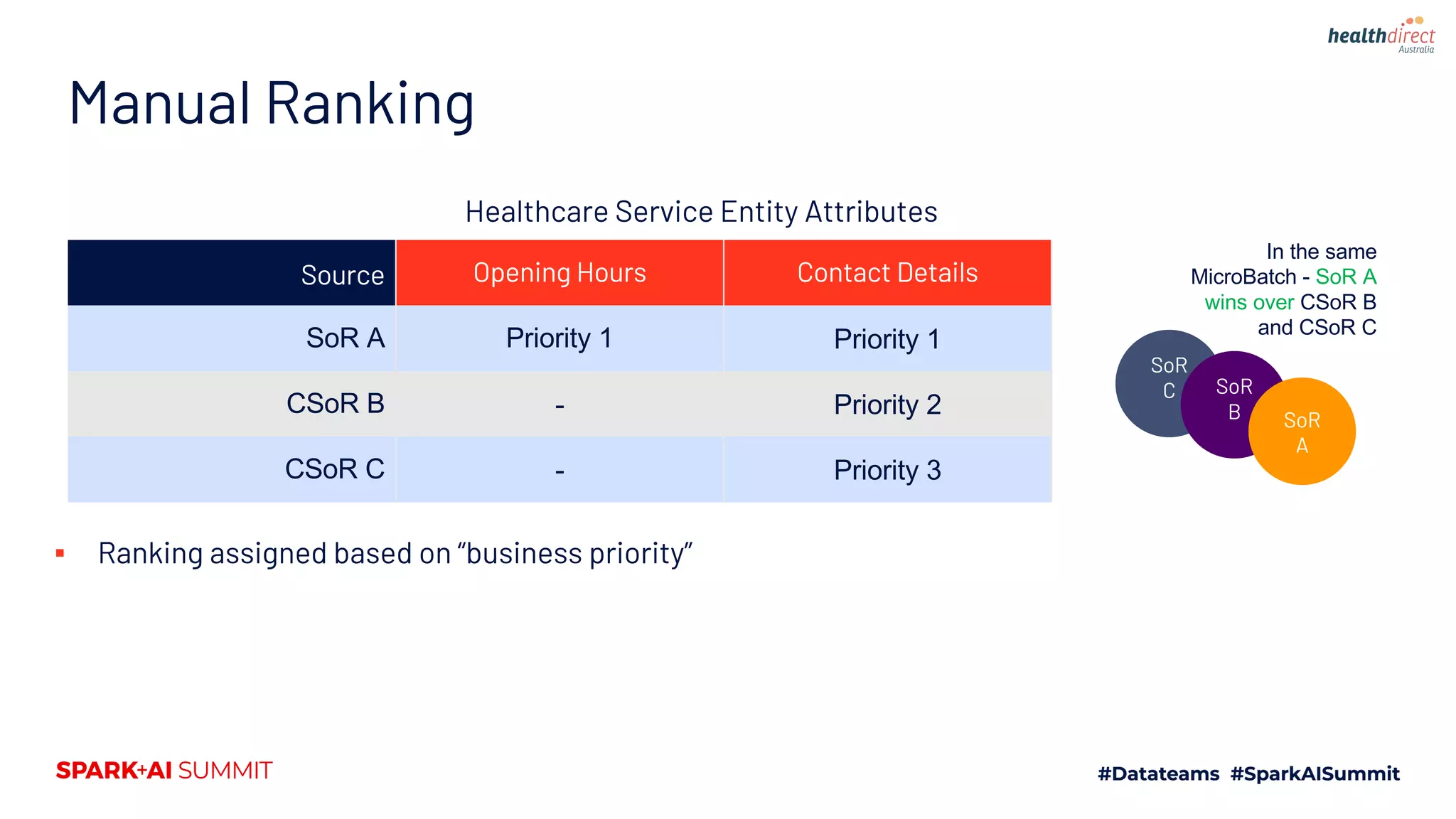

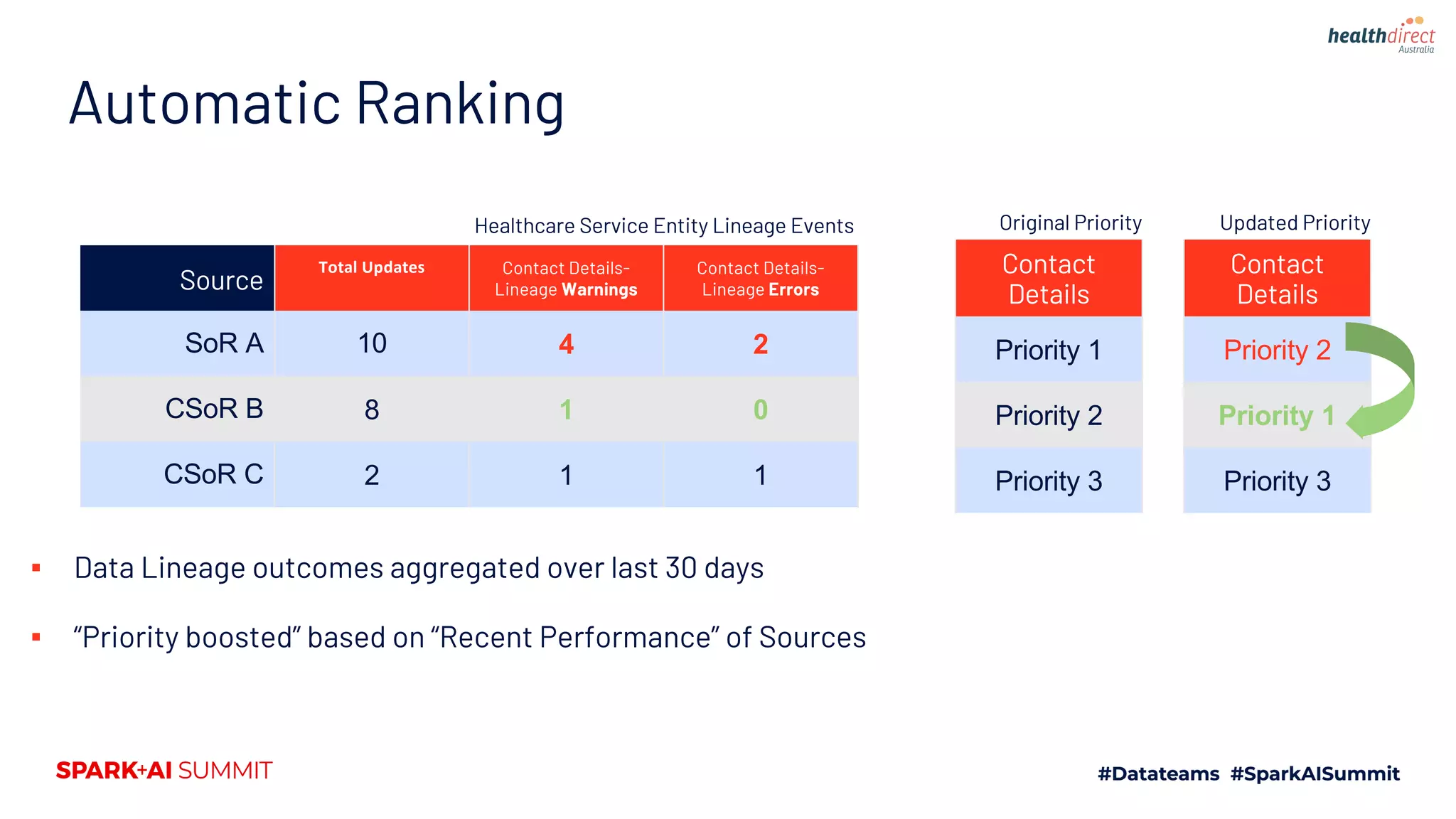

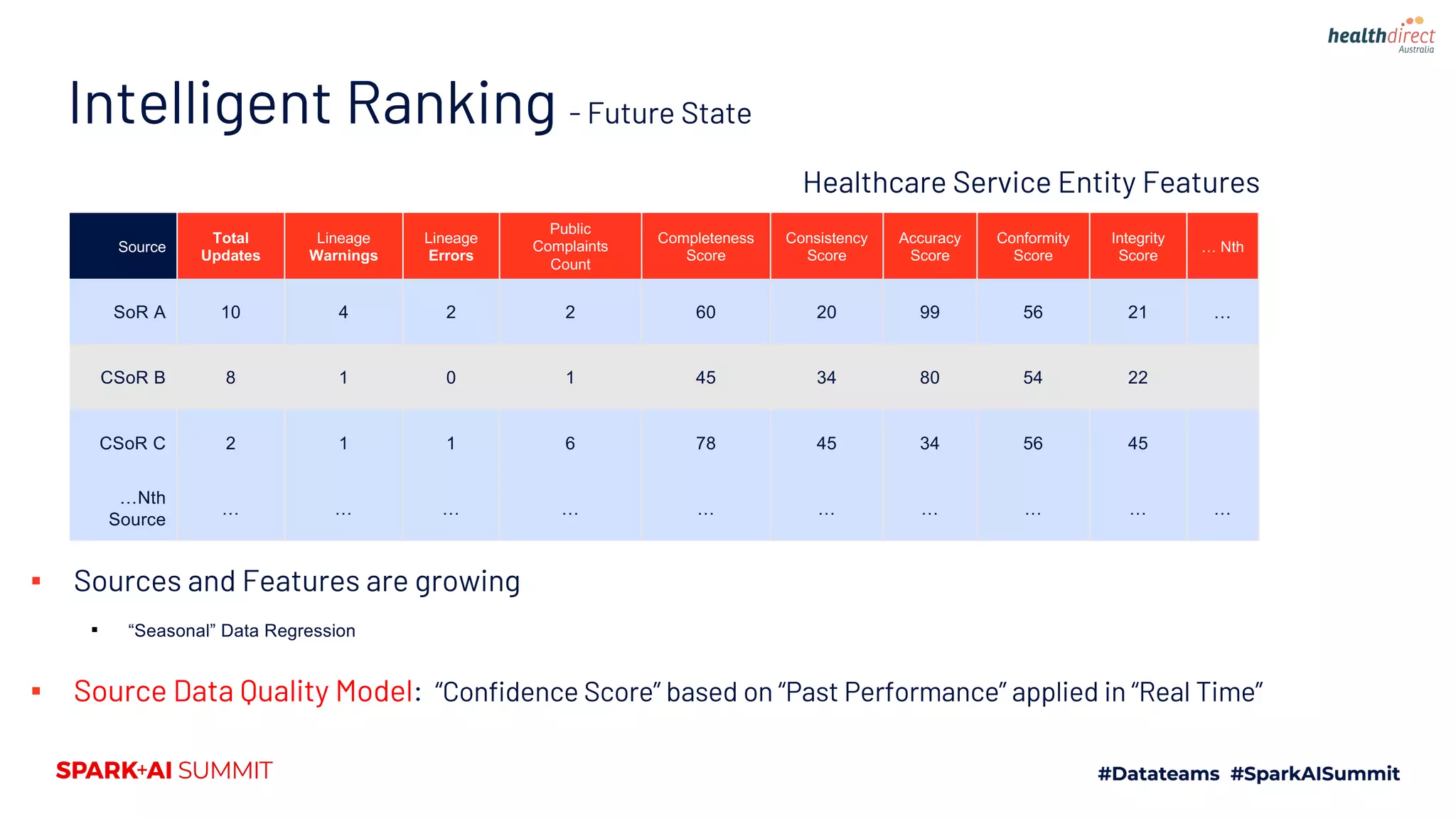

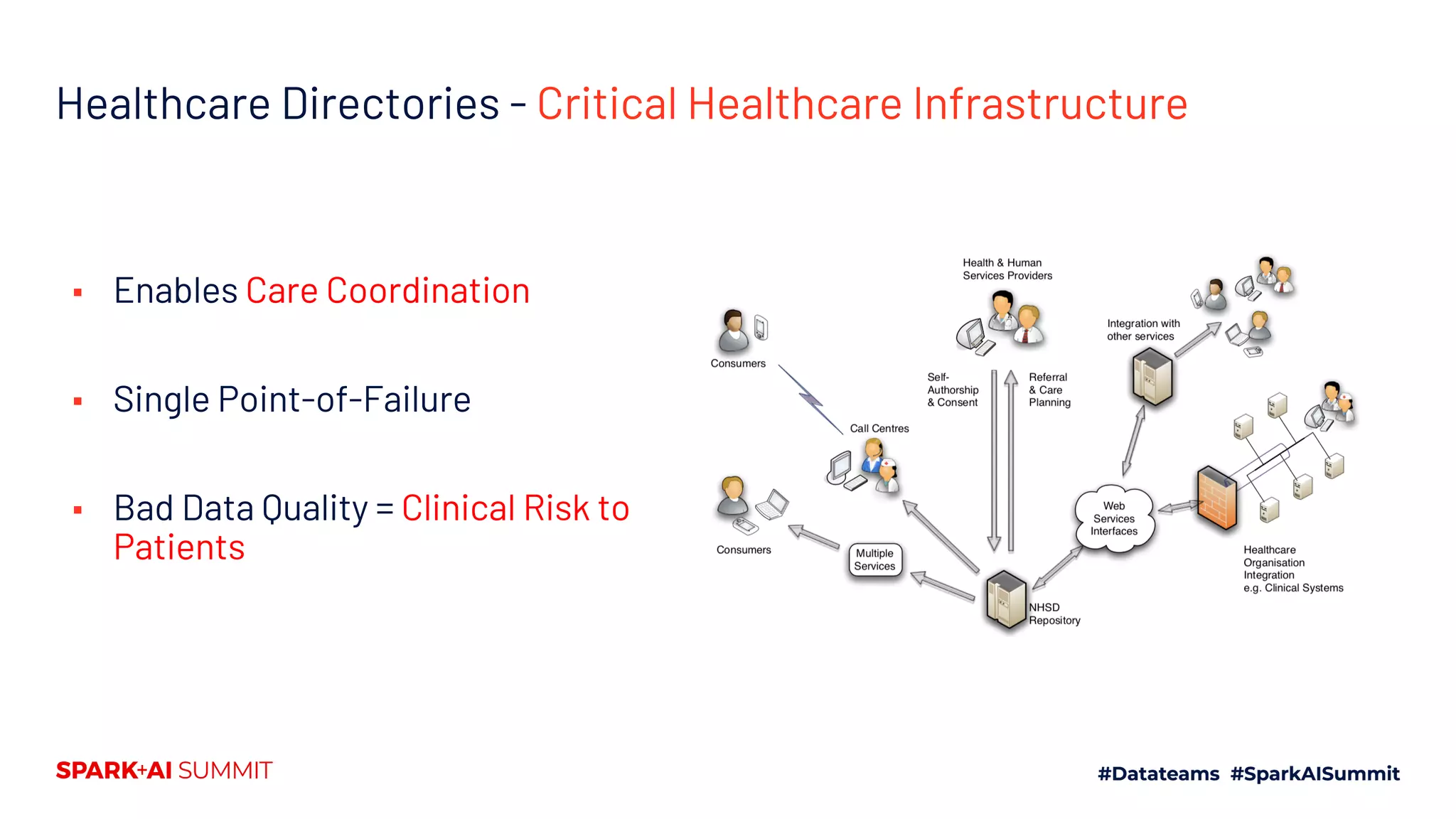

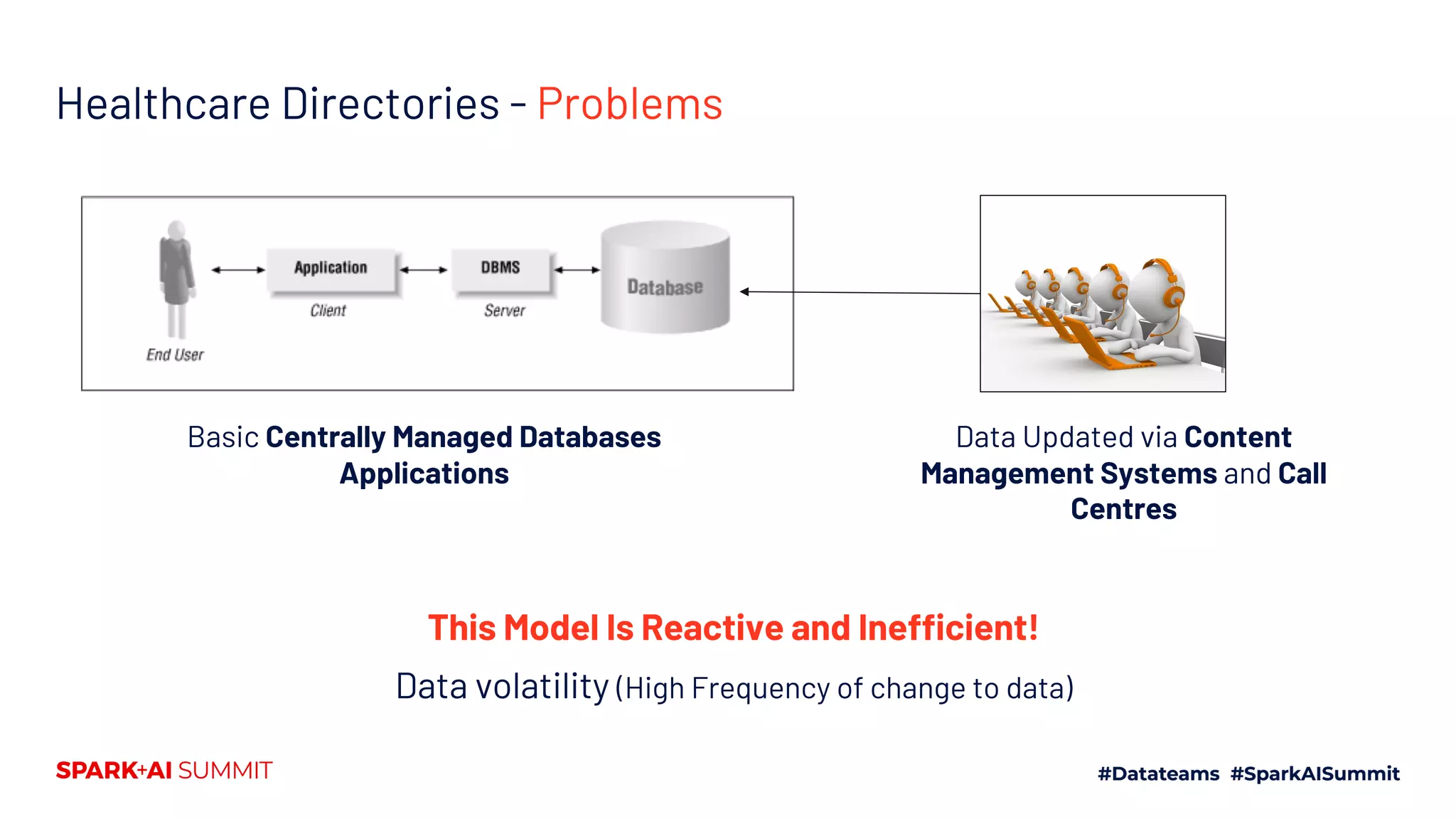

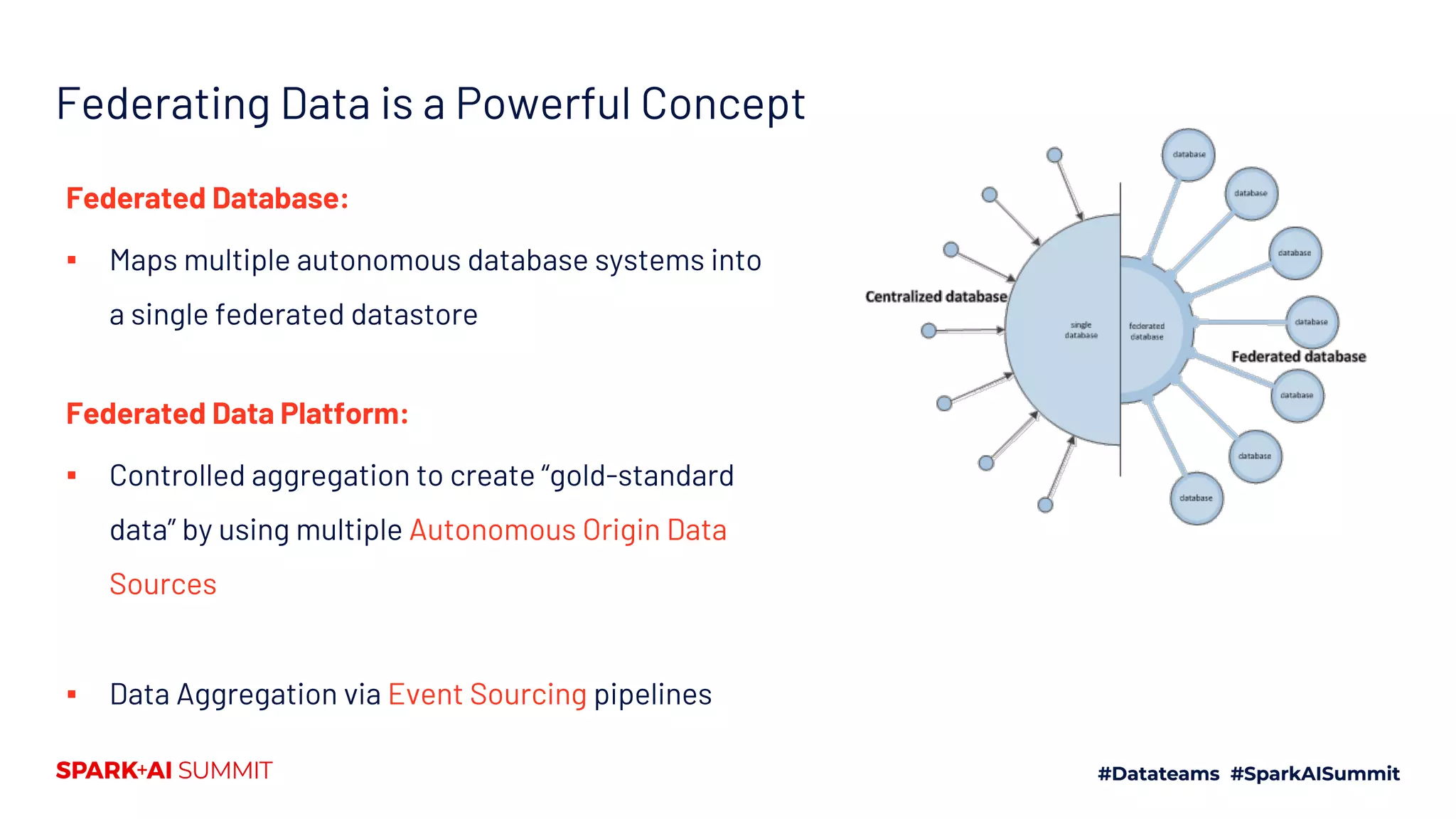

The document outlines the development of a federated data directory platform for public health to address the shortcomings of centralized data directories, which can lead to clinical risks due to poor data quality. It details the architecture, design patterns, and operational methodologies necessary for integrating various healthcare entities while ensuring high-quality, authoritative data sources. Additionally, the platform emphasizes intelligent ranking of systems of record to improve data accuracy and responsiveness in healthcare services.

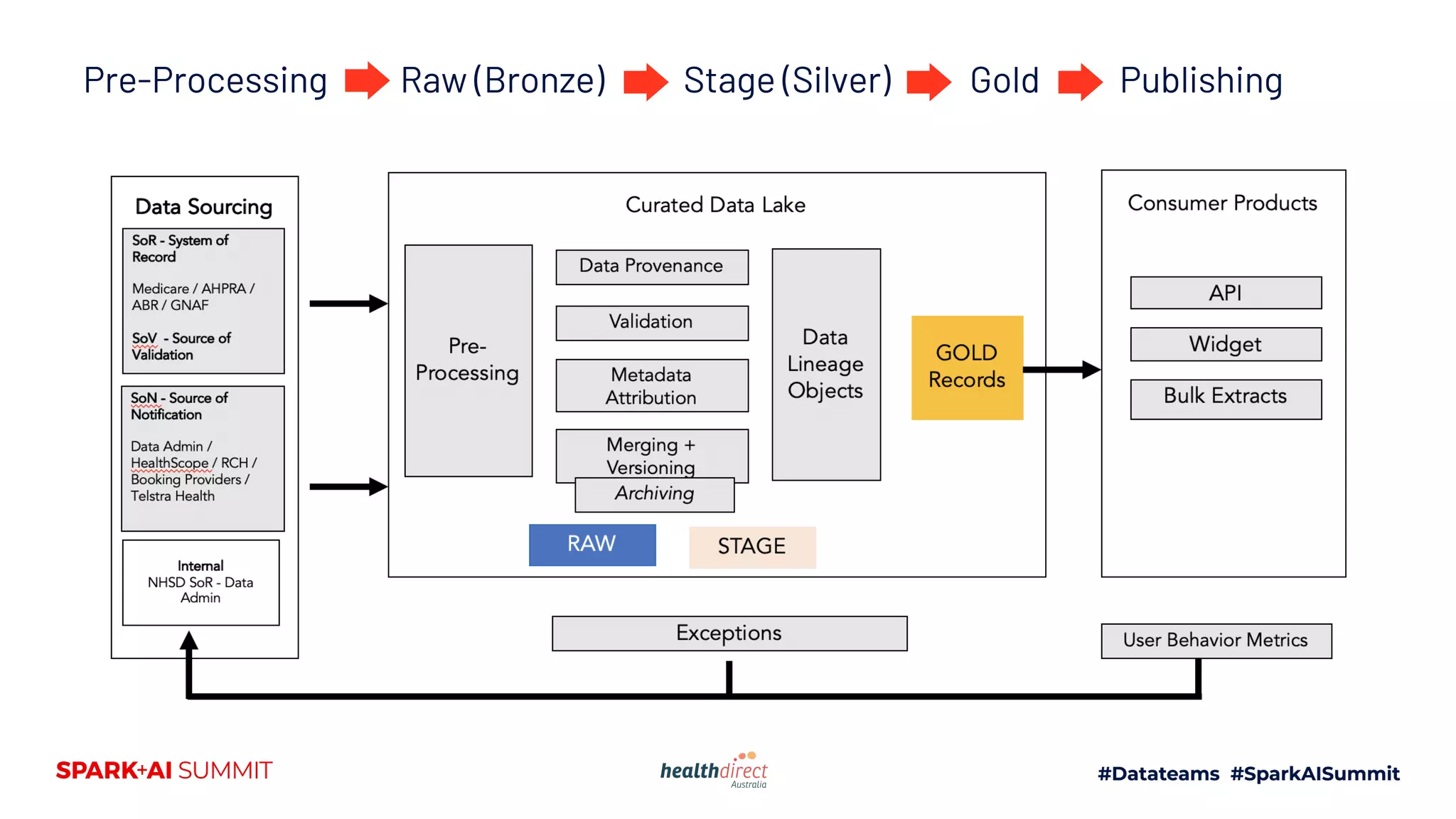

![Attribute Sourcing and Data Federation](https://image.slidesharecdn.com/643markpaulanshulbajpai-200709194513/75/Building-a-Federated-Data-Directory-Platform-for-Public-Health-13-2048.jpg)

Attribute Sourcing (Data Federation). Entity: Healthcare Service.

- Id: "561f10e4-0109-b99f-a2df-c059f9dc4a9b"
- name: "Cottesloe Medical Centre"
- bookingProviders:
  - Id: hotdoc, providerIdentifier: cottesloe-medical-centre
  - Id: healthengine, providerIdentifier: ctl-m-re
- practitionerRelations (Practitioner Relation: details about practitioners who work at a service):
  - pracId: c618860e-a69a, type: providerNumber, value: 2djfkdn3k34
  - pracId: hsjfk3e-53vd, type: providerNumber, value: dsfh4kslfls
- Calendar: openRules: […], closedRules: […]
- Contacts: Email: sss@gmail.com, Website: www.tt.com, Phone: 3242343

Source labels attached to the attributes: Medicare (SoV), Healthscope (SoN), Vendor Software (SoT), and Internal.
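The attribute-sourcing slide can be read as a per-attribute merge: each attribute of a Healthcare Service is taken from whichever source is preferred for it. The following is a minimal Python sketch of that idea, not the platform's implementation. The `federate` function, the `PREFERENCE` table, the pairing of attributes to sources, and the alternative name value are all hypothetical; the slide names the sources, but the ranking shown here is only illustrative.

```python
from typing import Any, Dict, List, Tuple

# Hypothetical ranking: for each attribute, sources from most to least preferred.
PREFERENCE: Dict[str, List[str]] = {
    "name": ["Medicare", "Healthscope", "Internal"],
    "bookingProviders": ["Vendor Software"],
    "contacts": ["Vendor Software", "Internal"],
}

# Values treated as "no data supplied" by a source.
EMPTY = (None, "", [], {})


def federate(
    candidates: Dict[str, Dict[str, Any]],
    preference: Dict[str, List[str]],
) -> Tuple[Dict[str, Any], Dict[str, str]]:
    """Build one record by taking each attribute from its best-ranked source.

    Returns the merged record and a map of attribute -> source it came from.
    """
    record: Dict[str, Any] = {}
    sourced_from: Dict[str, str] = {}
    for attribute, ranked_sources in preference.items():
        for source in ranked_sources:
            value = candidates.get(source, {}).get(attribute)
            if value not in EMPTY:
                record[attribute] = value
                sourced_from[attribute] = source
                break  # stop at the first source that has a usable value
    return record, sourced_from


# Partial views of the same service as each source might supply them.
candidates = {
    "Medicare": {"name": "Cottesloe Medical Centre"},
    "Healthscope": {"name": "Cottesloe Med Ctr"},  # made-up variant for illustration
    "Vendor Software": {
        "bookingProviders": [
            {"id": "hotdoc", "providerIdentifier": "cottesloe-medical-centre"}
        ],
        "contacts": {},  # vendor supplied nothing, so the next source is used
    },
    "Internal": {"contacts": {"phone": "3242343"}},
}

record, sourced_from = federate(candidates, PREFERENCE)
```

Keeping `sourced_from` alongside the merged record means every attribute can still be attributed to the system that supplied it, which is the point of sourcing per attribute rather than per record.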

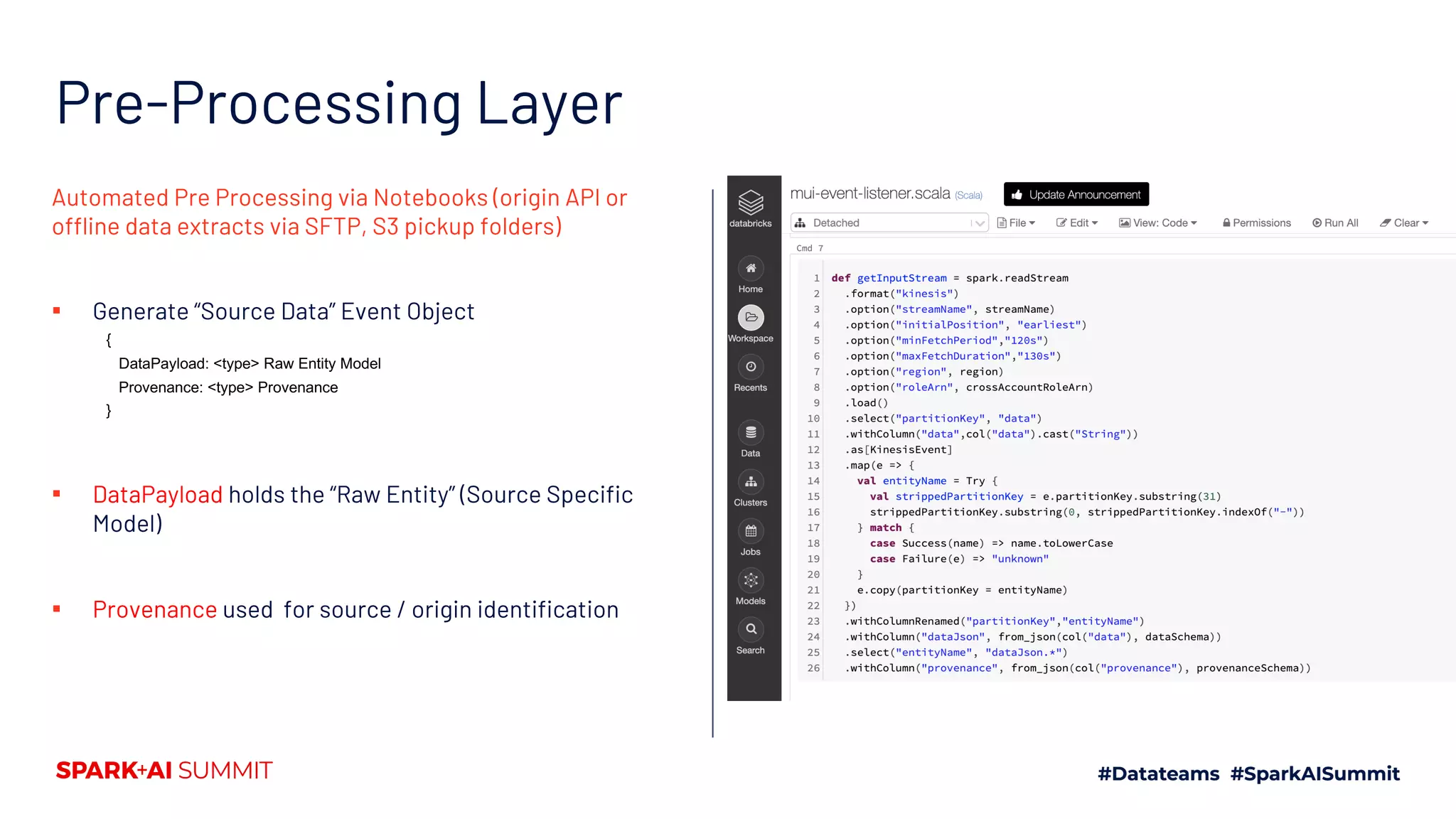

![Data Provenance Object](https://image.slidesharecdn.com/643markpaulanshulbajpai-200709194513/75/Building-a-Federated-Data-Directory-Platform-for-Public-Health-20-2048.jpg)

Data Provenance Object

- event_trace_id: 79d77056-c773-4496-ac0d-5223c49e06f0
- file_name: ext_provider_location_service_bf14feb8-538a-4f40-85eb-93b77d2c1704_2019-09-17T22:50:47Z.json
- source_file_name: ext_provider_location_service_bf14feb8-538a-4f40-85eb-93b77d2c1704_2019-09-17T22:50:47Z.json
- flow_name: nifi_flow_ext_provider_location_service_withstate_v1
- owner_agency: HDAInternal
- arrival_timestamp: 2019-09-17T22:50:46Z
- primary_key: ["pLocSvcId"]
- primary_key_temporal: TRUE
- data_in_load_strategy: DELTA
- unique_data_code: ext_provider_location_service
- version: v0.0.1
- source_identifier: TAL-2324

Trace an Event back to its Exact Origin

- Identify Upstream Source Identity & Raw Source File
- Inject Source (External) Identifier (e.g. Jira Ticket #)

Source Intention

- Target Entity (what this event intends to update)
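To make "trace an event back to its exact origin" concrete, here is a minimal Python sketch that models the provenance object from the slide and looks it up by `event_trace_id`. The field names and values come from the slide; the `Provenance` class, the `trace_origin` function, the in-memory `index`, and the example event are assumptions made for illustration, not the platform's actual storage or API.

```python
from dataclasses import dataclass
from typing import Dict, Tuple


@dataclass(frozen=True)
class Provenance:
    """Provenance fields as listed on the slide (types are assumed)."""
    event_trace_id: str
    file_name: str
    source_file_name: str
    flow_name: str
    owner_agency: str
    arrival_timestamp: str
    primary_key: Tuple[str, ...]
    primary_key_temporal: bool
    data_in_load_strategy: str
    unique_data_code: str
    version: str
    source_identifier: str


def trace_origin(event: dict, index: Dict[str, Provenance]) -> Provenance:
    """Return the provenance recorded for an event's trace id."""
    trace_id = event["event_trace_id"]
    try:
        return index[trace_id]
    except KeyError:
        raise LookupError(f"no provenance recorded for trace {trace_id}") from None


RAW_FILE = (
    "ext_provider_location_service_"
    "bf14feb8-538a-4f40-85eb-93b77d2c1704_2019-09-17T22:50:47Z.json"
)

provenance = Provenance(
    event_trace_id="79d77056-c773-4496-ac0d-5223c49e06f0",
    file_name=RAW_FILE,
    source_file_name=RAW_FILE,
    flow_name="nifi_flow_ext_provider_location_service_withstate_v1",
    owner_agency="HDAInternal",
    arrival_timestamp="2019-09-17T22:50:46Z",
    primary_key=("pLocSvcId",),
    primary_key_temporal=True,
    data_in_load_strategy="DELTA",
    unique_data_code="ext_provider_location_service",
    version="v0.0.1",
    source_identifier="TAL-2324",
)

# Hypothetical in-memory index; a real system would persist this.
index = {provenance.event_trace_id: provenance}

# Hypothetical downstream event that carries only its trace id.
event = {"event_trace_id": "79d77056-c773-4496-ac0d-5223c49e06f0"}
origin = trace_origin(event, index)
```

With the trace id carried on every downstream event, `origin.source_file_name` gives the raw source file and `origin.source_identifier` gives the injected external identifier.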

![Data Lineage Object event_trace_id: 79d77056-c773-

4496-ac0d-5223c49e06f0

application_id: STAGE-01

application_name: STAGE

application_description: Versioned

Entity Data

application_version: 1.0.0

application_state:

STAGE_REFERENCING

dms_event_id: 4000ae0b-6b08-4dce-

a432-fff8e608e7ec

source_dms_event_id: 4de1802c-

70e6-4552-b2b0-4349bfc3a073

operation: [{

operation_name:

ENTITY_REFERENCING,

operation_rule_name:

plsParsing,

operation_result: SUCCESS,

failure_severity: “”,

attributes: [“”],

created_time: 2019-09-

30T22:39:47Z

}],

created_time: 2019-09-

30T22:39:47Z

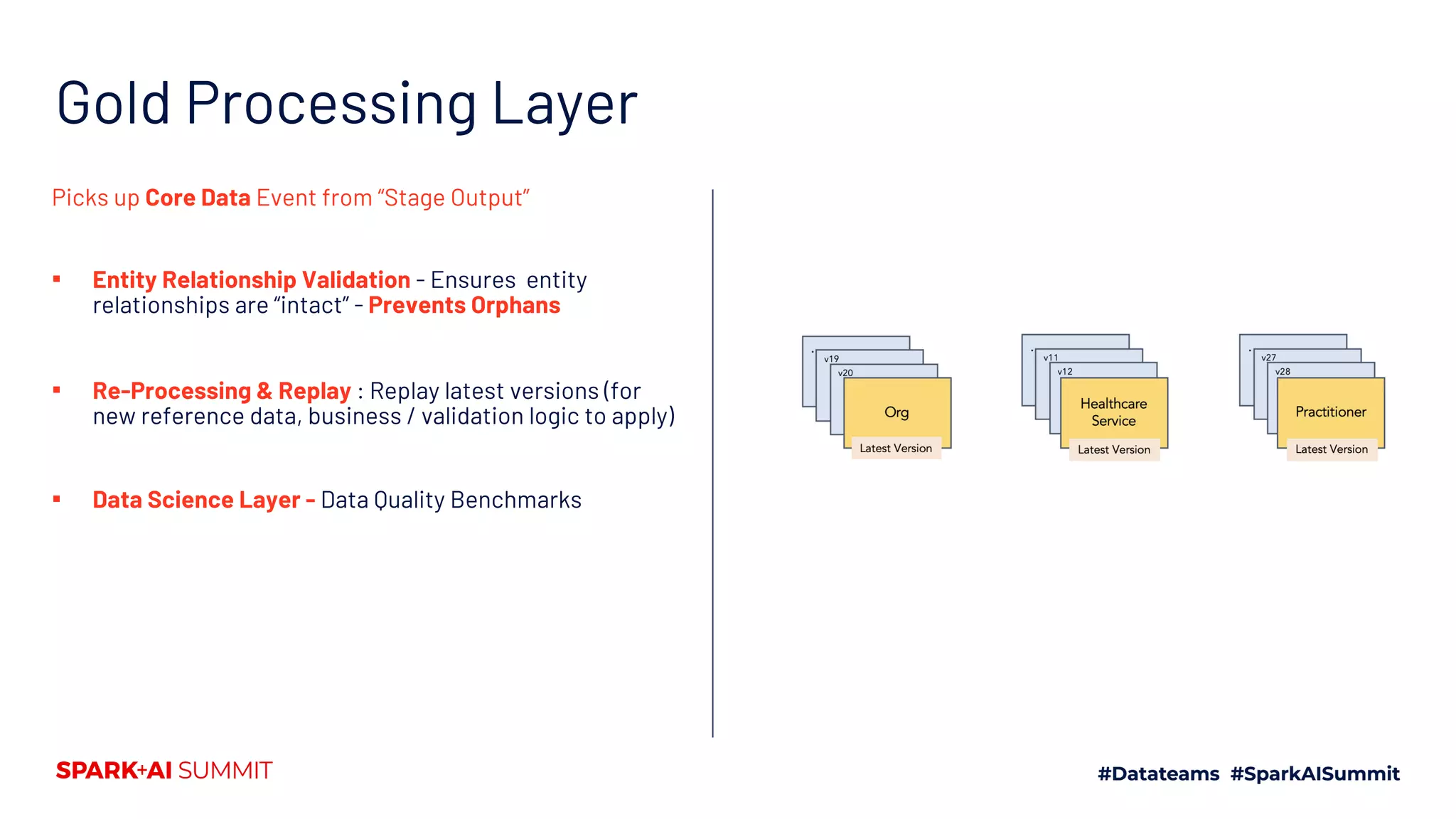

Encapsulate Operation Outcomes that occur to Entity

Events

▪ Capture deviation of Data Quality

▪ Exceptions / Warnings

▪ Exceptions - Fix Data

▪ Warning - Improve Data (Quality)

▪ Visibility of End to End Data Flow (via Operational

Outcomes Summary)](https://image.slidesharecdn.com/643markpaulanshulbajpai-200709194513/75/Building-a-Federated-Data-Directory-Platform-for-Public-Health-21-2048.jpg)
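As a rough illustration of an "Operational Outcomes Summary", the Python sketch below counts operation outcomes across lineage objects and separates records that need fixing from those that could be improved. The first record mirrors the slide. The second record, the `FAILURE` result, and the `EXCEPTION` and `WARNING` severity values are assumptions, since the slide only shows a successful operation with an empty `failure_severity`; `summarise_outcomes` and `triage` are hypothetical helper names.

```python
from collections import Counter
from typing import Dict, Iterable, List, Tuple

OutcomeKey = Tuple[str, str, str]  # (operation_name, operation_result, failure_severity)


def summarise_outcomes(lineage_records: Iterable[dict]) -> Counter:
    """Count operations by name, result, and severity across lineage records."""
    summary: Counter = Counter()
    for record in lineage_records:
        for op in record.get("operation", []):
            key: OutcomeKey = (
                op["operation_name"],
                op["operation_result"],
                op.get("failure_severity", ""),
            )
            summary[key] += 1
    return summary


def triage(lineage_records: Iterable[dict]) -> Dict[str, List[str]]:
    """Group event ids by follow-up action: exceptions are fixed, warnings improved."""
    actions: Dict[str, List[str]] = {"fix_data": [], "improve_data": []}
    for record in lineage_records:
        severities = {op.get("failure_severity", "") for op in record.get("operation", [])}
        if "EXCEPTION" in severities:  # assumed severity value
            actions["fix_data"].append(record["dms_event_id"])
        elif "WARNING" in severities:  # assumed severity value
            actions["improve_data"].append(record["dms_event_id"])
    return actions


records = [
    {  # values taken from the slide
        "event_trace_id": "79d77056-c773-4496-ac0d-5223c49e06f0",
        "application_state": "STAGE_REFERENCING",
        "dms_event_id": "4000ae0b-6b08-4dce-a432-fff8e608e7ec",
        "operation": [{
            "operation_name": "ENTITY_REFERENCING",
            "operation_rule_name": "plsParsing",
            "operation_result": "SUCCESS",
            "failure_severity": "",
        }],
    },
    {  # hypothetical record added to show a non-success outcome
        "event_trace_id": "hypothetical-trace",
        "application_state": "STAGE_REFERENCING",
        "dms_event_id": "hypothetical-event",
        "operation": [{
            "operation_name": "ENTITY_REFERENCING",
            "operation_rule_name": "plsParsing",
            "operation_result": "FAILURE",
            "failure_severity": "WARNING",
        }],
    },
]

summary = summarise_outcomes(records)
actions = triage(records)
```

Counting by the full (name, result, severity) key keeps successes, warnings, and exceptions visible side by side for each operation.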