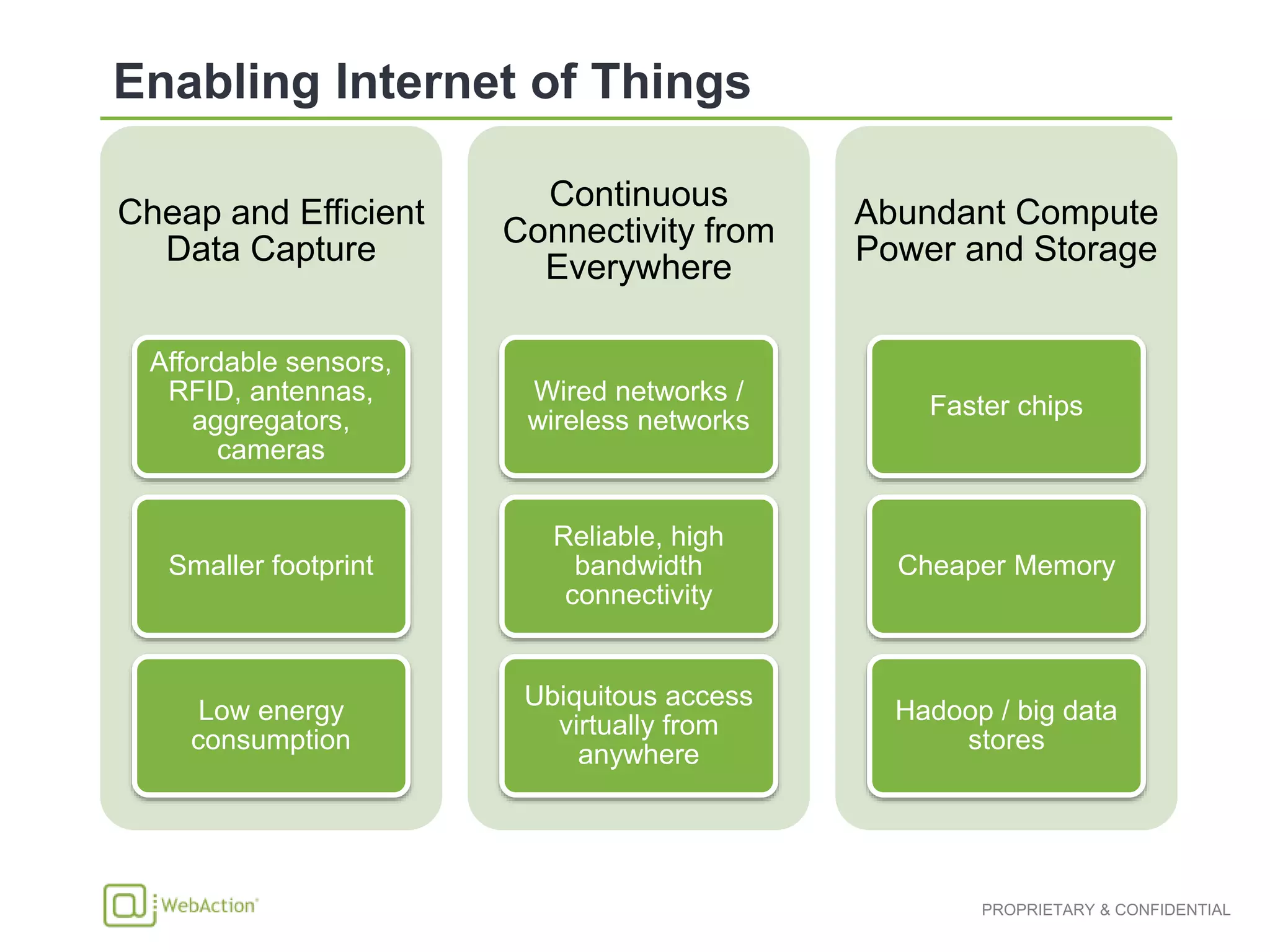

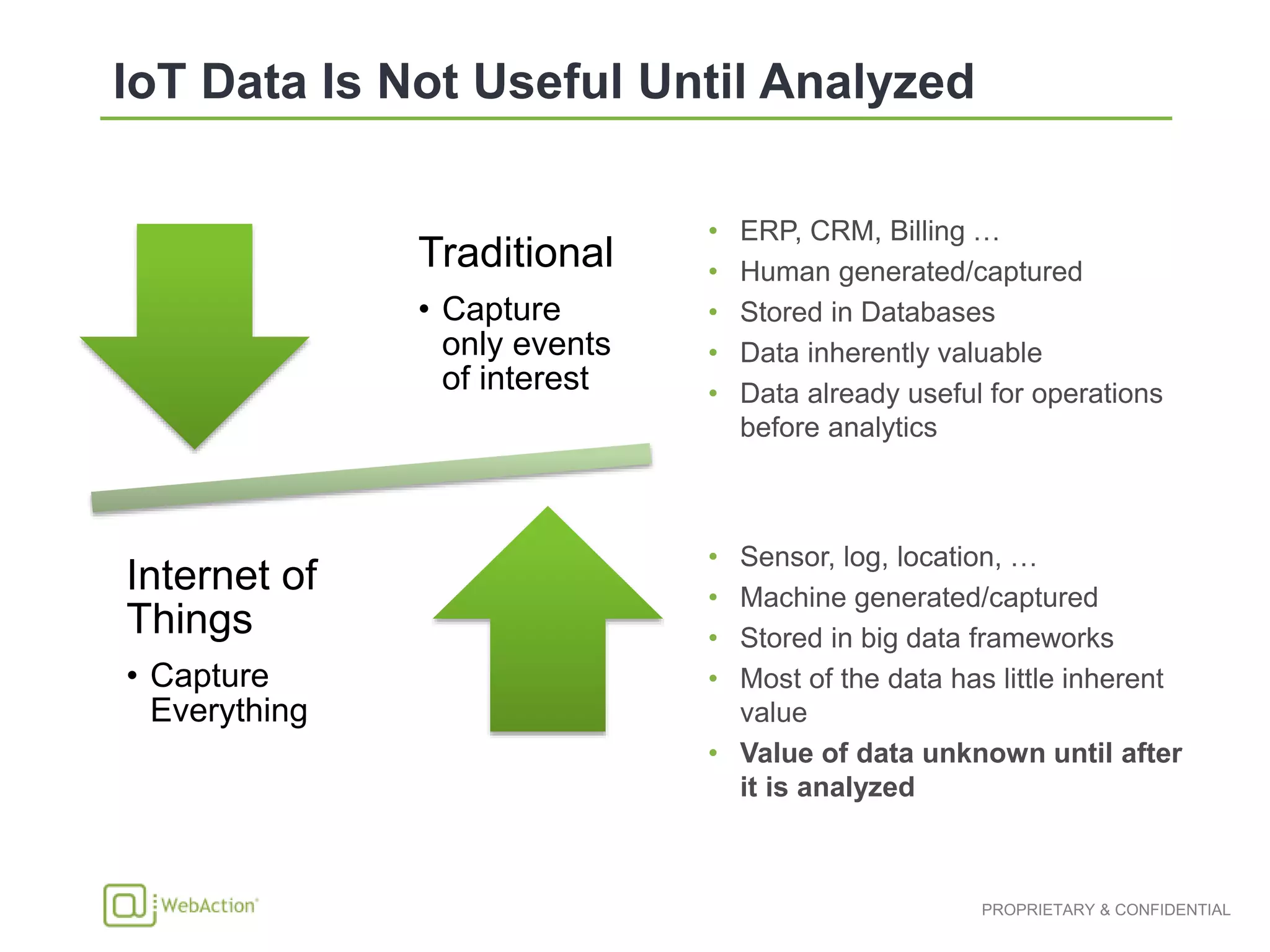

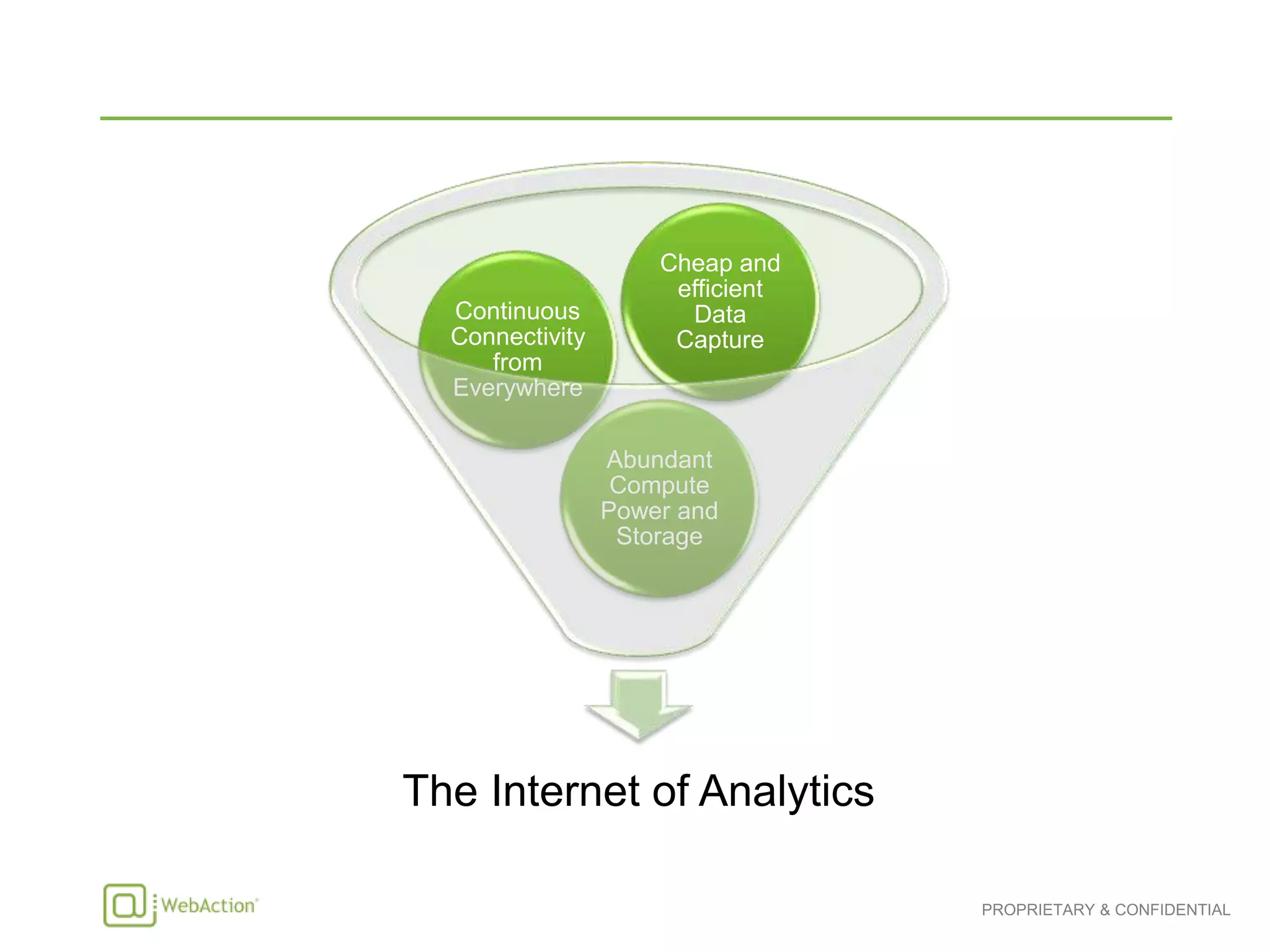

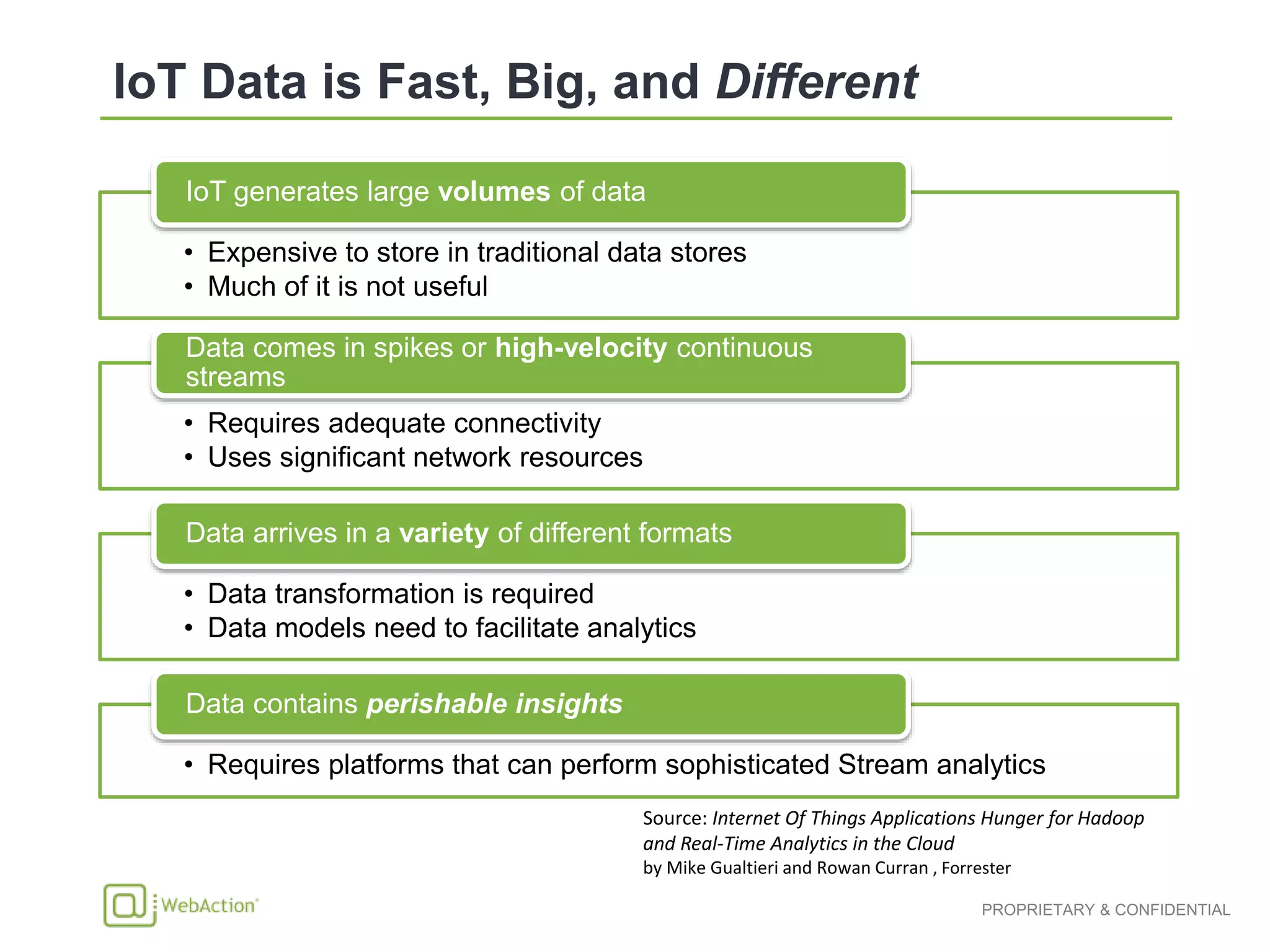

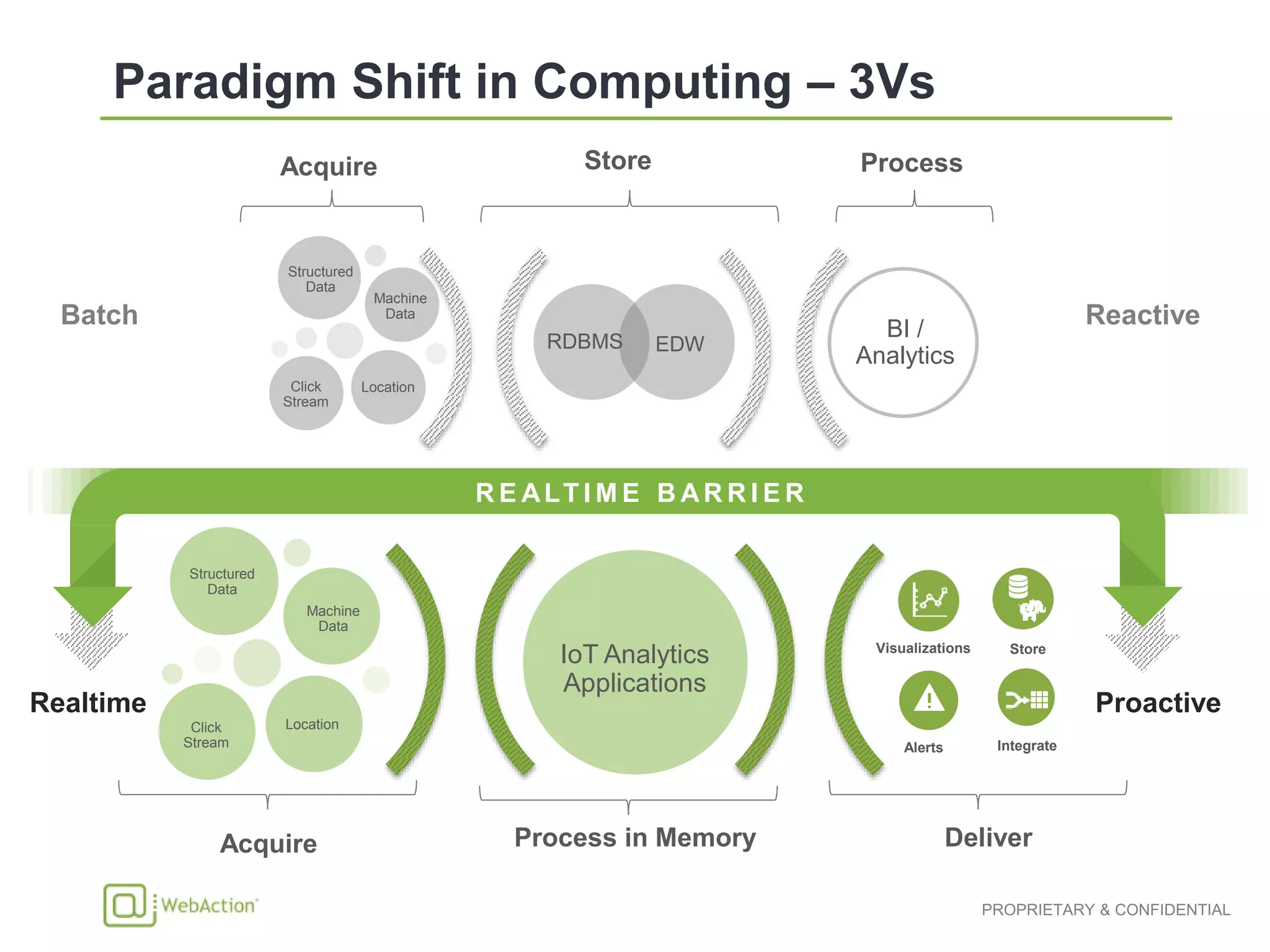

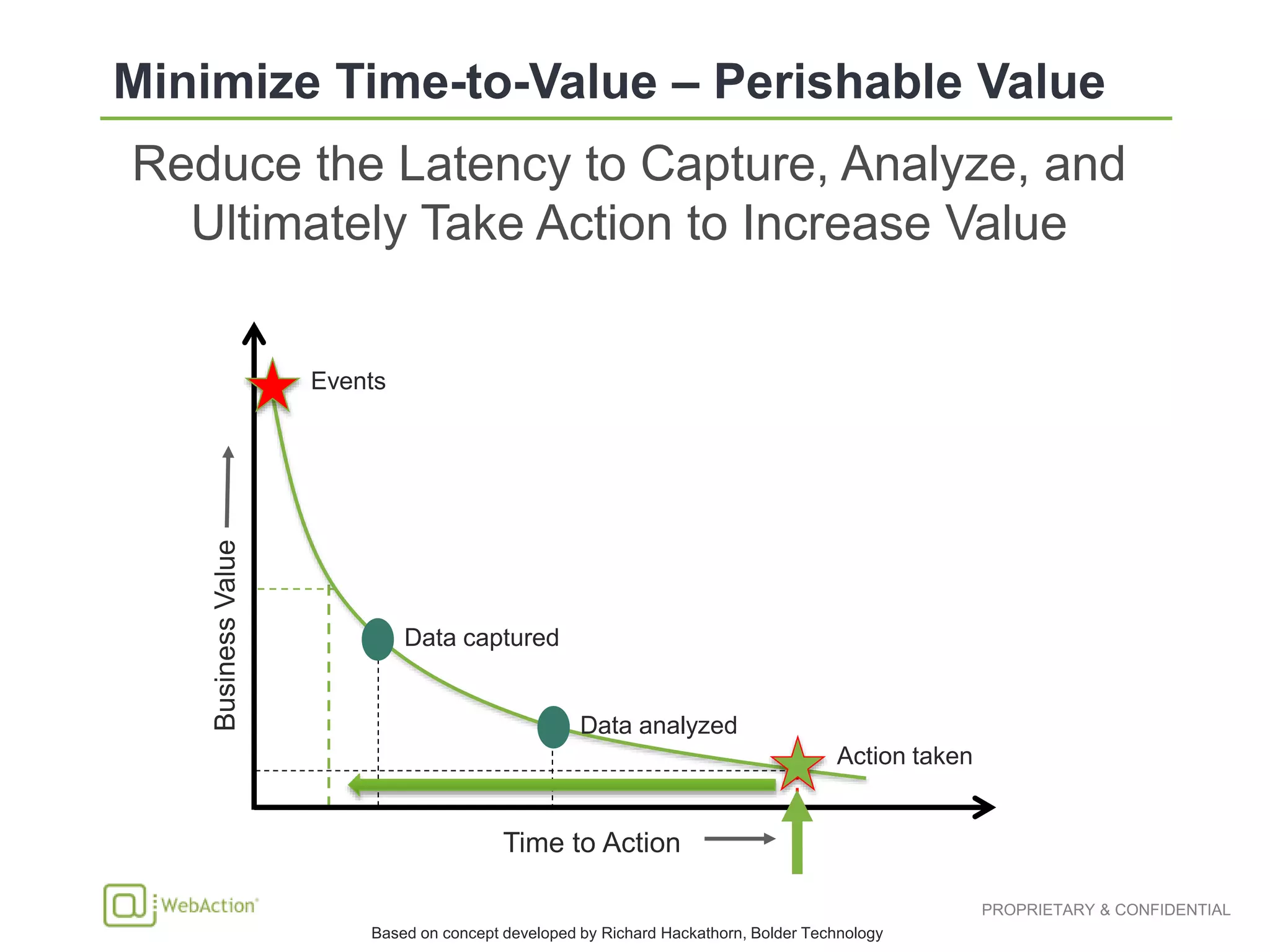

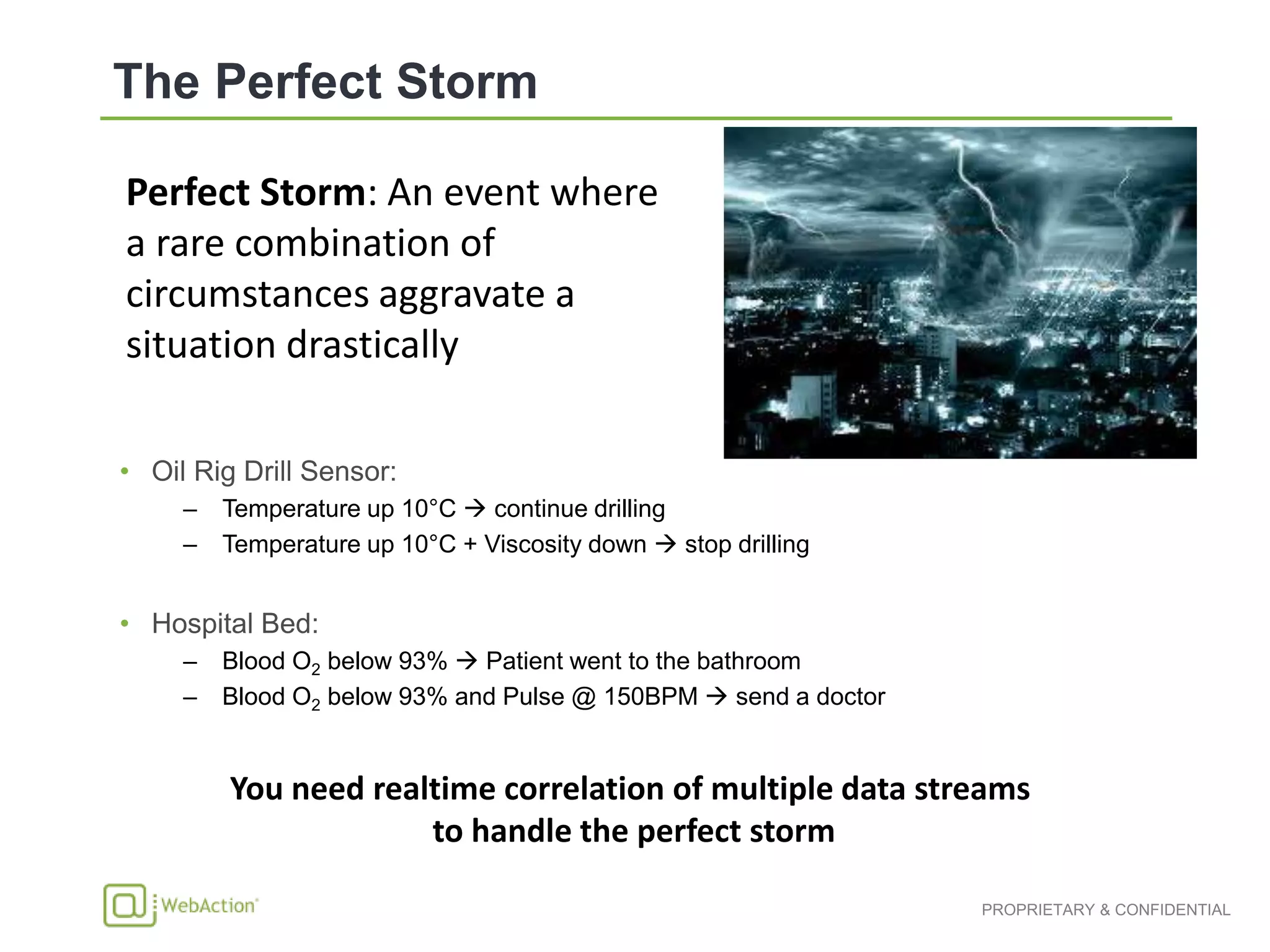

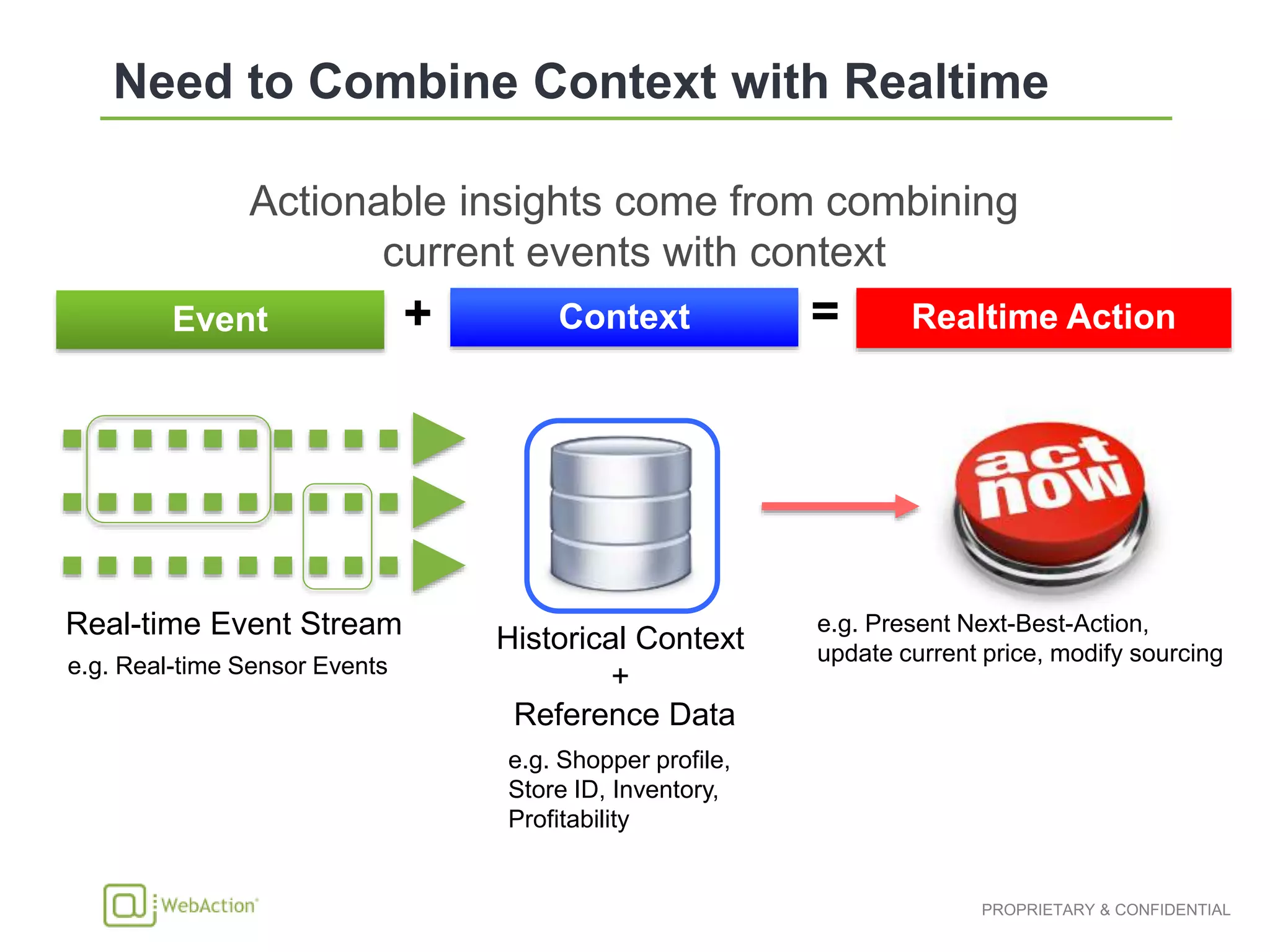

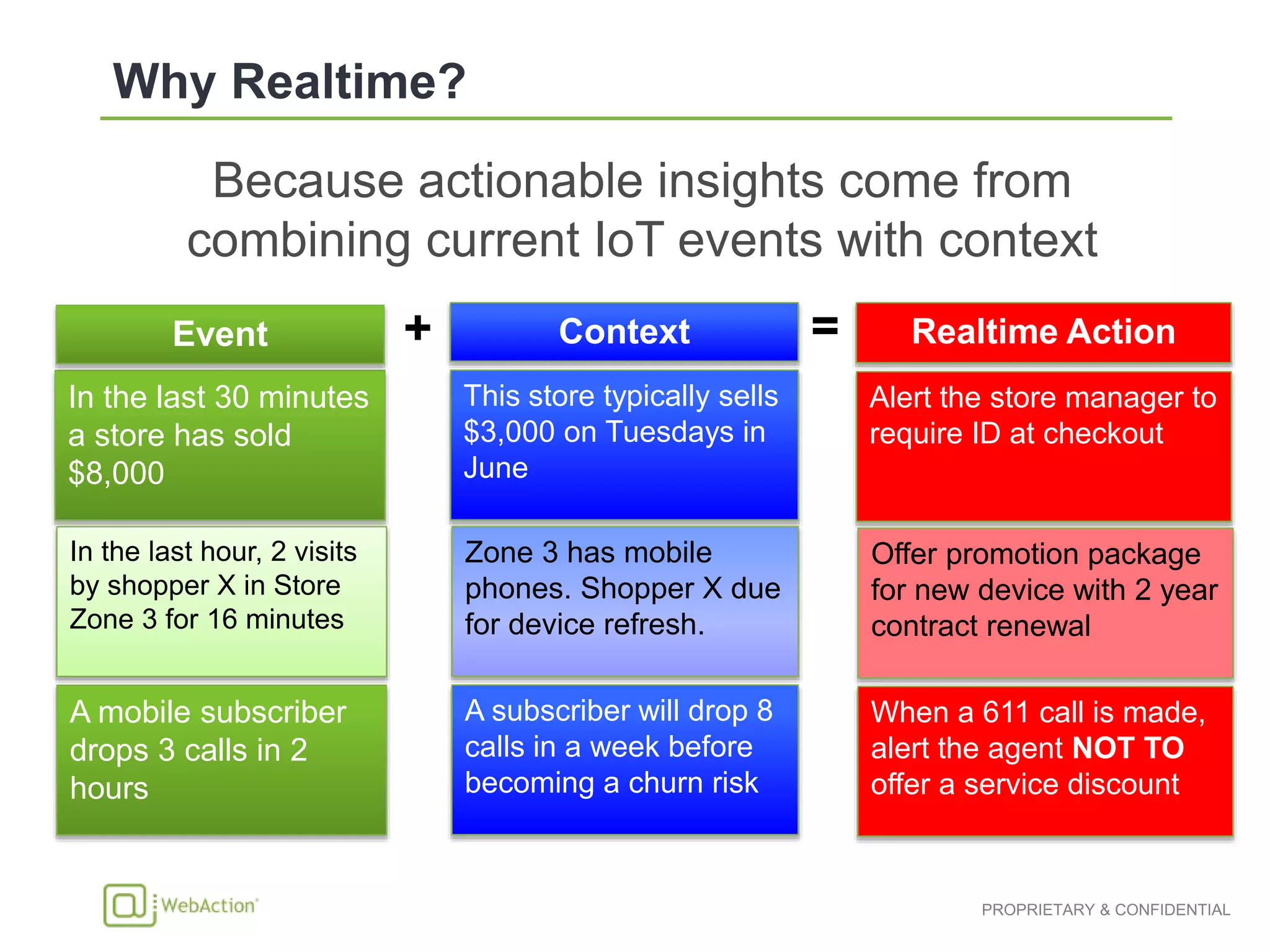

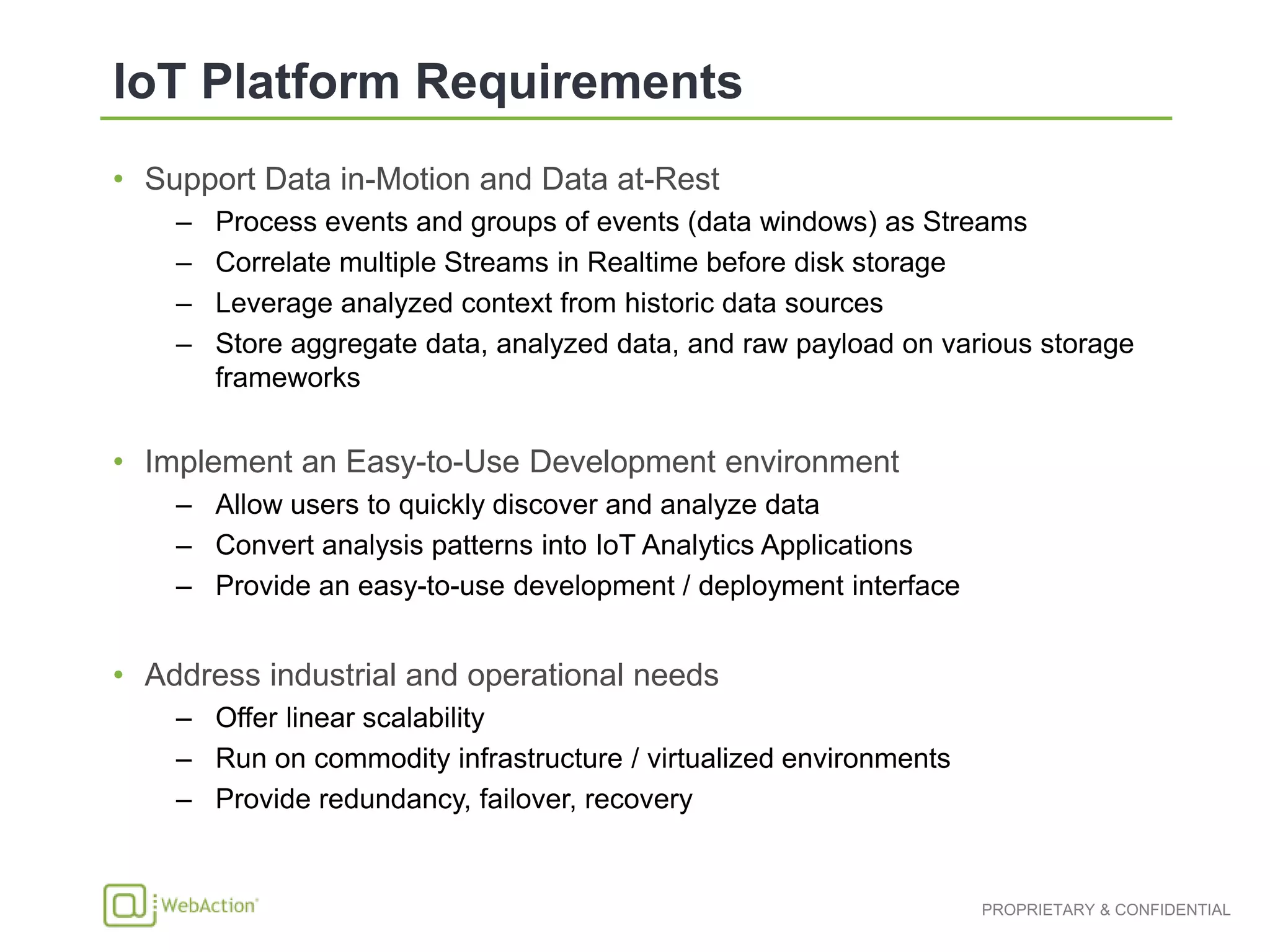

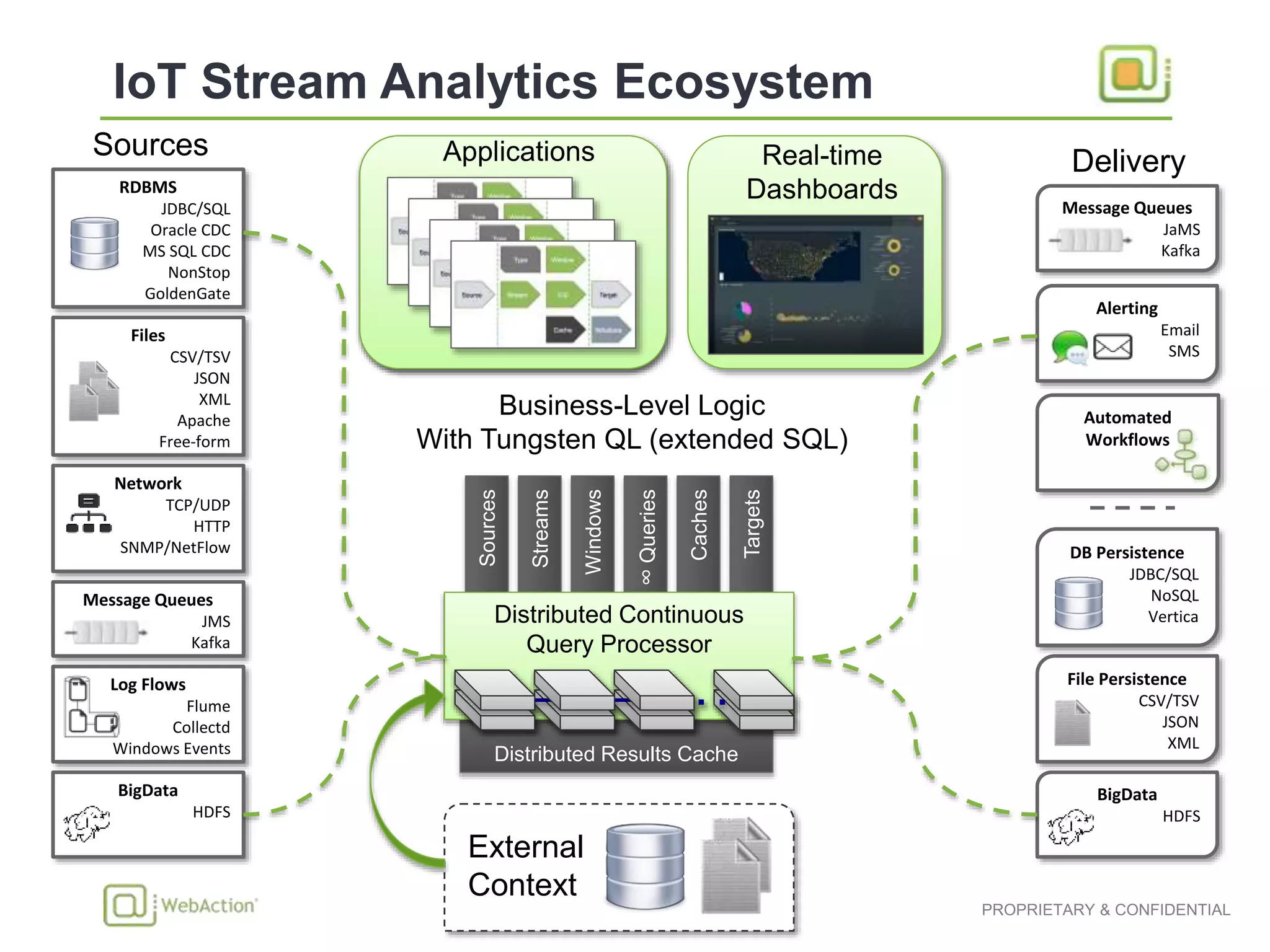

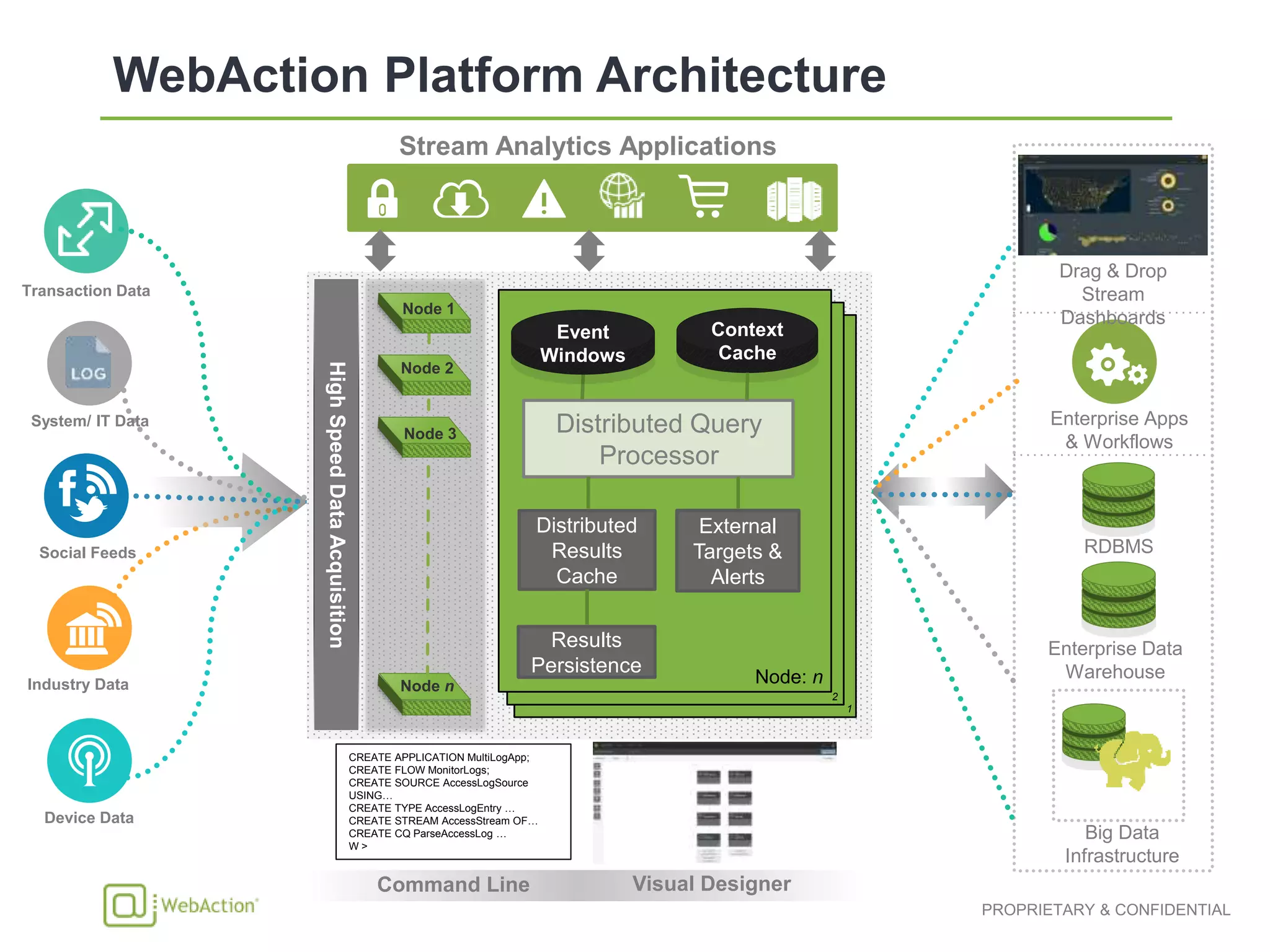

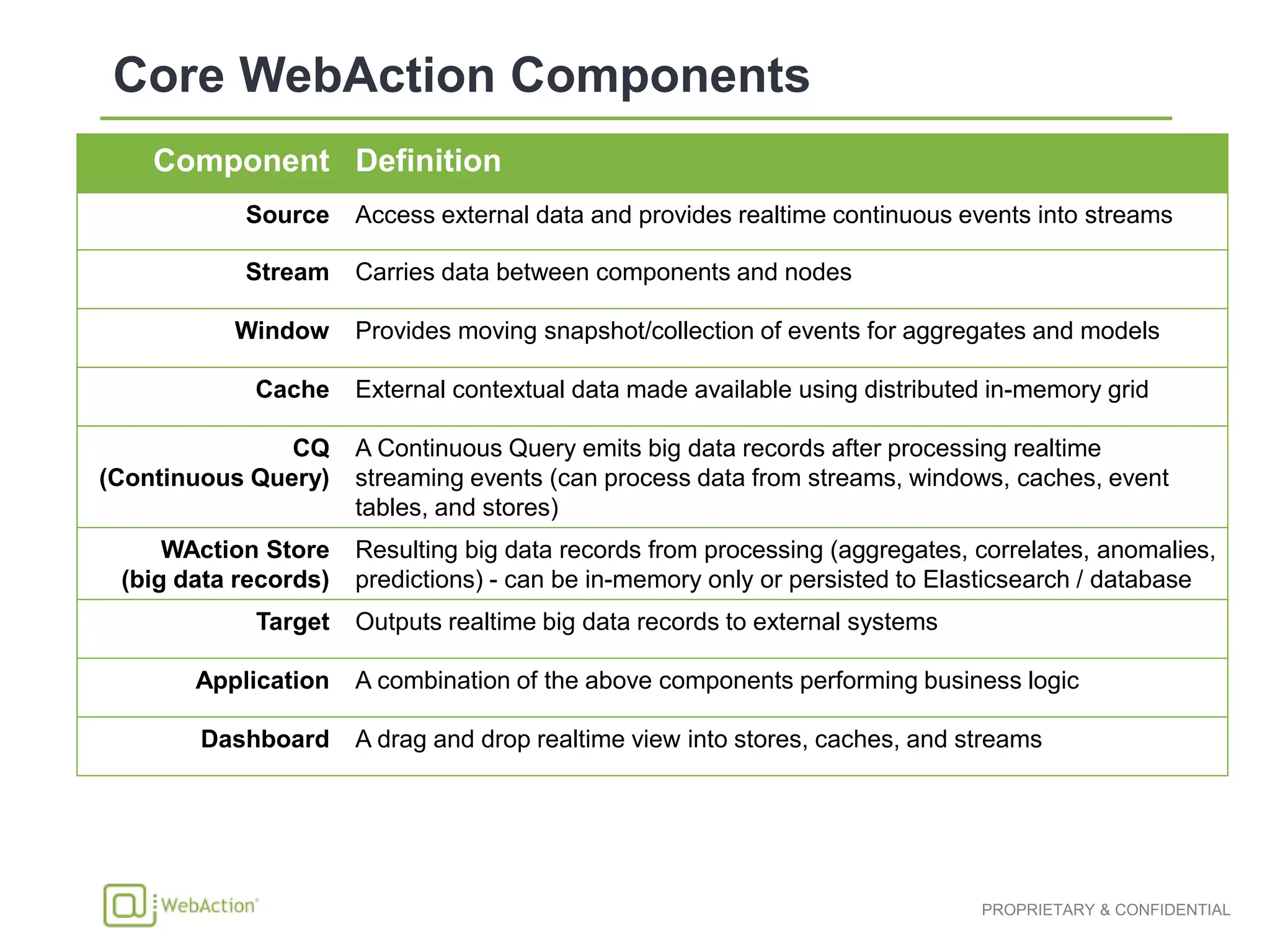

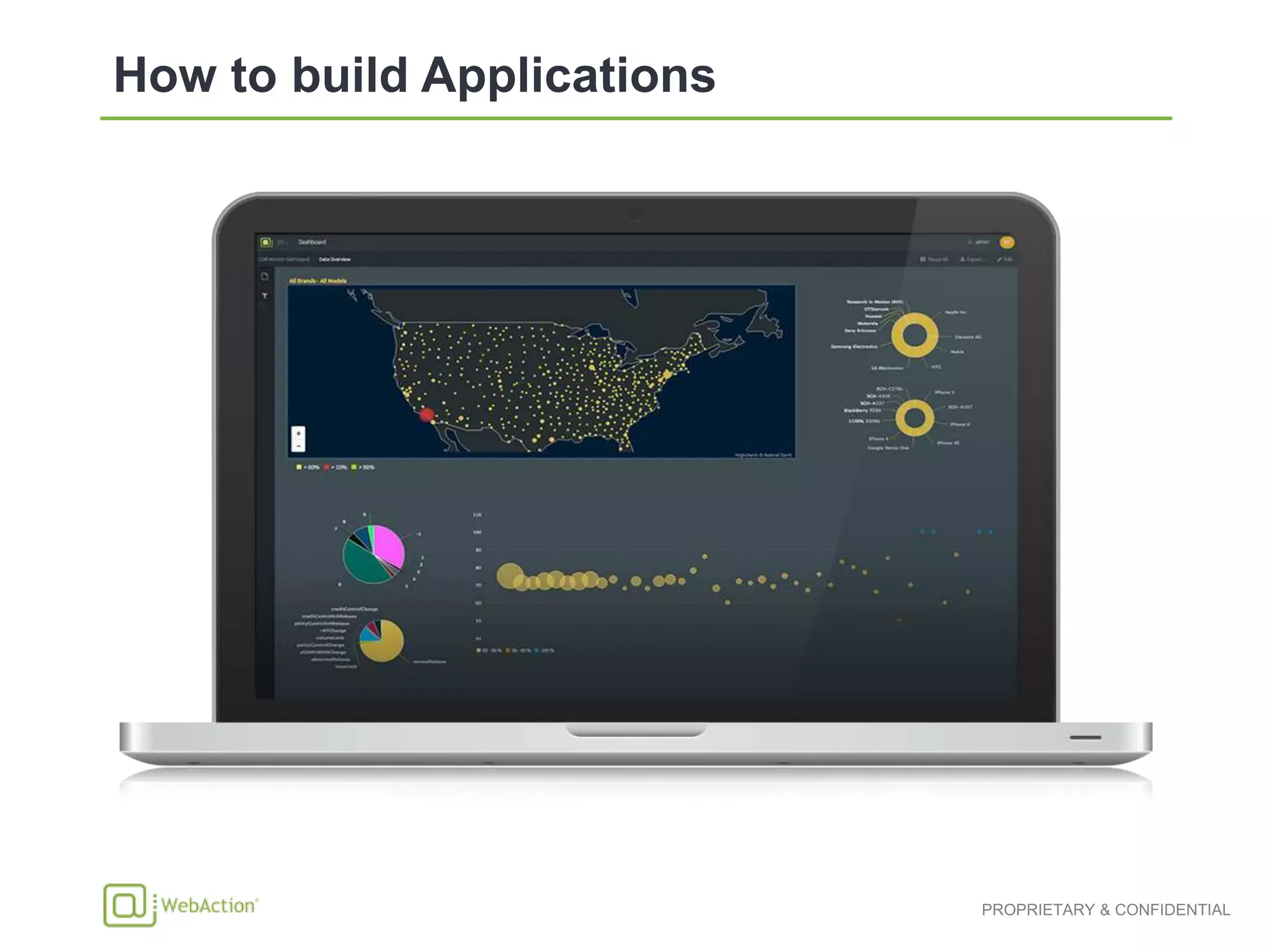

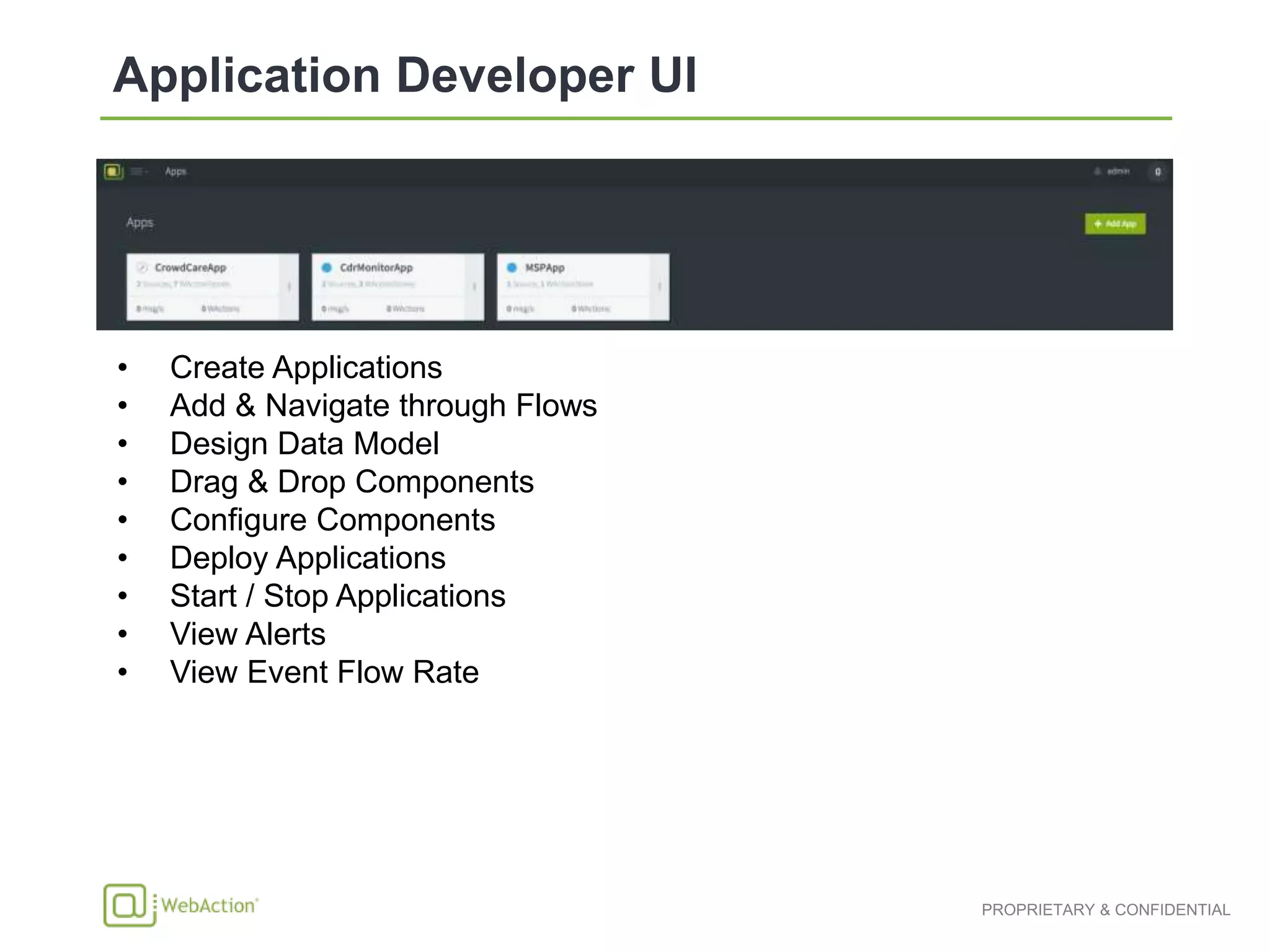

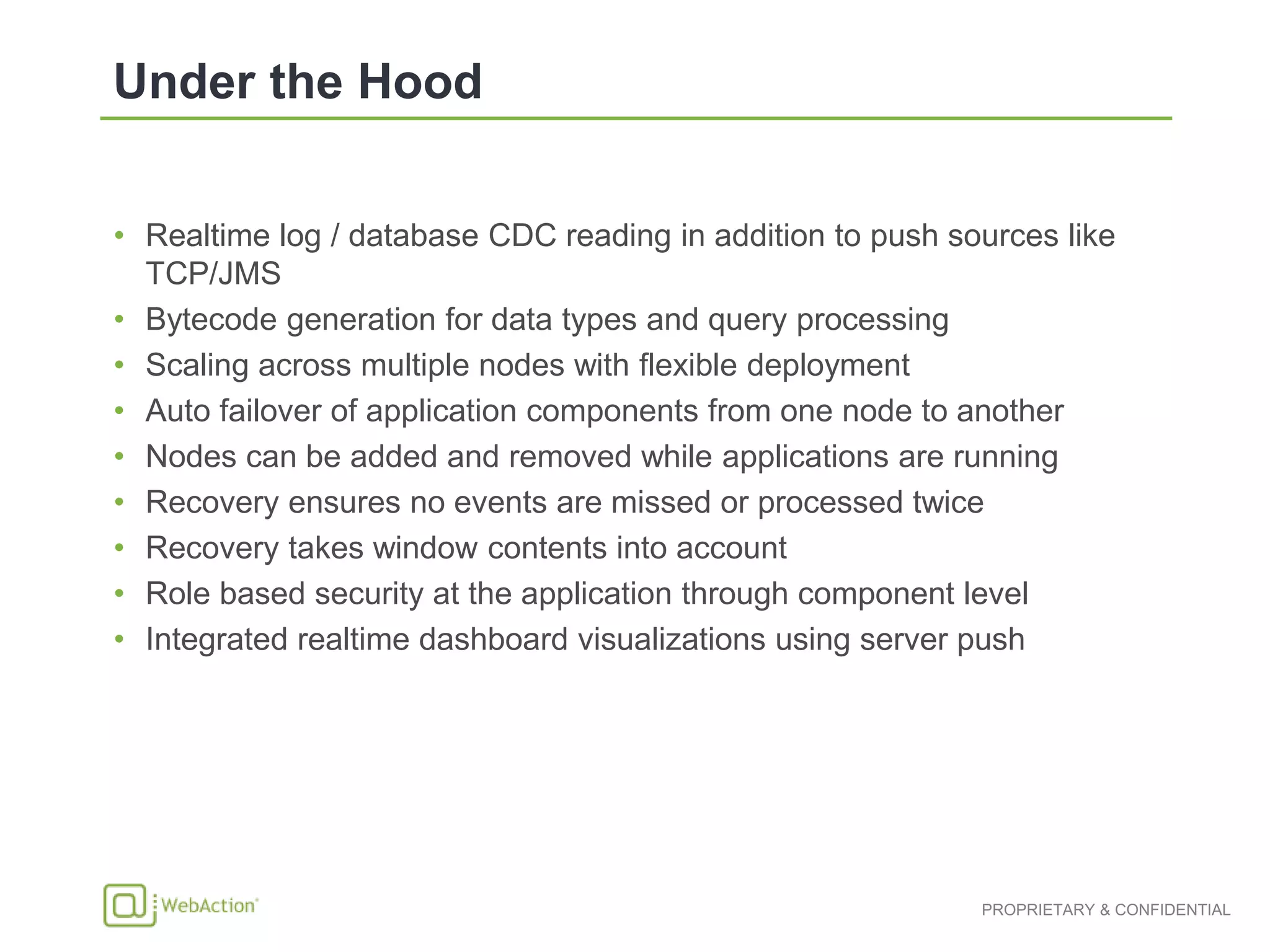

This document covers the challenges of processing high-velocity IoT data streams for analytics and the approaches that address them. It identifies three requirements for turning raw data into actionable insights: continuous connectivity, efficient data capture, and real-time processing. The value of this data comes from combining real-time events with contextual information, which in turn depends on a robust analytics platform and scalable infrastructure.
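
To make the idea of combining real-time events with contextual information concrete, here is a minimal Python sketch. It is an illustration under stated assumptions, not the approach of any particular platform: the device IDs, the `DEVICE_CONTEXT` table, the field names (`device_id`, `temp_c`, `max_temp_c`, `site`), and the functions `enrich` and `detect_alerts` are all hypothetical. Each incoming event is merged with static metadata for its device, and a small rolling window per device is compared against a limit that comes from that metadata.

```python
from collections import defaultdict, deque

# Hypothetical contextual data: static metadata keyed by device ID.
DEVICE_CONTEXT = {
    "sensor-1": {"site": "plant-a", "max_temp_c": 80.0},
    "sensor-2": {"site": "plant-b", "max_temp_c": 60.0},
}


def enrich(event, context):
    """Merge a raw event with its device's contextual record, if one exists."""
    meta = context.get(event["device_id"], {})
    return {**event, **meta}


def detect_alerts(events, context, window=3):
    """Yield an alert whenever a device's rolling mean exceeds its contextual limit.

    `events` can be any iterable, including a generator fed by a live stream,
    so readings are processed one at a time as they arrive.
    """
    recent = defaultdict(lambda: deque(maxlen=window))
    for event in events:
        enriched = enrich(event, context)
        readings = recent[enriched["device_id"]]
        readings.append(enriched["temp_c"])
        mean = sum(readings) / len(readings)
        limit = enriched.get("max_temp_c")
        # Devices without context have no limit and never raise an alert here.
        if limit is not None and mean > limit:
            yield {
                "device_id": enriched["device_id"],
                "site": enriched.get("site"),
                "mean_temp_c": mean,
                "limit_c": limit,
            }
```

The raw reading alone says nothing about whether a temperature is a problem; the per-device limit and site only become available once the event is joined with context. In a real deployment the context lookup would more likely be a cache or database, and the in-memory windows would be managed by a stream-processing framework.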