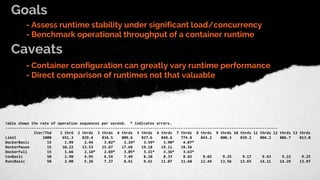

The document discusses the limitations of relying on standard container lifecycle operations for performance guarantees and presents Bucketbench, a tool for benchmarking the performance of container runtimes such as Docker, containerd, and runc. It covers Bucketbench's usage, configuration, and goals, and highlights how concurrent load affects runtime performance. It also outlines key findings and areas for future improvement.

![https://github.com/estesp/bucketbench

A Go-based framework for benchmarking container lifecycle operations (using concurrency and load) against docker, containerd (0.2.x and 1.0), and runc.

The YAML file provided via the --benchmark flag will determine which lifecycle container commands to run against which container runtimes, specifying iterations and number of concurrent threads. Results will be displayed afterwards.

Usage:

bucketbench run [flags]

Flags:

-b, --benchmark string YAML file with benchmark definition

-h, --help help for run

-s, --skip-limit Skip 'limit' benchmark run

-t, --trace Enable per-container tracing during benchmark runs

Global Flags:

--log-level string set the logging level (info,warn,err,debug) (default "warn")

How can we benchmark various container runtime options?

examples/basic.yaml

name: BasicBench
image: alpine:latest
rootfs: /home/estesp/containers/alpine
detached: true
drivers:
  -
    type: Docker
    threads: 5
    iterations: 15
  -
    type: Runc
    threads: 5
    iterations: 50
commands:
  - run
  - stop
  - remove](https://image.slidesharecdn.com/mobysummitl-170918195709/85/Bucketbench-Benchmarking-Container-Runtime-Performance-6-320.jpg)
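The YAML definition above pairs each driver with a thread count and an iteration count and lists the lifecycle commands to run. The Go sketch below shows one way such a definition could generate concurrent load. It assumes that each of the `threads` goroutines runs the whole `commands` list `iterations` times, and it replaces real runtime invocations with a hypothetical `runCommand` stub that only sleeps. The names `DriverConfig`, `Benchmark`, `runCommand`, and `runDriver` are illustrative, not bucketbench's own types, and the YAML values are hand-copied into a struct rather than parsed.

```go
package main

import (
	"fmt"
	"sync"
	"time"
)

// DriverConfig mirrors one entry under "drivers:" in examples/basic.yaml.
// Hypothetical type; bucketbench's real definitions may differ.
type DriverConfig struct {
	Type       string
	Threads    int
	Iterations int
}

// Benchmark mirrors the top-level keys of examples/basic.yaml (subset).
type Benchmark struct {
	Name     string
	Image    string
	Drivers  []DriverConfig
	Commands []string
}

// runCommand is a hypothetical stand-in for invoking a runtime operation
// (e.g. "run" via docker or runc); here it only sleeps briefly.
func runCommand(driver, cmd string) error {
	time.Sleep(time.Millisecond)
	return nil
}

// runDriver starts cfg.Threads goroutines; each executes the full command
// list cfg.Iterations times (an assumption about how the two settings
// combine). It returns per-command durations and total wall-clock time.
func runDriver(cfg DriverConfig, commands []string) (map[string][]time.Duration, time.Duration) {
	var mu sync.Mutex
	var wg sync.WaitGroup
	timings := make(map[string][]time.Duration)
	start := time.Now()
	for t := 0; t < cfg.Threads; t++ {
		wg.Add(1)
		go func() {
			defer wg.Done()
			for i := 0; i < cfg.Iterations; i++ {
				for _, cmd := range commands {
					begin := time.Now()
					if err := runCommand(cfg.Type, cmd); err != nil {
						continue // a real harness would record the failure
					}
					d := time.Since(begin)
					mu.Lock() // timings is shared by all goroutines
					timings[cmd] = append(timings[cmd], d)
					mu.Unlock()
				}
			}
		}()
	}
	wg.Wait()
	return timings, time.Since(start)
}

func main() {
	bench := Benchmark{
		Name:  "BasicBench",
		Image: "alpine:latest",
		Drivers: []DriverConfig{
			{Type: "Docker", Threads: 5, Iterations: 15},
			{Type: "Runc", Threads: 5, Iterations: 50},
		},
		Commands: []string{"run", "stop", "remove"},
	}
	fmt.Printf("benchmark %s (image %s)\n", bench.Name, bench.Image)
	for _, d := range bench.Drivers {
		timings, elapsed := runDriver(d, bench.Commands)
		for _, cmd := range bench.Commands {
			fmt.Printf("%s %s: %d ops\n", d.Type, cmd, len(timings[cmd]))
		}
		fmt.Printf("%s elapsed: %v\n", d.Type, elapsed)
	}
}
```

Under the stated assumption, the values from examples/basic.yaml yield 75 timings per command for Docker (5 × 15) and 250 for Runc (5 × 50). Raising `threads` while keeping the per-thread work fixed is what turns the same command list into a heavier concurrent load.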

![Architecture

Two key interfaces:
Driver: drives the container runtime
Bench: defines the container operations and provides results/statistics

type Driver interface {
	Type() Type
	Info() (string, error)
	Create(name, image string, detached bool, trace bool) (Container, error)
	Clean() error
	Run(ctr Container) (string, int, error)
	Stop(ctr Container) (string, int, error)
	Remove(ctr Container) (string, int, error)
	Pause(ctr Container) (string, int, error)
	Unpause(ctr Container) (string, int, error)
}

type Bench interface {
	Init(driverType driver.Type, binaryPath, imageInfo string, trace bool) error
	Validate() error
	Run(threads, iterations int) error
	Stats() []RunStatistics
	Elapsed() time.Duration
	State() State
	Type() Type
	Info() string
}

Driver implementations today support docker, containerd (1.0 via the gRPC Go client API; 0.2.x via the `ctr` binary), and runc.
Bucketbench can easily be extended to support any runtime that can implement the Driver interface.](https://image.slidesharecdn.com/mobysummitl-170918195709/85/Bucketbench-Benchmarking-Container-Runtime-Performance-8-320.jpg)
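The architecture slide states that any runtime able to implement the Driver interface can be plugged in. As a rough, self-contained illustration, the Go sketch below copies the Driver method set from the slide and satisfies it with a hypothetical `noopDriver` whose operations succeed instantly, which could serve as a baseline for measuring harness overhead. `Type`, `Container`, `NoopType`, `noopContainer`, and `noopDriver` are local stand-ins invented for this example; bucketbench's real `driver` package defines its own types. Reading the `int` result of each lifecycle call as elapsed milliseconds is also an assumption.

```go
package main

import "fmt"

// Type is a simplified local stand-in for bucketbench's driver.Type.
type Type int

// Container is a hypothetical minimal container handle; bucketbench's
// own Container type has its own method set.
type Container interface {
	Name() string
	Image() string
}

// Driver reproduces the method set shown on the architecture slide.
type Driver interface {
	Type() Type
	Info() (string, error)
	Create(name, image string, detached bool, trace bool) (Container, error)
	Clean() error
	Run(ctr Container) (string, int, error)
	Stop(ctr Container) (string, int, error)
	Remove(ctr Container) (string, int, error)
	Pause(ctr Container) (string, int, error)
	Unpause(ctr Container) (string, int, error)
}

// NoopType is a hypothetical Type value for the example driver.
const NoopType Type = 100

type noopContainer struct{ name, image string }

func (c *noopContainer) Name() string  { return c.name }
func (c *noopContainer) Image() string { return c.image }

// noopDriver is a hypothetical "runtime" whose operations succeed
// immediately without doing any work.
type noopDriver struct{ created int }

// Compile-time check that *noopDriver satisfies Driver.
var _ Driver = (*noopDriver)(nil)

func (d *noopDriver) Type() Type            { return NoopType }
func (d *noopDriver) Info() (string, error) { return "noop driver (no real runtime)", nil }

func (d *noopDriver) Create(name, image string, detached bool, trace bool) (Container, error) {
	d.created++
	return &noopContainer{name: name, image: image}, nil
}

func (d *noopDriver) Clean() error { return nil }

// Lifecycle calls return (output, elapsed, error); treating elapsed as
// milliseconds is an assumption made for this sketch.
func (d *noopDriver) Run(ctr Container) (string, int, error)     { return "", 0, nil }
func (d *noopDriver) Stop(ctr Container) (string, int, error)    { return "", 0, nil }
func (d *noopDriver) Remove(ctr Container) (string, int, error)  { return "", 0, nil }
func (d *noopDriver) Pause(ctr Container) (string, int, error)   { return "", 0, nil }
func (d *noopDriver) Unpause(ctr Container) (string, int, error) { return "", 0, nil }

func main() {
	var drv Driver = &noopDriver{}
	info, _ := drv.Info()
	fmt.Println("driver:", info)

	ctr, err := drv.Create("bb-ctr-0", "alpine:latest", true, false)
	if err != nil {
		panic(err)
	}
	// Walk one container through the lifecycle operations on the slide.
	ops := []struct {
		name string
		fn   func(Container) (string, int, error)
	}{
		{"run", drv.Run}, {"pause", drv.Pause}, {"unpause", drv.Unpause},
		{"stop", drv.Stop}, {"remove", drv.Remove},
	}
	for _, op := range ops {
		_, elapsed, err := op.fn(ctr)
		fmt.Printf("%s %s: elapsed=%d err=%v\n", op.name, ctr.Name(), elapsed, err)
	}
	if err := drv.Clean(); err != nil {
		panic(err)
	}
}
```

The `var _ Driver = (*noopDriver)(nil)` line makes the compiler reject the file if any interface method is missing or has the wrong signature, a cheap way to keep a new driver in sync with the interface as it evolves.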