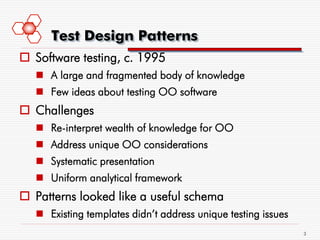

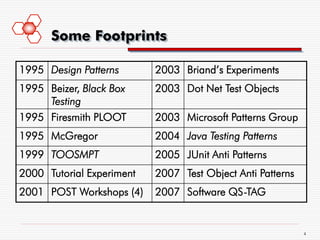

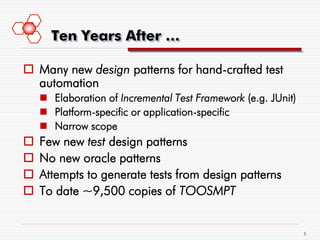

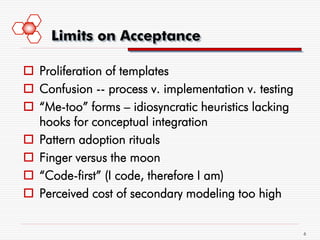

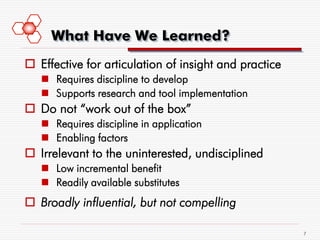

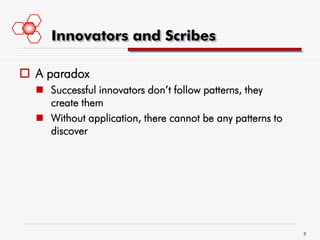

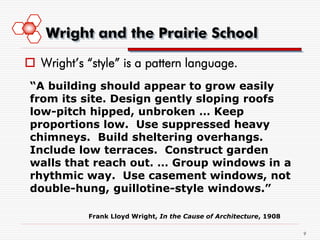

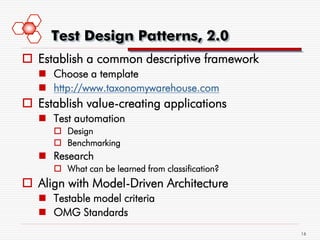

This document discusses the successes and challenges of using test patterns over the past 10 years. It describes how test patterns have been useful for articulating testing insights and practices, yet have not been widely adopted. Reasons for the limited adoption include the proliferation of templates, confusion between different pattern types, and the perception that using patterns requires too much additional modeling effort. The document also suggests that, while innovators create new patterns, those who look for existing patterns to reuse may be less influential. It argues that test patterns will remain important for building a conceptual framework for testing and for sharing solutions efficiently, especially as software systems grow in complexity.