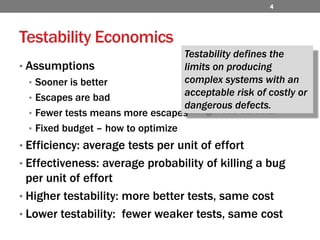

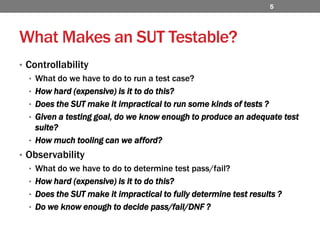

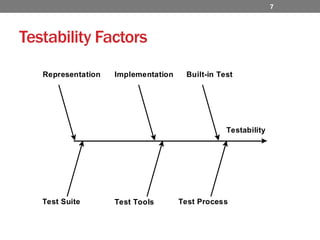

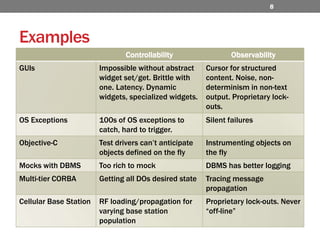

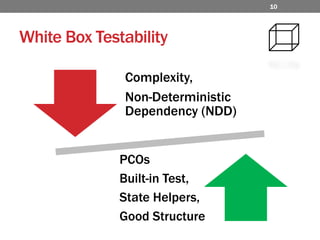

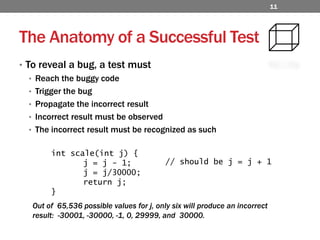

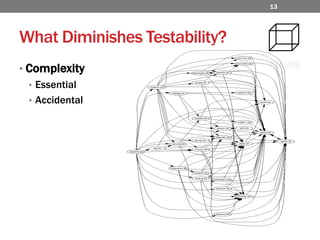

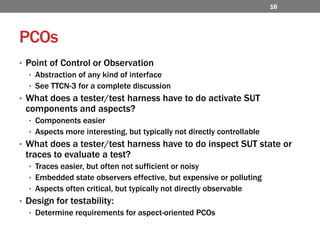

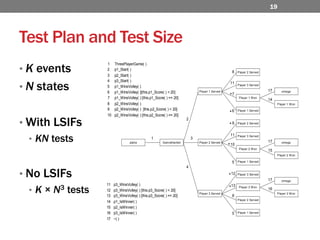

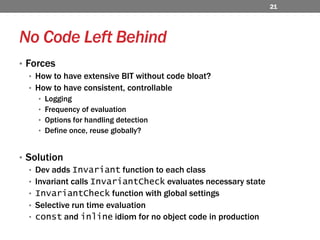

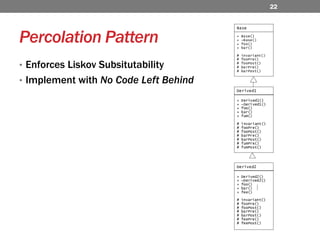

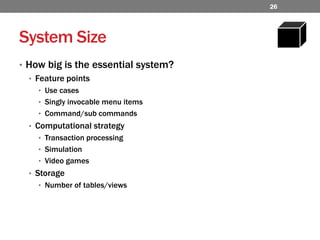

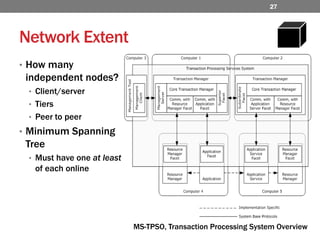

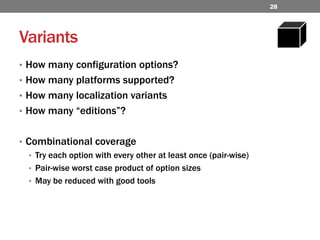

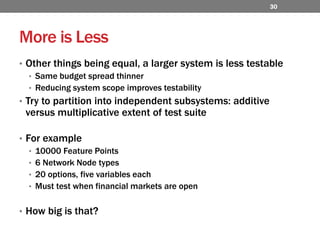

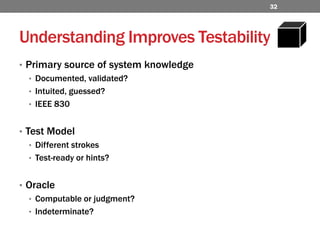

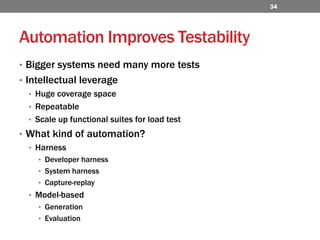

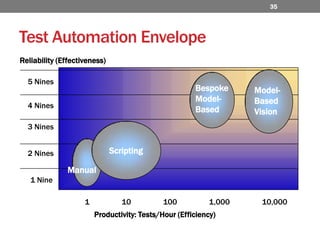

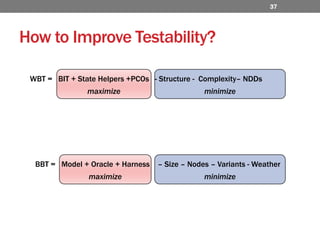

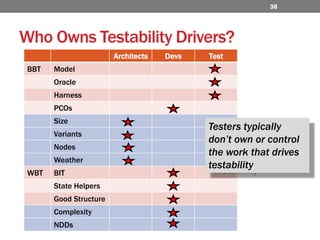

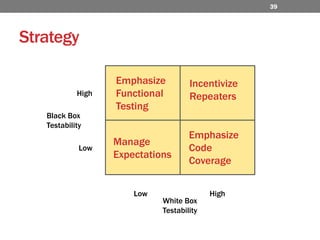

The document discusses the importance of testability in software systems, outlining the factors that affect both white-box and black-box testability. It emphasizes the need for controllability and observability in test design, and describes strategies for improving testability through structured code and automation. It also addresses challenges such as system complexity and resource constraints, and stresses that effective testing depends on understanding the system's requirements.
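
As a rough illustration of controllability and observability, the following Python sketch may help. It is not taken from the document: `SessionManager`, `FakeClock`, and the `events` list are hypothetical names invented for this example. The assumption is that a component becomes more controllable when a test can set its inputs (here, the time source is injected) and more observable when its internal state changes are exposed for inspection (here, through a simple event log).

```python
import time
from typing import Callable, List, Optional


class SessionManager:
    """Hypothetical component, used only to illustrate the two properties."""

    def __init__(self, timeout_seconds: float,
                 clock: Optional[Callable[[], float]] = None) -> None:
        # Controllability: the time source is injected, so a test can
        # decide exactly what "now" is instead of waiting in real time.
        self._clock = clock if clock is not None else time.monotonic
        self._timeout = timeout_seconds
        self._started_at = self._clock()
        # Observability: state changes are recorded where a test can read them.
        self.events: List[str] = ["started"]

    def is_expired(self) -> bool:
        expired = self._clock() - self._started_at >= self._timeout
        if expired and "expired" not in self.events:
            self.events.append("expired")
        return expired


class FakeClock:
    """Test double (an assumption of this sketch) that stands in for the real clock."""

    def __init__(self, now: float = 0.0) -> None:
        self.now = now

    def __call__(self) -> float:
        return self.now

    def advance(self, seconds: float) -> None:
        self.now += seconds


if __name__ == "__main__":
    clock = FakeClock()
    session = SessionManager(timeout_seconds=30, clock=clock)
    print(session.is_expired())  # no time has passed on the fake clock
    clock.advance(30)
    print(session.is_expired())  # the timeout has now elapsed
    print(session.events)
```

With the clock injected, a test can drive the timeout path without sleeping, and the event log lets it check what happened without reaching into private state. Production code would pass no `clock` argument and fall back to `time.monotonic`.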