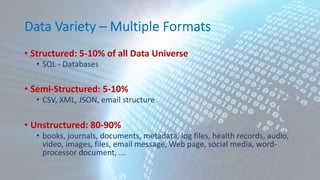

This document discusses big data and high-performance computing. It begins by outlining where big data comes from: people, organizations, and machines. It then discusses the opportunities that big data analysis can open up. The document explains the "big data problem": how to store and process massive amounts of data across clusters of machines. It gives background on why distributed computing solutions are needed now, given the exponential growth in digital data. The Hadoop ecosystem is introduced as a big data technology stack, with HDFS as its distributed storage layer and MapReduce as its distributed processing model. The document also discusses GPUs and massive parallelization using CUDA to enable high-performance computing for big data workloads.

![MapReduce architecture: the input data is split across Map() tasks that emit [k1, v1] pairs; the pairs are sorted by key k and merged into [k1, [v1, v2, …, vN]] for the Reduce() tasks, which write the output data](https://image.slidesharecdn.com/amcham-big-data-hpc-200512-low-200513184135/85/Big-Data-and-High-Performance-Computing-29-320.jpg)
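The data flow in the MapReduce figure (split, map, sort by key, merge, reduce) can be sketched in a few lines of single-process Python. This is only an illustration of the stages, not Hadoop's API: the function names `split`, `map_words`, `reduce_counts`, and `map_reduce`, the word-count task, and the sample input are all assumptions made for this example.

```python
from collections import defaultdict


def split(records, n_splits):
    """Split: deal the input records into n_splits chunks."""
    return [records[i::n_splits] for i in range(n_splits)]


def map_words(line):
    """Map(): emit one (k, v) pair per word in the line."""
    for word in line.lower().split():
        yield word, 1


def reduce_counts(key, values):
    """Reduce(): collapse (k, [v1, ..., vN]) into a single (k, total) pair."""
    return key, sum(values)


def map_reduce(records, mapper, reducer, n_splits=4):
    """Run the stages in sequence inside one process (hypothetical helper)."""
    # Map phase: every chunk is processed independently, so on a real
    # cluster each chunk could be handled by a different node.
    pairs = []
    for chunk in split(records, n_splits):
        for record in chunk:
            pairs.extend(mapper(record))

    # Sort by k, so that equal keys end up next to each other.
    pairs.sort(key=lambda kv: kv[0])

    # Merge: group the sorted pairs into k -> [v1, v2, ..., vN].
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)

    # Reduce phase: one reducer call per distinct key.
    return dict(reducer(key, values) for key, values in grouped.items())


lines = [
    "big data needs big clusters",
    "data moves to clusters",
    "big clusters",
]
counts = map_reduce(lines, map_words, reduce_counts)
print(counts)
```

In a real deployment the map and reduce tasks run in parallel on different machines and the sort/merge step moves data over the network; the sketch keeps everything in memory so that the shape of the intermediate `[k1, [v1, v2, …, vN]]` groups is easy to inspect.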