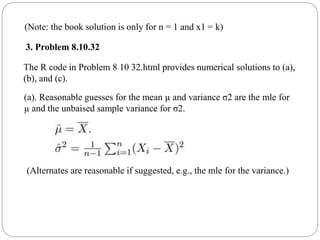

This document works through problems on Bayesian inference and maximum likelihood estimation for discrete random variables, with examples of parameter estimation and posterior distributions. It also provides R code for numerical solutions and for calculations involving confidence intervals and posterior distributions.

![(d). Let p have a uniform distribution on [0, 1]. The posterior distribution of p has density
which can be recognized as a Beta(a∗, b∗) distribution with
The mean of the posterior distribution is the mean of the Beta(a∗, b∗) distribution which is](https://image.slidesharecdn.com/bayesianinferenceandmaximumlikelihood-240723121943-bd15a3a5/85/Bayesian-Inference-and-Maximum-Likelihood-9-320.jpg)

![The posterior distribution has density proportional to θ^(a∗ − 1) (1 − θ)^(b∗ − 1),
which normalizes to a Beta(a∗, b∗) distribution where a∗ = a + 3 and b∗ = b + 97.
The mean of the posterior distribution is E[θ | X] = a∗/(a∗ + b∗).
For a = b = 1 we have E[θ | X] = 4/102.](https://image.slidesharecdn.com/bayesianinferenceandmaximumlikelihood-240723121943-bd15a3a5/85/Bayesian-Inference-and-Maximum-Likelihood-15-320.jpg)
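
The conjugate update behind this slide can be written out step by step. The sketch below assumes a Beta(a, b) prior on θ and data consisting of 3 successes in 100 independent Bernoulli(θ) trials. The counts are not stated on the slide itself; they are inferred from the increments 3 and 97 in the posterior parameters.

$$
\begin{aligned}
\pi(\theta \mid X) &\propto \underbrace{\theta^{3}(1-\theta)^{97}}_{\text{likelihood}} \cdot \underbrace{\theta^{a-1}(1-\theta)^{b-1}}_{\text{prior}}
 = \theta^{(a+3)-1}(1-\theta)^{(b+97)-1}, \\
a^{*} &= a + 3, \qquad b^{*} = b + 97, \\
E[\theta \mid X] &= \frac{a^{*}}{a^{*} + b^{*}} = \frac{a + 3}{a + b + 100}.
\end{aligned}
$$

With the uniform prior a = b = 1 this gives E[θ | X] = 4/102 = 2/51 ≈ 0.0392.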

![For a = 0.5 and b = 5 we have E[θ | X] = 3.5/105.5.
The R code in Problem 8 10 63.html plots the prior/posterior densities and computes the posterior means.](https://image.slidesharecdn.com/bayesianinferenceandmaximumlikelihood-240723121943-bd15a3a5/85/Bayesian-Inference-and-Maximum-Likelihood-16-320.jpg)
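
The R code mentioned on the slide is not reproduced in this transcript. As a rough stand-in, the following Python sketch computes the two posterior means exactly and evaluates the prior and posterior Beta densities on a grid instead of plotting them. The counts of 3 successes and 97 failures are an assumption inferred from a∗ = a + 3 and b∗ = b + 97, and the names `posterior_params`, `posterior_mean`, and `beta_pdf` are hypothetical helpers, not taken from the original code.

```python
from fractions import Fraction
from math import exp, lgamma, log

# Assumed data, inferred from a* = a + 3 and b* = b + 97 on the slides.
SUCCESSES, FAILURES = 3, 97


def posterior_params(a, b):
    """Conjugate Beta update: return (a*, b*) for a Beta(a, b) prior."""
    return a + SUCCESSES, b + FAILURES


def posterior_mean(a, b):
    """Mean of the Beta(a*, b*) posterior, a* / (a* + b*)."""
    a_star, b_star = posterior_params(a, b)
    return a_star / (a_star + b_star)


def beta_pdf(x, a, b):
    """Beta(a, b) density at 0 < x < 1, computed on the log scale."""
    log_norm = lgamma(a + b) - lgamma(a) - lgamma(b)
    return exp(log_norm + (a - 1) * log(x) + (b - 1) * log(1 - x))


# The two priors discussed on the slides, kept as exact fractions.
priors = {
    "a=1, b=1": (Fraction(1), Fraction(1)),
    "a=0.5, b=5": (Fraction(1, 2), Fraction(5)),
}

means = {name: posterior_mean(a, b) for name, (a, b) in priors.items()}

# Interior grid on (0, 1); the endpoints are skipped because log(0) is undefined.
grid = [i / 1000 for i in range(1, 1000)]

for name, (a, b) in priors.items():
    a_star, b_star = posterior_params(a, b)
    prior_curve = [beta_pdf(x, float(a), float(b)) for x in grid]
    post_curve = [beta_pdf(x, float(a_star), float(b_star)) for x in grid]
    peak = grid[post_curve.index(max(post_curve))]
    print(f"{name}: posterior mean = {means[name]} ({float(means[name]):.4f}), "
          f"posterior density peaks near theta = {peak}")
```

The lists `prior_curve` and `post_curve` can be handed to any plotting library to reproduce the prior/posterior comparison. Exact fractions are used for the means so that the results can be compared directly with 4/102 and 3.5/105.5.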