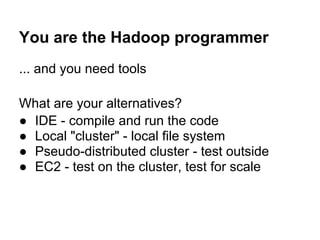

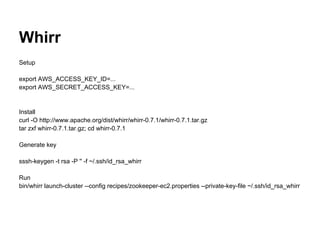

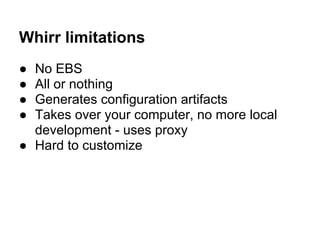

This document discusses options for running Hadoop clusters on Amazon EC2. These include using tools such as Apache Whirr to automate cluster setup (and the limitations of Whirr), using Amazon Elastic MapReduce (EMR), and setting up clusters manually. It also covers advanced topics such as monitoring cluster health. It provides background on Hadoop and cloud computing, on related technologies such as HBase and Cassandra, and on the Hadoop distributions offered by Cloudera, MapR, and other vendors.