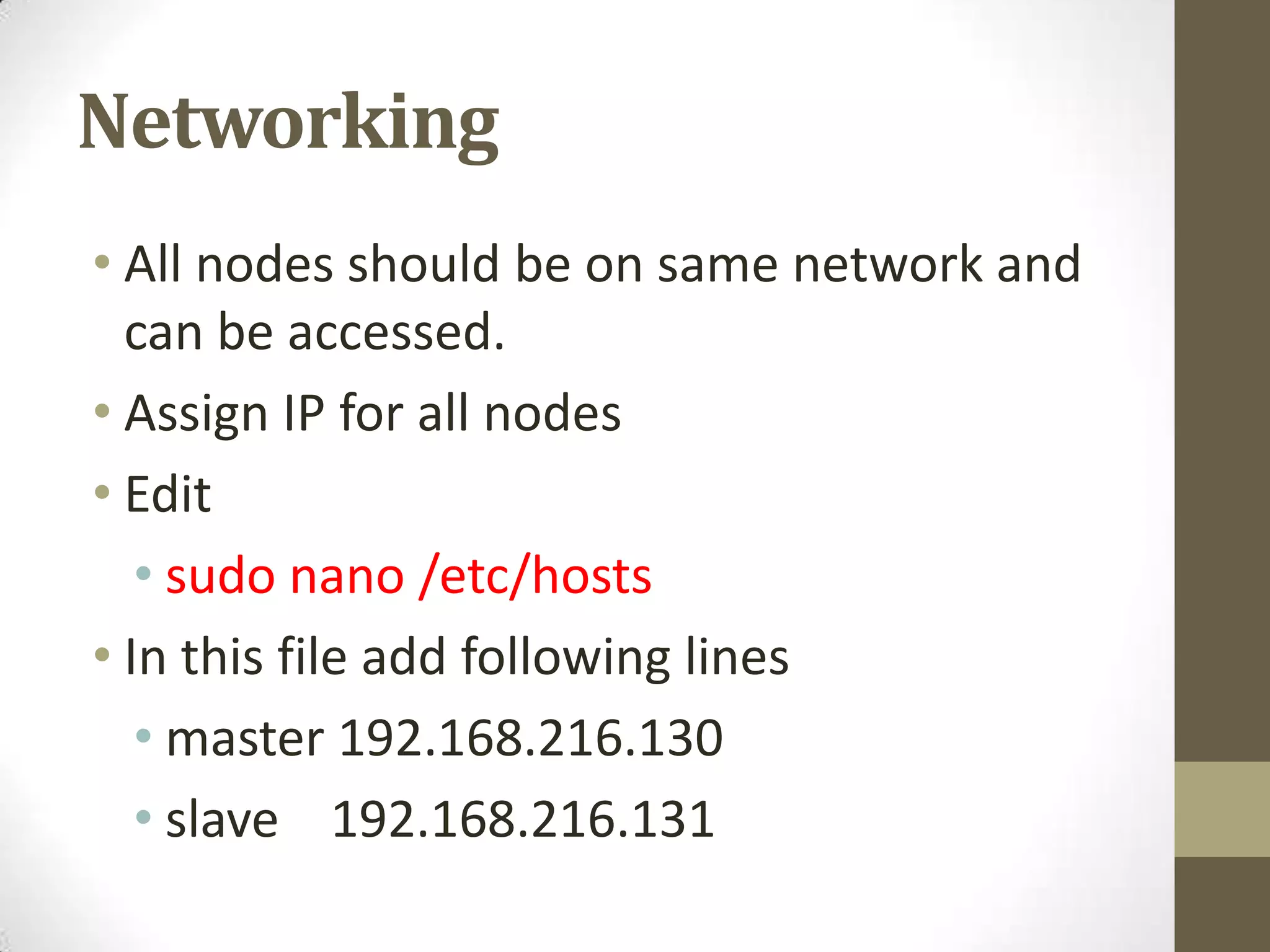

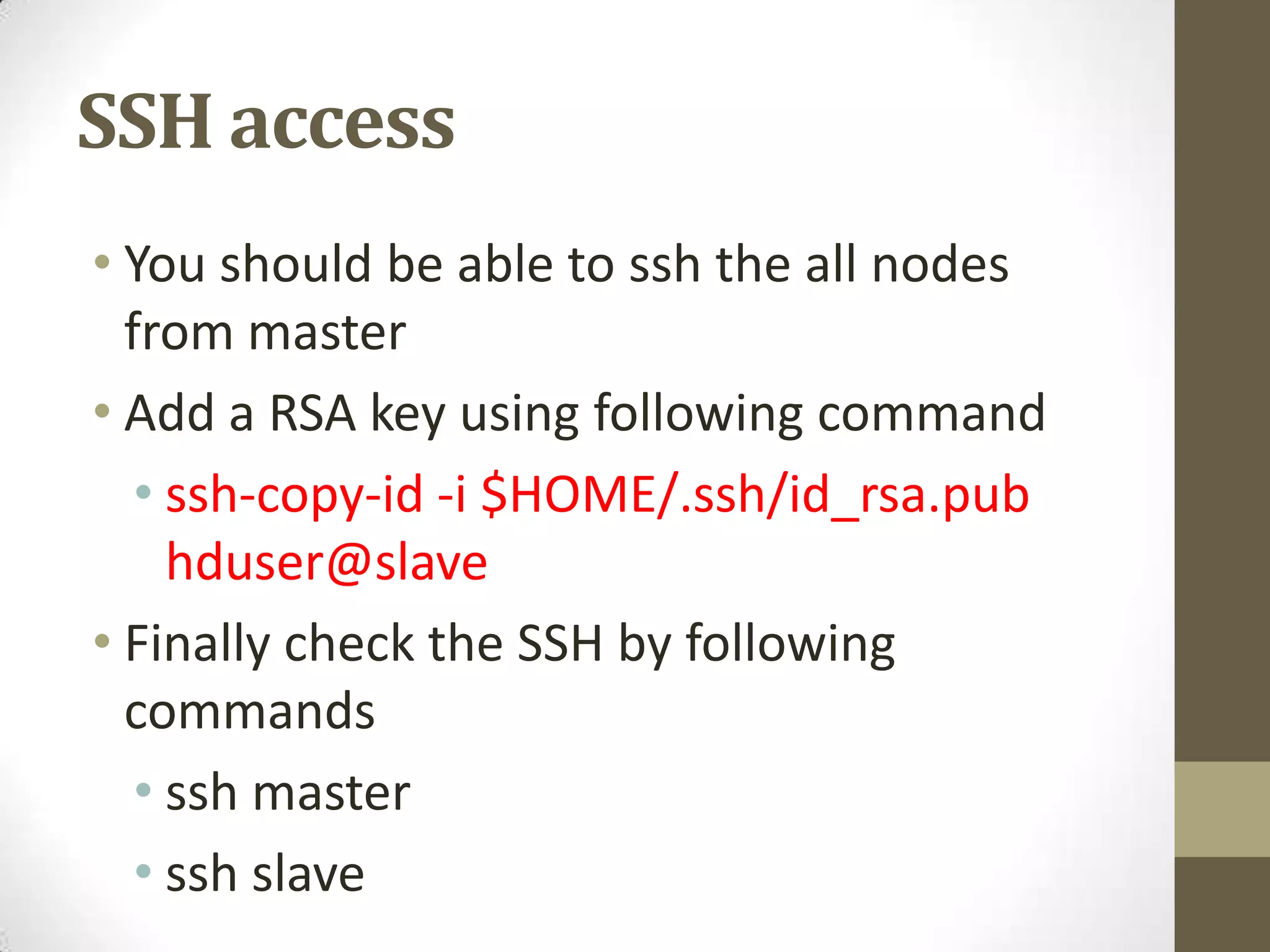

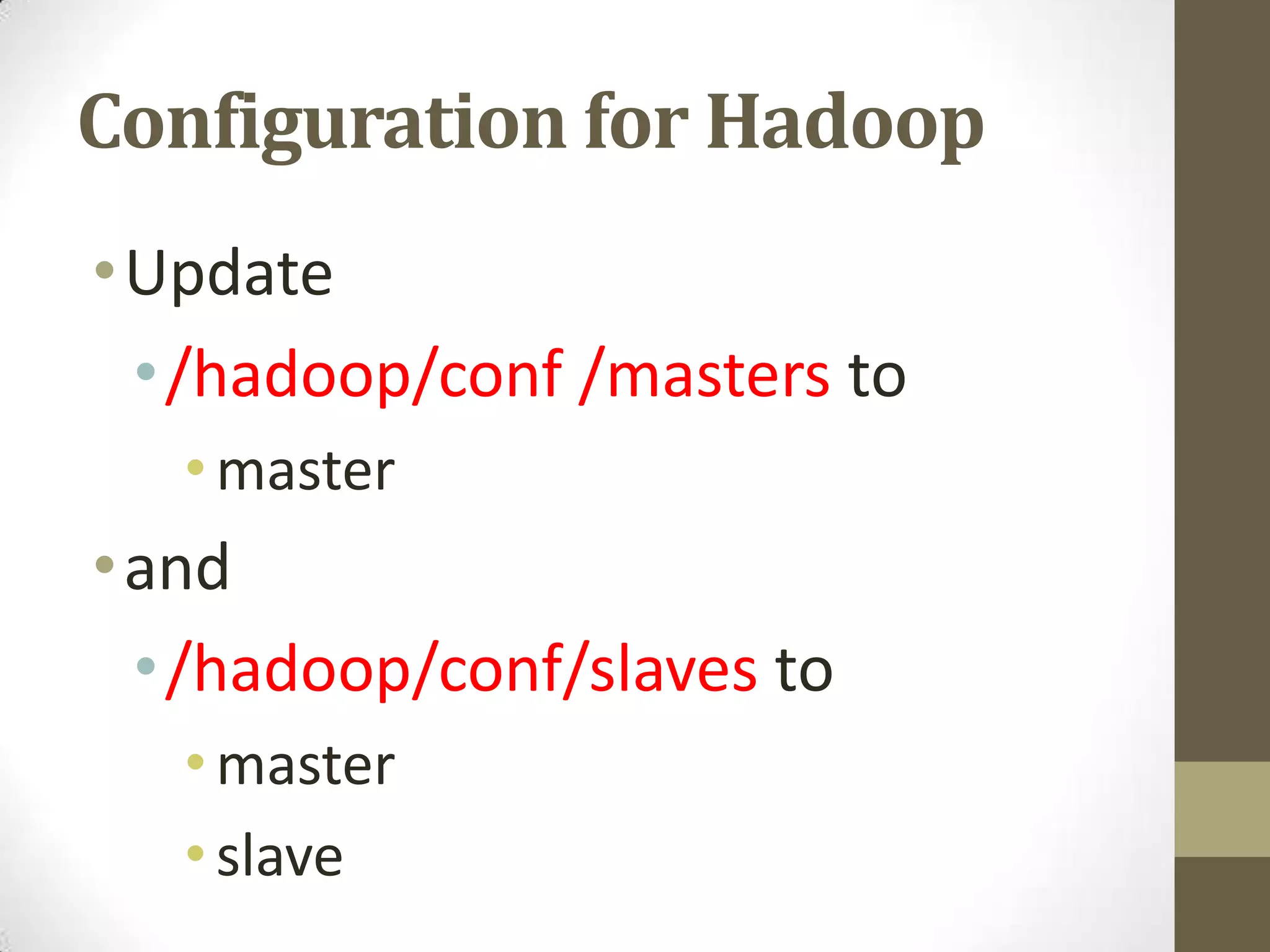

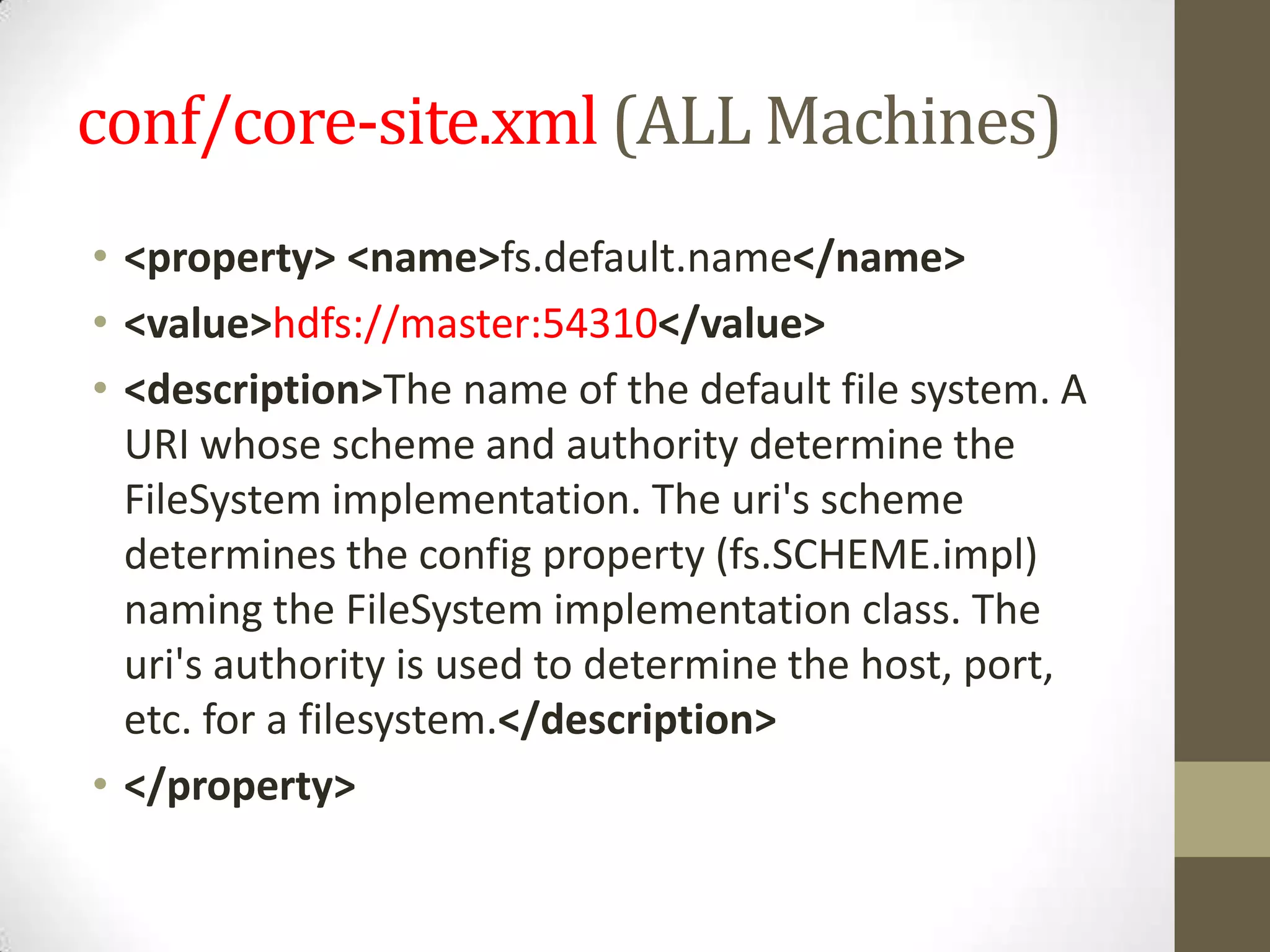

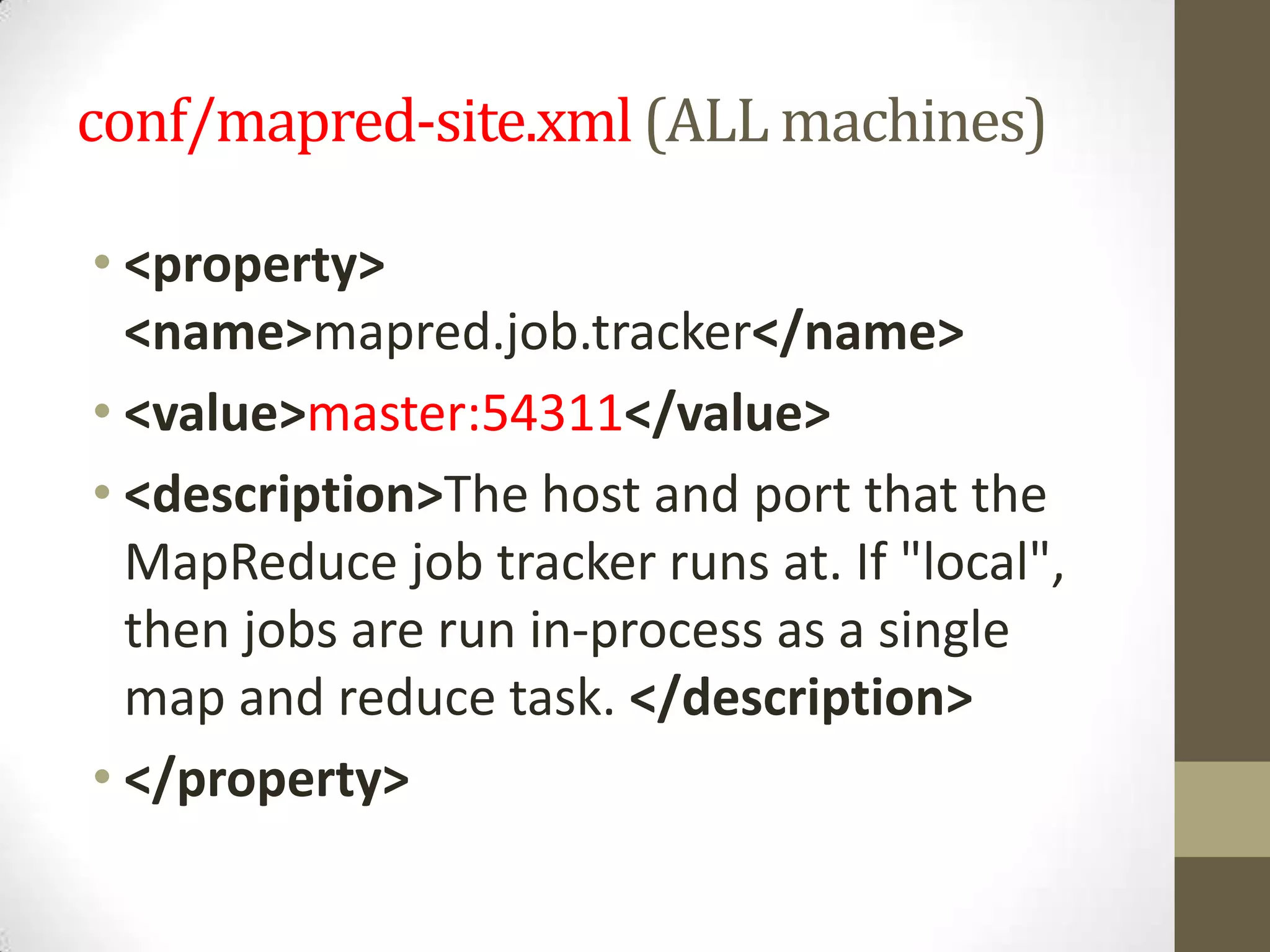

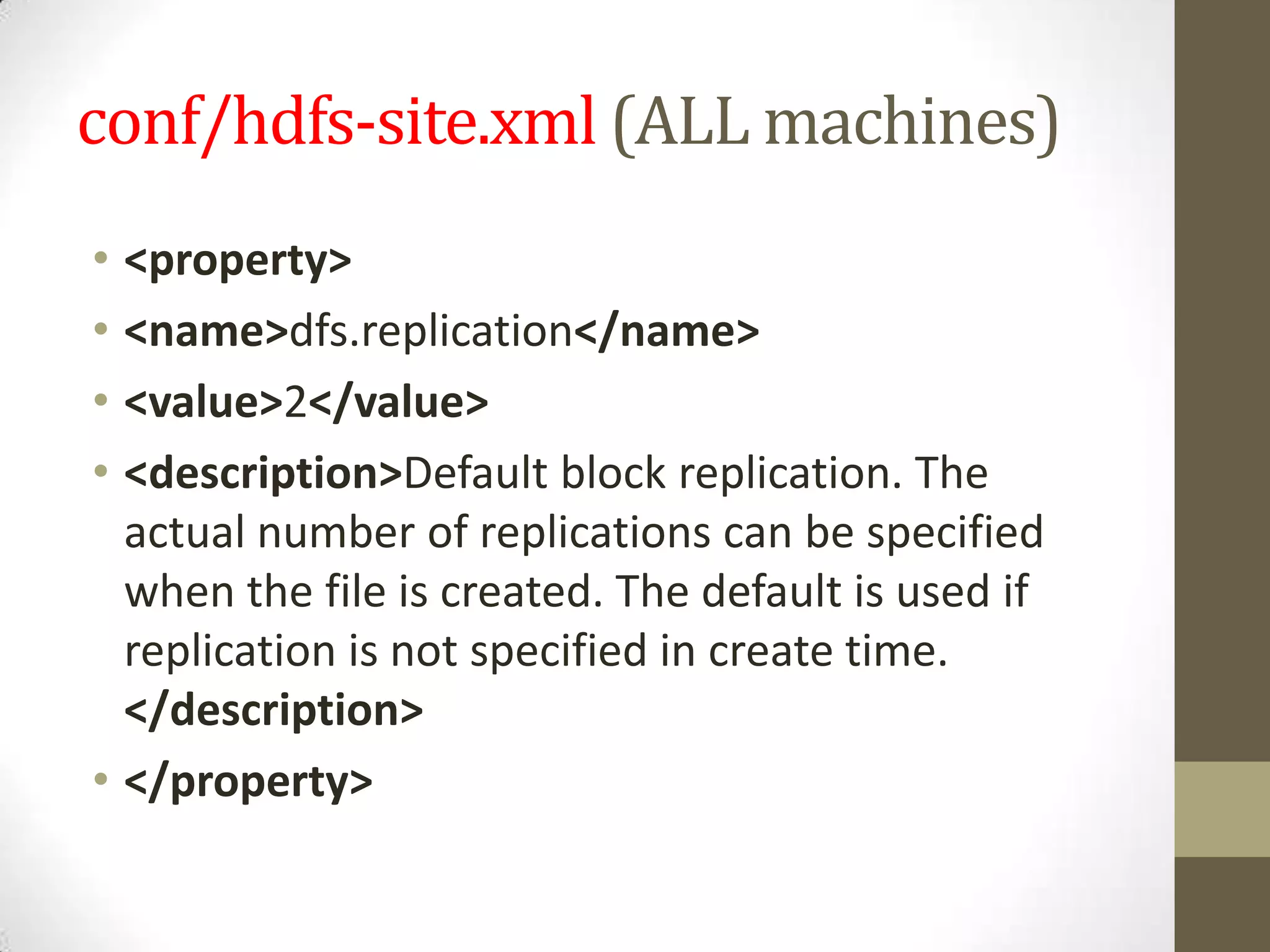

This document provides instructions for setting up a multi-node Hadoop cluster on multiple machines. It describes configuring networking and SSH access between nodes, editing configuration files, formatting the HDFS filesystem, and starting the Hadoop daemons and a sample MapReduce job.