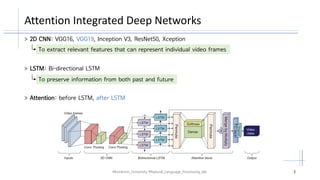

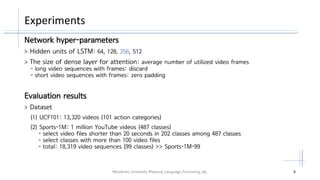

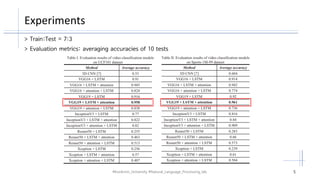

The document discusses integrating attention mechanisms into deep networks for video classification, built from convolutional neural networks (CNNs) and long short-term memory (LSTM) models. It presents experiments on the UCF101 and Sports-1M datasets and reports the evaluation metrics and network hyper-parameters used. The findings suggest that attention mechanisms improve classification accuracy and that VGG19 is the best-suited CNN for this integration because of the low dimensionality of its features.
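To make the idea of attention over CNN features concrete, here is a minimal sketch of one soft-attention step. It is an illustration under stated assumptions, not the method used in the document: the dot-product scoring, the function names `softmax` and `attend`, and the toy values are all hypothetical. In a real model the region features would come from a CNN layer (for example VGG19) and the query vector from the LSTM hidden state.

```python
import math


def softmax(scores):
    """Turn raw scores into weights that are positive and sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(features, hidden):
    """One soft-attention step (assumed dot-product scoring).

    features: list of region feature vectors, each the same length as hidden
    hidden:   query vector, standing in for an LSTM hidden state
    Returns the attention weights and the weighted-sum context vector.
    """
    # Score each region by its dot product with the query vector.
    scores = [sum(f_d * h_d for f_d, h_d in zip(f, hidden)) for f in features]
    weights = softmax(scores)
    # The context vector is the attention-weighted average of the regions.
    dim = len(hidden)
    context = [sum(w * f[d] for w, f in zip(weights, features)) for d in range(dim)]
    return weights, context


# Toy input: three regions with 2-dimensional features (made-up values).
features = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
hidden = [0.5, 2.0]
weights, context = attend(features, hidden)
```

The context vector has the same dimensionality as a single region feature, so it can be passed to the LSTM in place of a plain pooled feature. The third region receives the largest weight here because it has the highest dot product with the query.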