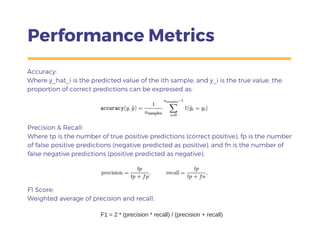

A binary classifier predicts outcomes that are either 0 or 1. It is trained on historical data containing features and targets, and learns patterns to predict probabilities of each class for new data. Performance is evaluated using metrics like accuracy, precision, recall from a confusion matrix, and ROC AUC. The bias-variance tradeoff and over/under fitting are minimized by optimizing model complexity during training and testing.