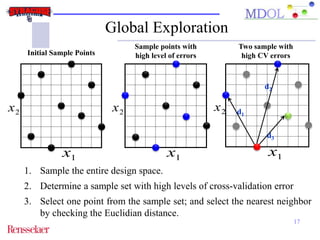

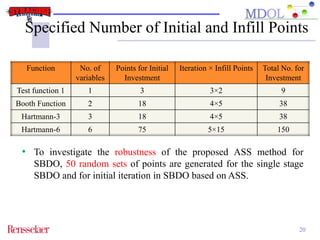

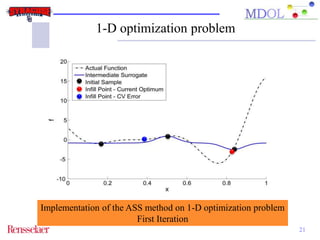

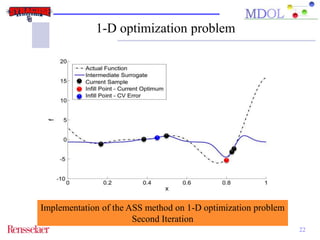

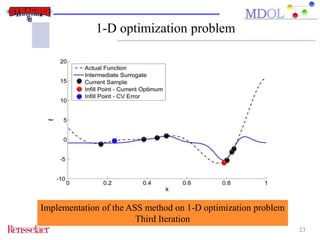

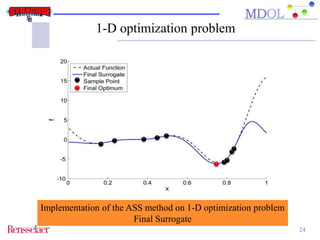

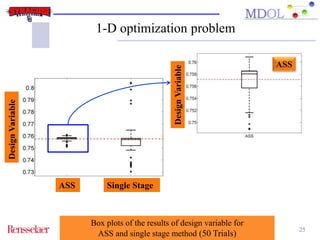

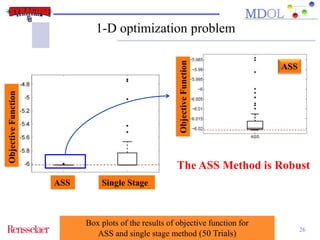

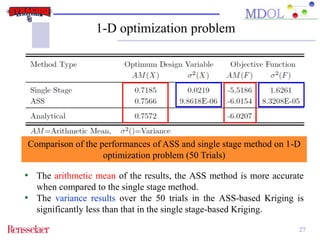

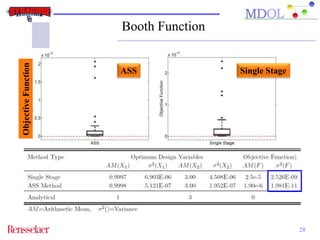

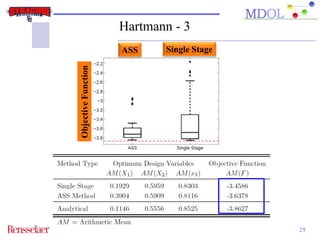

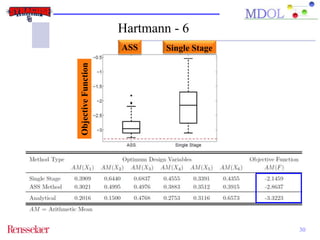

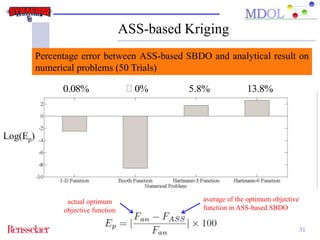

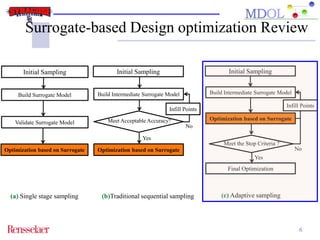

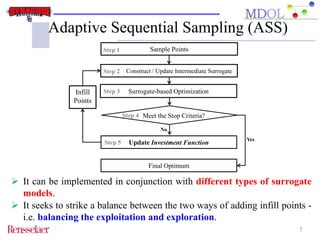

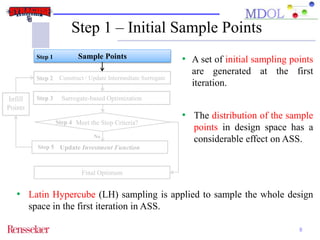

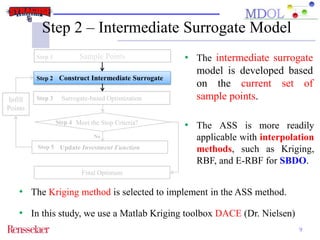

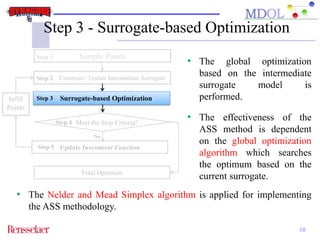

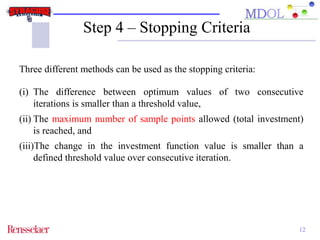

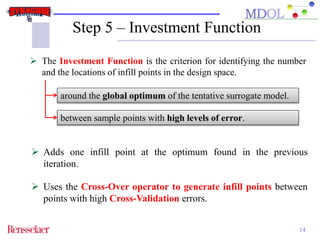

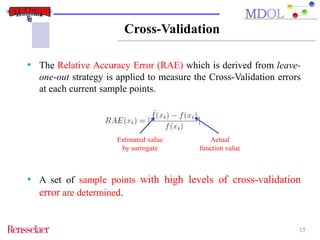

The document presents an adaptive sequential sampling (ASS) methodology for surrogate-based design optimization (SBDO), aimed at improving the accuracy of surrogate models in engineering design. The method adds infill points in two kinds of regions: where the surrogate exhibits high error, which supports global exploration, and near the global optimum, which supports local exploitation. Initial numerical examples indicate that the ASS method is more efficient and more accurate than traditional single-stage sampling approaches.

![Cross-Over

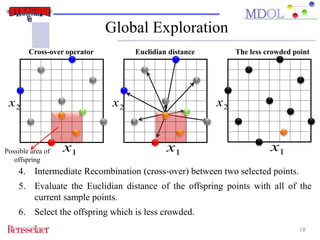

• This operator is used to combine information from two current

16

sample points with high levels of cross-validation error.

• The Intermediate Recombination method is only applicable to real

variables to combine the genetic material of two parents.

α represents a scaling factor, and is chosen randomly between the

interval [−d, 1 + d].

• In this study, the standard intermediate recombination is used and

the value of d is assumed to be zero (d = 0)](https://image.slidesharecdn.com/alisdmassv3-141124181322-conversion-gate02/85/ASS_SDM2012_Ali-16-320.jpg)
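The slide text does not reproduce the recombination formula itself, so the following is only a minimal sketch of how the cross-over step might look. It assumes the commonly used form of intermediate recombination, child = parent_a + α · (parent_b − parent_a), with a fresh α drawn uniformly for each variable. The function name `intermediate_recombination`, its parameters, and the uniform draw are illustrative assumptions, not the authors' implementation.

```python
import random


def intermediate_recombination(parent_a, parent_b, d=0.0, rng=random):
    """Combine two real-valued points into one child point.

    Assumed form (not shown on the slide):
        child_i = a_i + alpha_i * (b_i - a_i)
    where each alpha_i is drawn from the interval [-d, 1 + d].
    With d = 0 (the setting stated on the slide), every coordinate of
    the child lies between the corresponding parent coordinates.
    """
    if len(parent_a) != len(parent_b):
        raise ValueError("parents must have the same number of variables")

    child = []
    for a, b in zip(parent_a, parent_b):
        # Assumption: alpha is sampled uniformly and independently per variable.
        alpha = rng.uniform(-d, 1.0 + d)
        child.append(a + alpha * (b - a))
    return child


if __name__ == "__main__":
    # Two hypothetical sample points with high cross-validation error.
    point_1 = [0.0, 2.0, -1.0]
    point_2 = [1.0, 4.0, 3.0]
    print(intermediate_recombination(point_1, point_2, d=0.0))
```

With d = 0 the new infill point always falls inside the box spanned by the two parents. A positive d would let the child land slightly outside that box.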