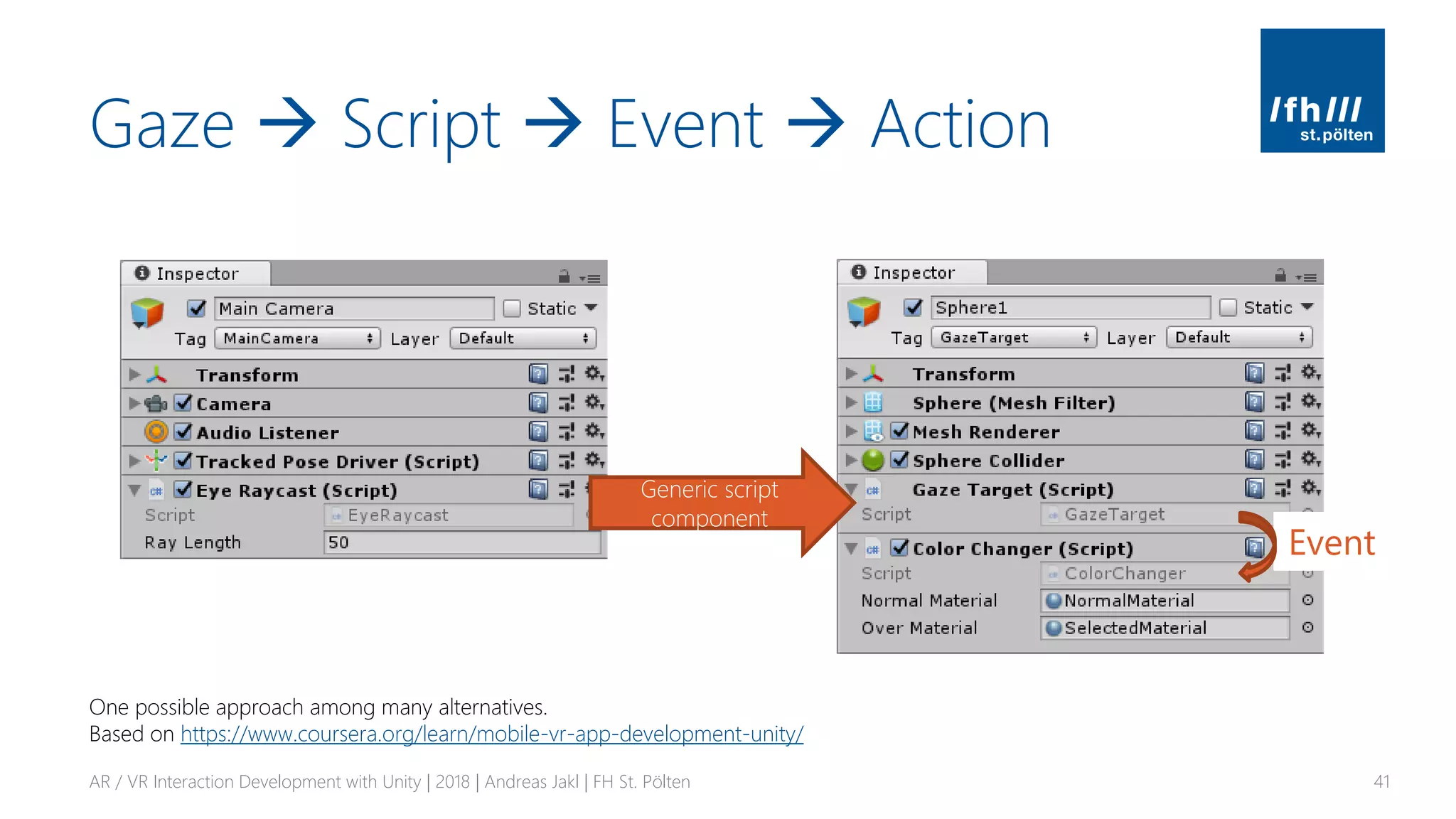

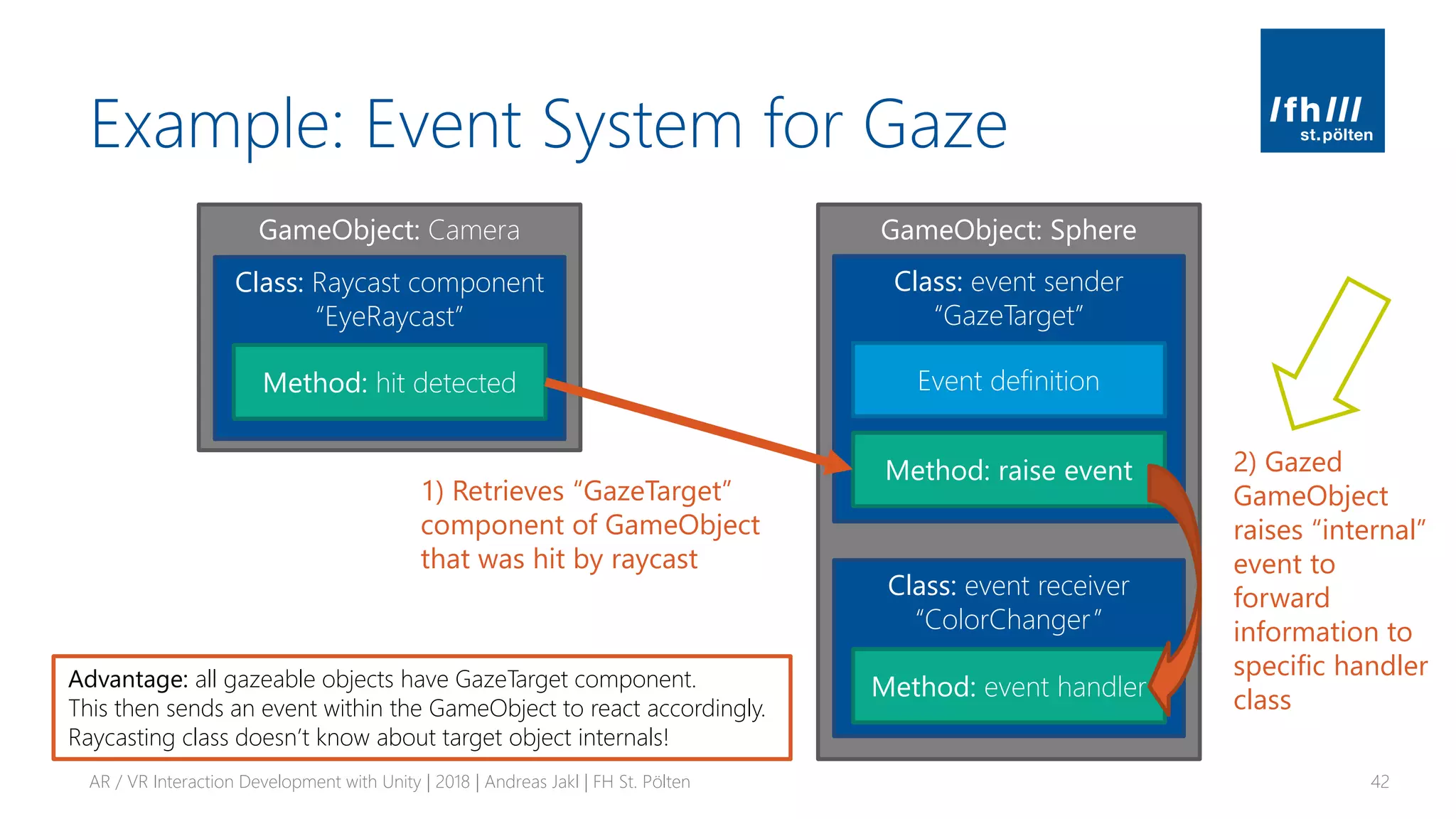

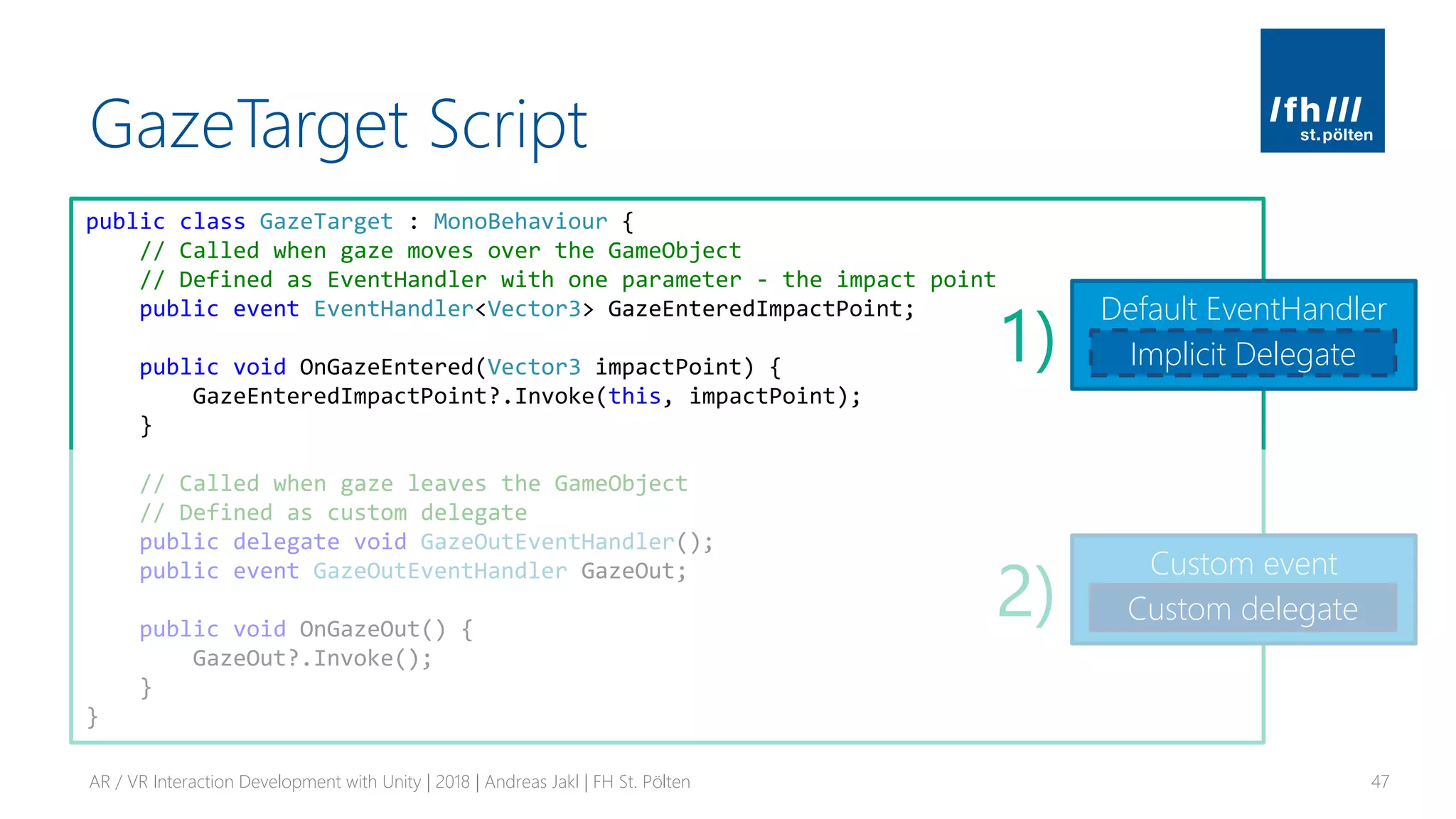

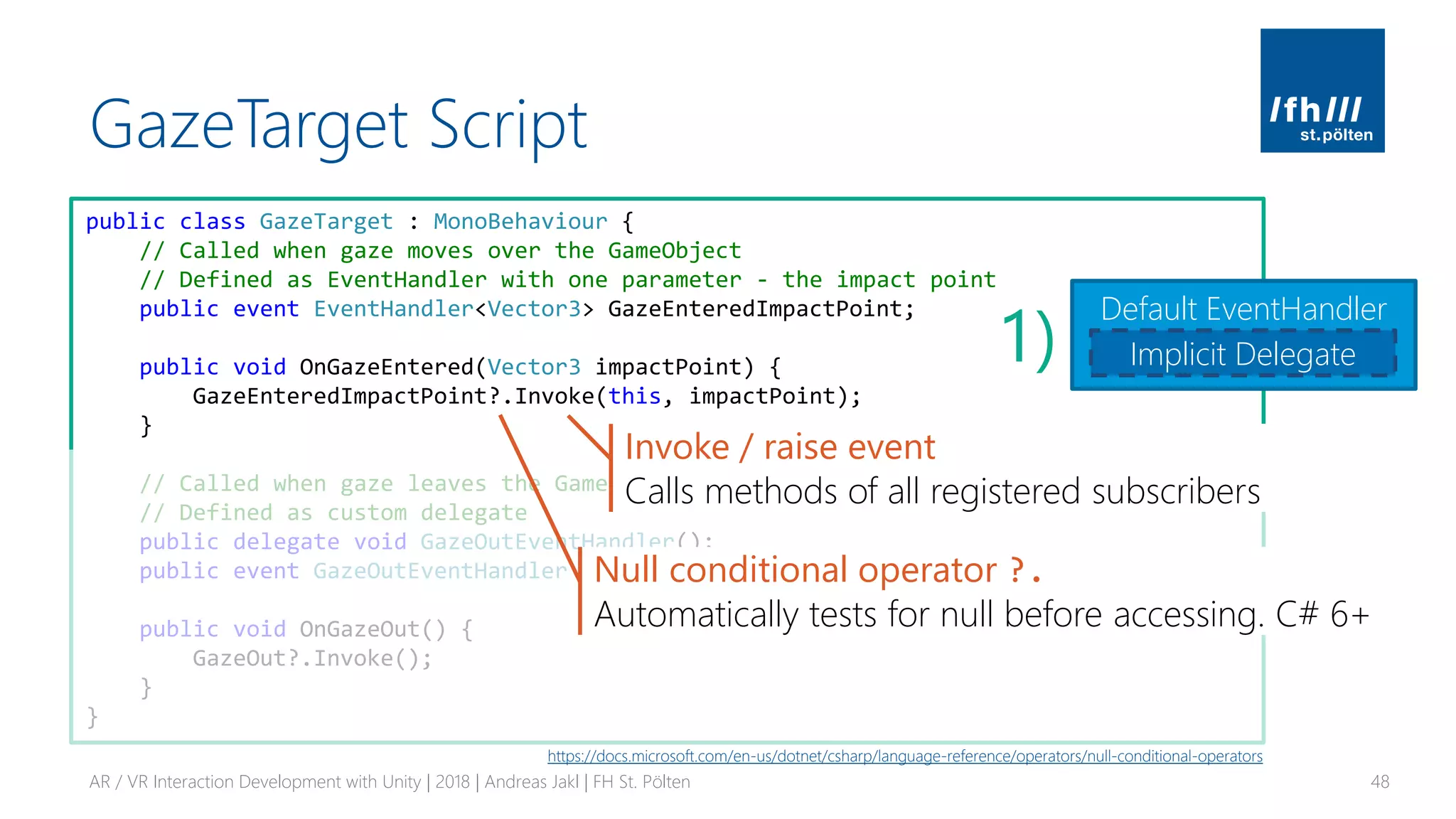

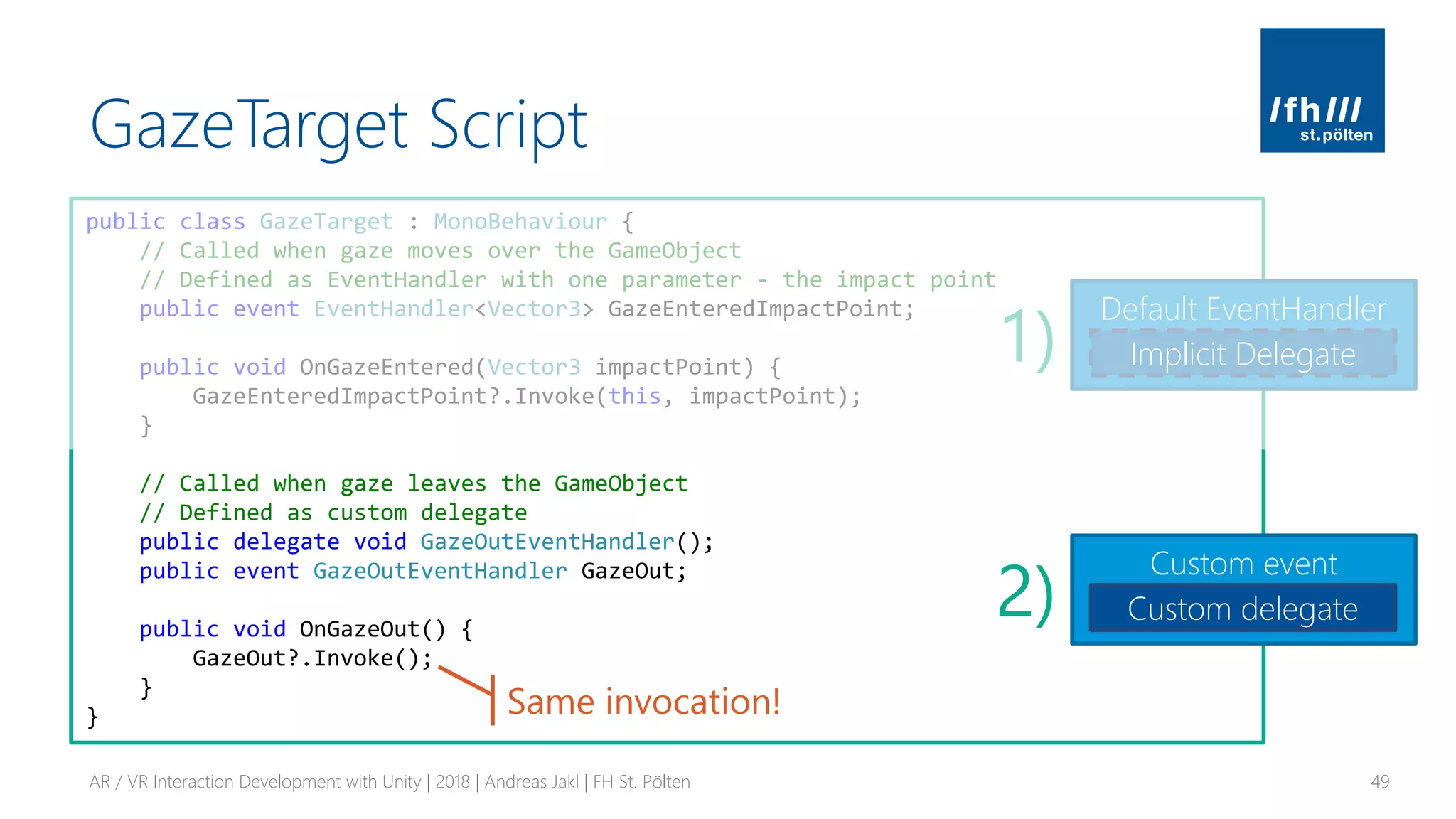

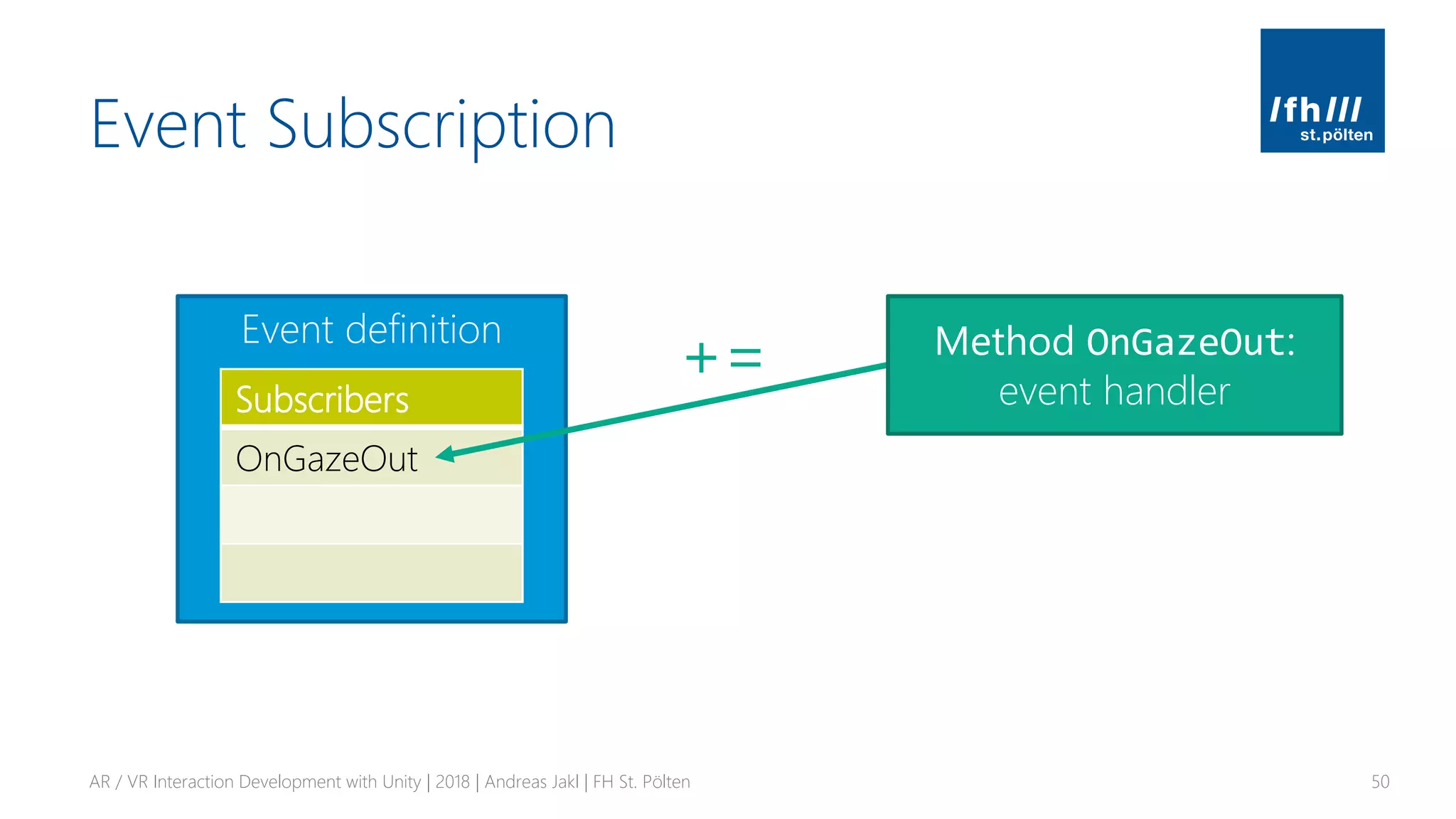

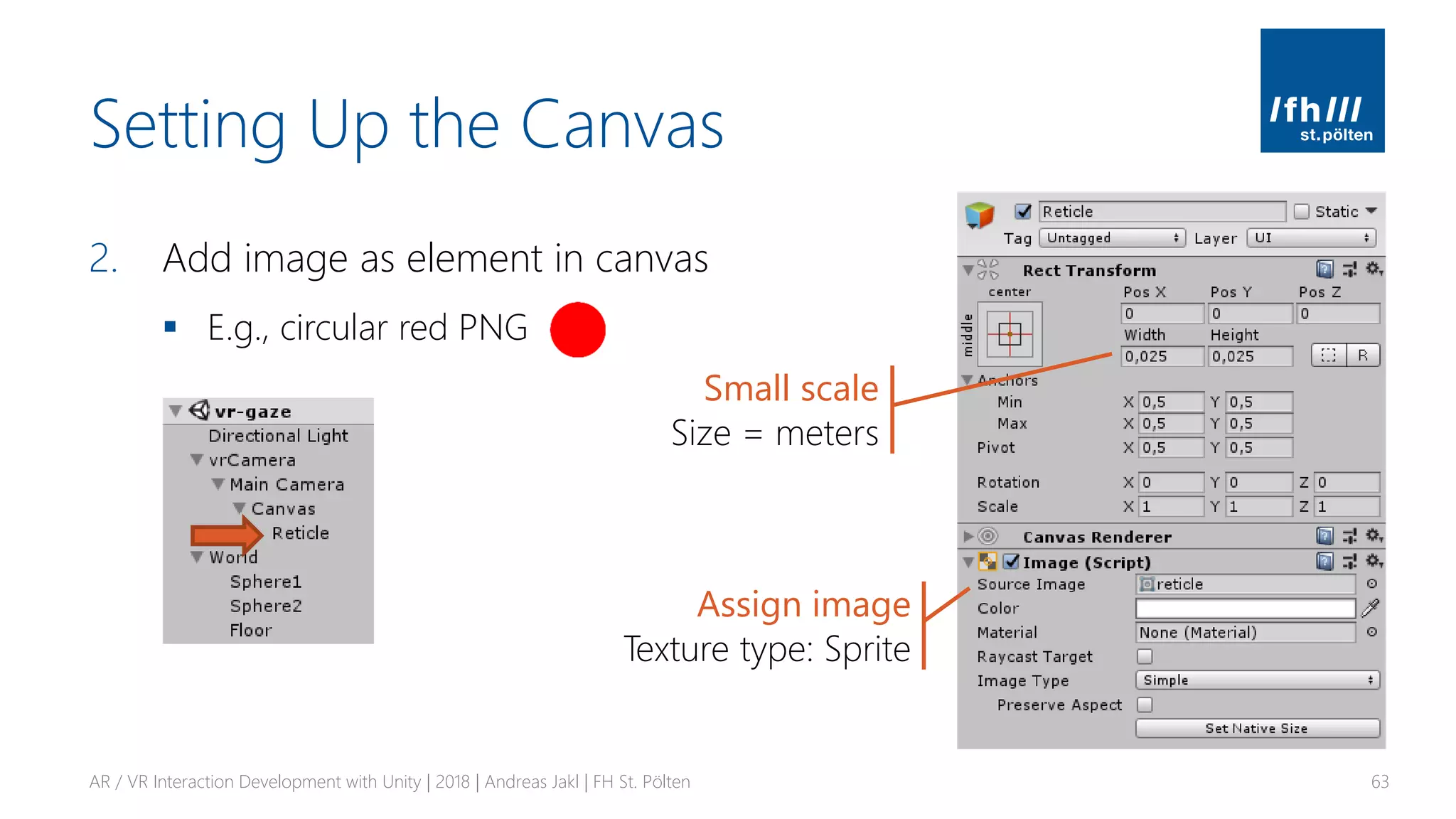

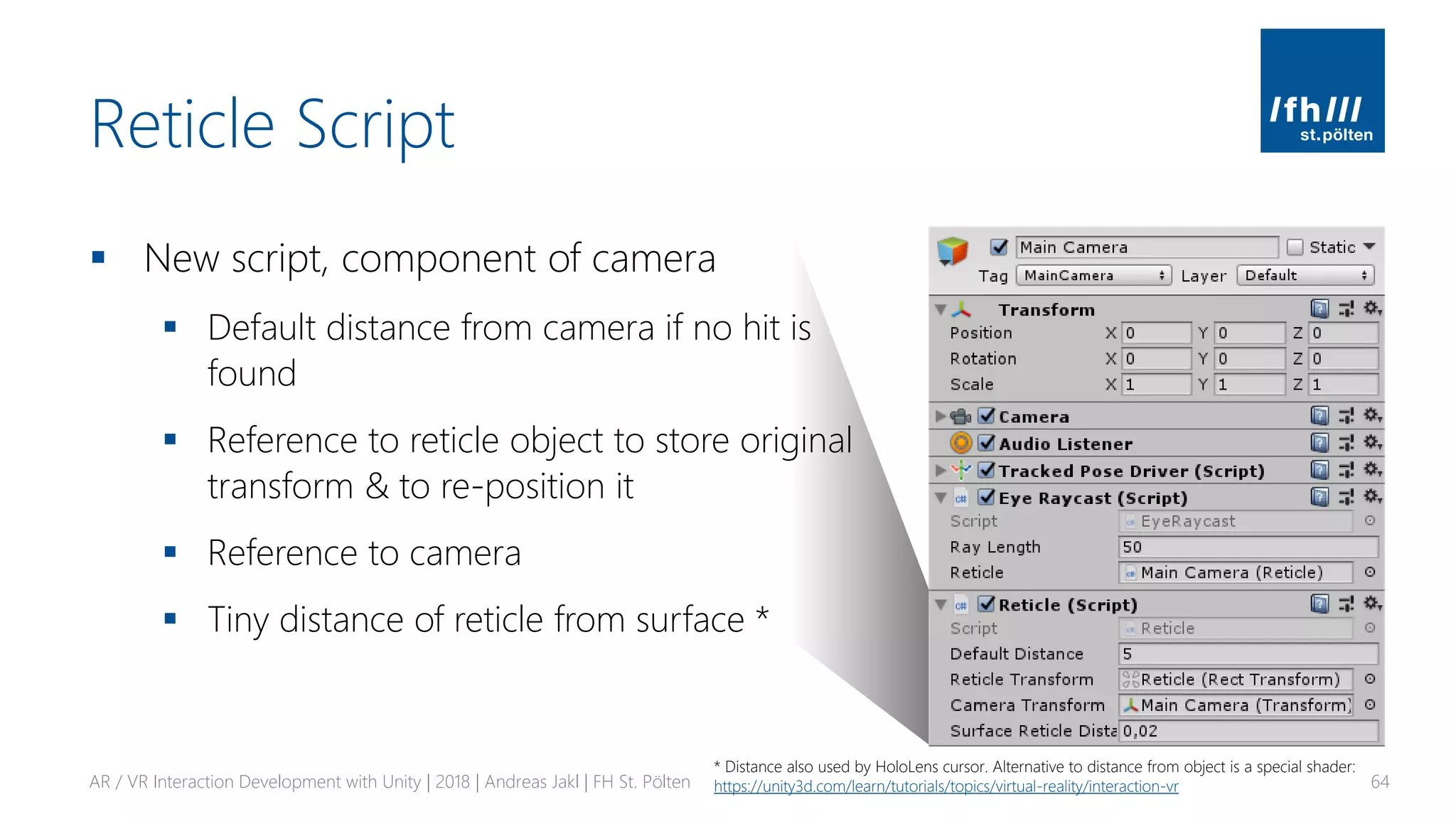

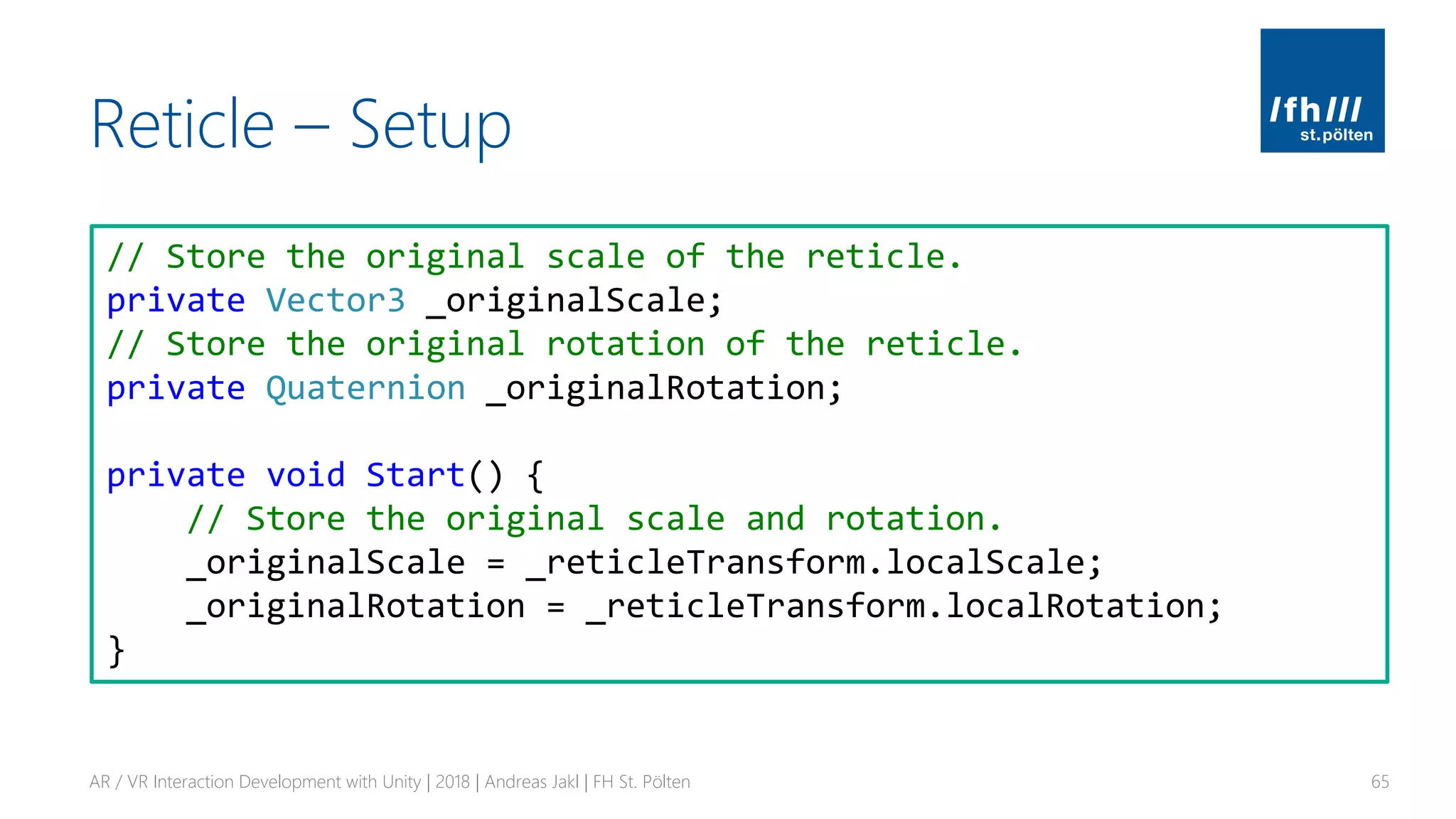

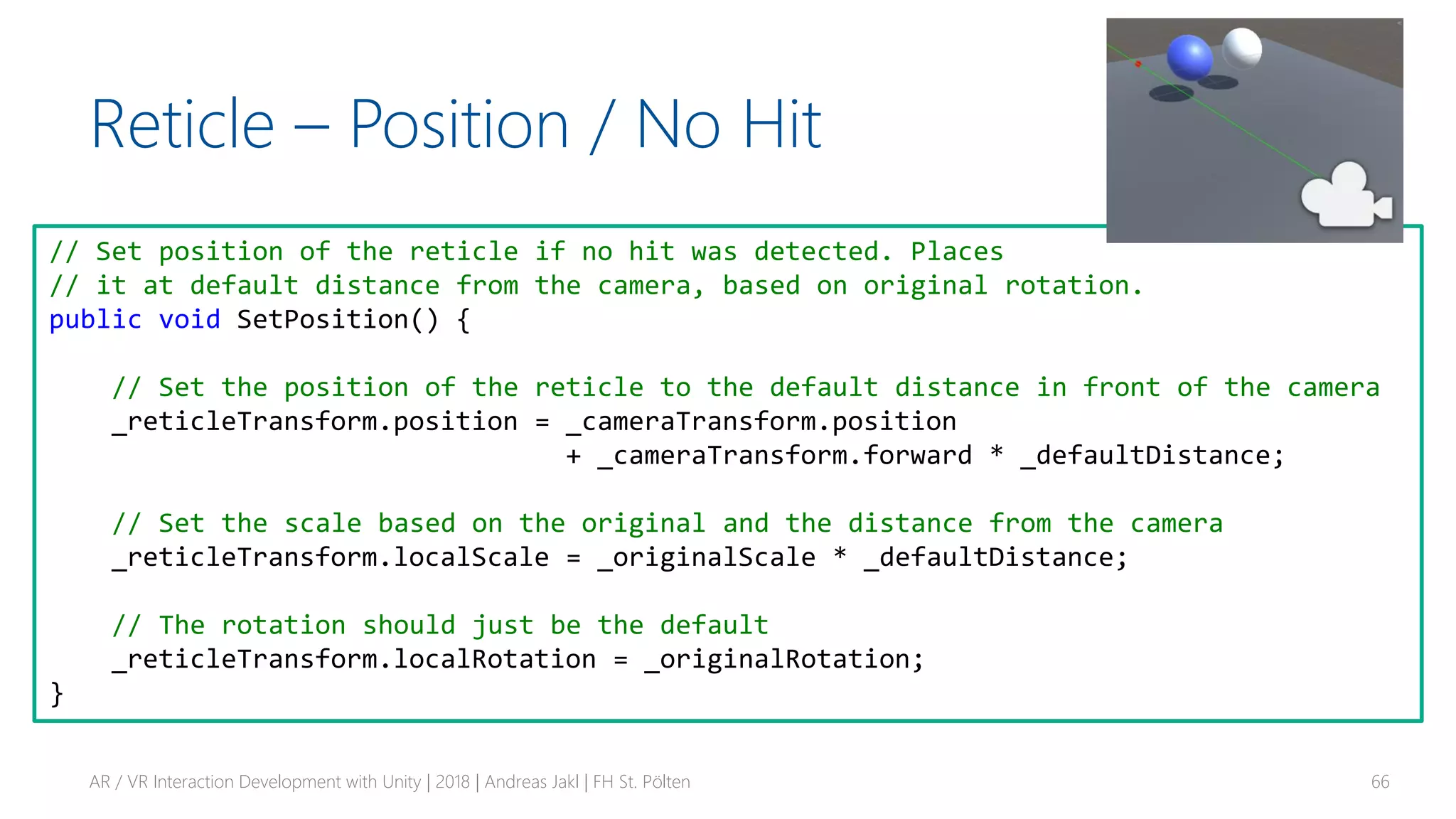

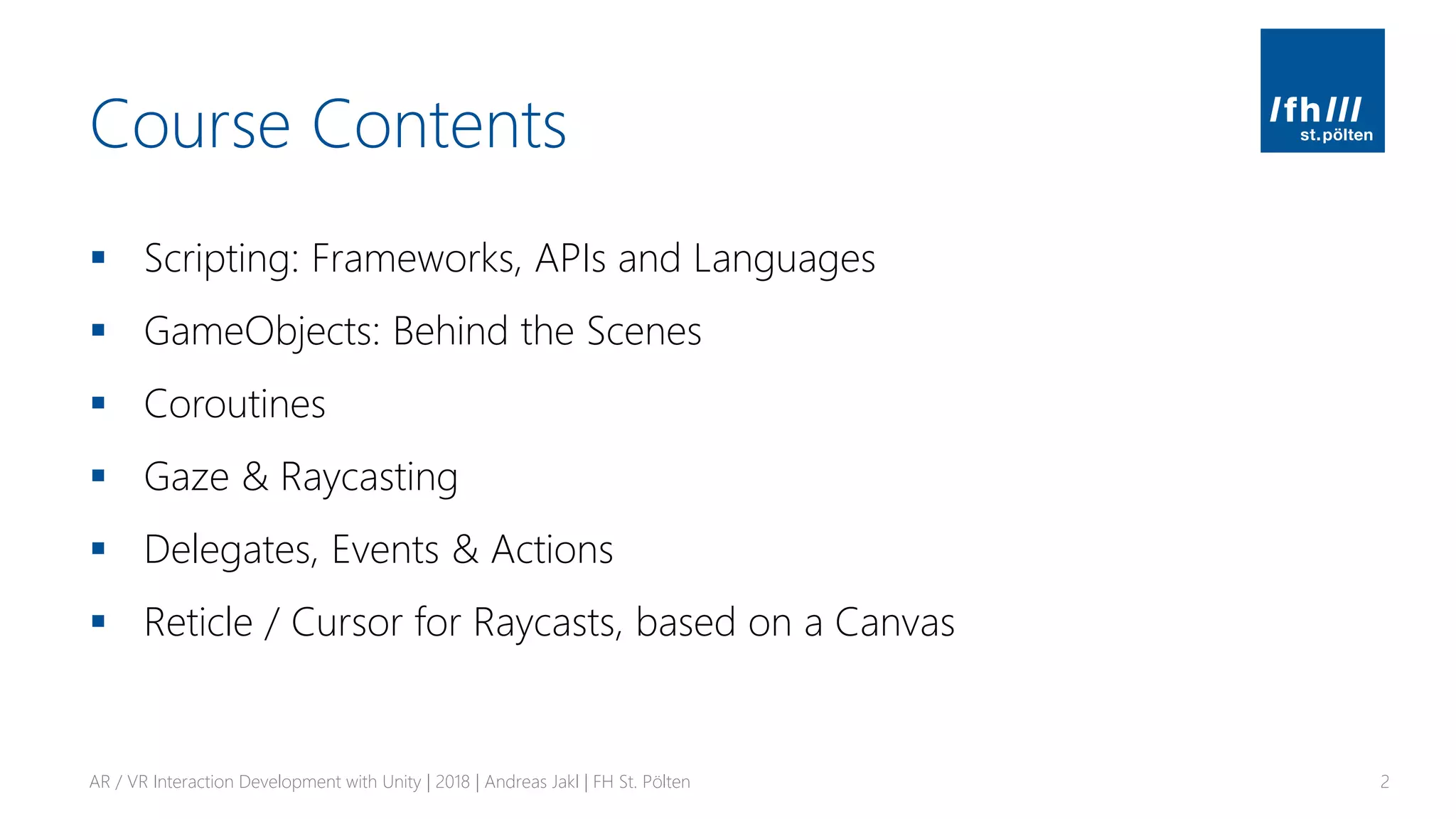

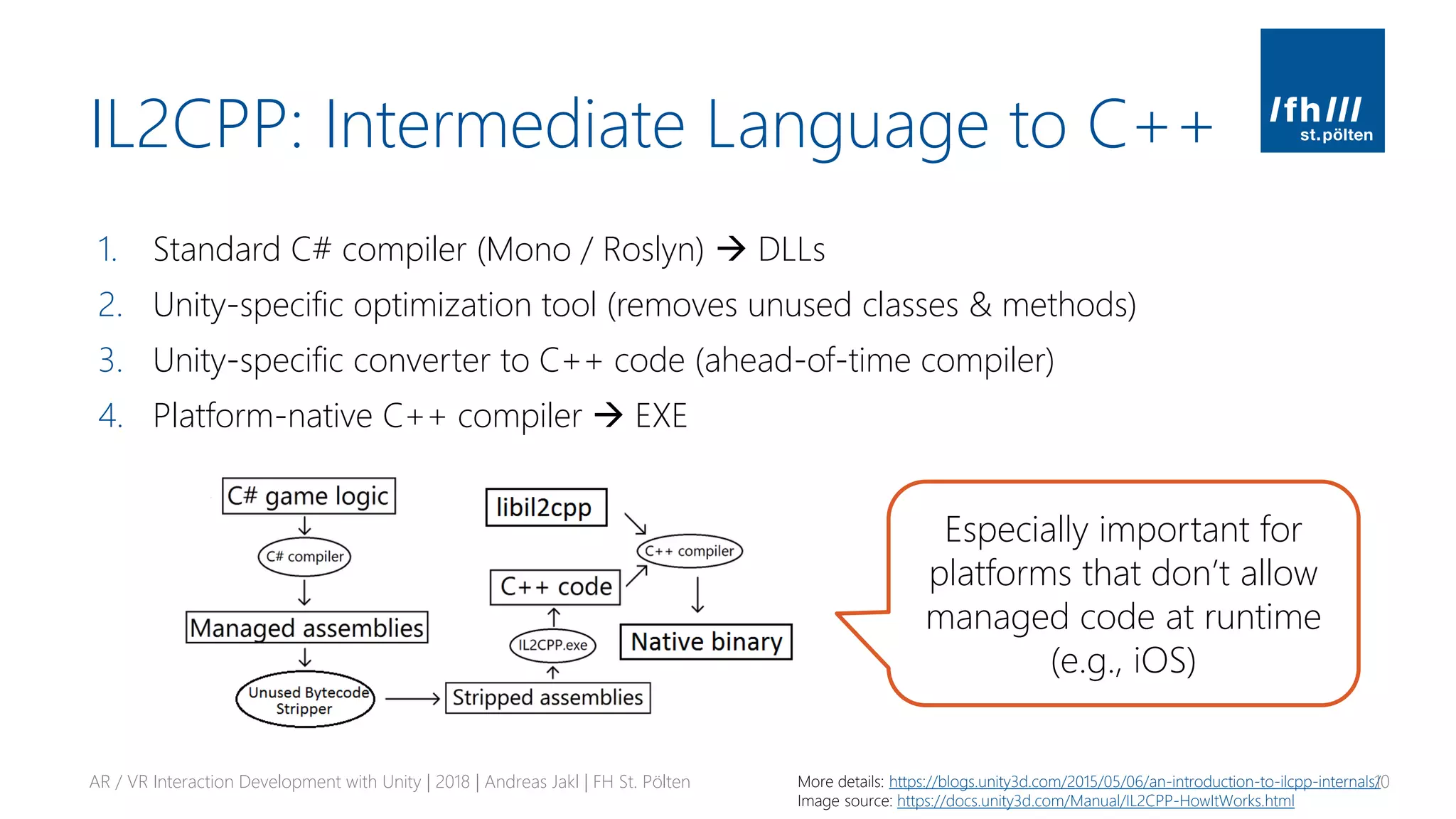

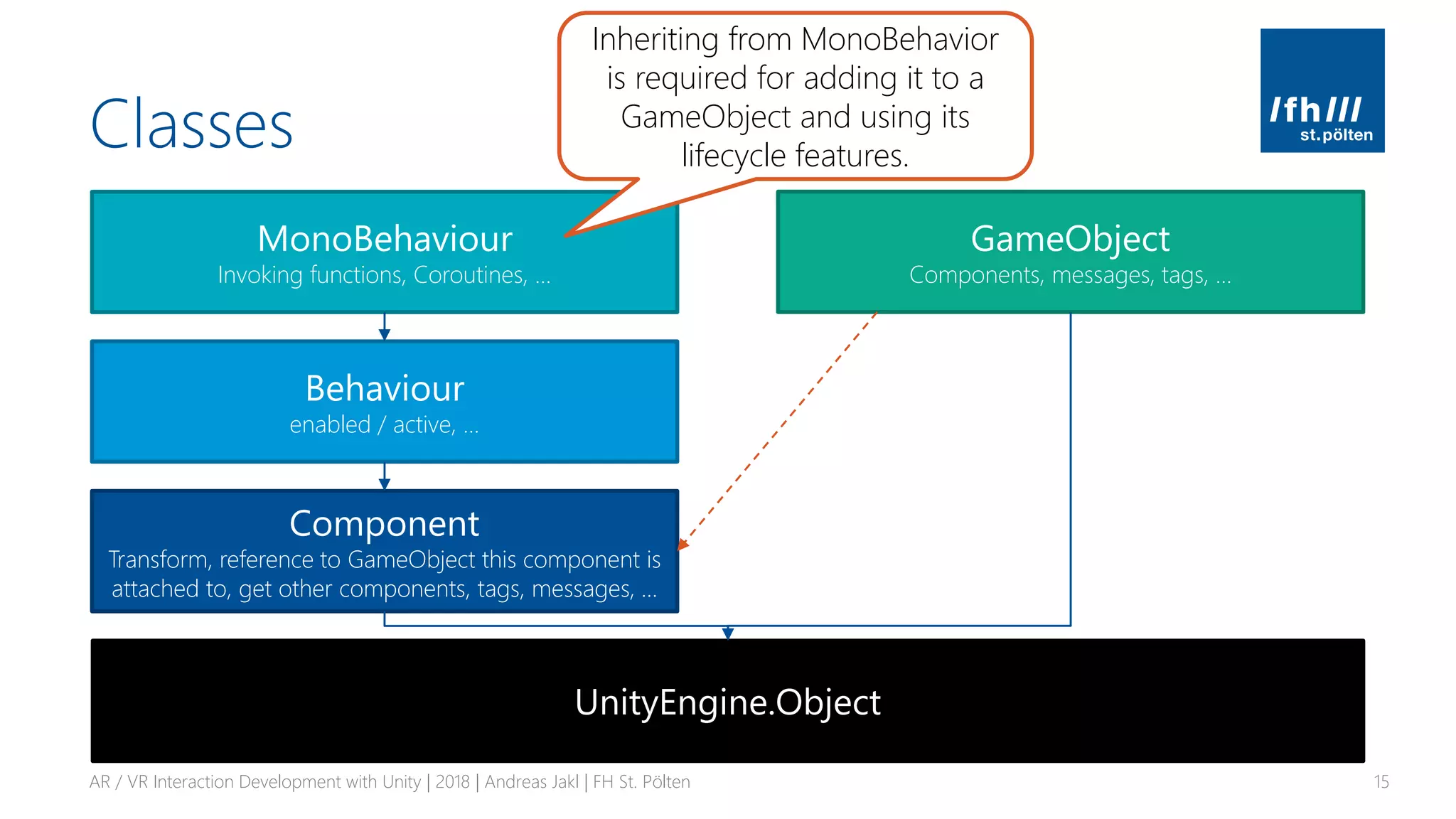

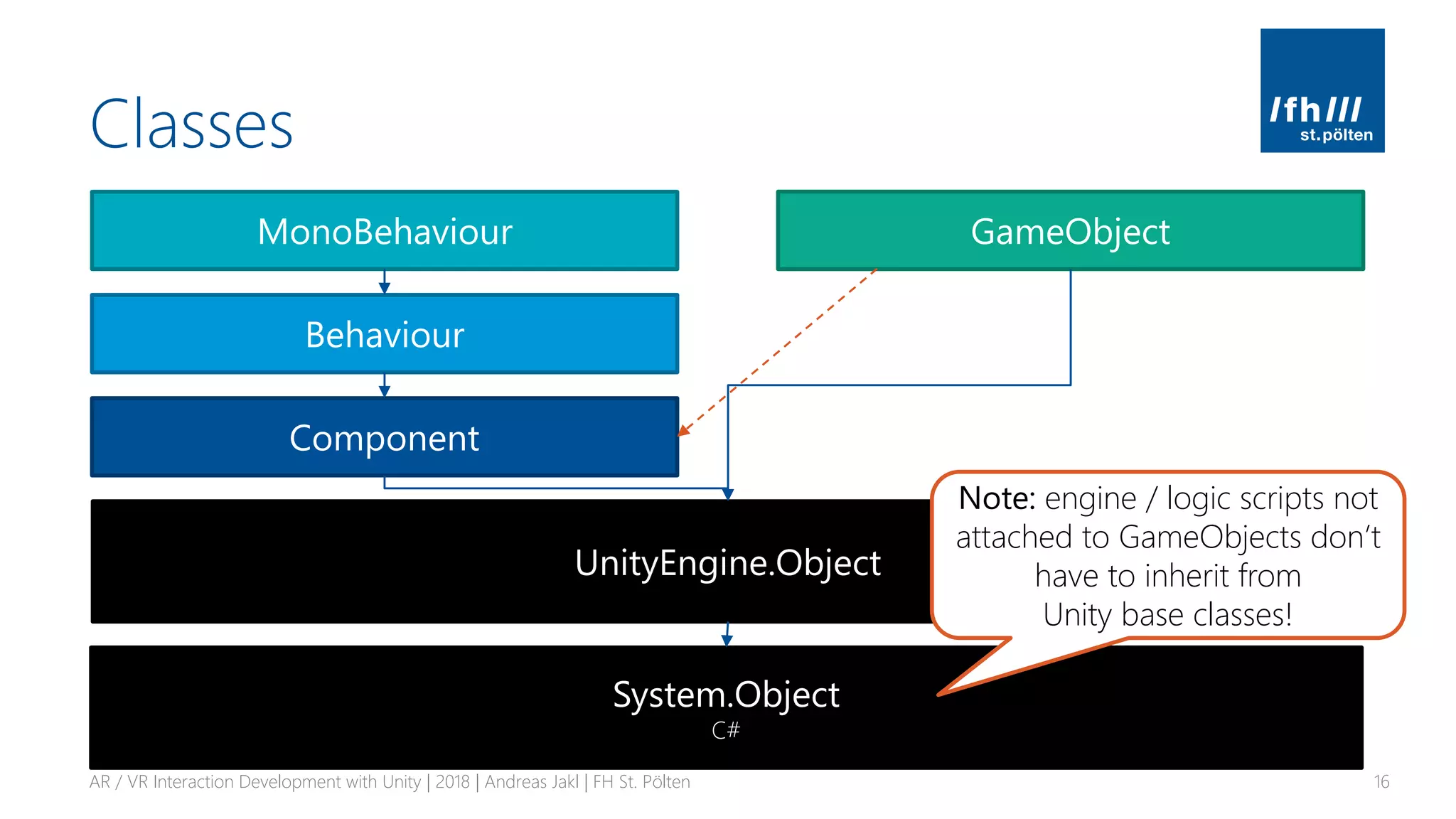

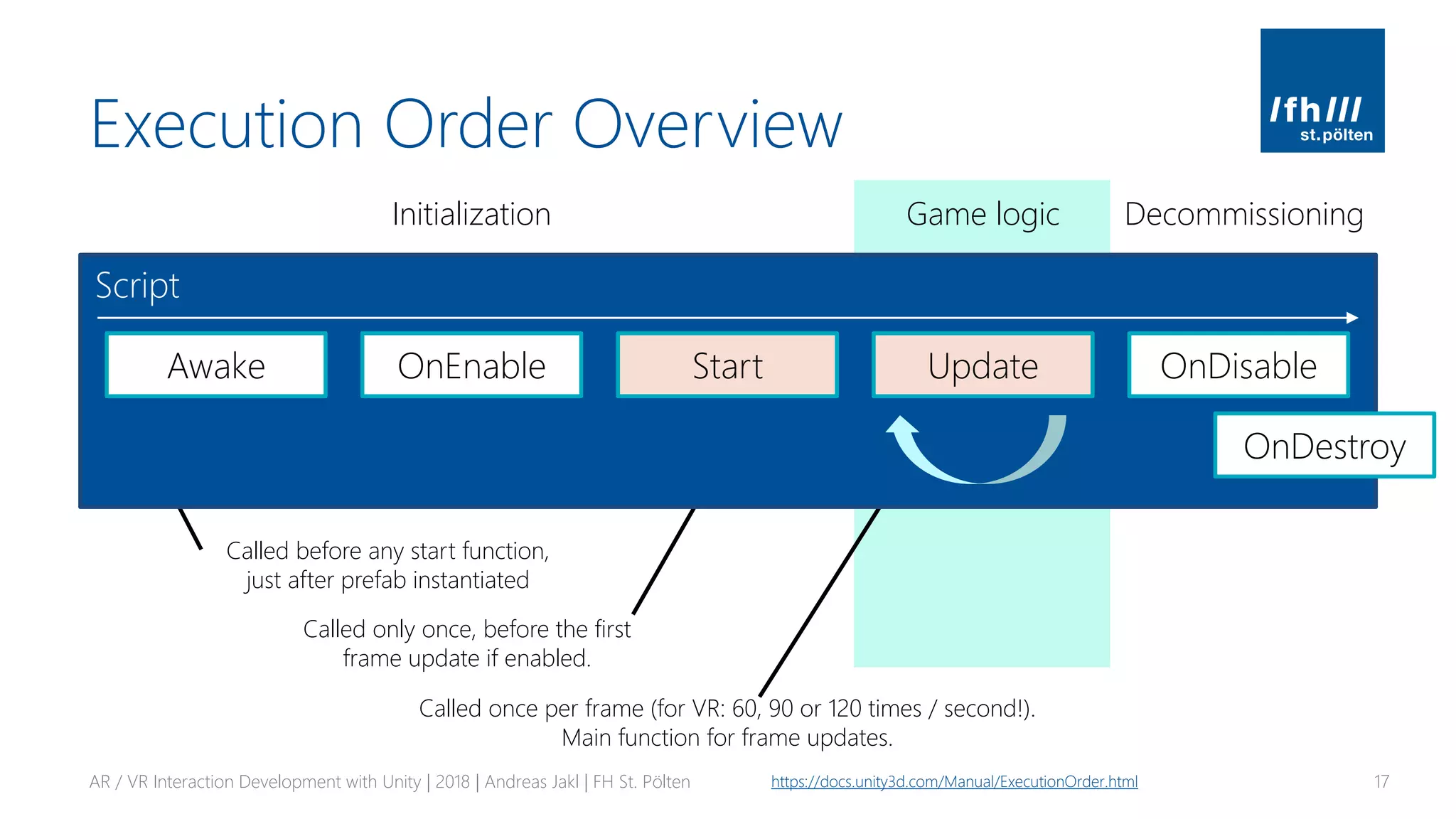

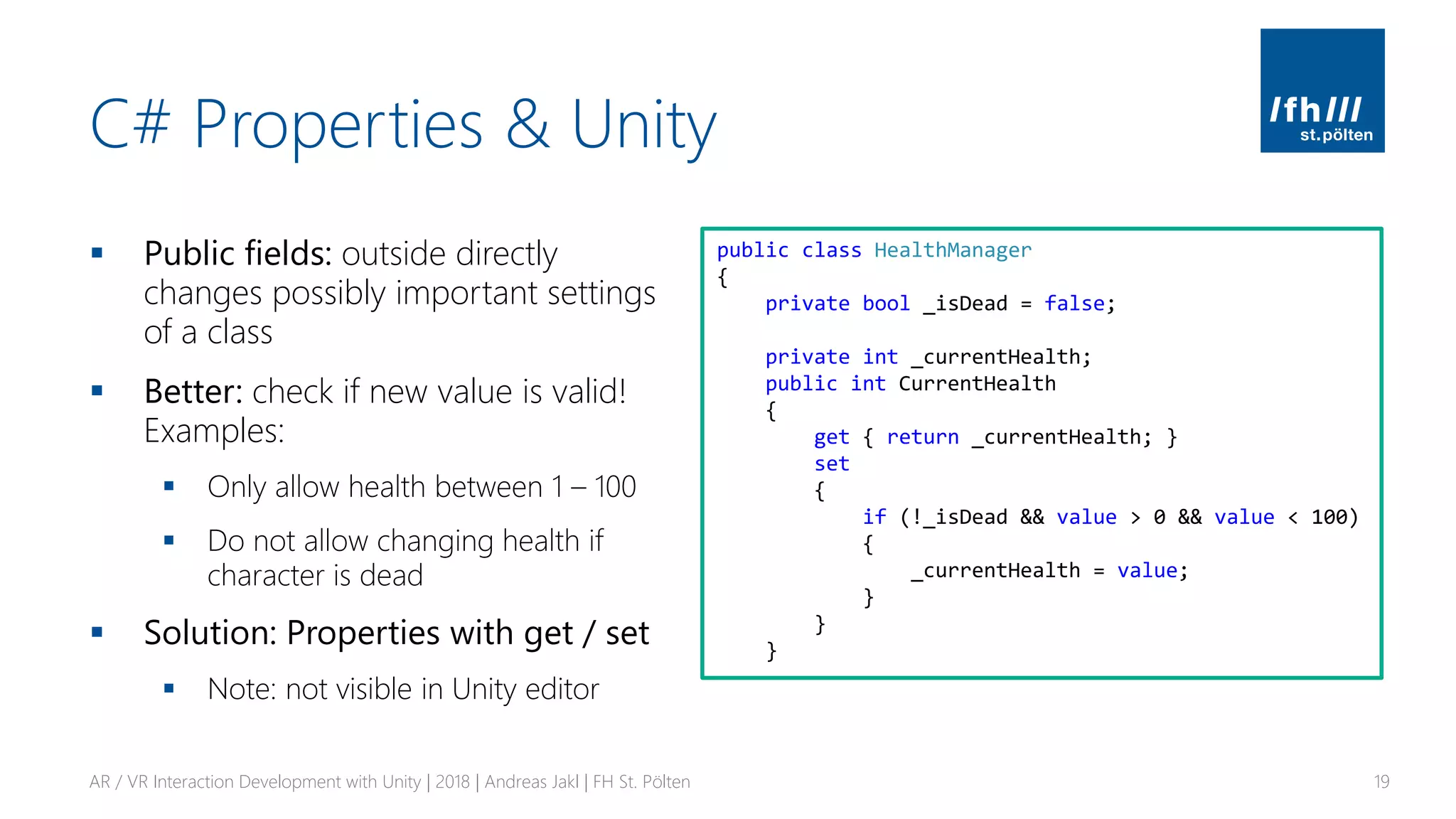

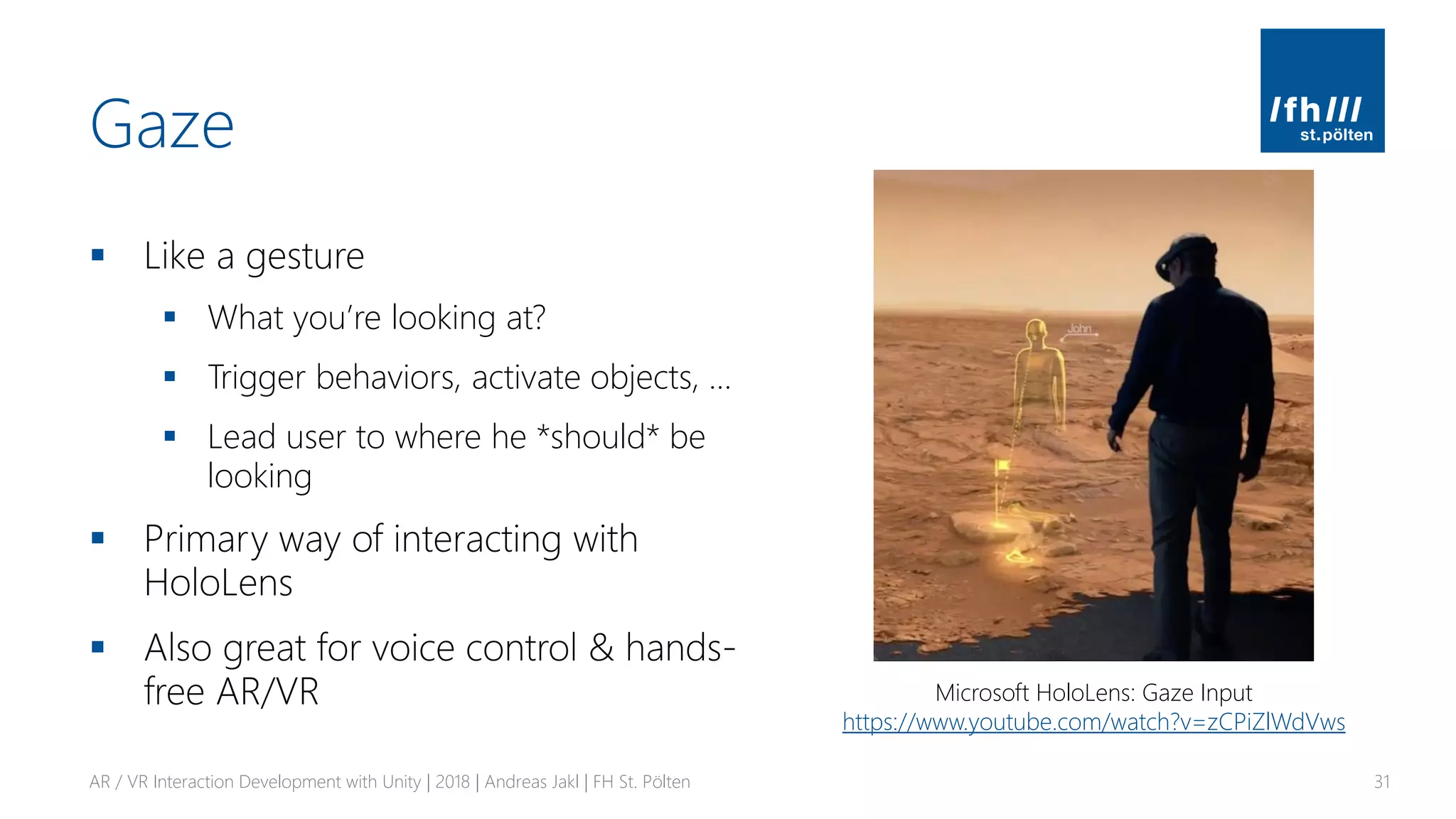

The document provides an overview of AR/VR interaction development with Unity. It focuses on scripting, GameObjects, and programming concepts such as coroutines, raycasting, and event systems. It also covers Unity's compatibility with different .NET Framework versions, how scripts behave, and how to implement gaze interactions. The content is aimed at students of a master's course at St. Pölten University of Applied Sciences.

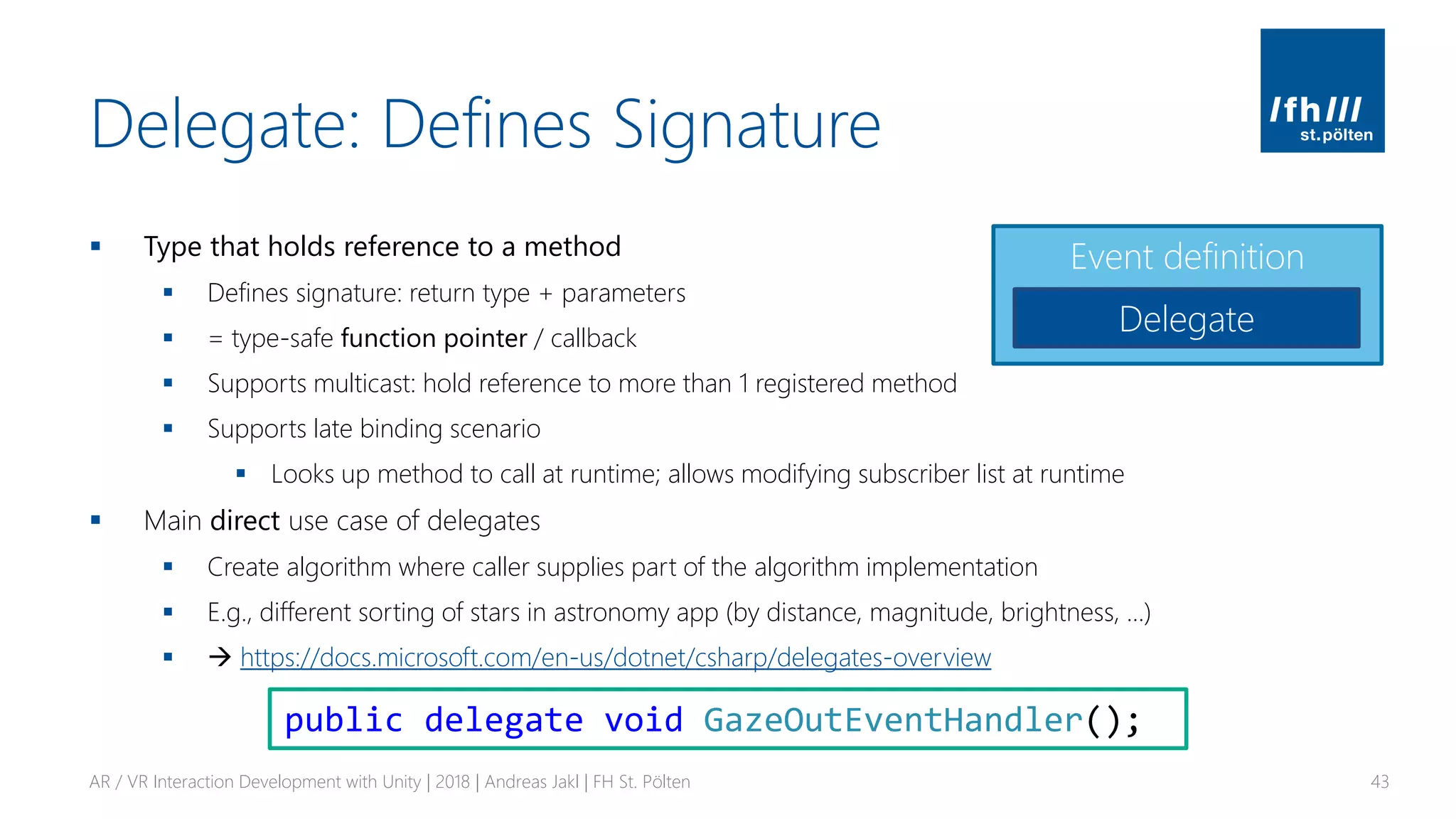

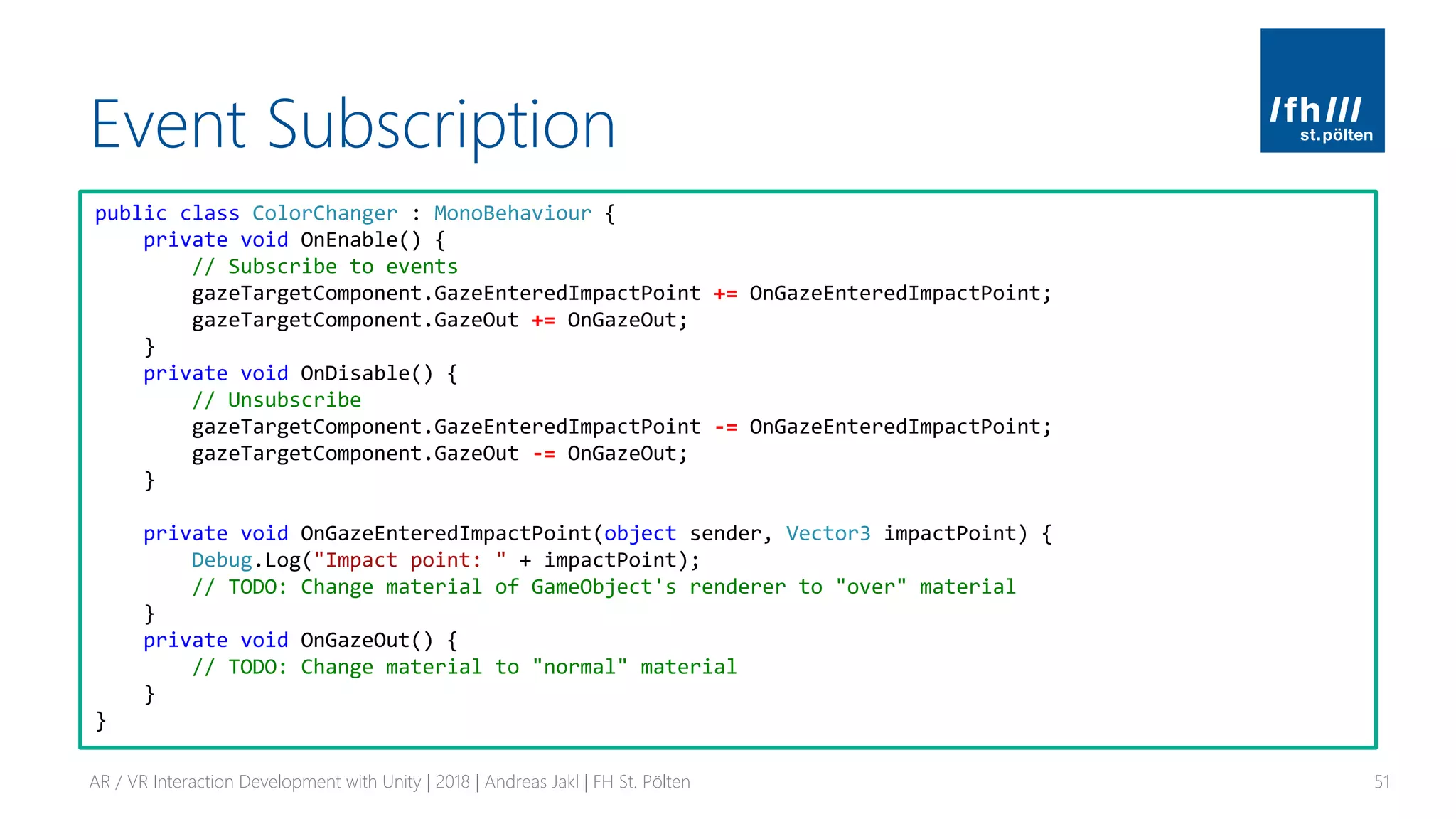

![Fields & References](https://image.slidesharecdn.com/ar-vrinteractiondevelopmentwithunity-181217160044/75/AR-VR-Interaction-Development-with-Unity-18-2048.jpg)

**Fields & References**

- Public fields (member variables)
  - Assignable through the Unity editor
- Private fields
  - Generally preferred: information encapsulation!
  - Add the [SerializeField] attribute to make them visible in the Unity editor

```csharp
using UnityEngine;

public class PlayerManager : MonoBehaviour
{
    // Public: visible and assignable in the Unity editor
    public int PlayerHealth;

    // Private, but shown in the Unity editor thanks to [SerializeField]
    [SerializeField]
    private bool _rechargeEnabled;

    // Private and not serialized: hidden from the Unity editor
    private int _rechargeCounter = 0;
}
```

Serialization in Unity: https://docs.unity3d.com/ScriptReference/SerializeField.html

AR / VR Interaction Development with Unity | 2018 | Andreas Jakl | FH St. Pölten 18
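The slide's code only shows value fields, not references. A small hypothetical example of both ideas together: a private `[SerializeField]` field stays editable in the Inspector, other scripts get read-only access through a property, and a serialized reference to another object's `Transform` is assigned by drag and drop in the editor. The class `HealthBar` and its fields `_maxHealth` and `_target` are illustrative names, not part of the course material.

```csharp
using UnityEngine;

// Hypothetical example class; all names are illustrative.
public class HealthBar : MonoBehaviour
{
    // Editable in the Inspector because of [SerializeField],
    // but other scripts cannot change it directly (encapsulation).
    [SerializeField] private int _maxHealth = 100;

    // Reference to another object, assigned by drag & drop in the Inspector.
    [SerializeField] private Transform _target;

    // Read-only access for other scripts.
    public int MaxHealth
    {
        get { return _maxHealth; }
    }

    private void Update()
    {
        // Hover above the assigned target, if one was set in the editor.
        if (_target != null)
        {
            transform.position = _target.position + Vector3.up * 2f;
        }
    }
}
```

Other scripts can read `healthBar.MaxHealth`, while the value itself is only changed in the Inspector or inside the class.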

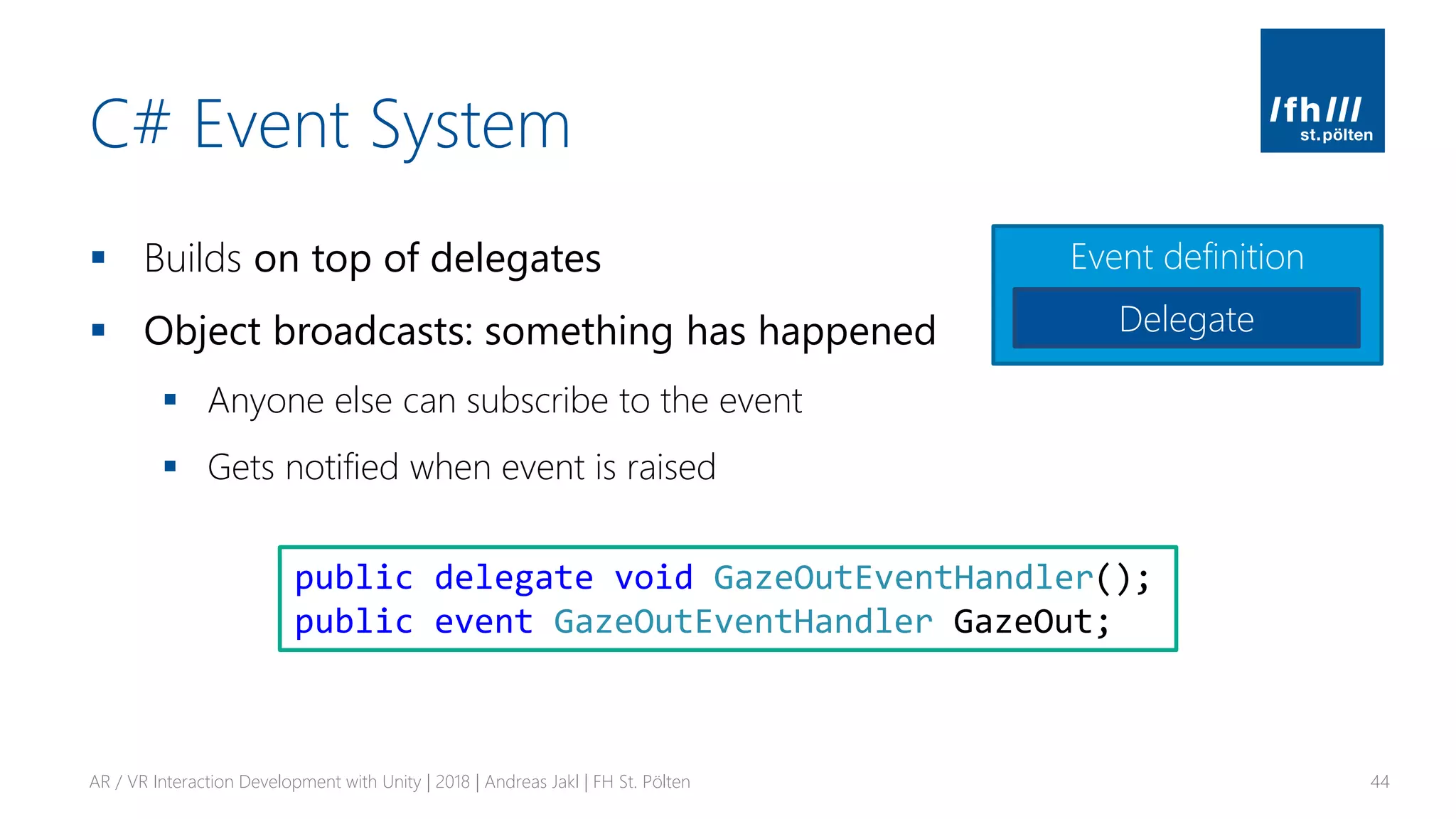

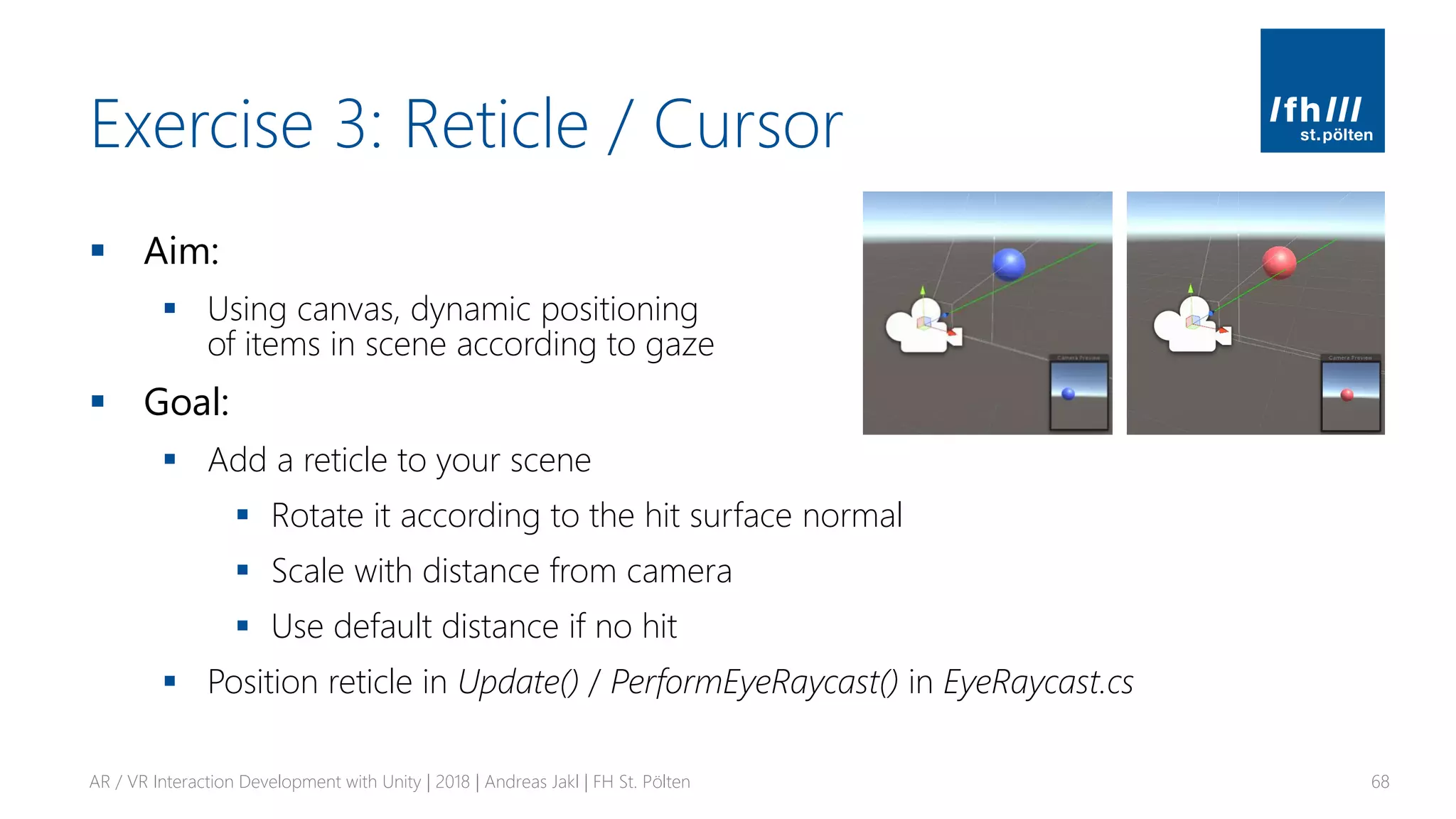

![Exercise 1: Scripts & Instantiating](https://image.slidesharecdn.com/ar-vrinteractiondevelopmentwithunity-181217160044/75/AR-VR-Interaction-Development-with-Unity-28-2048.jpg)

**Exercise 1: Scripts & Instantiating**

- Aim:
  - Use fields, references, public, private, lists, instantiate, frame update times
- Goal:
  - Instantiate a total of [x] game objects (e.g., cubes)
  - [x] is configurable in the Unity editor
  - Create a new cube after a random amount of time has passed and apply a random color. You can store a target time in a variable and check it in Update(), or use Invoke().
  - Fade the game object in by modifying its alpha with a coroutine. Make sure you use a material / shader that supports transparency. Test two variants:
    1. Use `yield return new WaitForSeconds(.05f)`
    2. Use `Time.deltaTime` & `yield return null`
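A minimal sketch of one possible solution, not the course's reference solution. It assumes a prefab with a `Renderer` whose material supports transparency (for example, the Standard shader with Rendering Mode set to Fade). The class name `CubeSpawner` and all fields (`_cubePrefab`, `_totalCubes`, `_minDelay`, `_maxDelay`, `_fadeDuration`, `_useWaitForSeconds`) are hypothetical. It uses `Invoke()` for the random delay; checking a stored target time in `Update()` would work as well.

```csharp
using System.Collections;
using System.Collections.Generic;
using UnityEngine;

// Hypothetical sketch for Exercise 1: all names and default values are illustrative.
public class CubeSpawner : MonoBehaviour
{
    // Prefab to instantiate (e.g., a cube), assigned in the Unity editor.
    // Assumption: its material supports transparency (e.g., Standard shader, Fade mode).
    [SerializeField] private GameObject _cubePrefab;

    // [x]: total number of cubes, configurable in the Unity editor.
    [SerializeField] private int _totalCubes = 10;

    // Range of the random delay between two spawns, in seconds.
    [SerializeField] private float _minDelay = 0.5f;
    [SerializeField] private float _maxDelay = 2f;

    // Fade-in duration in seconds, and which coroutine variant to use.
    [SerializeField] private float _fadeDuration = 1f;
    [SerializeField] private bool _useWaitForSeconds = true;

    private readonly List<GameObject> _spawnedCubes = new List<GameObject>();

    private void Start()
    {
        if (_totalCubes > 0) ScheduleNextSpawn();
    }

    private void ScheduleNextSpawn()
    {
        // Invoke() calls the named method once after the given delay.
        Invoke(nameof(SpawnCube), Random.Range(_minDelay, _maxDelay));
    }

    private void SpawnCube()
    {
        // Assumption: spread the cubes randomly around the spawner.
        Vector3 position = transform.position + Random.insideUnitSphere * 3f;
        GameObject cube = Instantiate(_cubePrefab, position, Quaternion.identity);
        _spawnedCubes.Add(cube);

        // Accessing .material creates a per-object material instance,
        // so every cube gets its own random color.
        Material material = cube.GetComponent<Renderer>().material;
        Color color = Random.ColorHSV();
        color.a = 0f; // start fully transparent
        material.color = color;

        StartCoroutine(_useWaitForSeconds
            ? FadeInWithWaitForSeconds(material)
            : FadeInWithDeltaTime(material));

        if (_spawnedCubes.Count < _totalCubes) ScheduleNextSpawn();
    }

    // Variant 1: fixed alpha step, resumed roughly every 0.05 s.
    private IEnumerator FadeInWithWaitForSeconds(Material material)
    {
        const float interval = 0.05f;
        float step = interval / Mathf.Max(_fadeDuration, interval);
        Color color = material.color;
        while (color.a < 1f)
        {
            color.a = Mathf.Min(1f, color.a + step);
            material.color = color;
            yield return new WaitForSeconds(interval);
        }
    }

    // Variant 2: alpha follows the elapsed frame time, updated every frame.
    private IEnumerator FadeInWithDeltaTime(Material material)
    {
        Color color = material.color;
        float elapsed = 0f;
        while (elapsed < _fadeDuration)
        {
            elapsed += Time.deltaTime;
            color.a = Mathf.Clamp01(elapsed / _fadeDuration);
            material.color = color;
            yield return null; // resume in the next frame
        }
        color.a = 1f; // make sure the cube ends fully opaque
        material.color = color;
    }
}
```

On the difference between the variants: variant 1 adds a fixed amount of alpha per resume, and `WaitForSeconds` can only resume on a frame boundary, so at low frame rates the fade takes longer than `_fadeDuration`. Variant 2 scales progress by `Time.deltaTime`, so the fade takes roughly `_fadeDuration` seconds regardless of frame rate.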

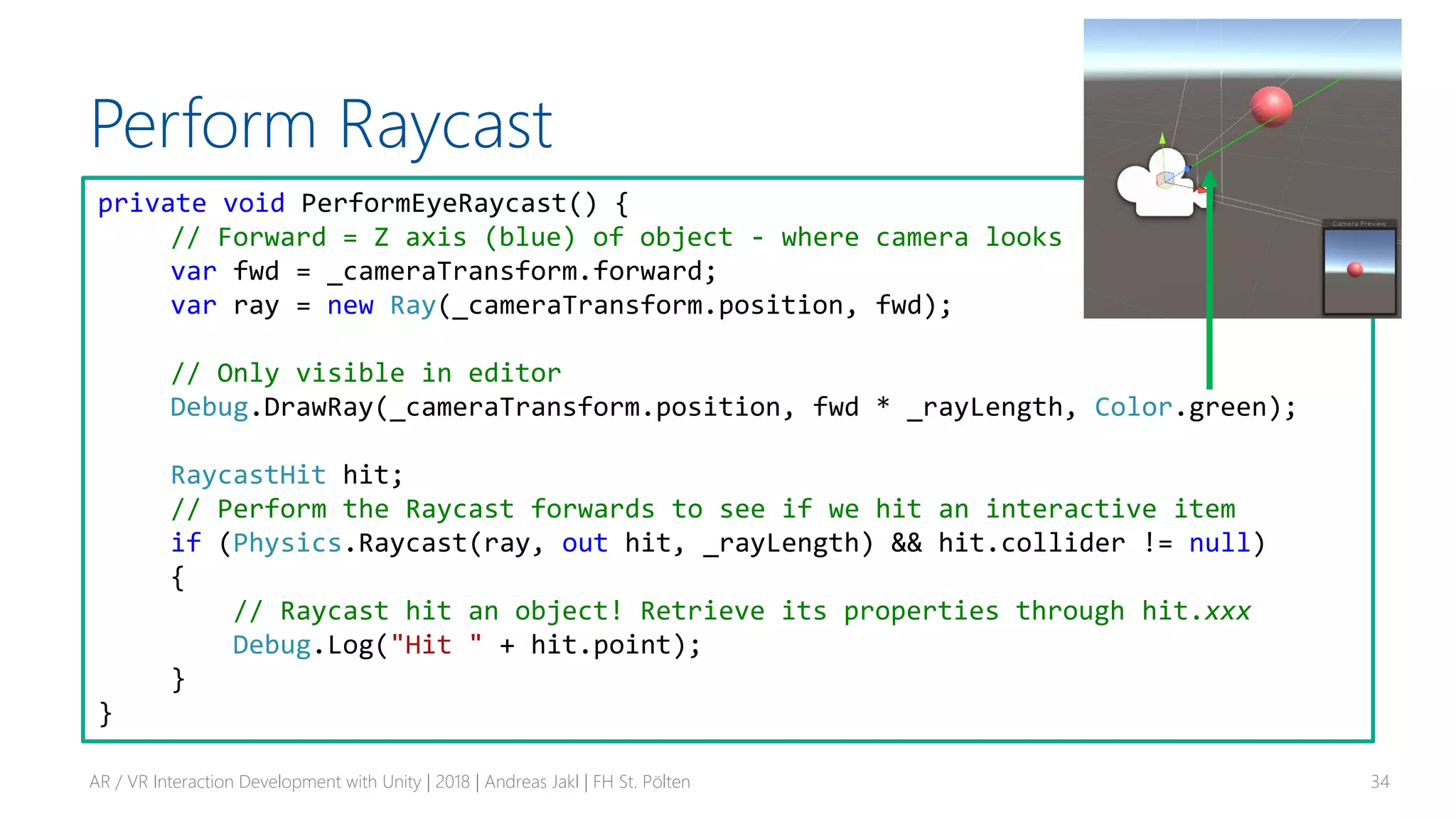

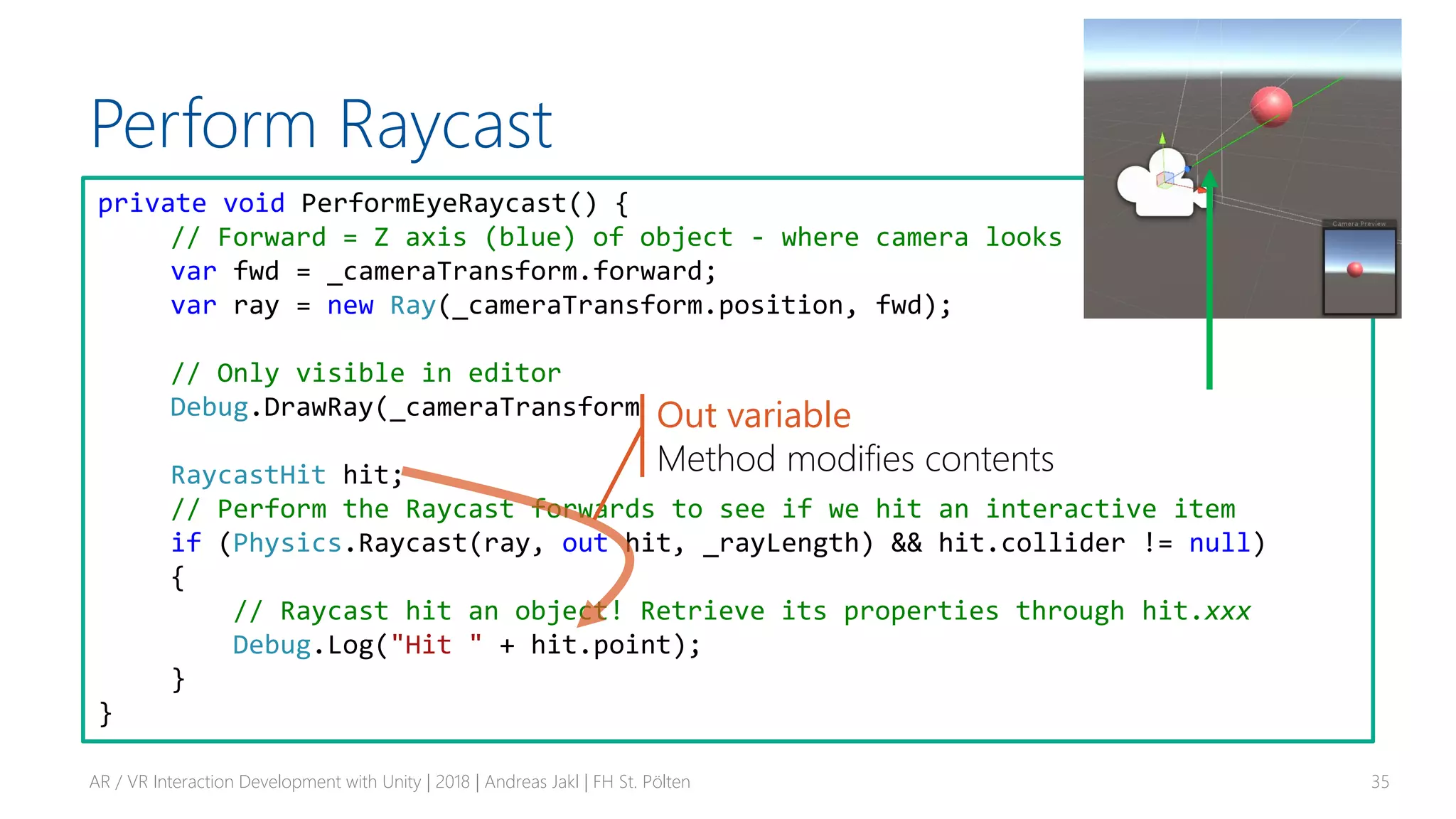

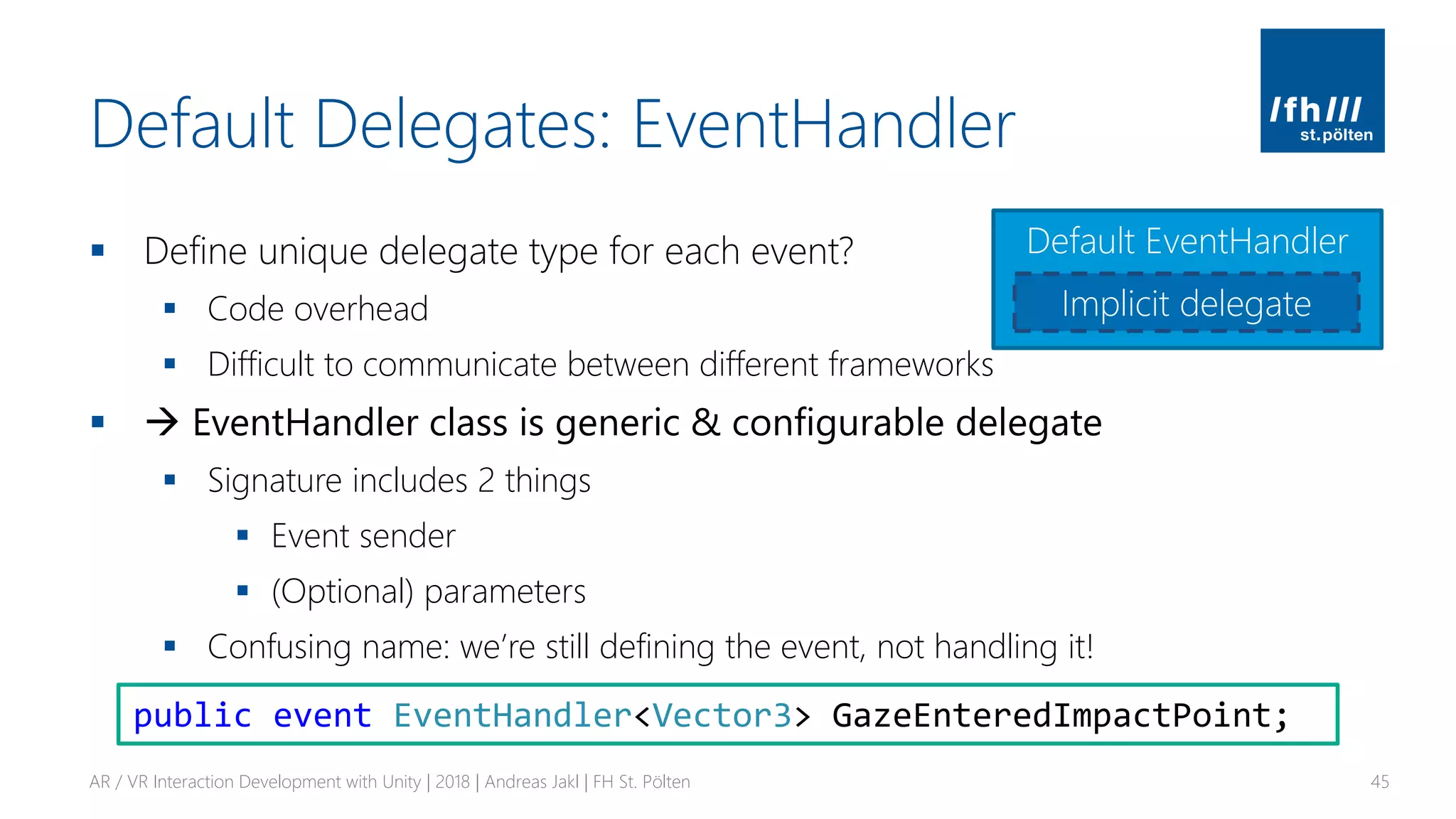

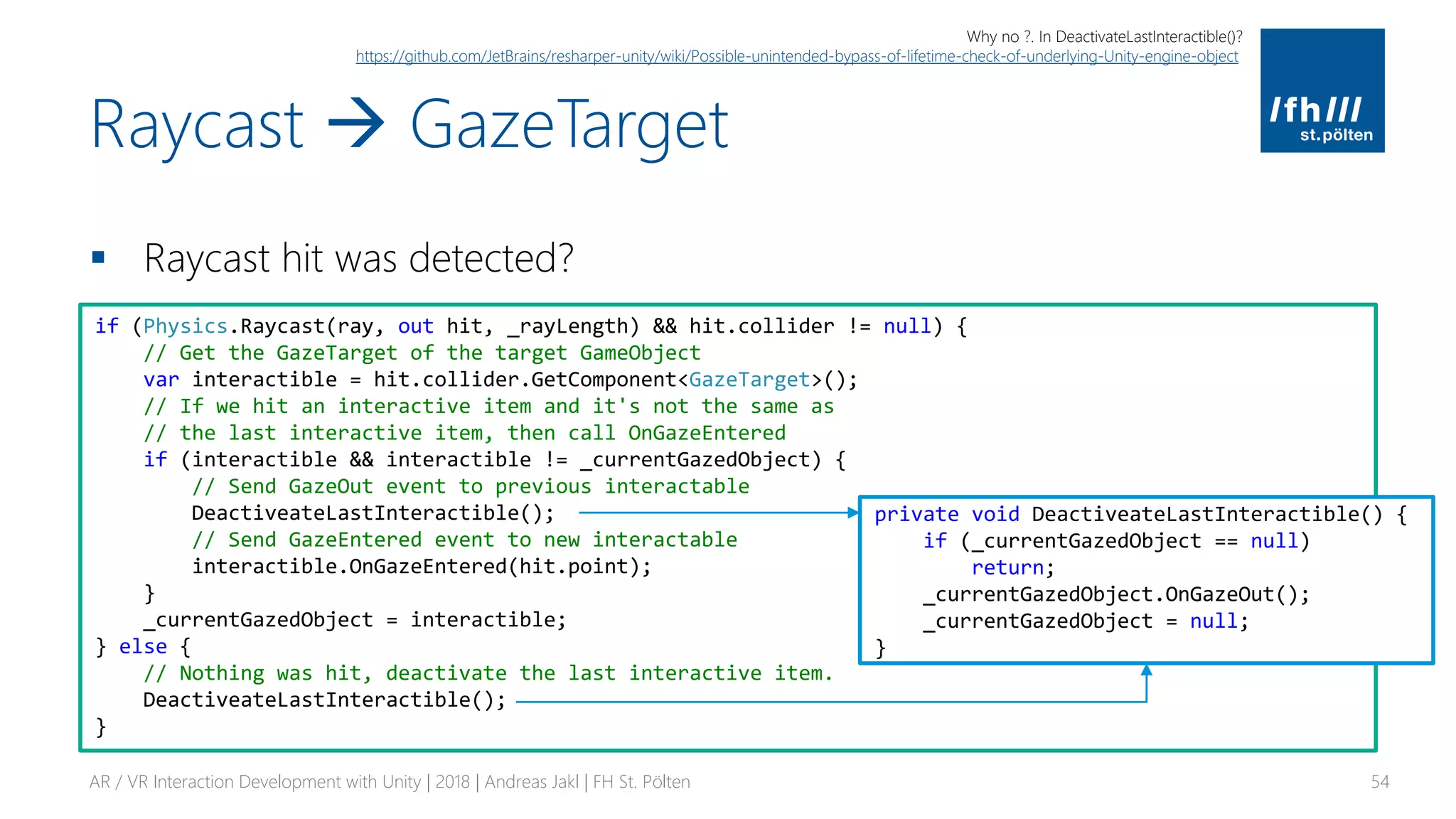

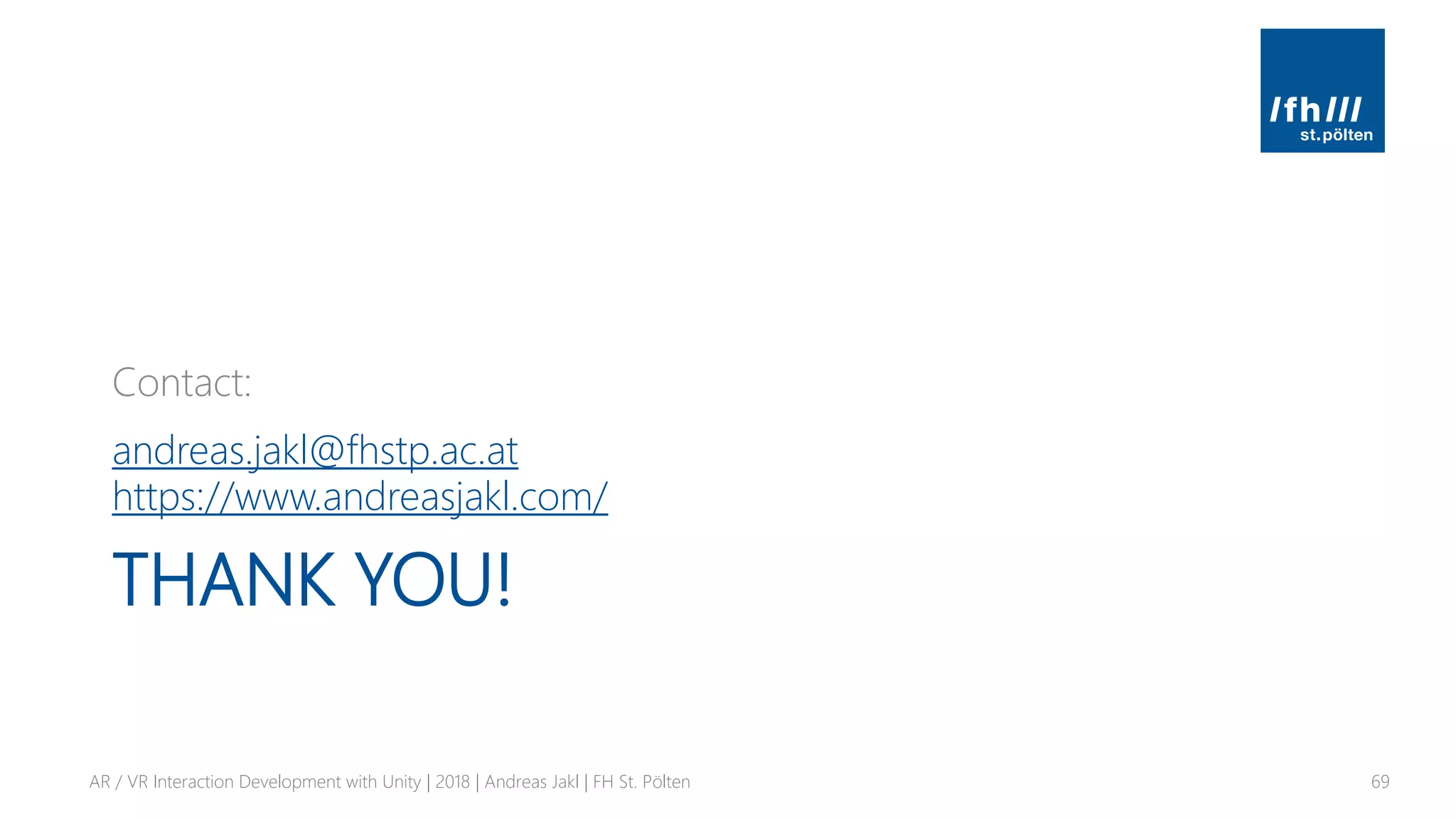

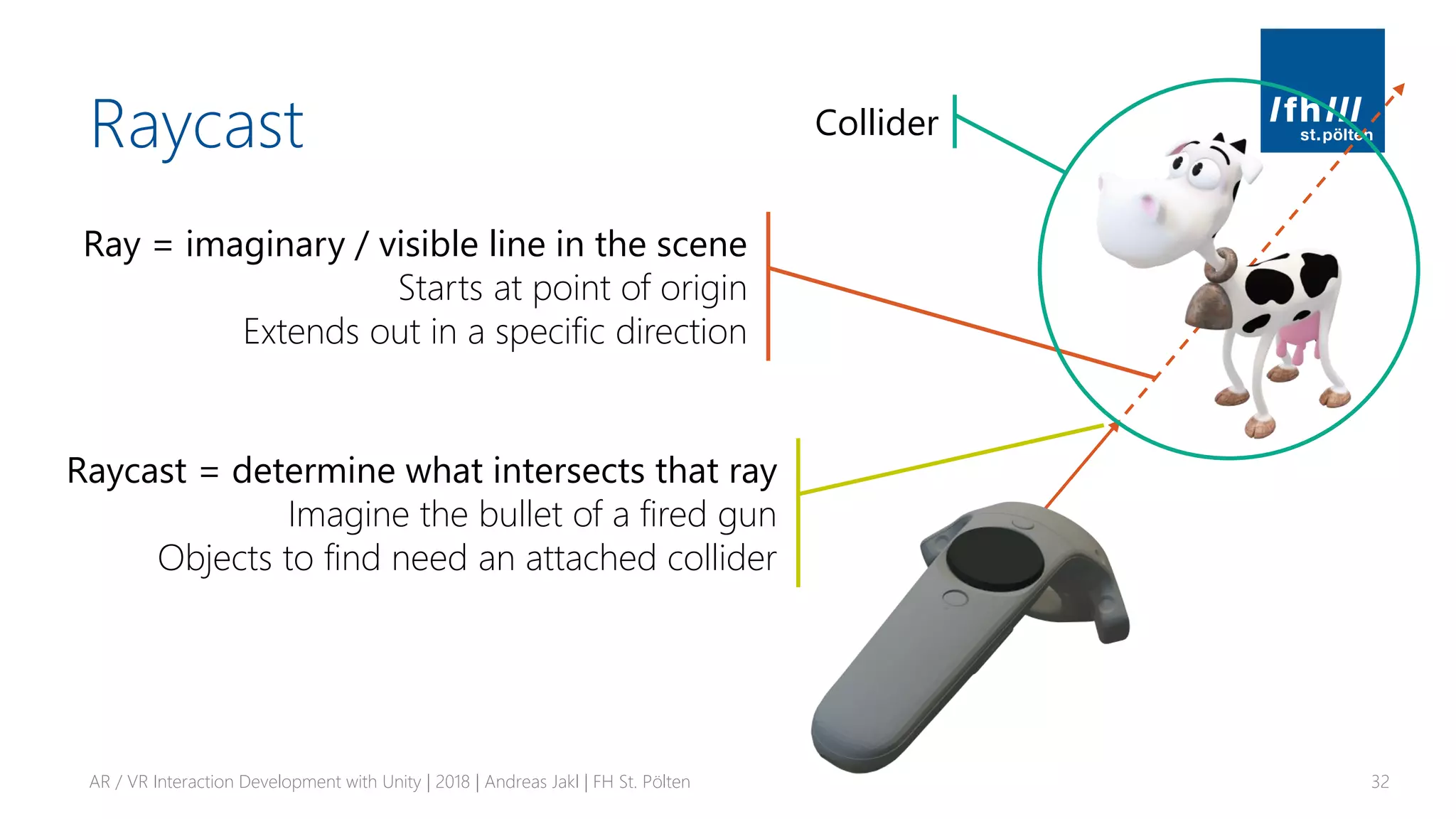

![Raycasting](https://image.slidesharecdn.com/ar-vrinteractiondevelopmentwithunity-181217160044/75/AR-VR-Interaction-Development-with-Unity-33-2048.jpg)

**Raycasting**

Script as a component of the raycasting camera:

```csharp
using UnityEngine;

public class EyeRaycast : MonoBehaviour
{
    private Transform _cameraTransform;

    [SerializeField] private float _rayLength = 50f;

    void Start()
    {
        // Retrieve transform of the camera component
        // (which is on the same GameObject as this script)
        _cameraTransform = GetComponent<Camera>().transform;
    }
}
```

Performance tip: cache the results of GetComponent calls you need every frame.

- https://unity3d.com/learn/tutorials/topics/performance-optimization/optimizing-scripts-unity-games
- http://chaoscultgames.com/2014/03/unity3d-mythbusting-performance/
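The slide's `EyeRaycast` script stops after `Start()`. One possible way to continue it, sketched under our own assumptions rather than taken from the course, is an `Update()` that casts a gaze ray along the camera's forward direction every frame with `Physics.Raycast`, using the transform cached in `Start()`. The debug drawing and logging are illustrative additions.

```csharp
using UnityEngine;

// Sketch: EyeRaycast extended with a per-frame gaze raycast (assumed continuation).
public class EyeRaycast : MonoBehaviour
{
    private Transform _cameraTransform;

    [SerializeField] private float _rayLength = 50f;

    private void Start()
    {
        // Cache the camera transform once instead of calling GetComponent every frame.
        _cameraTransform = GetComponent<Camera>().transform;
    }

    private void Update()
    {
        // Ray from the camera position along the viewing direction (gaze).
        Ray ray = new Ray(_cameraTransform.position, _cameraTransform.forward);

        // Visualize the ray in the Scene view (editor only).
        Debug.DrawRay(ray.origin, ray.direction * _rayLength, Color.green);

        RaycastHit hit;
        if (Physics.Raycast(ray, out hit, _rayLength))
        {
            // Only objects with a Collider component can be hit.
            Debug.Log("Gazing at " + hit.collider.gameObject.name);
        }
    }
}
```

`Physics.Raycast` only reports objects that have a `Collider`. Logging on every frame is for demonstration only; a real gaze interaction would typically react only when the hit object changes.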