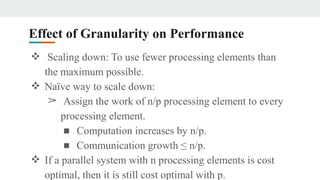

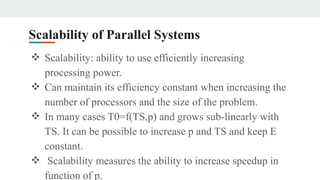

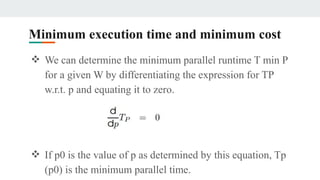

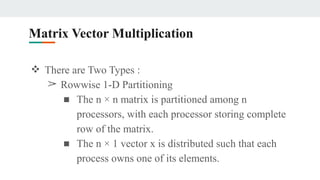

This document discusses analytical models for parallel programs. It covers sources of overhead in parallel programs, such as load imbalance and dependencies, and defines performance metrics, including execution time and the overhead function. It then discusses how granularity affects performance and how well parallel systems scale. Worked examples optimize dense matrix algorithms, namely matrix-vector and matrix-matrix multiplication. The goal is to determine minimum execution times and costs by analyzing parallel runtime as a function of problem size and number of processors.
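As a rough illustration of the performance metrics mentioned above, the sketch below computes the standard textbook quantities from a serial runtime, a parallel runtime, and a processor count. The function name `parallel_metrics` and its parameter names are hypothetical, chosen only for this example. The formulas are the usual definitions (total overhead as `p * T_P - T_S`, speedup as `T_S / T_P`, efficiency as speedup divided by `p`, and cost as `p * T_P`), which are assumed here to match the ones the document uses.

```python
def parallel_metrics(t_serial, t_parallel, p):
    """Return common performance metrics for a parallel run.

    t_serial   -- runtime of the best serial algorithm (T_S)
    t_parallel -- runtime on p processors (T_P)
    p          -- number of processors
    """
    if p < 1 or t_serial <= 0 or t_parallel <= 0:
        raise ValueError("p must be >= 1 and runtimes must be positive")

    cost = p * t_parallel            # processor-time product, p * T_P
    overhead = cost - t_serial       # total overhead, T_o = p * T_P - T_S
    speedup = t_serial / t_parallel  # S = T_S / T_P
    efficiency = speedup / p         # E = S / p

    return {
        "overhead": overhead,
        "speedup": speedup,
        "efficiency": efficiency,
        "cost": cost,
    }


if __name__ == "__main__":
    # Illustrative numbers only, not measurements from the document.
    print(parallel_metrics(t_serial=100.0, t_parallel=40.0, p=4))
```

With these illustrative inputs the run is 2.5 times faster than serial on 4 processors, so efficiency is 0.625 and the remaining processor time (60 units) is overhead.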