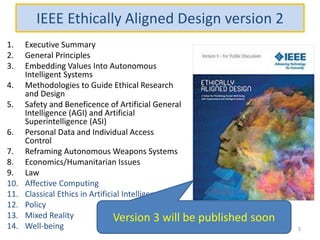

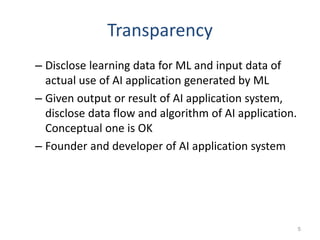

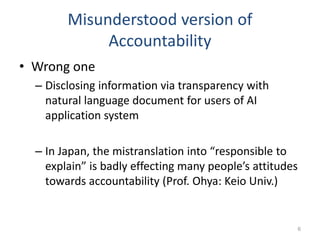

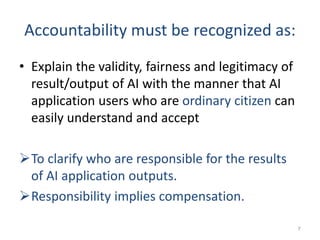

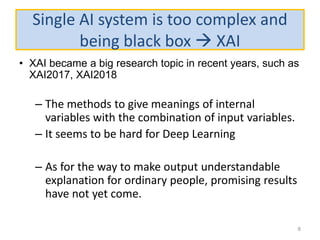

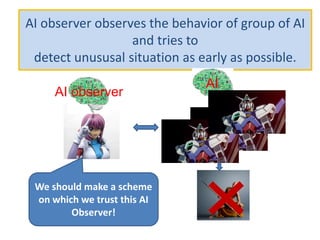

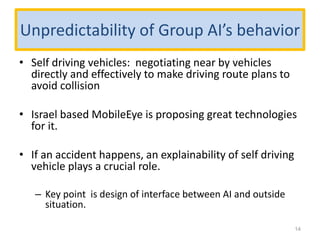

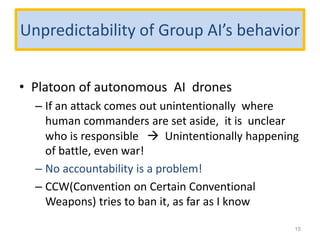

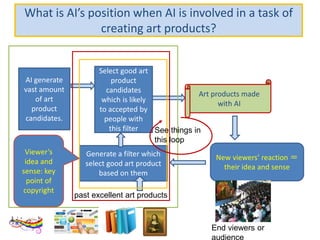

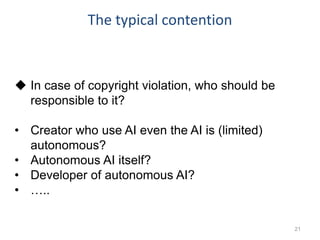

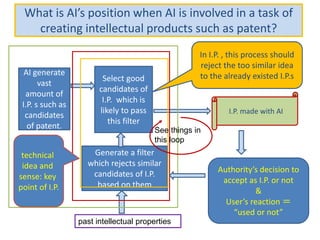

The document discusses AI ethics and accountability, emphasizing the need for transparency and clarity in AI decision-making. It addresses the challenges of assigning accountability in both single and group AI systems, particularly in contexts such as autonomous weapons and financial trading algorithms. The conclusion highlights the importance of licensing, compensation, and public trust in ensuring that AI systems comply with ethical standards and are accepted by ordinary citizens.