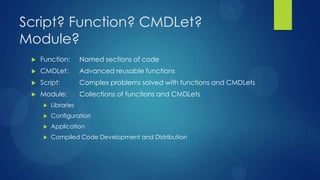

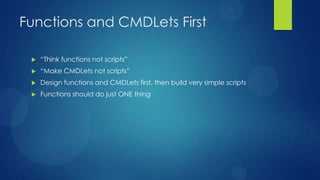

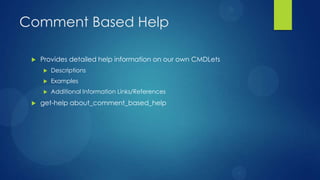

The document discusses best practices for writing effective PowerShell code, emphasizing function architecture, naming conventions, comments, and error handling. It advocates for coding guidelines to improve readability and maintainability and to reduce errors, urging developers to focus on creating functions and cmdlets rather than complex scripts. Additionally, it includes demos related to web functions and API notifications through pushover.net.

![Style Guides/conventions

Style guides or coding/programming conventions are a set of rules used when writing the source code for a computer program [Wikipedia]

Date back to the 1970s

Claimed to increase the readability and understandability of source code, and thus reduce the chance of programming errors

Claimed to reduce software maintenance costs

Software developers all work with guides/conventions

We have server naming conventions and account naming conventions, so why not scripting conventions?

One of the hardest things for any group to develop, agree upon, and stick to](https://image.slidesharecdn.com/powershell-140415055638-phpapp02/85/Advanced-PowerShell-Automation-3-320.jpg)