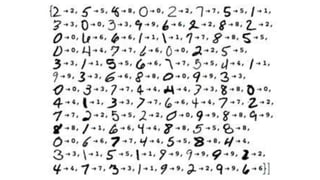

Machine learning is the field of study that gives computers the ability to learn without being explicitly programmed. Instead of following hand-written rules, a computer learns from large amounts of data and uses that experience to continuously improve its performance on a task. The document outlines early developments in machine learning from the 1950s to the 1990s, including neural networks, game playing, and data-driven approaches. It also discusses modern machine learning, including deep learning, computer vision, and reinforcement learning, and how computers can now learn continuously from massive datasets to perform human-like tasks.

![“[Machine Learning is the] field of study that gives computers the ability to learn without being explicitly programmed.” – Arthur Samuel (1959)](https://image.slidesharecdn.com/wkuagpbftxiqm0g3xeg5-signature-70e700bcae21231eb6ecf36165941028d72d6da7f9535a92cff9fc3ef366ddbc-poli-161012091017/85/48-Machine-learning-7-320.jpg)