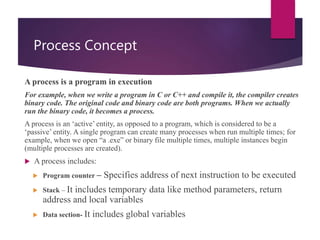

This document provides an overview of process management in operating systems: what a process is and the states it moves through, the process control block (PCB) that the operating system keeps for each process, and the main types of scheduling (long-term and short-term). It also discusses inter-process communication (IPC) through message passing and shared memory, and distinguishes cooperating processes from independent ones. Finally, it covers classic coordination problems such as the producer-consumer problem, along with the synchronization mechanisms used to manage shared data among concurrent processes.
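As a minimal sketch of the producer-consumer problem under stated assumptions, the Python below implements a fixed-capacity buffer protected by one lock and two condition variables. The producer blocks while the buffer is full and the consumer blocks while it is empty. Threads stand in for processes here because threads share memory by default. The names `BoundedBuffer` and `run_demo`, and the default capacity, are hypothetical choices for illustration only.

```python
import threading
from collections import deque


class BoundedBuffer:
    """Hypothetical fixed-capacity buffer shared by producer and consumer threads."""

    def __init__(self, capacity: int) -> None:
        self.capacity = capacity
        self.items: deque = deque()
        self.lock = threading.Lock()
        # Two condition variables share one lock: producers wait on not_full,
        # consumers wait on not_empty.
        self.not_full = threading.Condition(self.lock)
        self.not_empty = threading.Condition(self.lock)

    def put(self, item) -> None:
        with self.not_full:
            # Re-check in a loop in case of spurious wakeups.
            while len(self.items) >= self.capacity:
                self.not_full.wait()
            self.items.append(item)
            self.not_empty.notify()  # wake a waiting consumer, if any

    def get(self):
        with self.not_empty:
            while not self.items:
                self.not_empty.wait()
            item = self.items.popleft()
            self.not_full.notify()  # wake a waiting producer, if any
            return item


def run_demo(n_items: int = 10, capacity: int = 3) -> list:
    """Run one producer and one consumer; return items in the order consumed."""
    buf = BoundedBuffer(capacity)
    consumed = []

    def producer() -> None:
        for i in range(n_items):
            buf.put(i)

    def consumer() -> None:
        for _ in range(n_items):
            consumed.append(buf.get())

    threads = [threading.Thread(target=producer), threading.Thread(target=consumer)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return consumed


if __name__ == "__main__":
    # Prints [0, 1, 2, 3, 4, 5, 6, 7, 8, 9]: FIFO order holds with one producer and one consumer.
    print(run_demo())
```

With a single producer and a single consumer the items come out in the order they were produced. The `while` loops around `wait()` matter once several producers or consumers compete, because a woken thread must re-check the buffer state before proceeding.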