This document discusses the basics of intensity transformations and spatial filtering of digital images. It covers the following key points:

- Intensity transformations map input pixel intensities to output intensities using an operator T. Common transformations include log, power-law, and piecewise-linear functions.

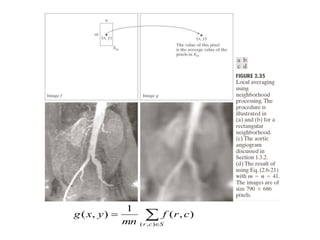

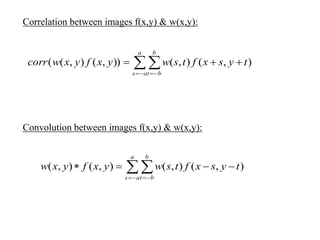

- Spatial filters operate on neighborhoods of pixels. Linear filters compute a weighted sum over the neighborhood (averaging or correlation), while non-linear filters rely on ordering the neighborhood's pixel values, as the median filter does.

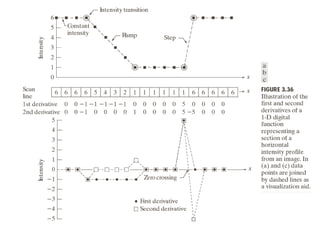

- Basic filters include smoothing to reduce noise, sharpening to enhance edges using the Laplacian or unsharp masking, and gradient filters for edge detection.

- Fuzzy set theory can be applied to intensity transformations by defining membership functions for concepts like dark/bright. It can also be used for spatial filtering by defining

![ Basics of Intensity Transformations and Spatial Filtering

g(x,y) = T [f(x,y)]

where f(x,y) is the input image, g(x,y) is the output image, and T is

an operator on f defined over a neighborhood of point (x,y)](https://image.slidesharecdn.com/3intensitytransformationsspatialfilteringslides-201121054856/85/3-intensity-transformations-and-spatial-filtering-slides-1-320.jpg)
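
The relation g(x,y) = T[f(x,y)] can be sketched in a few lines of plain Python. This is a minimal illustration, not the slides' own code: the function name `apply_neighborhood_operator`, the `radius` parameter, and the zero padding at the image border are all assumptions made for the example. A radius of 0 reduces T to a point-wise intensity transformation, while a radius of 1 gives a 3×3 spatial filter.

```python
def apply_neighborhood_operator(f, T, radius=1):
    """Compute g(x, y) = T[neighborhood of f centered on (x, y)].

    f is a list of rows. Pixels outside the image are treated as 0
    (zero padding, an assumption of this sketch).
    """
    rows, cols = len(f), len(f[0])
    g = [[0] * cols for _ in range(rows)]
    for x in range(rows):
        for y in range(cols):
            neighborhood = [
                f[i][j] if 0 <= i < rows and 0 <= j < cols else 0
                for i in range(x - radius, x + radius + 1)
                for j in range(y - radius, y + radius + 1)
            ]
            g[x][y] = T(neighborhood)
    return g


def mean(values):
    # Linear operator: equal-weight average of the neighborhood.
    return sum(values) / len(values)


def median(values):
    # Non-linear operator: middle value after ordering the neighborhood.
    ordered = sorted(values)
    return ordered[len(ordered) // 2]


image = [
    [10, 10, 10],
    [10, 100, 10],
    [10, 10, 10],
]

smoothed = apply_neighborhood_operator(image, mean)        # 3x3 averaging
despeckled = apply_neighborhood_operator(image, median)    # 3x3 median
identity = apply_neighborhood_operator(image, lambda n: n[0], radius=0)
```

At the center pixel, the averaging operator spreads the outlier value 100 across the neighborhood, whereas the median operator discards it entirely.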

![BASIC INTENSITY TRANSFORMATION FUNCTIONS:

Image negatives

The negative of an image with intensity levels in the range [0, L-1]

is obtained by using the negative transformation, given by the expression:

s = L-1-r

Reversing the intensity levels of an image in this manner produces

the equivalent of a photographic negative.

Log Transformations

The general form of log transformation is

s = c log(1+r)

where c is a constant and it is assumed that r ≥ 0.

This transformation maps a narrow range of low intensity values in the input

into a wider range of output values.

nth root and nth power transformations.](https://image.slidesharecdn.com/3intensitytransformationsspatialfilteringslides-201121054856/85/3-intensity-transformations-and-spatial-filtering-slides-5-320.jpg)
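
The negative and log transformations above are point operations, so each can be written as a function of a single intensity r. The sketch below assumes an 8-bit image (L = 256) and picks c = (L-1)/log(L) so that the brightest input maps to the brightest output; the slide leaves c unspecified, so that choice is only one reasonable option.

```python
import math

L = 256  # number of intensity levels; 8-bit images are assumed here


def negative(r, levels=L):
    # s = L - 1 - r
    return levels - 1 - r


def log_transform(r, levels=L):
    # s = c * log(1 + r), with c chosen (an assumption) so that
    # r = L - 1 maps to s = L - 1.
    c = (levels - 1) / math.log(levels)
    return c * math.log(1 + r)


# A dark input range is stretched, while a bright range is compressed.
dark_span = log_transform(20) - log_transform(0)
bright_span = log_transform(255) - log_transform(235)
```

Comparing `dark_span` with `bright_span` shows the behavior described on the slide: the same 20-level input interval occupies far more of the output range when it lies at the dark end.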

![Histogram:

• The histogram of a digital image with intensity levels in the range

[0, L-1] is a discrete function h(r_k) = n_k, where r_k is the kth intensity

value and n_k is the number of pixels in the image with intensity r_k.

• The normalized histogram is given by

p(r_k) = n_k / MN, for k = 0, 1, 2, …, L-1, where M and N are the row and column dimensions of the image.

• p(r_k) is an estimate of the probability of occurrence of intensity

level r_k in an image.

• The sum of all components of a normalized histogram is equal to 1](https://image.slidesharecdn.com/3intensitytransformationsspatialfilteringslides-201121054856/85/3-intensity-transformations-and-spatial-filtering-slides-18-320.jpg)
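
As a small illustration of these definitions, the following sketch counts n_k for each intensity level and divides by MN. The function names and the tiny 2×3 test image with L = 4 levels are made up for the example.

```python
def histogram(image, levels):
    # h(r_k) = n_k: number of pixels with intensity r_k.
    h = [0] * levels
    for row in image:
        for r in row:
            h[r] += 1
    return h


def normalized_histogram(image, levels):
    # p(r_k) = n_k / (M * N), where the image has M rows and N columns.
    M, N = len(image), len(image[0])
    return [n_k / (M * N) for n_k in histogram(image, levels)]


image = [
    [0, 1, 1],
    [2, 1, 0],
]
h = histogram(image, levels=4)
p = normalized_histogram(image, levels=4)
```

Here `h` holds the raw counts per level and the entries of `p` sum to 1 (up to floating-point rounding), matching the last bullet on the slide.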

![Histogram Equalization:

Consider for a moment continuous intensity values and let the

variable r denote the intensities of an image to be processed. We assume

that r is in the range [0, L-1], with r = 0 representing black and r = L-1 representing white. For r

satisfying these conditions, we focus attention on transformations of

the form

s = T(r) , 0 ≤ r ≤L-1

that produce an output intensity level s for every pixel in the input image

having intensity r. We assume that:

a) T(r) is a monotonically increasing function in the interval 0 ≤ r ≤ L-1

b) 0 ≤ T(r) ≤ L-1 for 0 ≤ r ≤ L-1](https://image.slidesharecdn.com/3intensitytransformationsspatialfilteringslides-201121054856/85/3-intensity-transformations-and-spatial-filtering-slides-20-320.jpg)
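
The slide states only the conditions on T(r). A common discrete choice that satisfies both is the scaled cumulative histogram, s_k = (L-1) · Σ_{j≤k} p(r_j), rounded to the nearest integer. The sketch below uses that standard form, which is not shown in this excerpt, and the helper name `equalization_map` is hypothetical.

```python
def equalization_map(image, levels):
    """Return a lookup table T with T[r] = round((L-1) * cumulative p(r)).

    This is the usual discrete histogram-equalization mapping, used
    here as an assumed example of a T(r) meeting conditions (a) and (b).
    """
    total = sum(len(row) for row in image)
    counts = [0] * levels
    for row in image:
        for r in row:
            counts[r] += 1

    mapping = []
    cumulative = 0
    for n_k in counts:
        cumulative += n_k
        mapping.append(round((levels - 1) * cumulative / total))
    return mapping


image = [
    [0, 0, 1, 1],
    [1, 2, 2, 3],
]
T = equalization_map(image, levels=8)
equalized = [[T[r] for r in row] for row in image]
```

In this example the input uses only levels 0 to 3 of an 8-level range, and the mapping spreads them across the full range while never decreasing, so both conditions hold.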

![PRINCIPLES OF FUZZY SET THEORY:

Let Z be a set of elements, with a generic element of Z denoted by z, i.e., Z = {z}. This set is

called the universe of discourse. A fuzzy set A in Z is characterized by a membership

function, μA(z), that associates with each element of Z a real number in the interval [0, 1].

The value of μA(z) at z represents the grade of membership of z in A.

With fuzzy sets, we say that all z for which μA(z) = 1 are full members of the set,

all z for which μA(z) = 0 are not members of the set, and

all z for which μA(z) is between 0 and 1 have partial membership in the set.

A = {(z, μA(z)) | z ∈ Z}](https://image.slidesharecdn.com/3intensitytransformationsspatialfilteringslides-201121054856/85/3-intensity-transformations-and-spatial-filtering-slides-50-320.jpg)
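
To make the definition concrete, here is a hedged sketch of a membership function for a fuzzy set "dark" over intensities 0 to 255. The breakpoints 50 and 150 are hypothetical values chosen for illustration; any function returning values in [0, 1] would serve.

```python
def mu_dark(z, full=50, zero=150):
    """Membership of intensity z in the fuzzy set 'dark'.

    Intensities up to `full` are full members, intensities from `zero`
    upward are non-members, and membership falls linearly in between.
    Both breakpoints are illustrative assumptions.
    """
    if z <= full:
        return 1.0
    if z >= zero:
        return 0.0
    return (zero - z) / (zero - full)


# The fuzzy set A as pairs (z, mu_A(z)), sampled every 50 levels.
A = [(z, mu_dark(z)) for z in range(0, 256, 50)]
```

An intensity halfway between the two breakpoints gets a membership grade of 0.5, which is the partial membership that an ordinary (crisp) set cannot express.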

![The problem-specific knowledge just explained can be formalized in the

form of the following fuzzy IF-THEN rules:

R1: IF the color is green, THEN the fruit is verdant

R2: IF the color is yellow, THEN the fruit is half-mature

R3: IF the color is red, THEN the fruit is mature

In this context, color is a linguistic variable and

a particular color is a linguistic value. A linguistic

value, z, is fuzzified by using a membership

function to map it to the interval [0, 1].](https://image.slidesharecdn.com/3intensitytransformationsspatialfilteringslides-201121054856/85/3-intensity-transformations-and-spatial-filtering-slides-53-320.jpg)
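
The three rules can be sketched by fuzzifying a measured color and reporting how strongly each rule fires. In this illustration the color is represented as a wavelength in nanometers, and the triangular membership functions and their breakpoints are hypothetical; the slides do not give numeric values here.

```python
def triangle(z, left, peak, right):
    # Triangular membership function: 0 outside (left, right), 1 at peak.
    if z <= left or z >= right:
        return 0.0
    if z <= peak:
        return (z - left) / (peak - left)
    return (right - z) / (right - peak)


# Membership functions for the linguistic values of 'color'.
# All wavelength breakpoints (in nm) are assumptions for this sketch.
COLOR_MEMBERSHIP = {
    "green": lambda z: triangle(z, 490, 530, 580),
    "yellow": lambda z: triangle(z, 540, 580, 620),
    "red": lambda z: triangle(z, 590, 650, 710),
}

# R1-R3: IF the color is <key>, THEN the fruit is <value>.
RULES = {"green": "verdant", "yellow": "half-mature", "red": "mature"}


def fire_rules(wavelength_nm):
    # Each rule fires with the membership grade of its antecedent.
    return {
        RULES[color]: mu(wavelength_nm)
        for color, mu in COLOR_MEMBERSHIP.items()
    }


strengths = fire_rules(560)
```

A color between green and yellow fires R1 and R2 partially and R3 not at all, so the fruit is judged partly verdant and partly half-mature. Combining these strengths into a single output (aggregation and defuzzification) is a further step not covered by this sketch.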