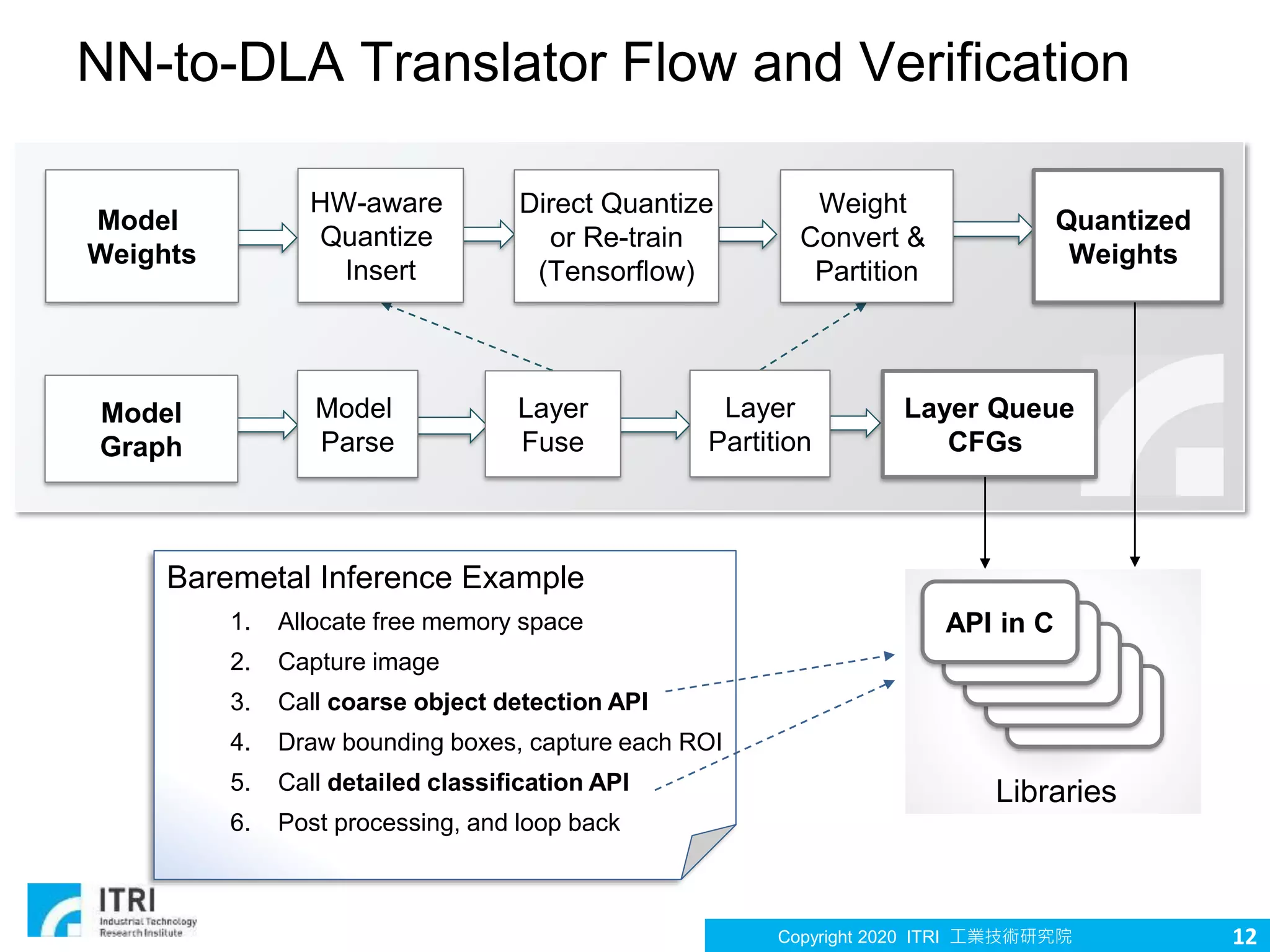

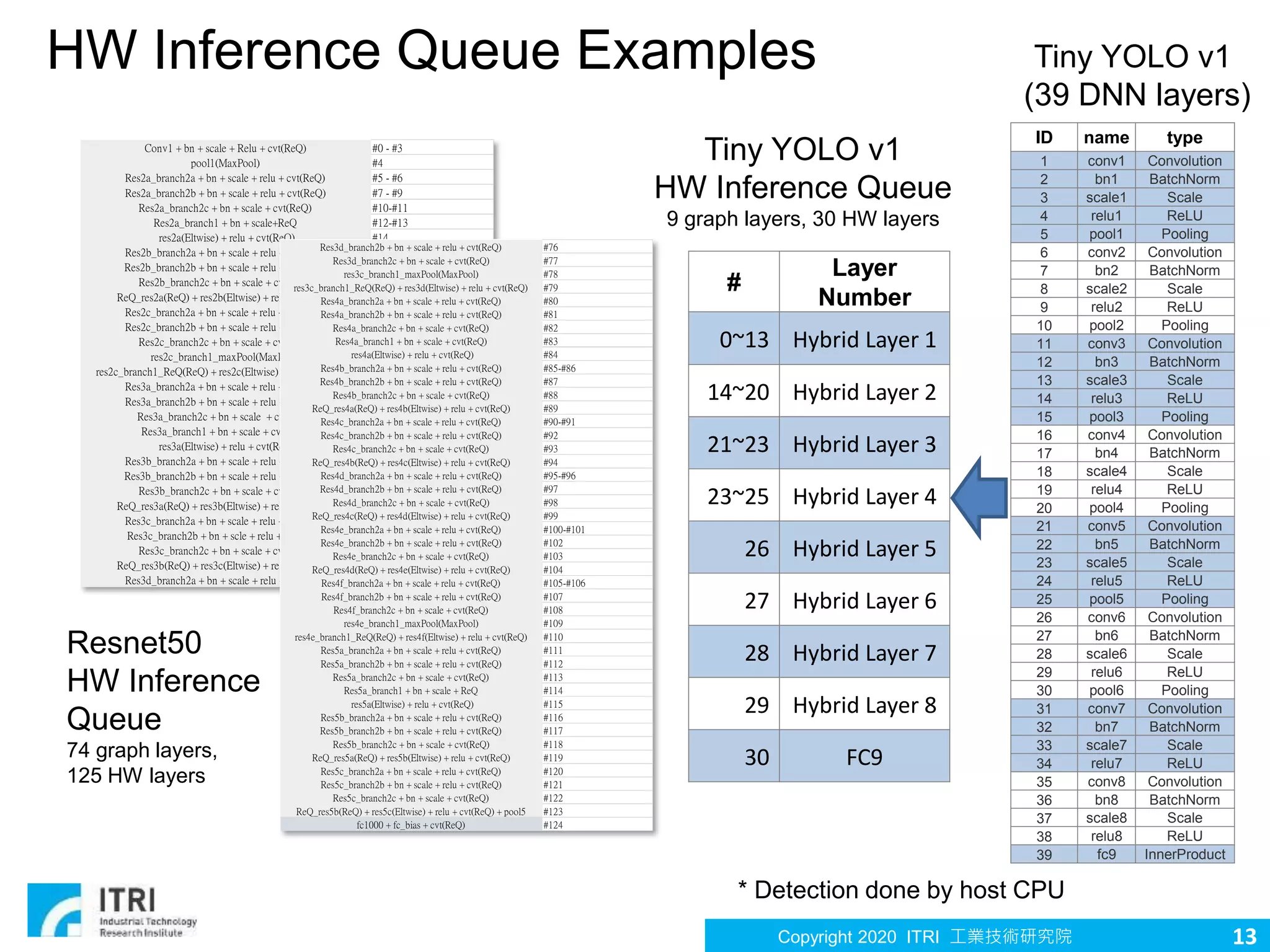

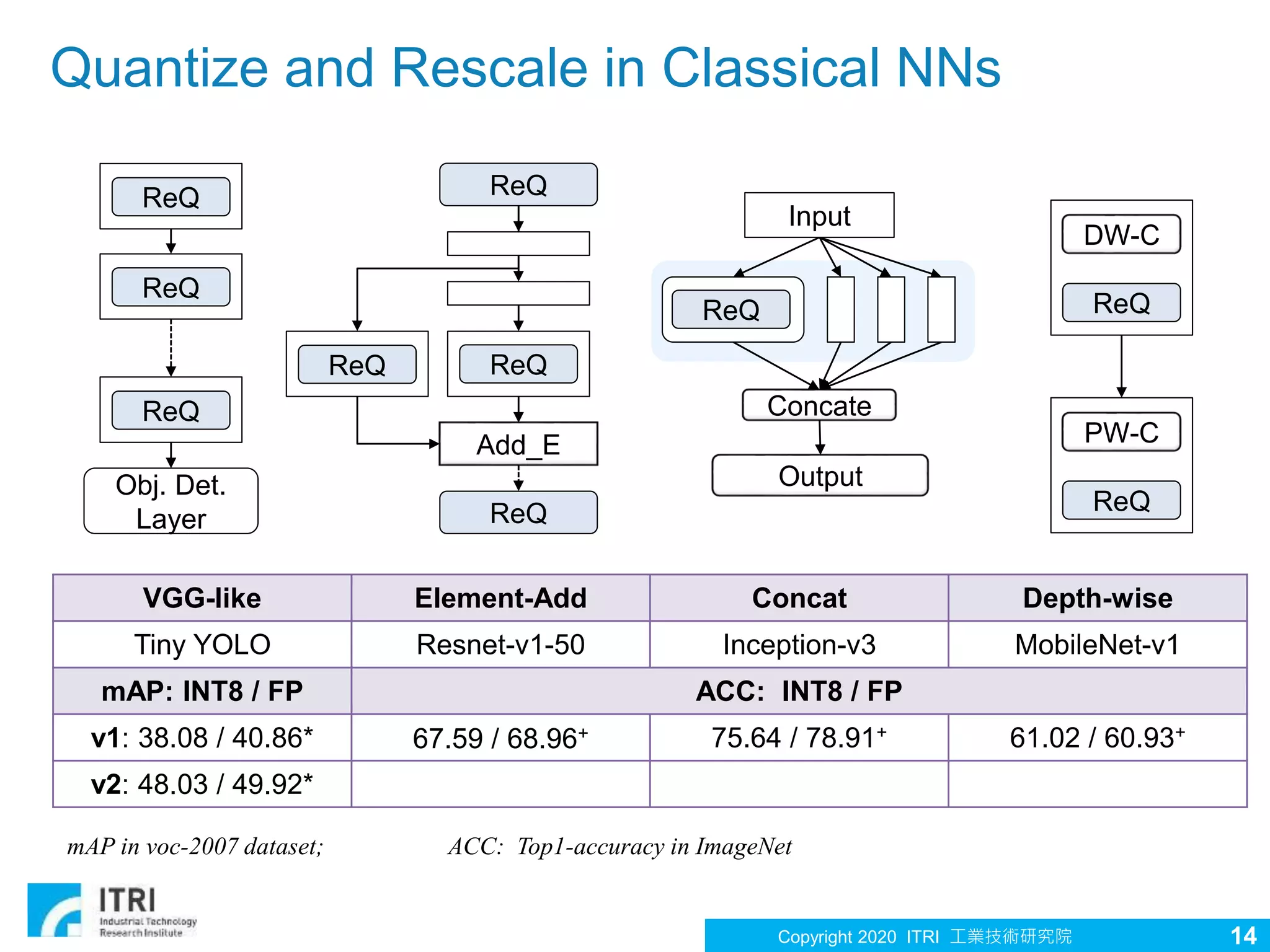

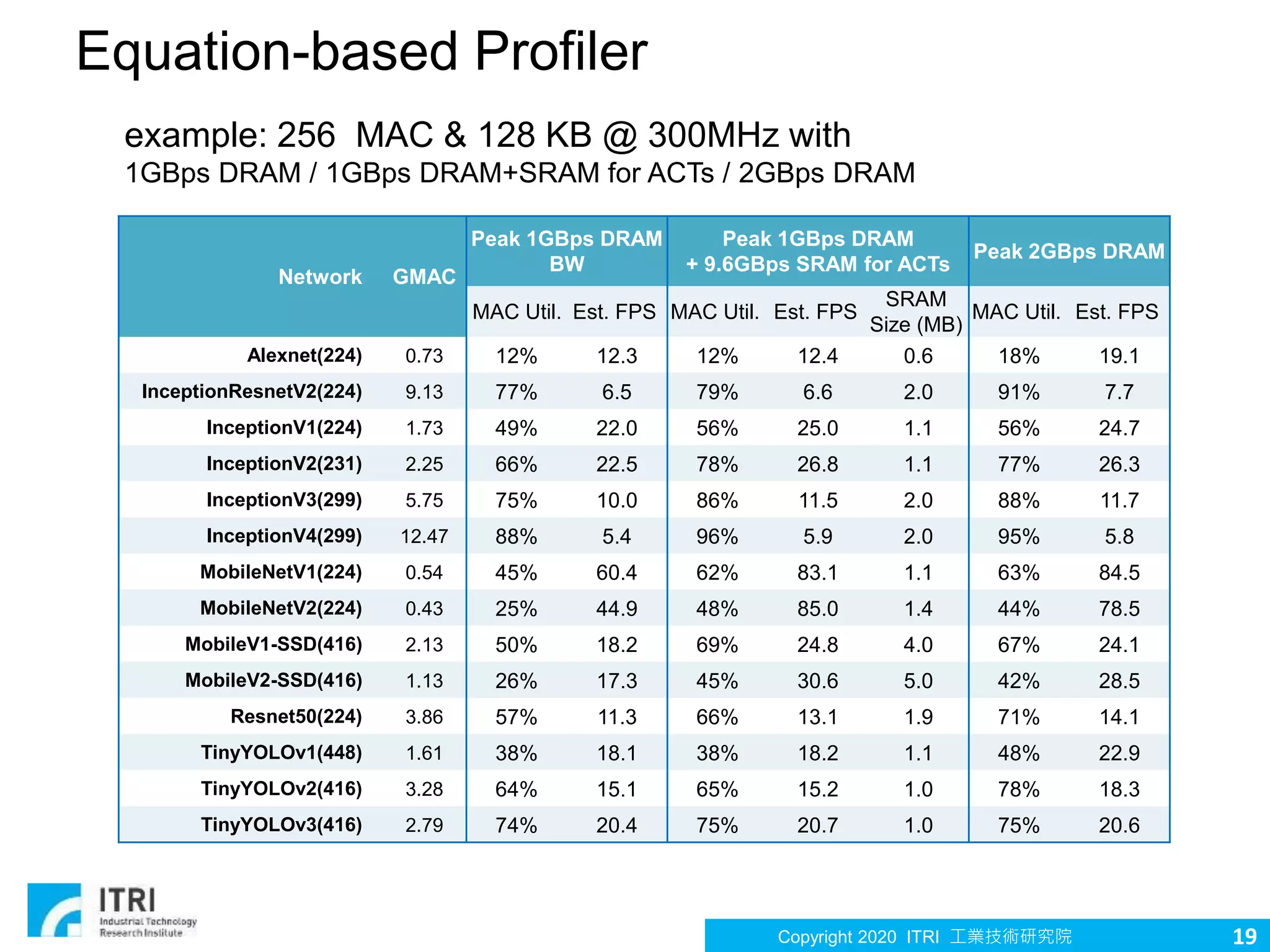

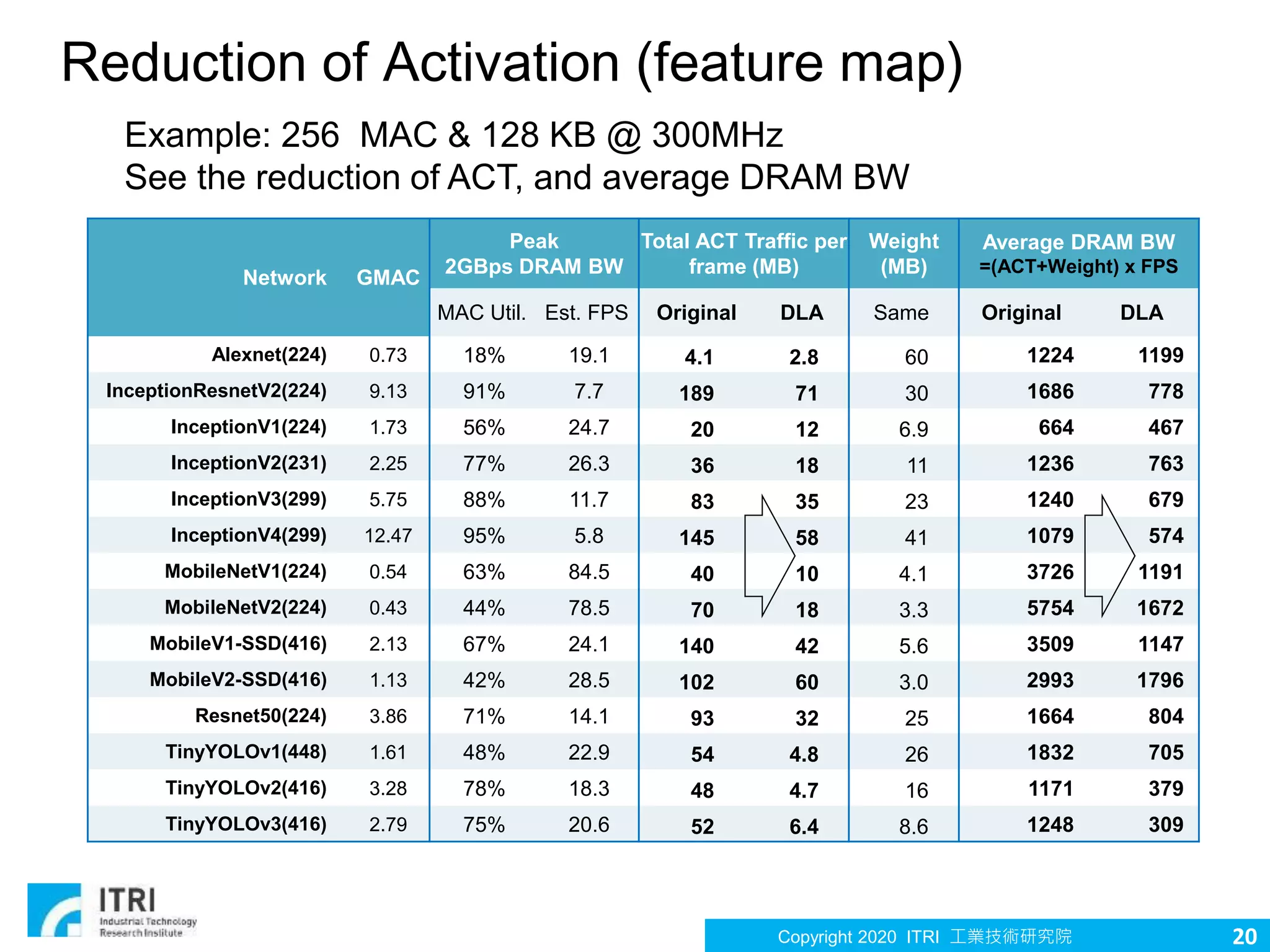

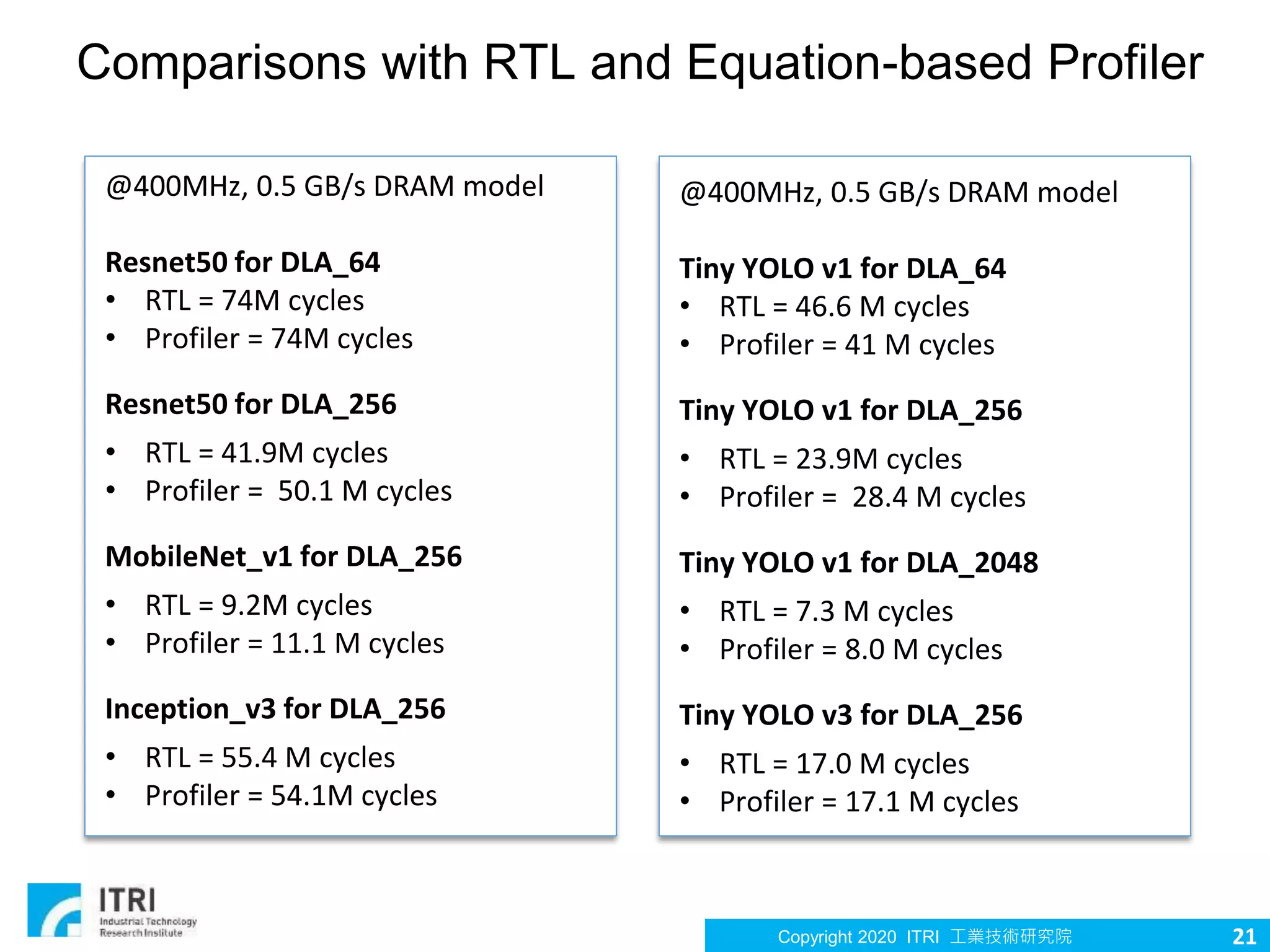

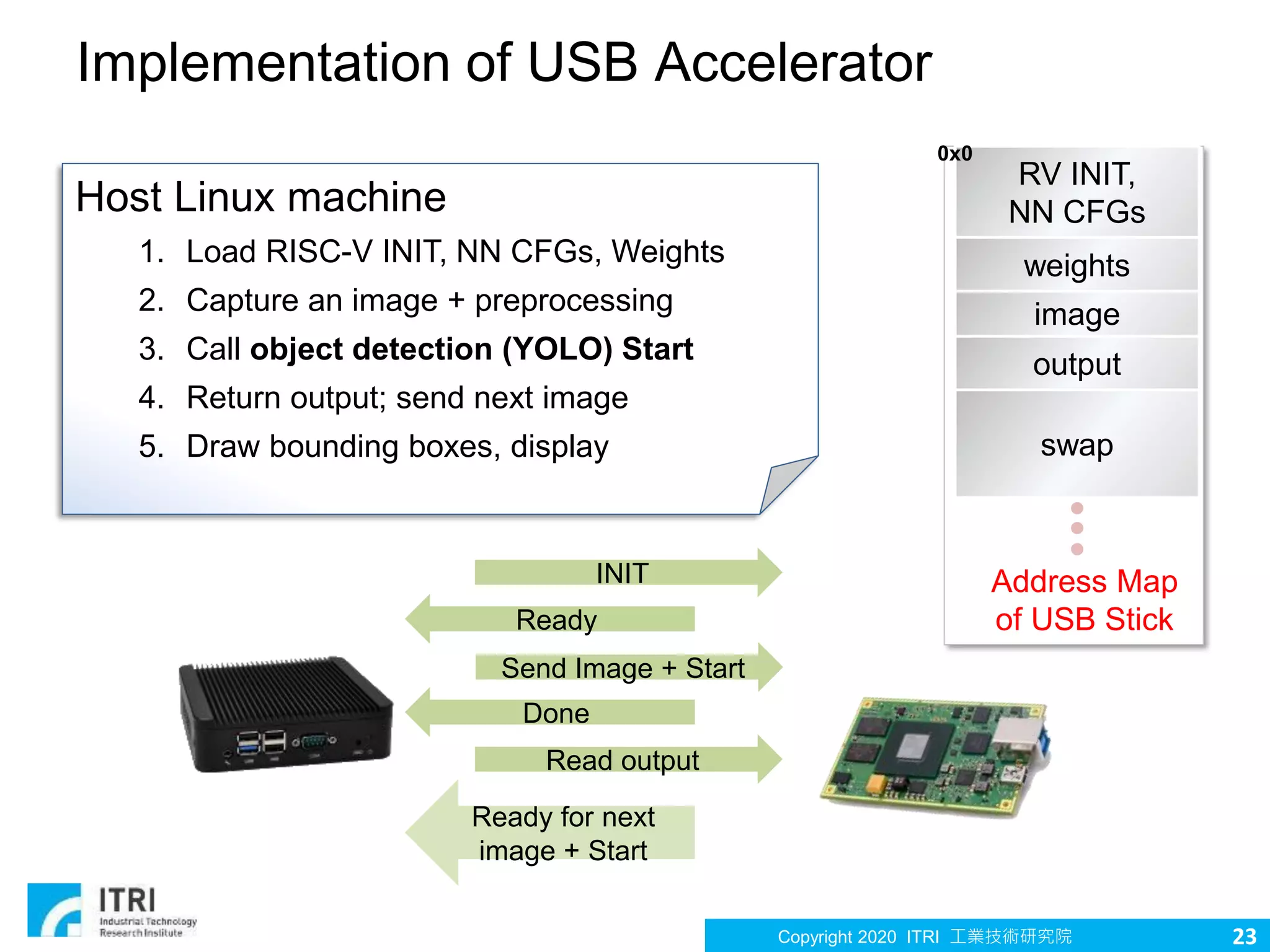

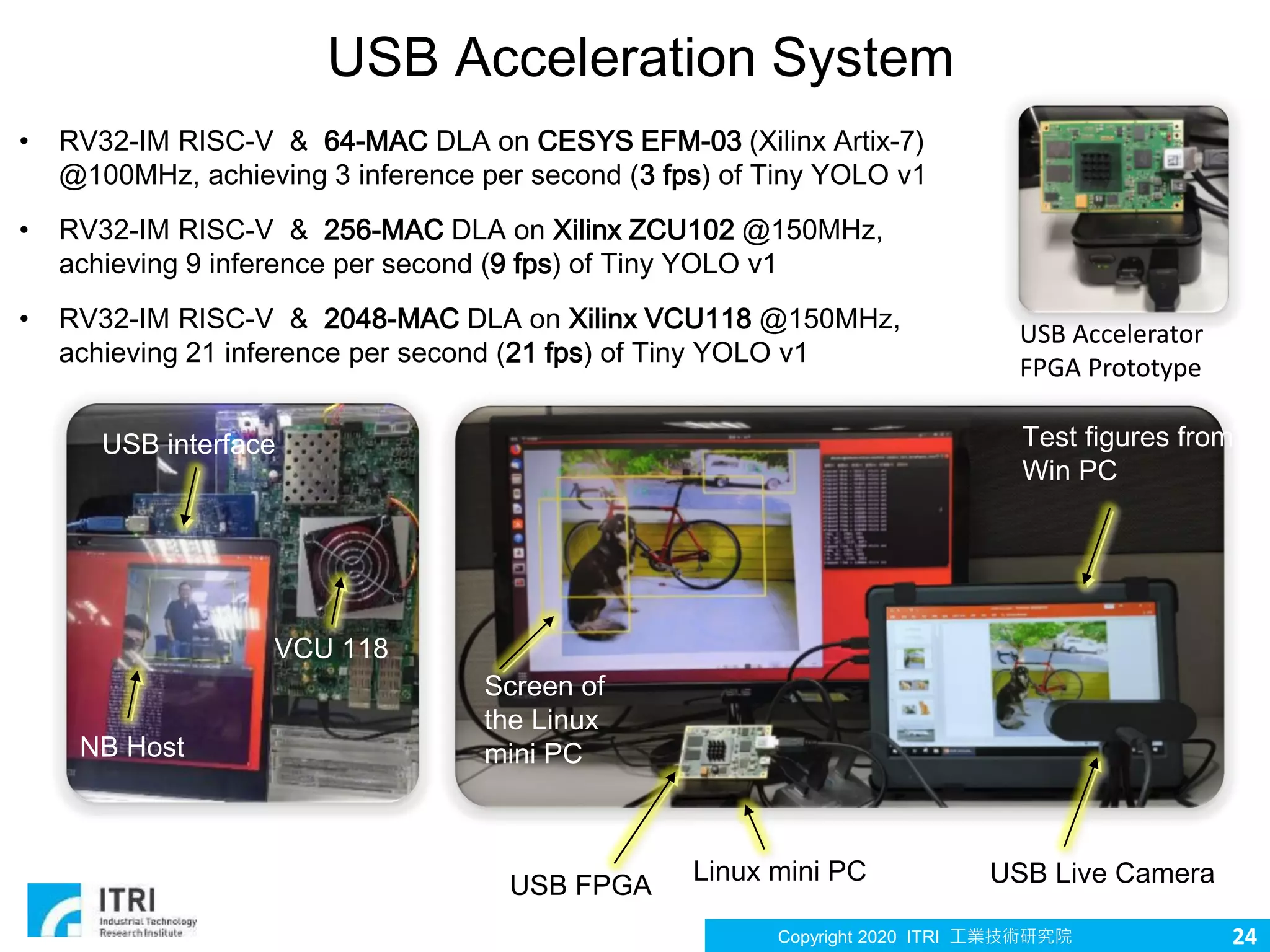

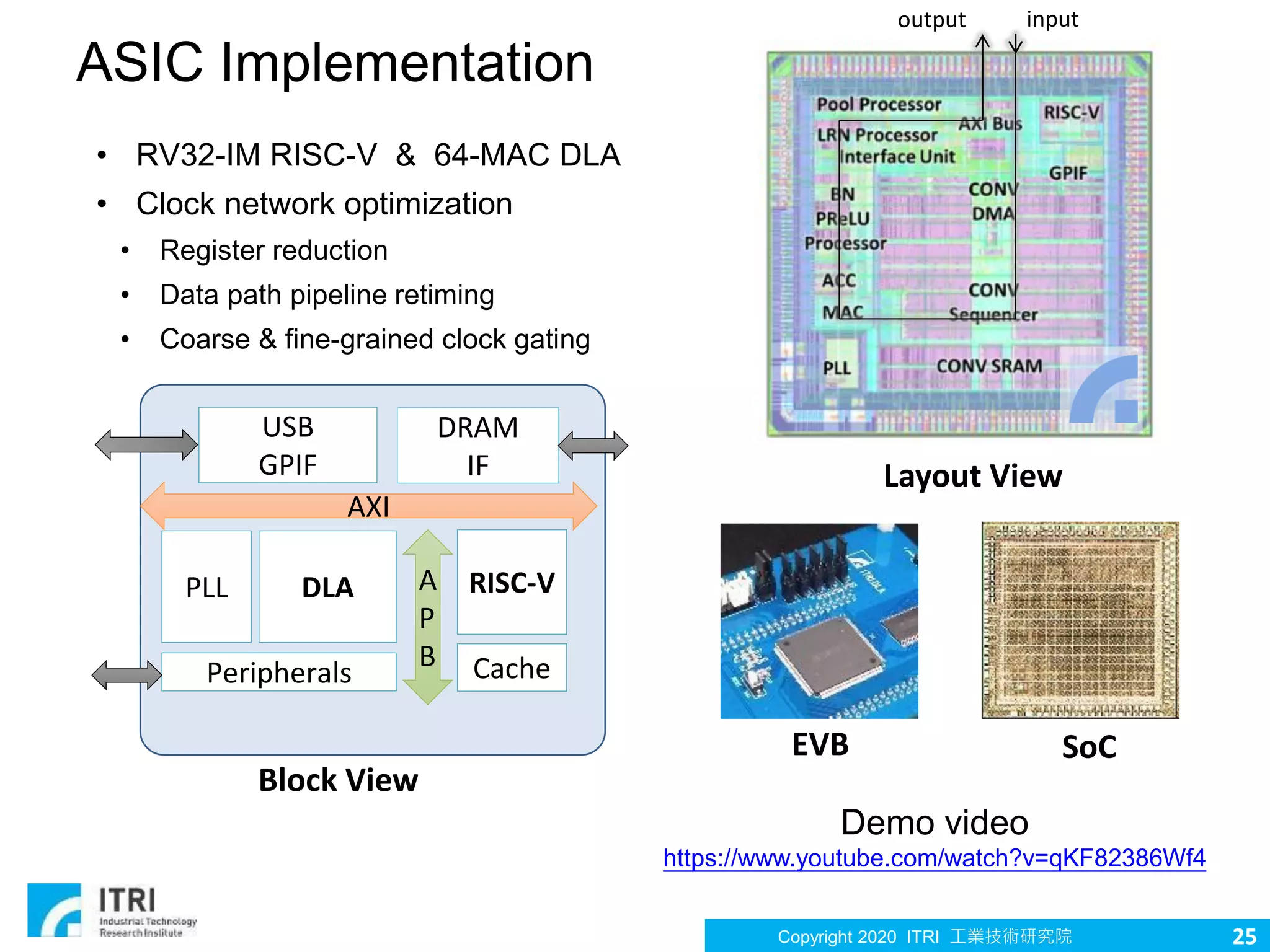

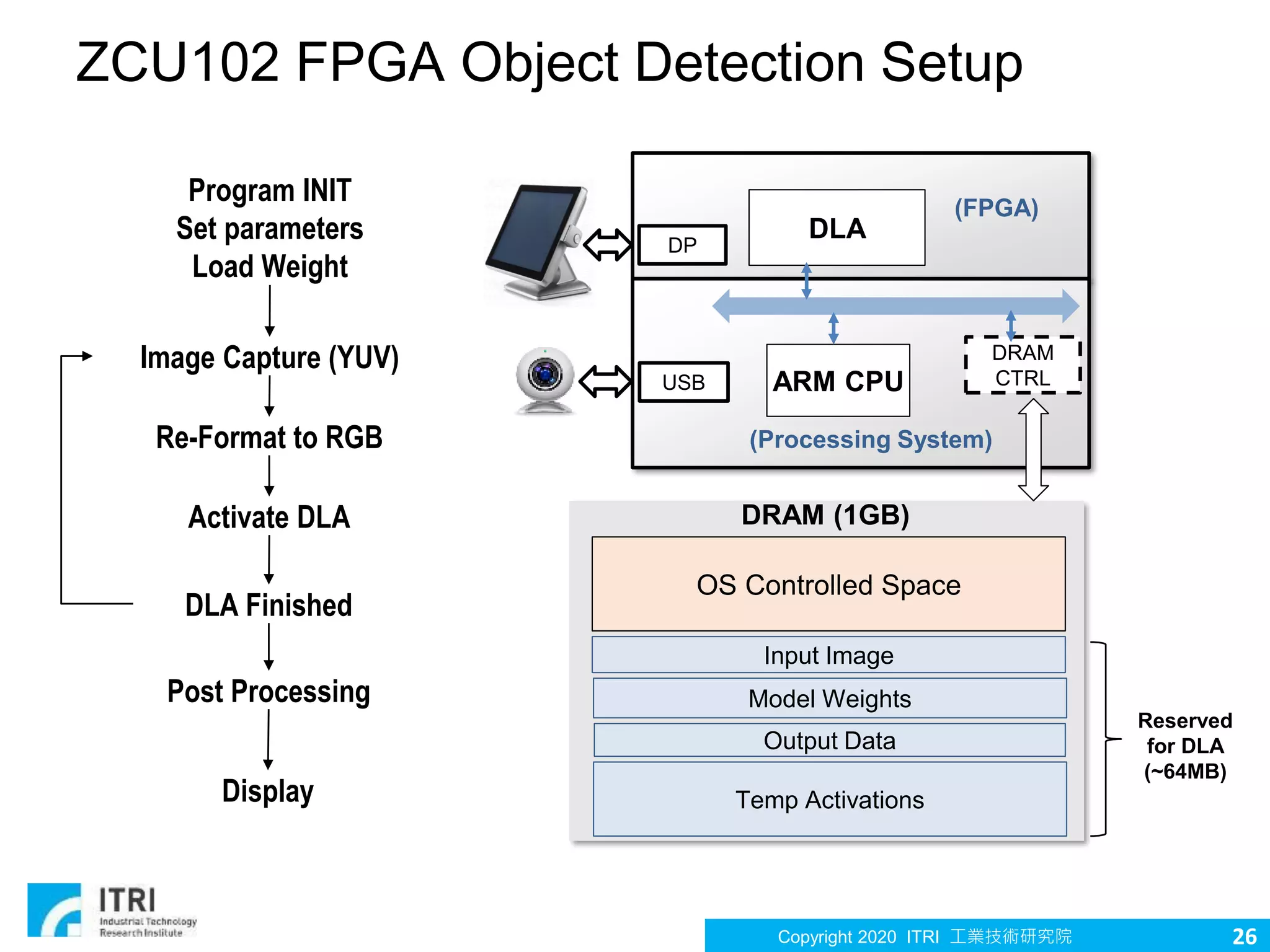

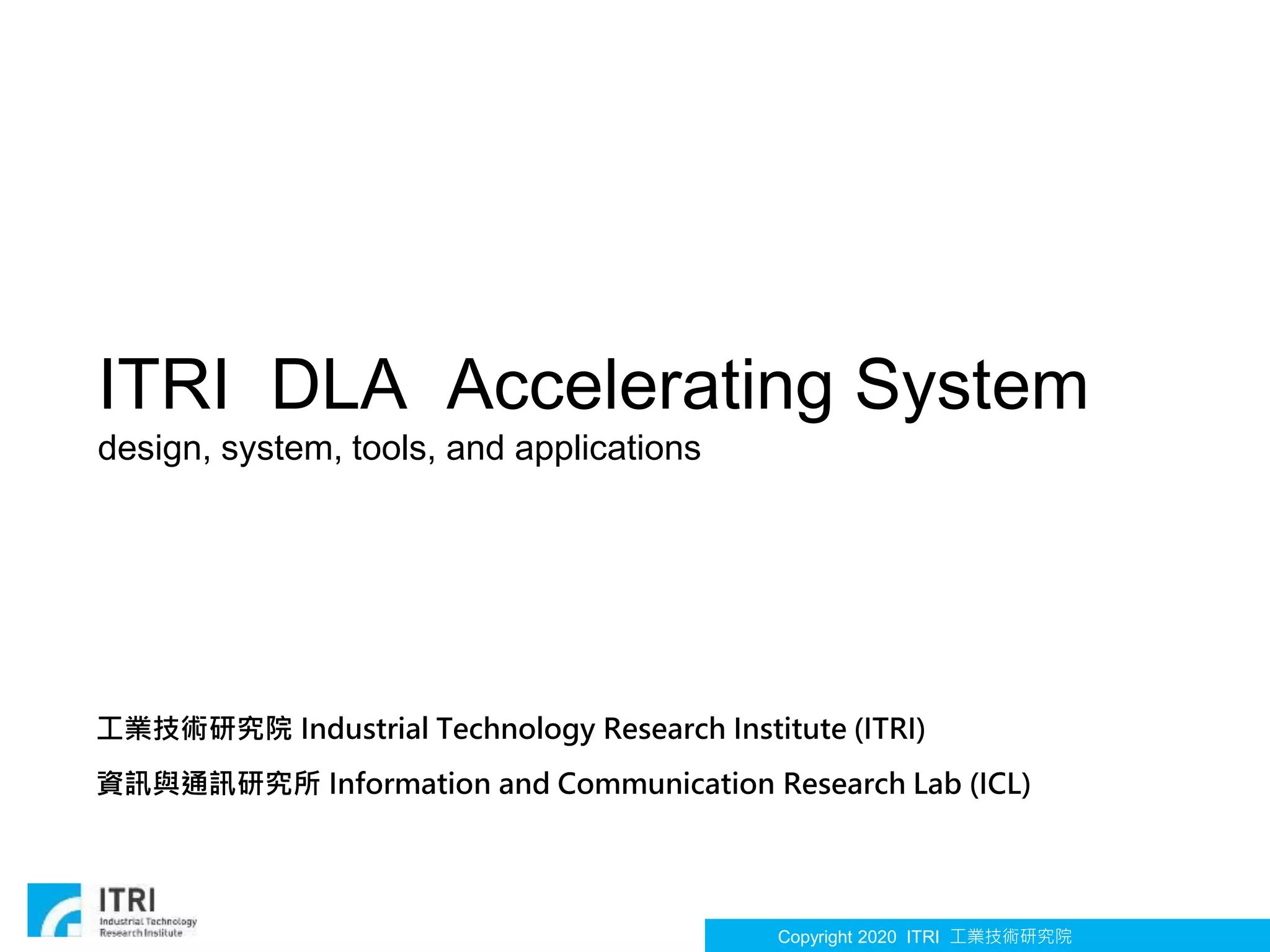

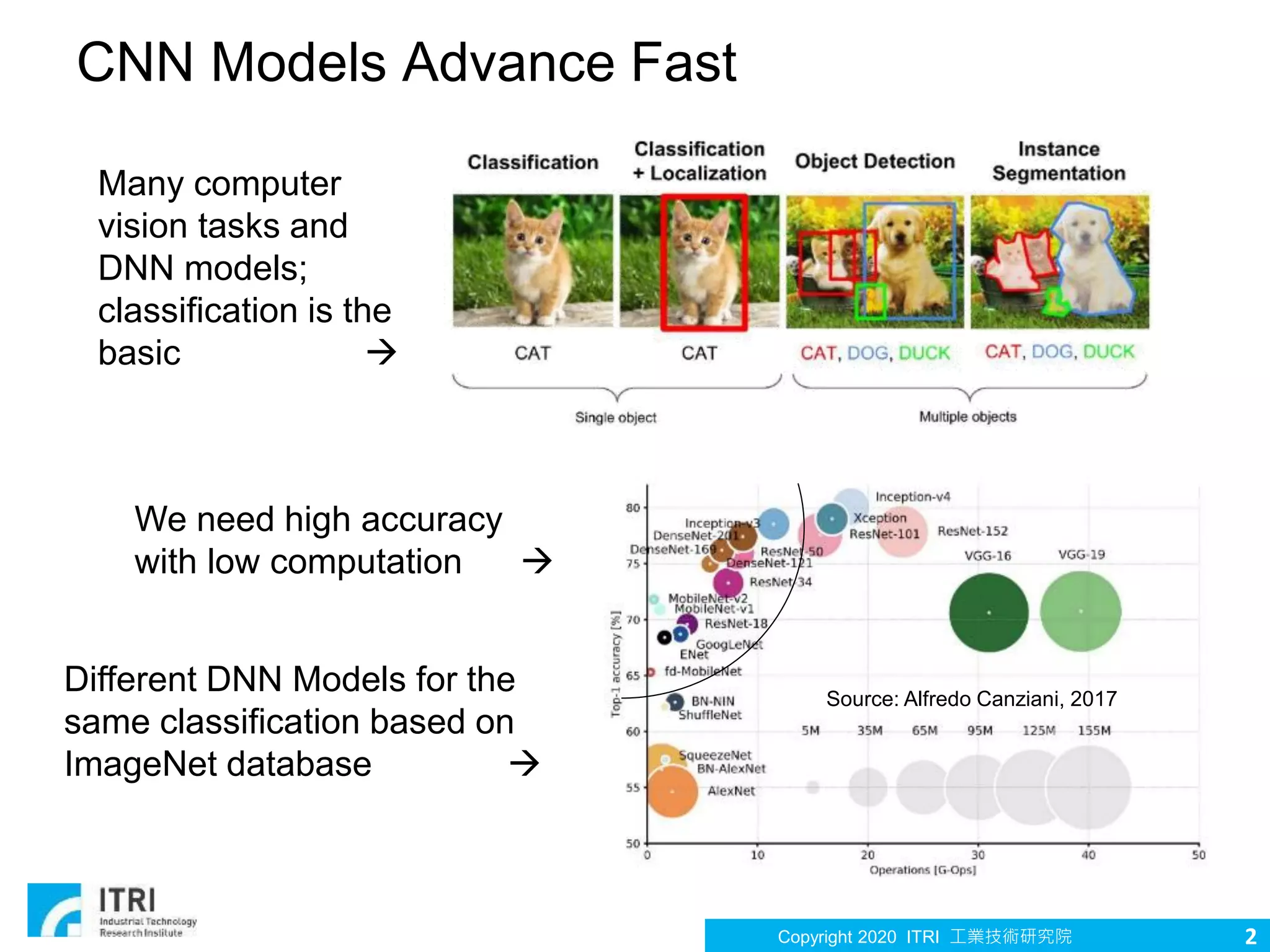

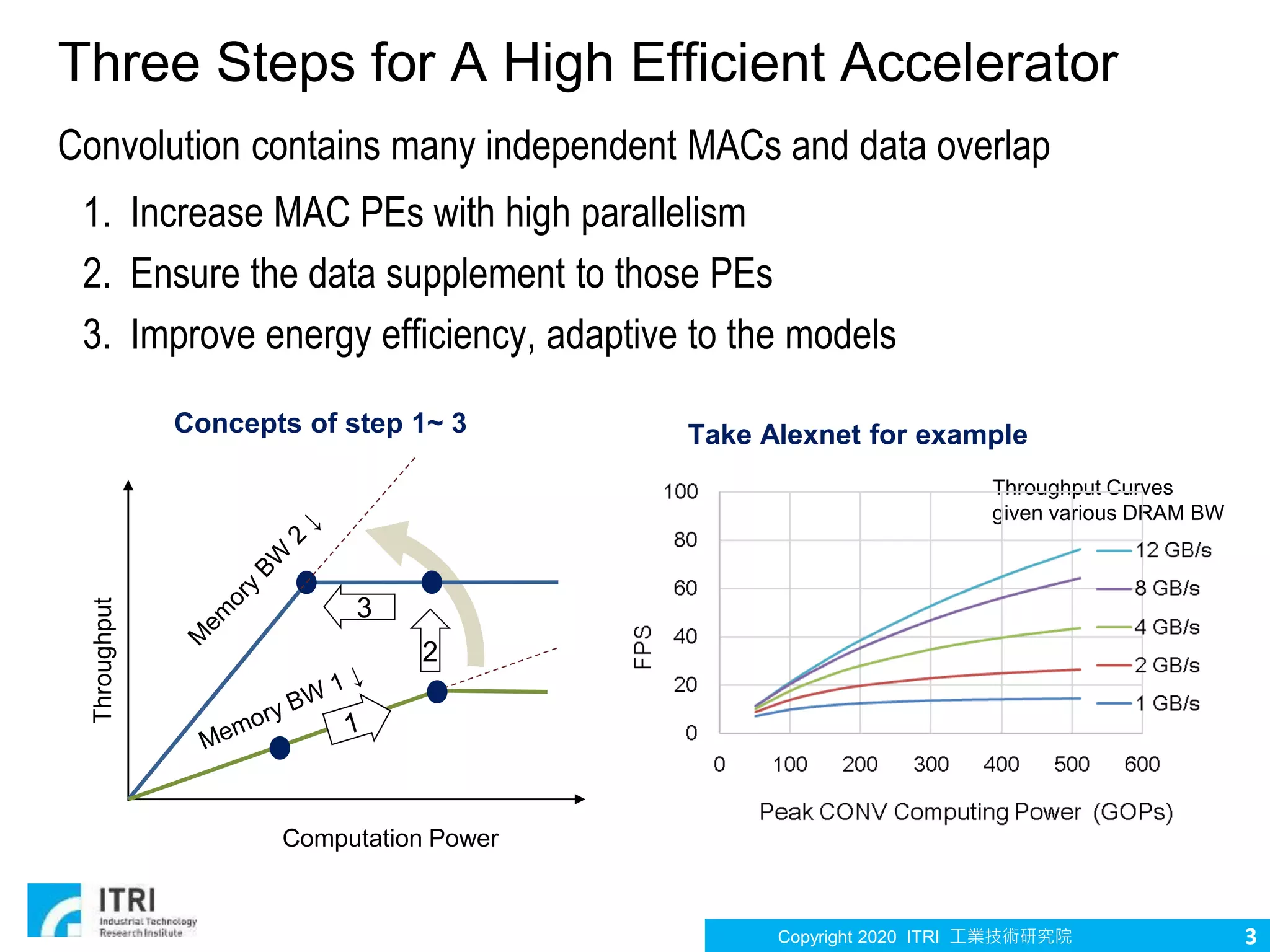

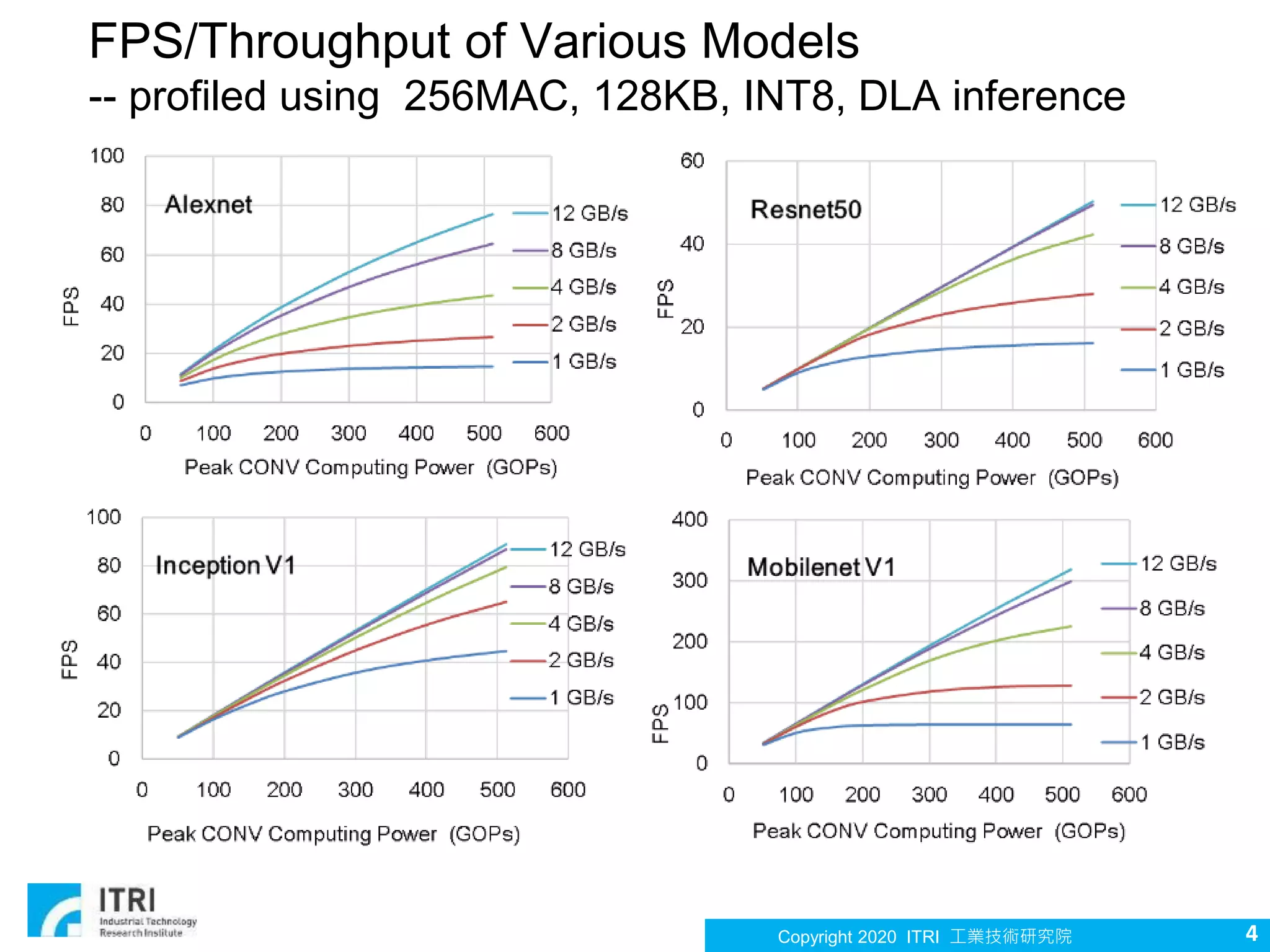

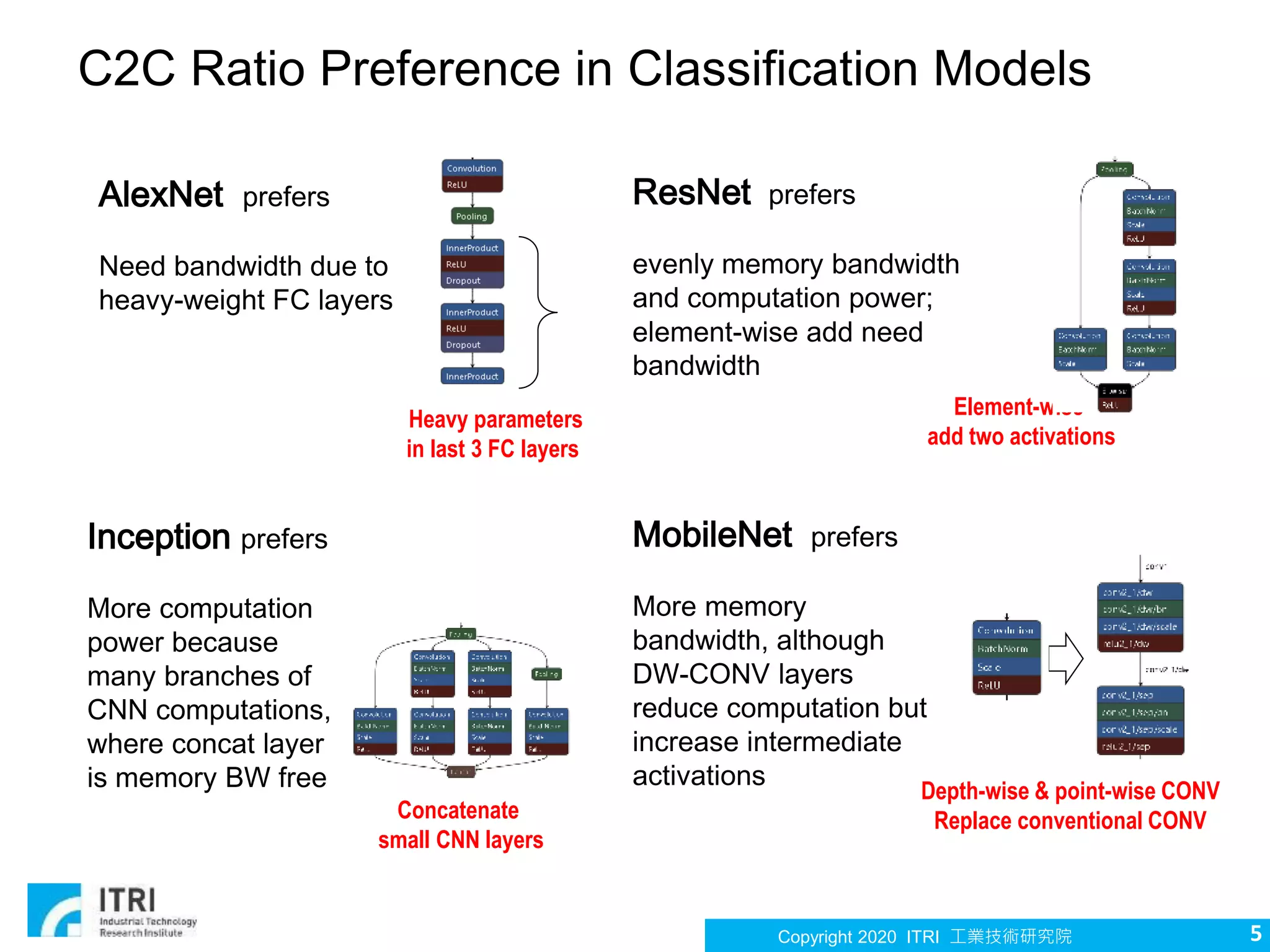

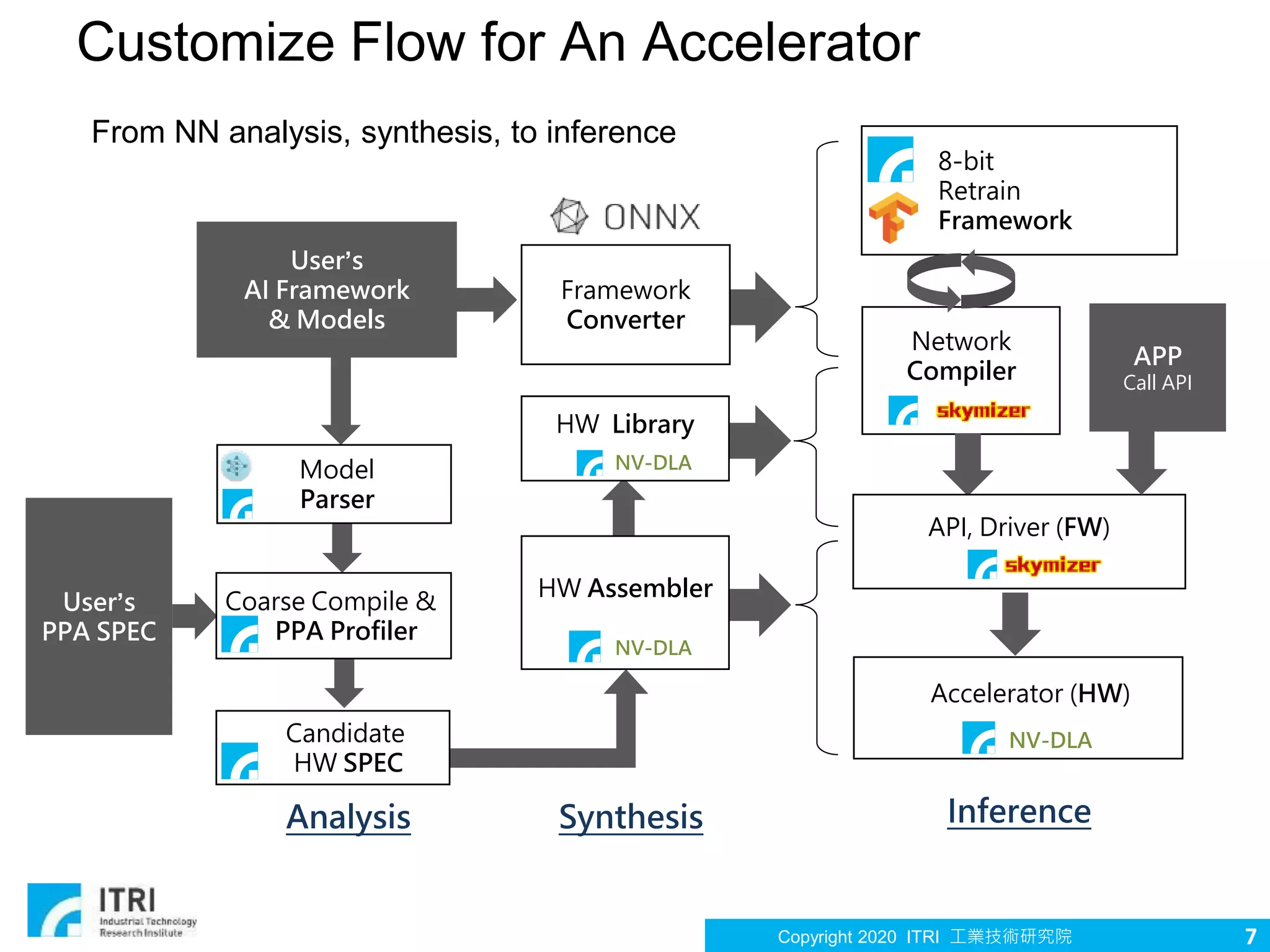

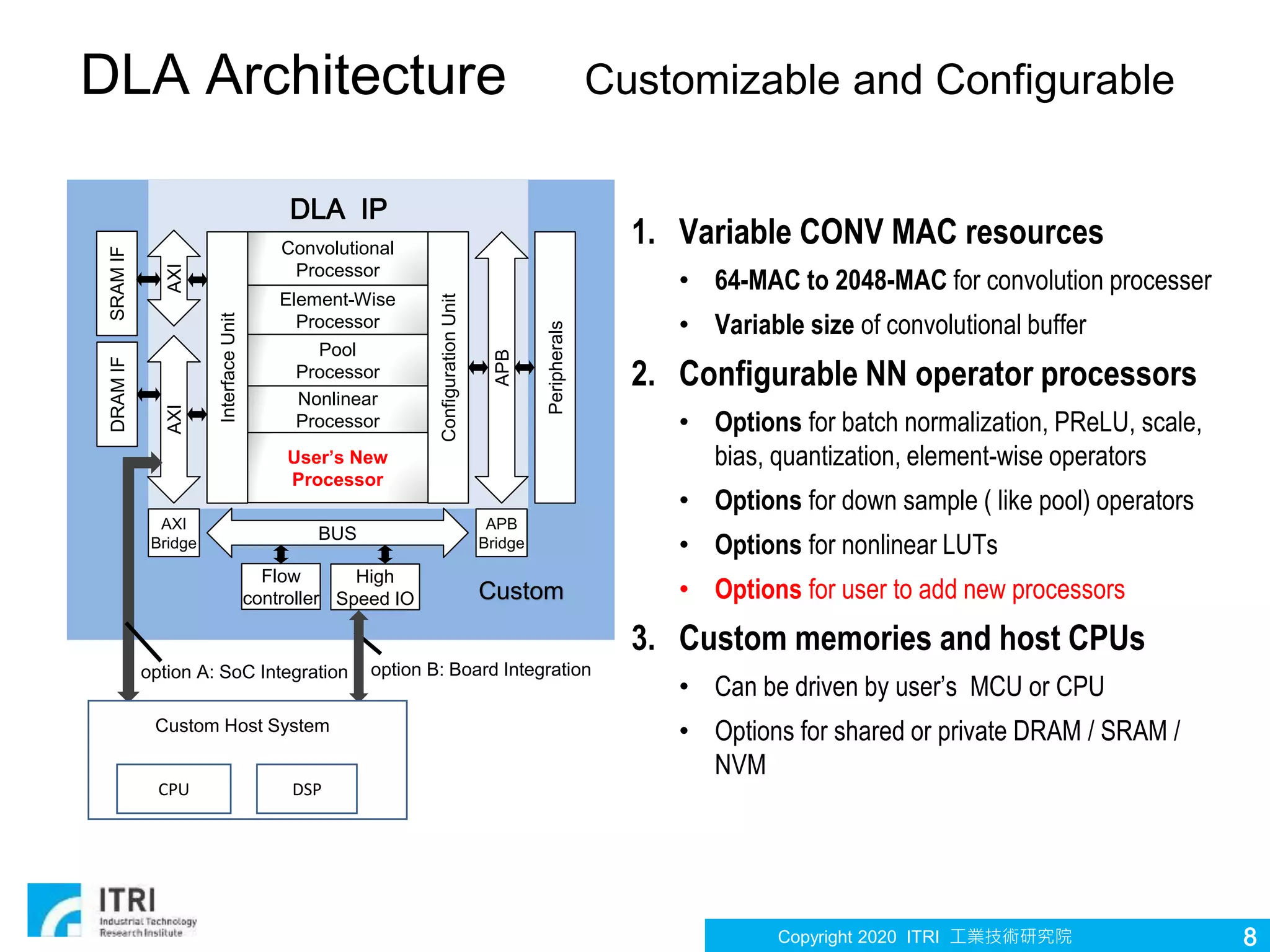

This deck presents the Deep Learning Accelerator (DLA) architecture from ITRI, emphasizing its customizability and efficiency for deep neural network inference. It describes the components, including convolution processors and memory configurations, that are tuned to optimize performance across different AI models. Parallelism, memory bandwidth, and energy efficiency are discussed as the main levers for accelerating computer vision workloads.

![Copyright 2020 ITRI 工業技術研究院 10

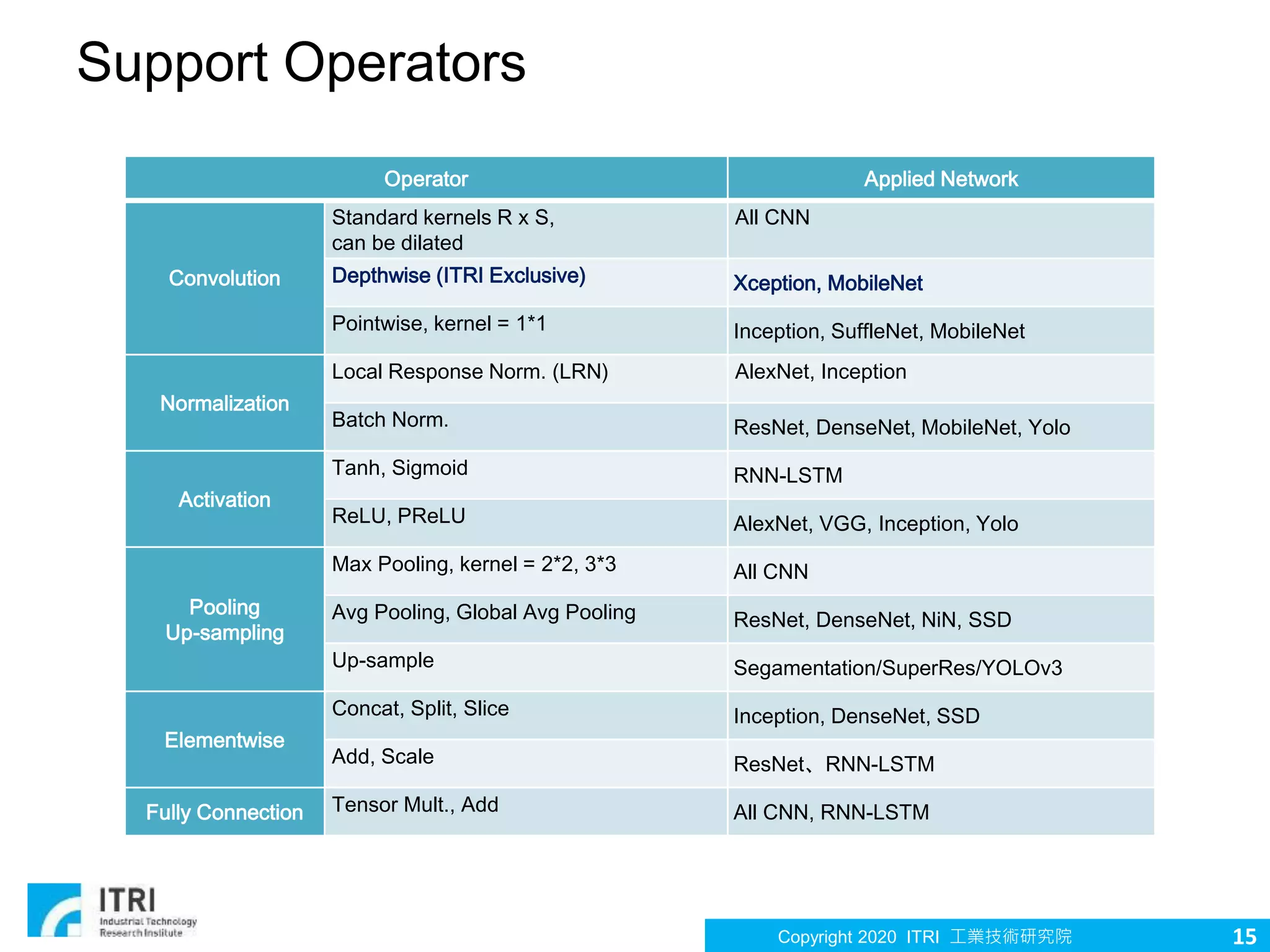

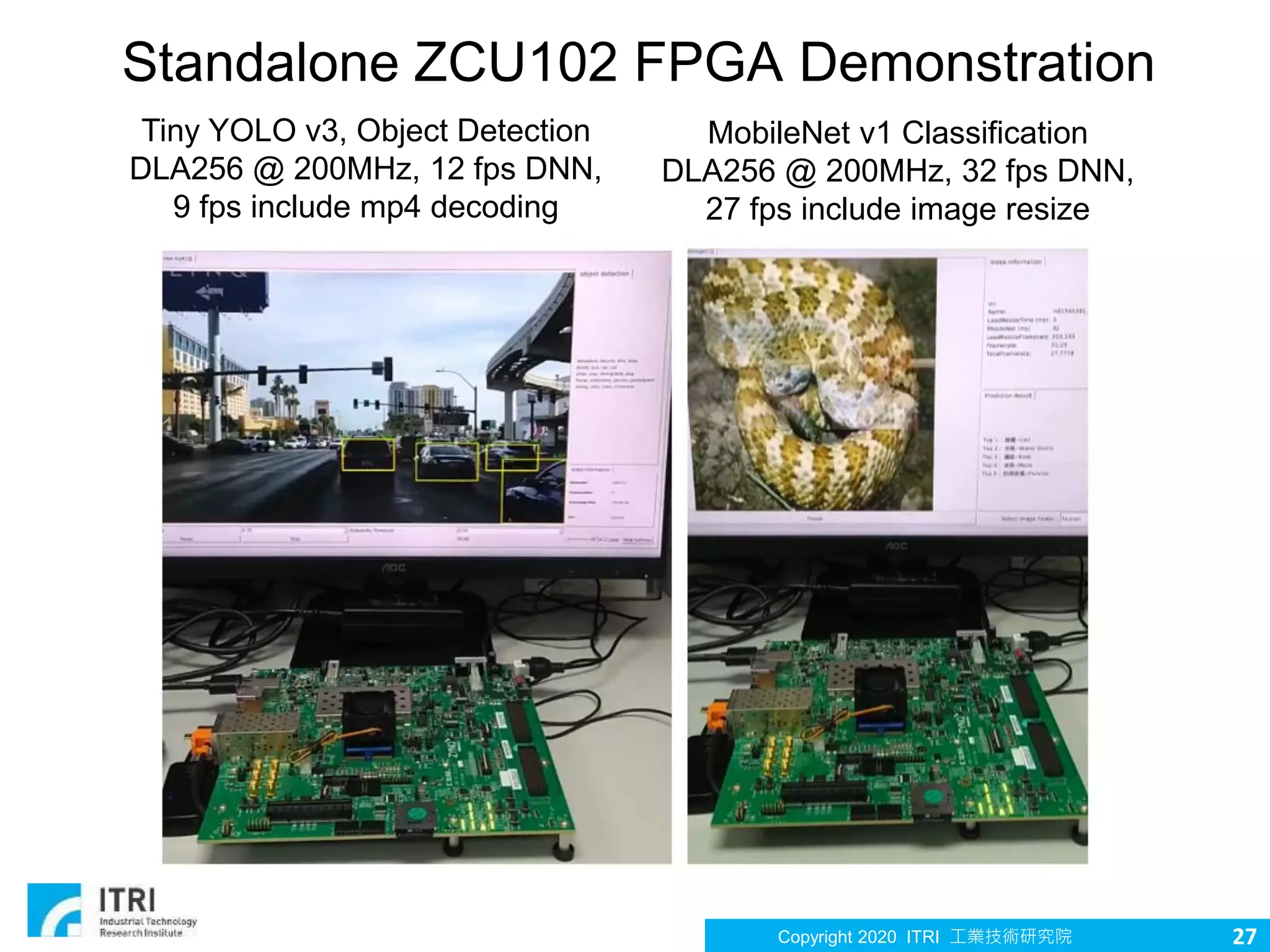

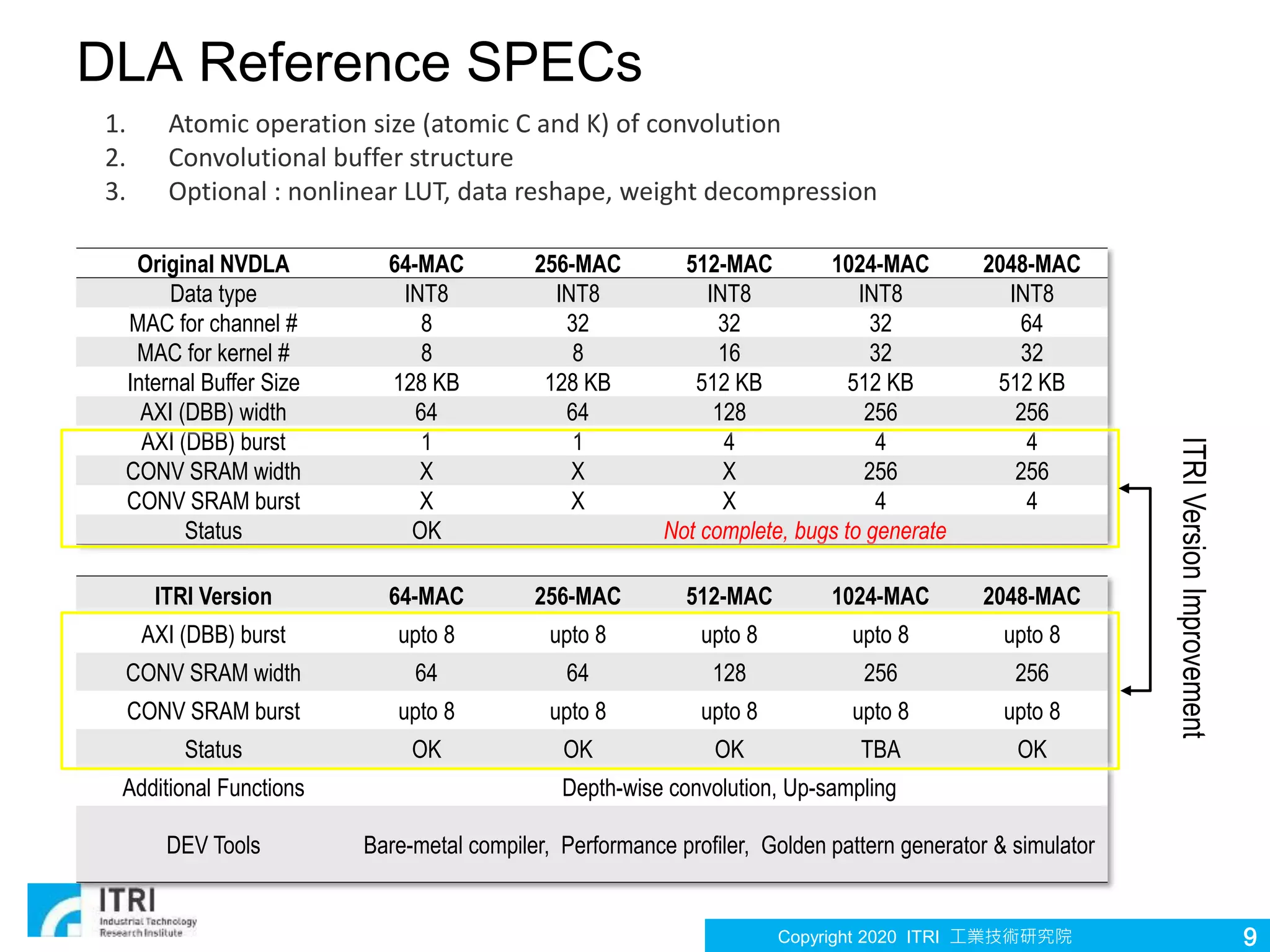

Features of DLA Hardware width

height

IN IN

IN

OUT

kernels

Stride 1, no pad

Channel first Plane first

3D CONV example

1. Variable HW resource

• Search an efficient resource to models

• Adaptive performance & power consumption

2. Suit for long-channel convolution

• Output pixel first, share IN, avoiding partial sum storage

• Support any kernel size (n x m) ,the same data flow

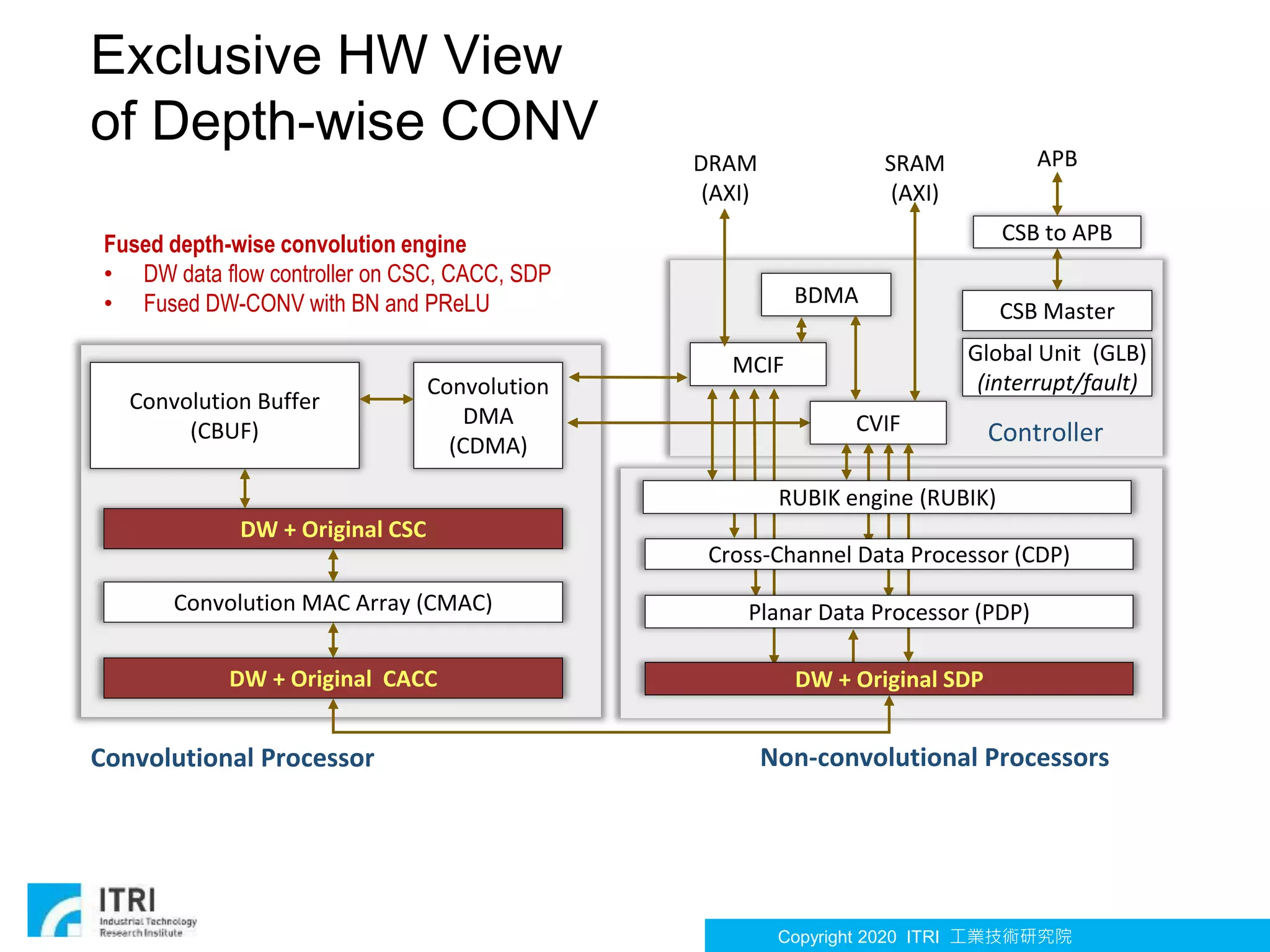

3. Revision for depth-wise convolution

• Output pixel first, channel = 1 convolution

• Support any kernel size (n x m) ,the same data flow

4. Data reuse and hetero-layer fusion

• Input reuse or weight reuse by setup

• Fuse popular layers [CONV(BN)–Quantize-PReLU–Pooling ]

5. Program time hiding

• Configure N and N+1 layer simultaneously

• Cover the configuration time during layer change](https://image.slidesharecdn.com/2020-icldla-updated-200519094016/75/2020-icldla-updated-10-2048.jpg)
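The output-pixel-first order named in items 2 and 3 can be illustrated with a small software sketch. This is not ITRI's implementation: the function names, the nested-list data layout, and the exact loop nesting are assumptions chosen for readability. It shows only the idea stated on the slide: each output pixel finishes its whole channel and kernel-window reduction before the next pixel starts, so no partial sums are stored, the input window is fetched once and shared across kernels, and any n x m kernel follows the same flow. The depth-wise variant is written as a channel = 1 convolution per plane.

```python
def conv_output_pixel_first(inp, kernels, stride=1):
    """Convolution without padding, iterating output pixels in the outer loops.

    inp:     nested lists shaped [C][H][W]
    kernels: nested lists shaped [K][C][n][m]
    returns: nested lists shaped [K][H_out][W_out]
    (Hypothetical layout, chosen for illustration only.)
    """
    C, H, W = len(inp), len(inp[0]), len(inp[0][0])
    K, n, m = len(kernels), len(kernels[0][0]), len(kernels[0][0][0])
    h_out = (H - n) // stride + 1
    w_out = (W - m) // stride + 1
    out = [[[0] * w_out for _ in range(h_out)] for _ in range(K)]

    for oy in range(h_out):
        for ox in range(w_out):
            # Fetch the input window once; every kernel reuses it ("share IN").
            window = [
                [[inp[c][oy * stride + ky][ox * stride + kx] for kx in range(m)]
                 for ky in range(n)]
                for c in range(C)
            ]
            for k in range(K):
                acc = 0  # local accumulator, covers all channels and taps
                for c in range(C):
                    for ky in range(n):
                        for kx in range(m):
                            acc += window[c][ky][kx] * kernels[k][c][ky][kx]
                # Written exactly once: no partial sum is stored and re-read.
                out[k][oy][ox] = acc
    return out


def depthwise_output_pixel_first(inp, kernels, stride=1):
    """Depth-wise convolution as one channel = 1 convolution per input plane.

    kernels: nested lists shaped [C][n][m], one filter per channel.
    """
    return [
        conv_output_pixel_first([inp[c]], [[kernels[c]]], stride)[0]
        for c in range(len(inp))
    ]


if __name__ == "__main__":
    image = [
        [[1, 2, 3], [4, 5, 6], [7, 8, 9]],   # channel 0
        [[1, 1, 1], [1, 1, 1], [1, 1, 1]],   # channel 1
    ]
    one_kernel = [[[[1, 0], [0, 1]], [[1, 1], [1, 1]]]]  # K=1, C=2, 2x2
    print(conv_output_pixel_first(image, one_kernel))  # prints [[[10, 12], [16, 18]]]
```

In a plane-first order the accumulator would instead have to be written out per channel plane and read back later, which is the partial-sum storage the slide says this data flow avoids.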