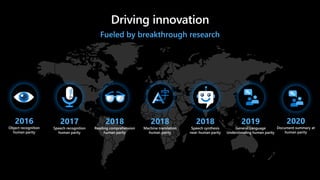

1. The Azure AI update highlights advances in AI capabilities, such as object recognition reaching human parity in 2016 and machine translation reaching human parity in 2018.

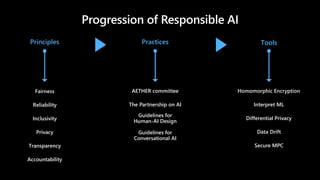

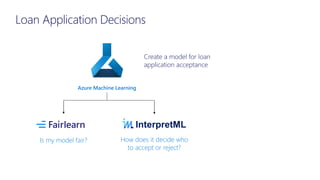

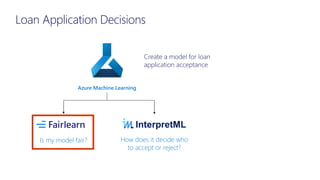

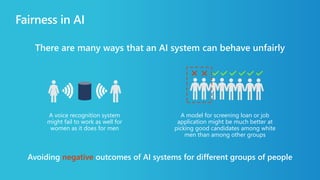

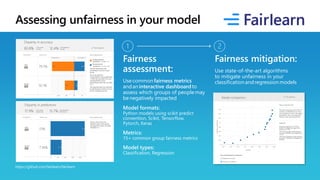

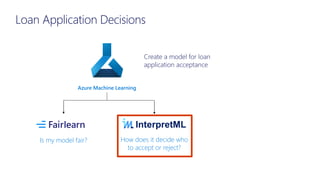

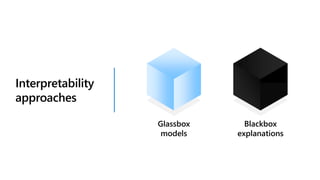

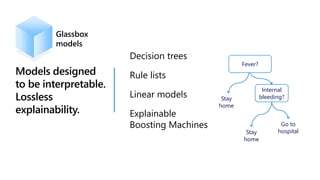

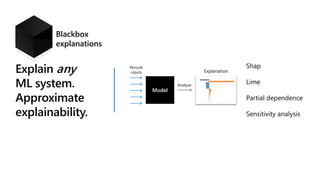

2. Responsible AI practices at Microsoft include interpretability, fairness, and privacy tools that help keep AI systems understandable, unbiased, and protective of user data.

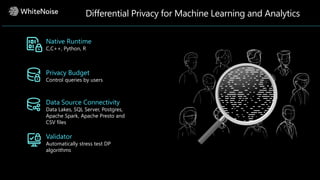

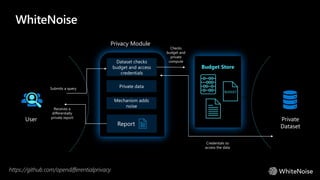

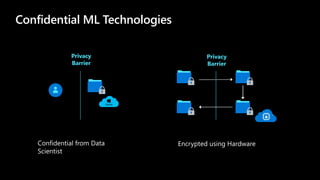

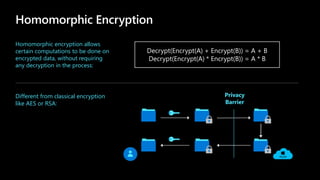

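3. Differential privacy and homomorphic encryption support private and confidential machine learning: differential privacy adds calibrated noise so that results reveal little about any individual, while homomorphic encryption allows computation, such as model inference, directly on encrypted user data.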
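
As a rough illustration of the differential privacy idea, here is a minimal sketch of the Laplace mechanism applied to a counting query. It is not taken from the slides or from any Microsoft tool. The function names (`laplace_noise`, `private_count`), the sample data, and the choice of a counting query are all assumptions made for the example.

```python
import random


def laplace_noise(scale, rng):
    """Draw one sample from Laplace(0, scale).

    The difference of two independent exponential samples with mean
    `scale` follows a Laplace distribution with that scale.
    """
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(values, predicate, epsilon, rng=None):
    """Return a noisy count of the values that satisfy `predicate`.

    Hypothetical helper: adding or removing one record changes a count
    by at most 1, so the sensitivity is 1 and the Laplace noise scale
    is 1 / epsilon. Smaller epsilon means more noise and more privacy.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    rng = rng or random.Random()
    true_count = sum(1 for v in values if predicate(v))
    sensitivity = 1.0
    return true_count + laplace_noise(sensitivity / epsilon, rng)


if __name__ == "__main__":
    ages = [34, 51, 29, 62, 45, 70]  # made-up data for the example
    noisy = private_count(ages, lambda a: a >= 50, epsilon=0.5)
    print(f"noisy count of people aged 50+: {noisy:.2f}")
```

Each run prints a different value scattered around the true count of 3. A real deployment would also need to track the total privacy budget spent across queries, which this sketch leaves out.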

![Private Prediction

on Encrypted Data

Through a trained machine

learning model, private prediction

enables inferencing on encrypted

data without revealing the content

of the data to anyone.

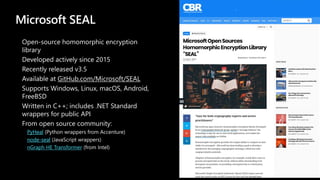

Microsoft SEAL can be deployed in

a variety of applications to protect

users personal and private data

Privacy Barrier

[cryptographic]

Medical prediction](https://image.slidesharecdn.com/20200530ml15minazureai-200602091349/85/45-Machine-Learning-15minutes-Broadcast-Azure-AI-Build-2020-Updates-30-320.jpg)
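
To make the private prediction idea concrete, the sketch below scores a linear model on encrypted features using a toy Paillier cryptosystem. This is an assumption made for illustration, not the slide's method: Microsoft SEAL implements different schemes (BFV and CKKS) and is a C++ library, whereas Paillier is swapped in here because it fits in a few lines of pure Python. The tiny primes, the function names, and the example weights are all hypothetical, and the code is not secure for real use.

```python
import math
import random


def keygen(p, q):
    """Build a toy Paillier key pair from two small primes (insecure sizes)."""
    n = p * q
    lam = (p - 1) * (q - 1) // math.gcd(p - 1, q - 1)  # lcm(p-1, q-1)
    mu = pow(lam, -1, n)  # modular inverse, valid because g = n + 1
    return n, (lam, mu)


def encrypt(n, m, rng):
    """Encrypt integer m under public key n with generator g = n + 1."""
    n2 = n * n
    while True:
        r = rng.randrange(1, n)
        if math.gcd(r, n) == 1:
            break
    return (pow(n + 1, m % n, n2) * pow(r, n, n2)) % n2


def decrypt(n, priv, c):
    """Decrypt and map the result back to a signed integer."""
    lam, mu = priv
    m = ((pow(c, lam, n * n) - 1) // n * mu) % n
    return m - n if m > n // 2 else m


def encrypted_linear_score(n, enc_features, weights, bias, rng):
    """Server side: compute Enc(w . x + b) without seeing x.

    Multiplying ciphertexts adds plaintexts; raising a ciphertext to
    the power w multiplies its plaintext by w.
    """
    n2 = n * n
    acc = encrypt(n, bias, rng)
    for c, w in zip(enc_features, weights):
        acc = (acc * pow(c, w % n, n2)) % n2
    return acc


def demo():
    rng = random.Random(42)
    n, priv = keygen(1009, 1013)           # client generates keys
    features = [3, 5, 2]                   # client's private input
    enc = [encrypt(n, x, rng) for x in features]
    weights, bias = [2, -1, 4], 7          # server's plaintext model
    enc_score = encrypted_linear_score(n, enc, weights, bias, rng)
    return decrypt(n, priv, enc_score)     # only the client can decrypt


if __name__ == "__main__":
    print(demo())  # 2*3 - 1*5 + 4*2 + 7 = 16
```

The server only ever handles ciphertexts and its own weights, which is the "privacy barrier" the slide refers to. Paillier supports only additions and multiplication by plaintext constants, so this covers a linear score; schemes such as those in SEAL are needed for richer models.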