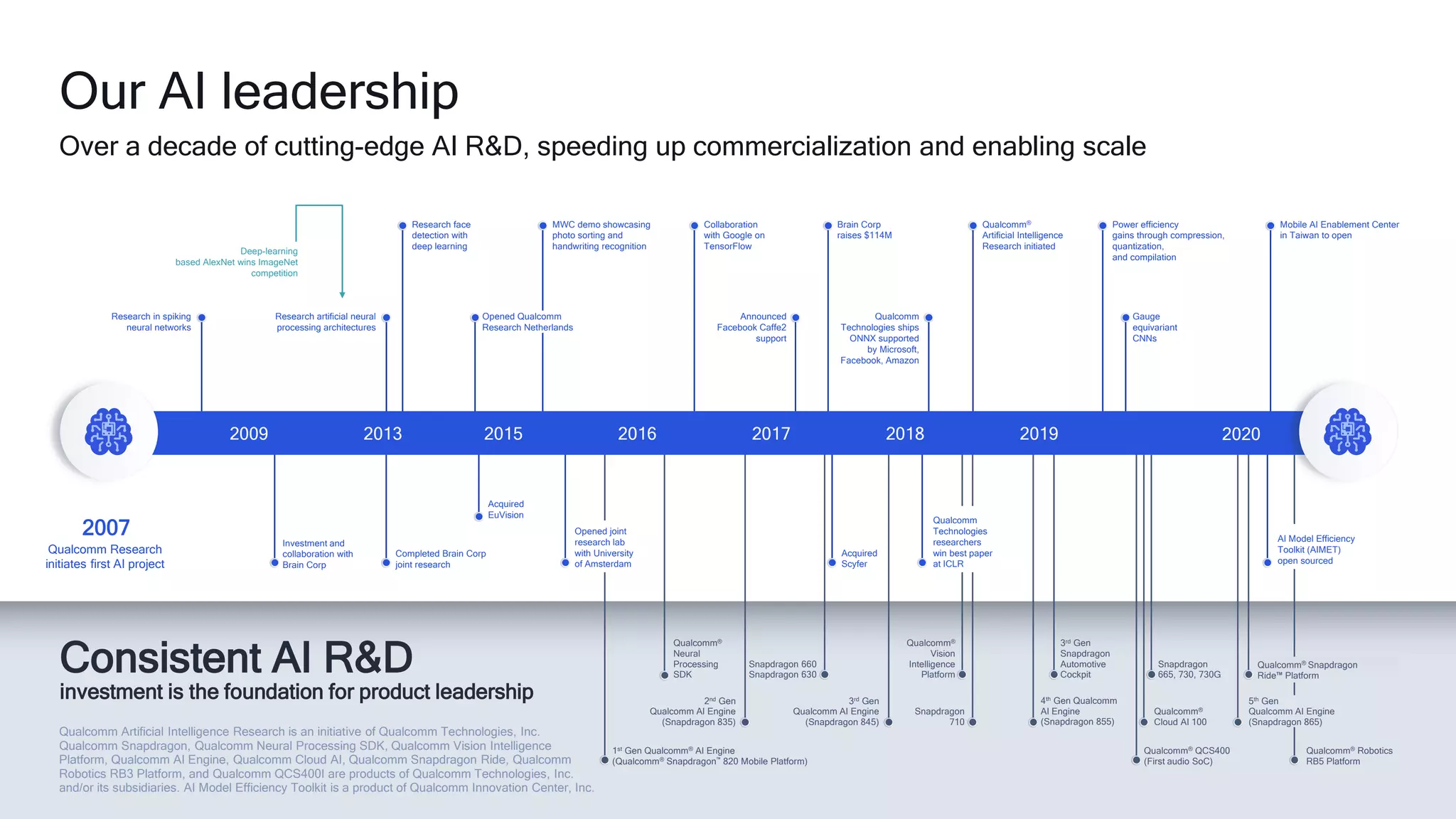

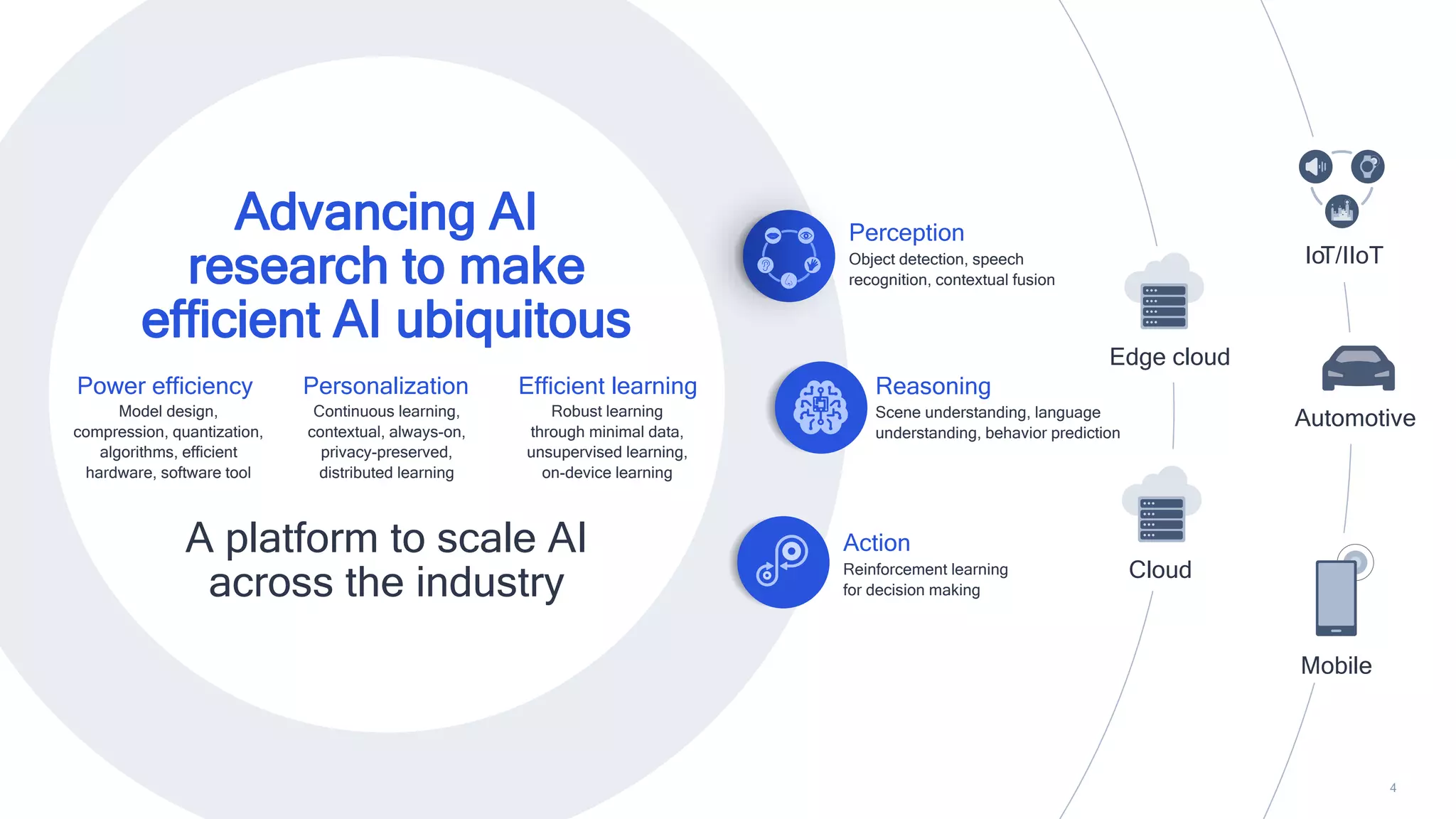

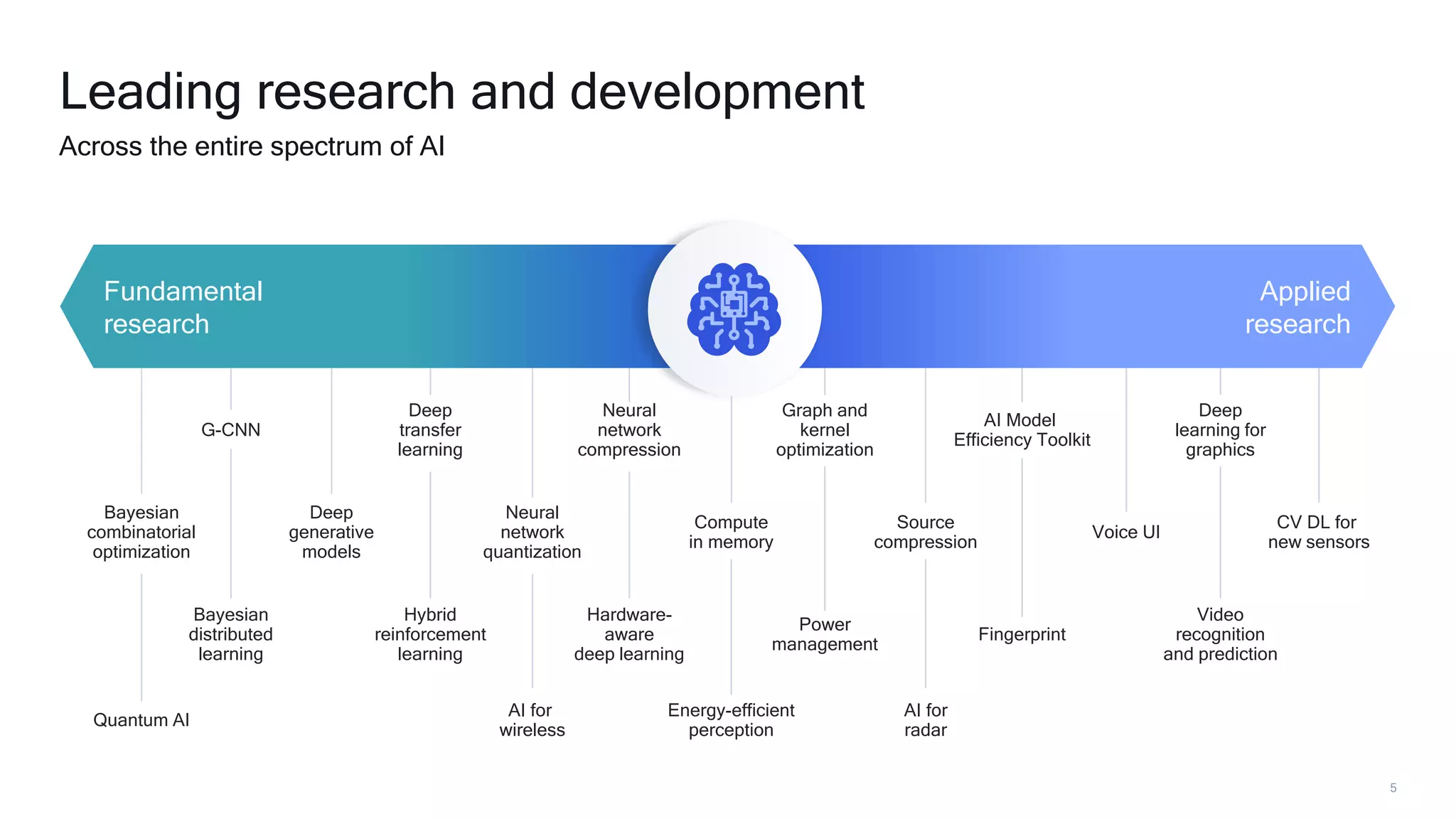

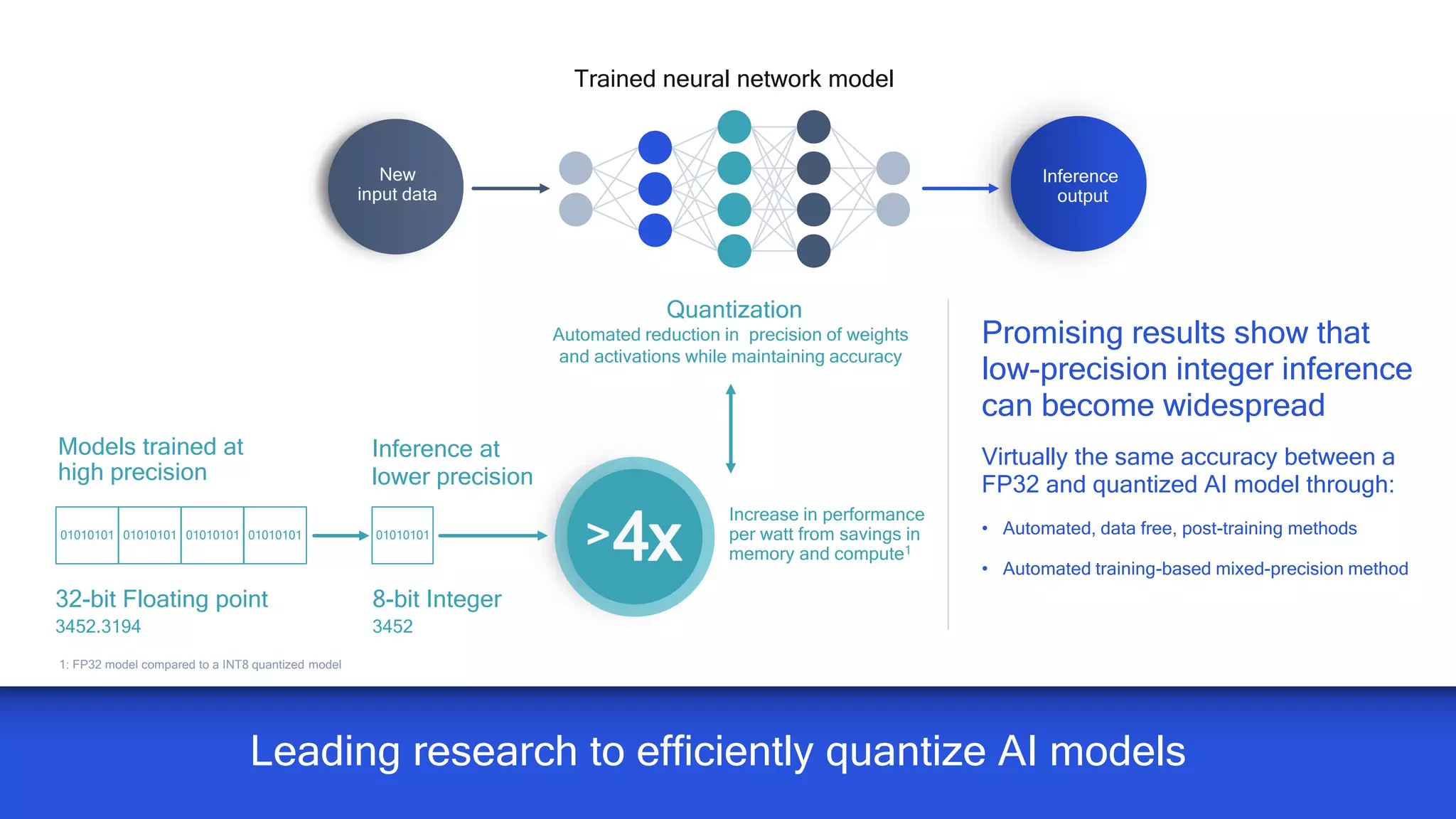

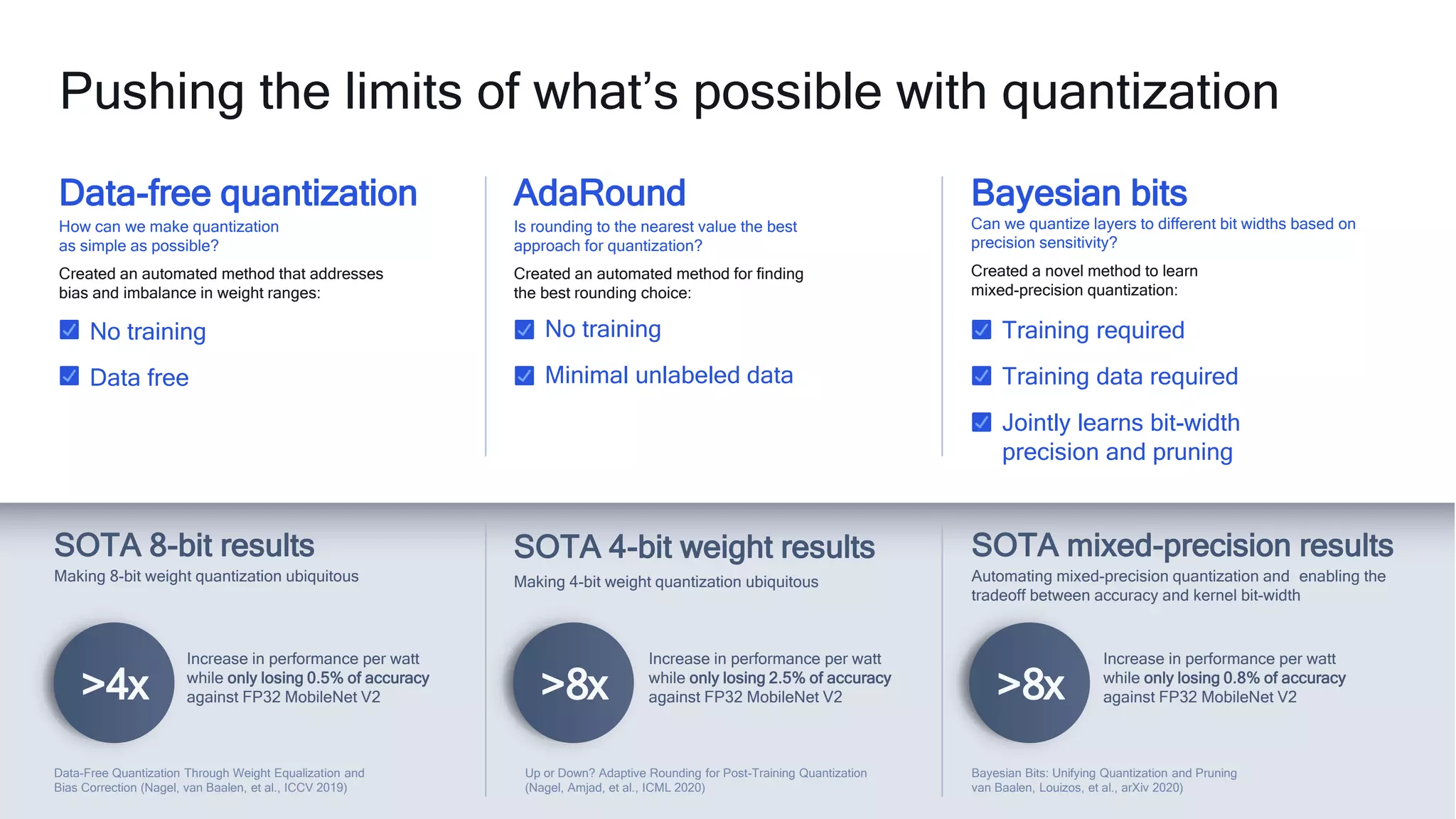

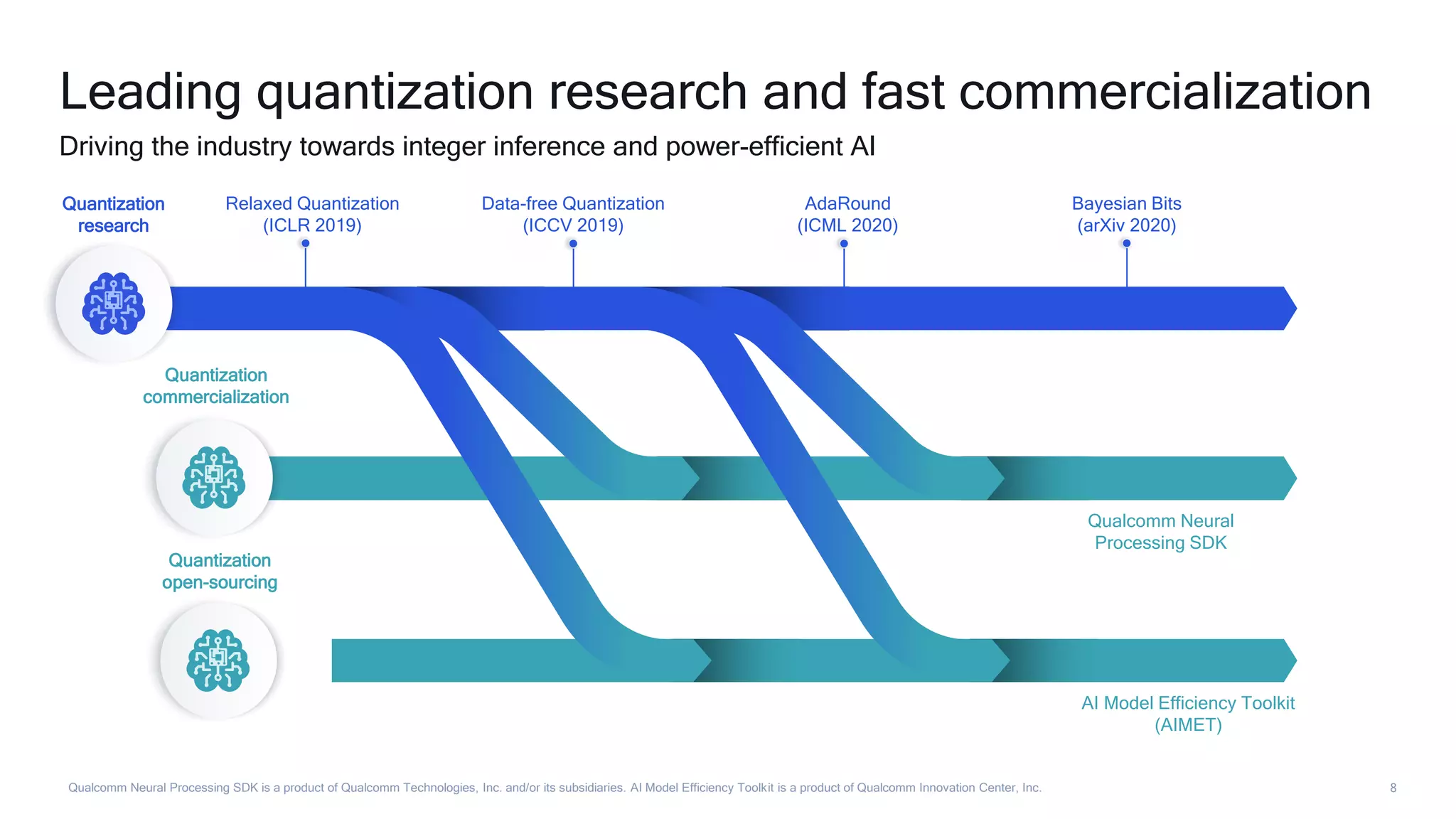

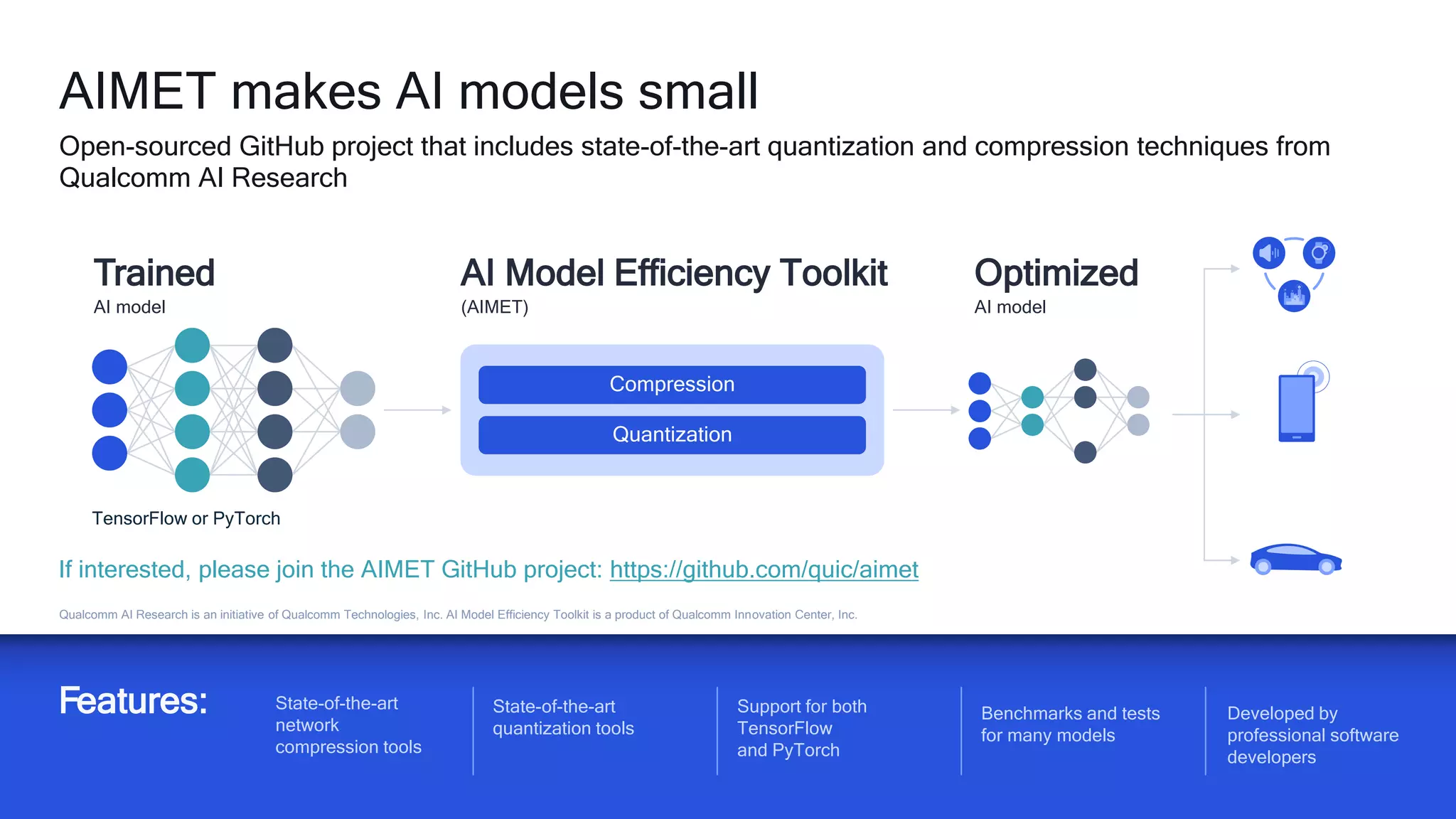

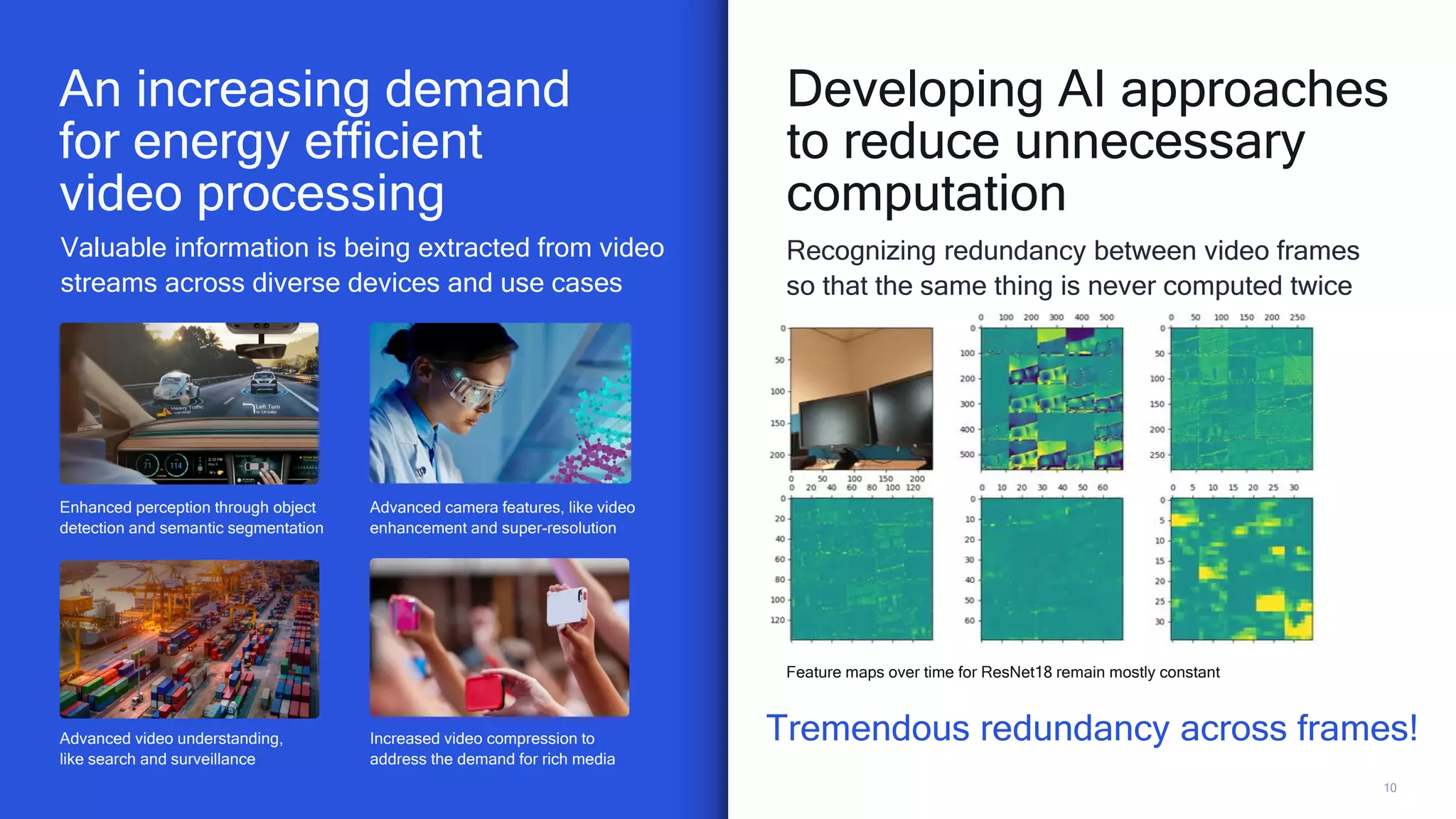

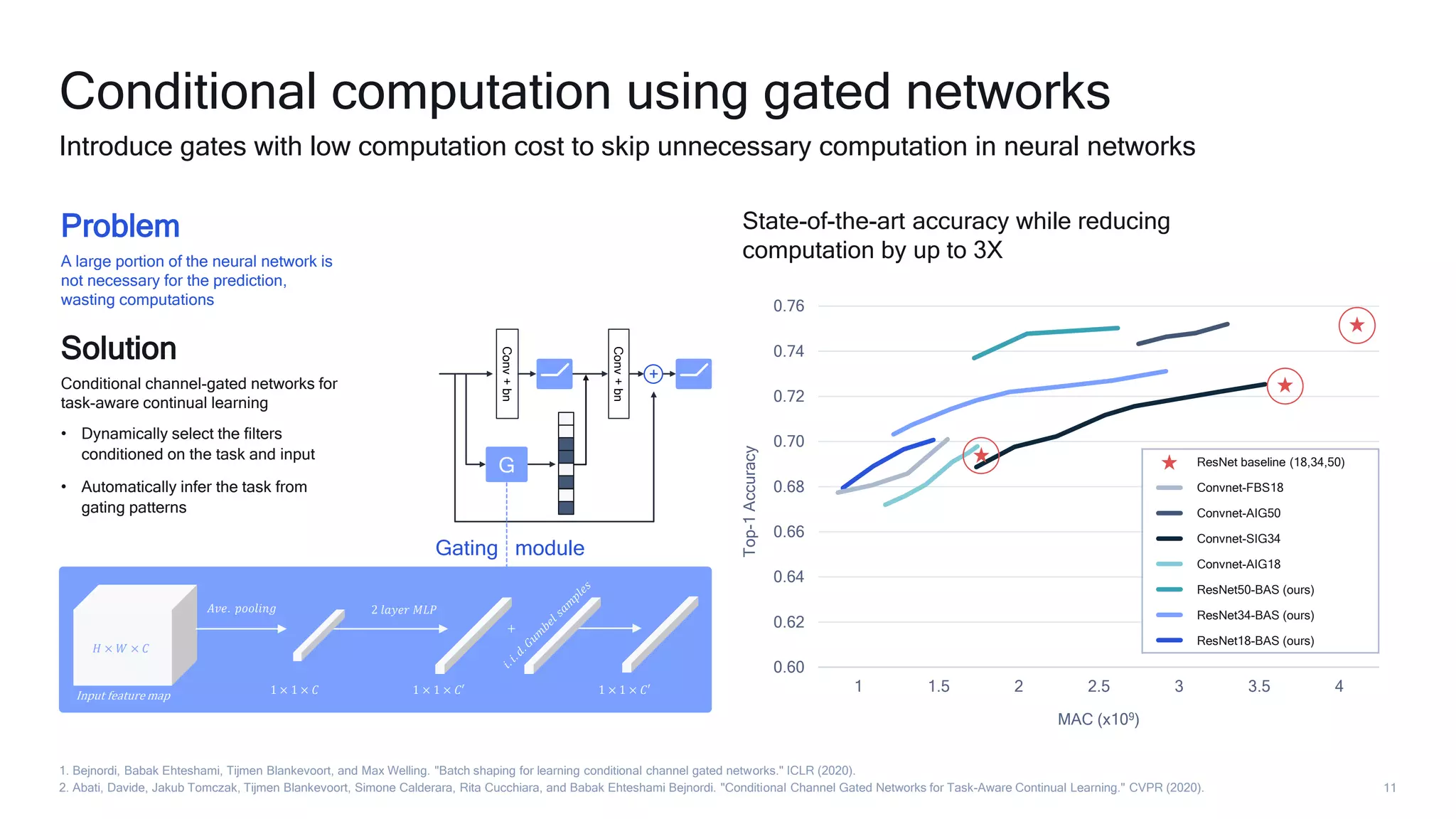

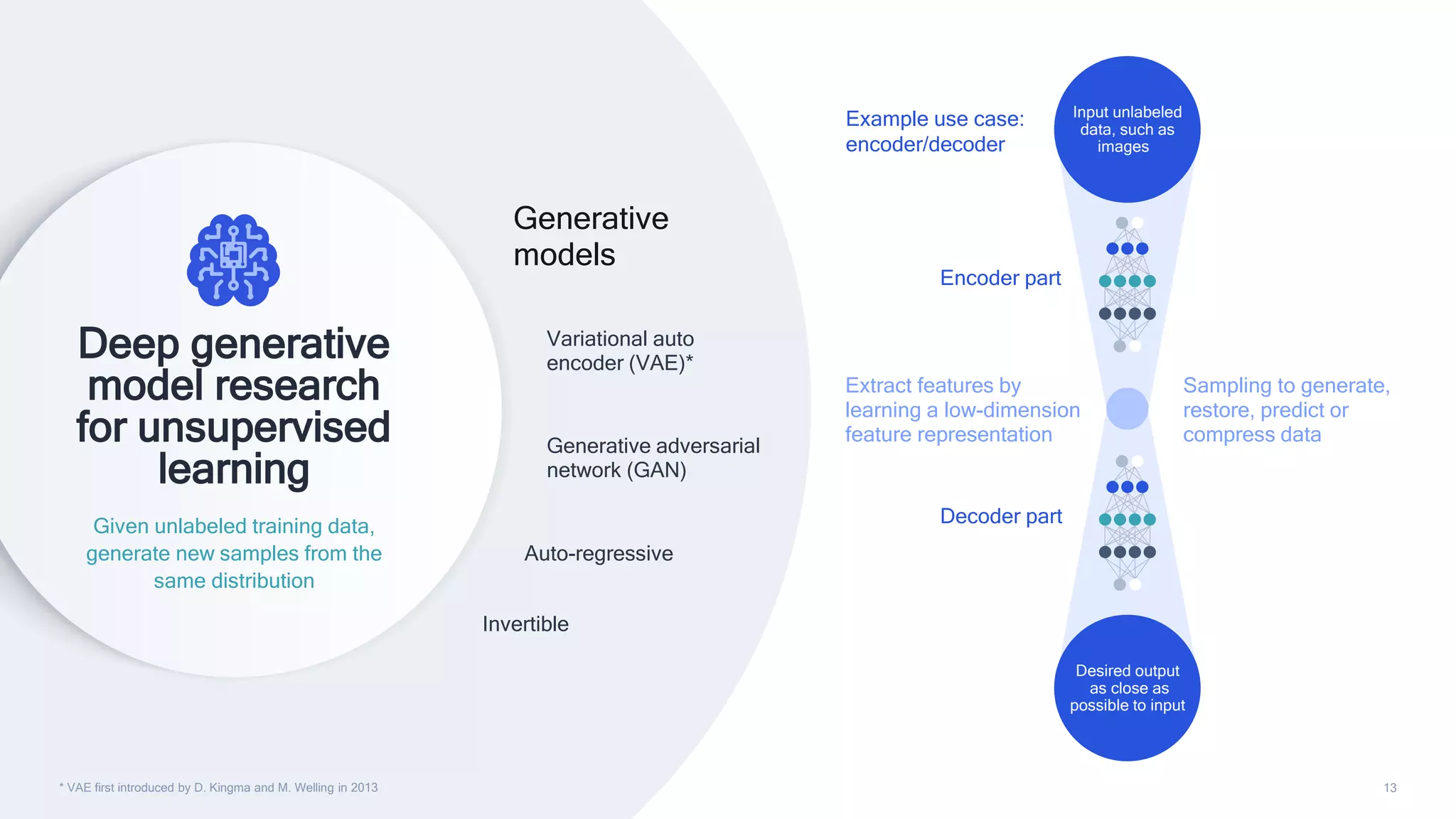

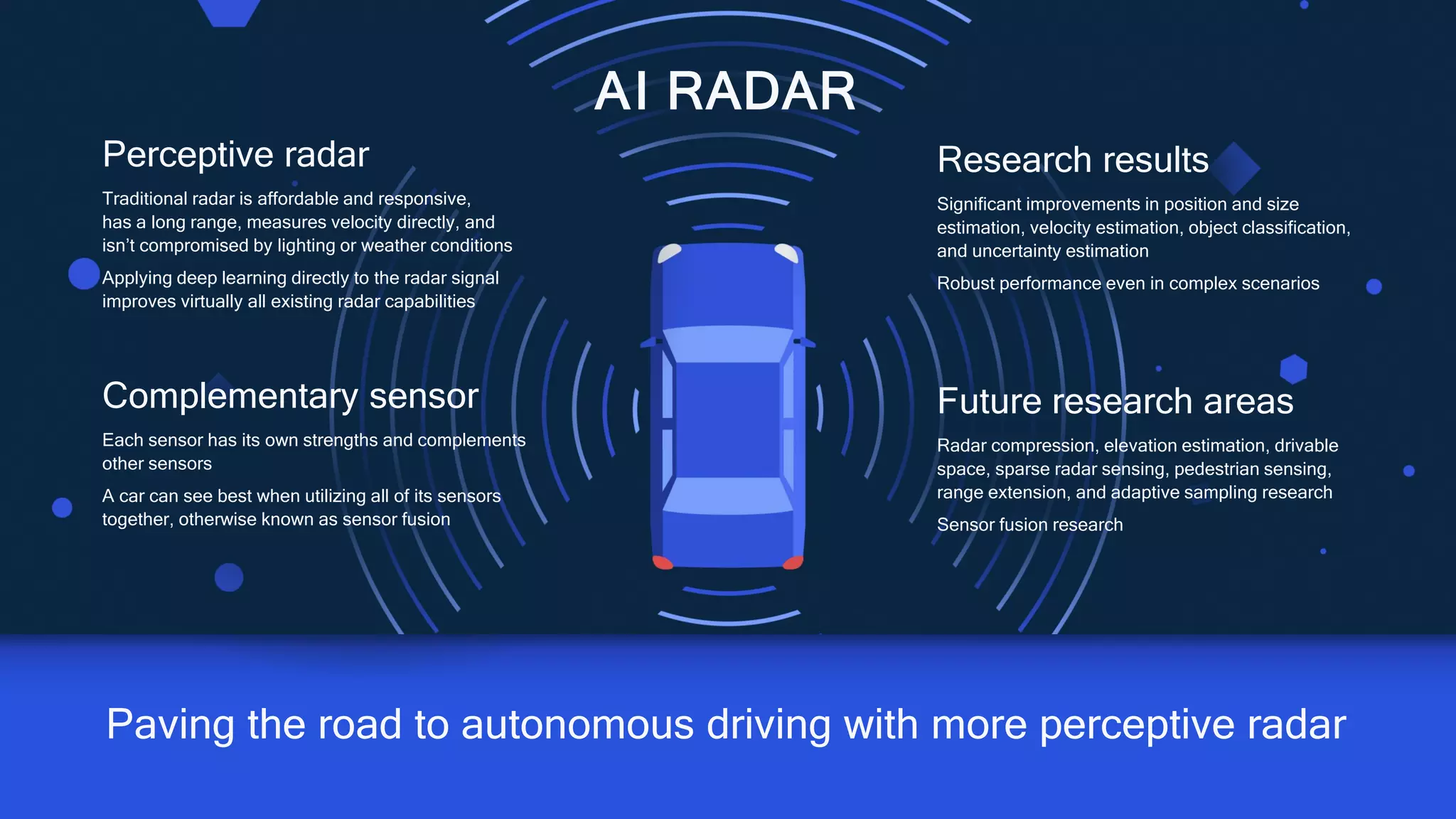

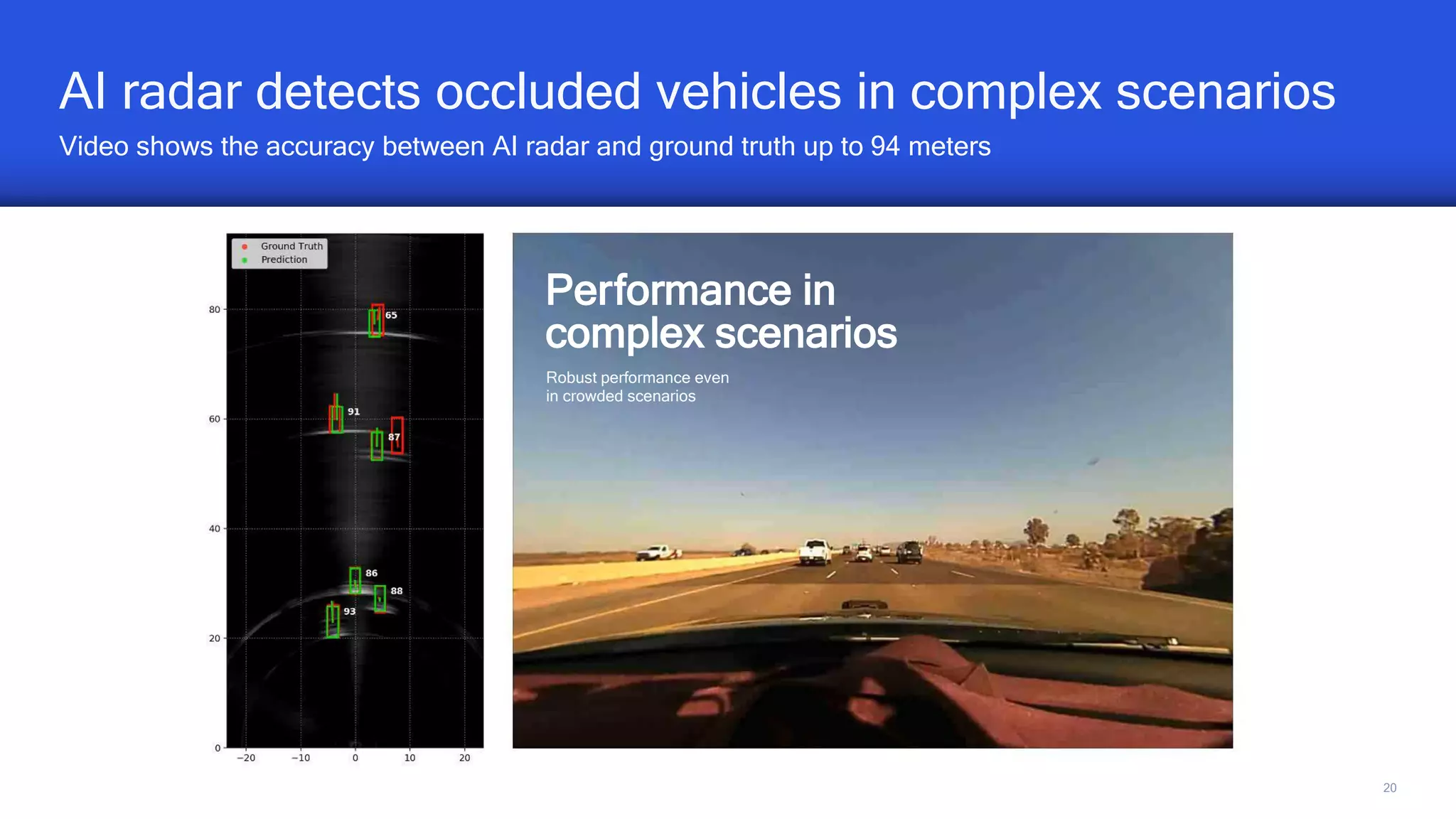

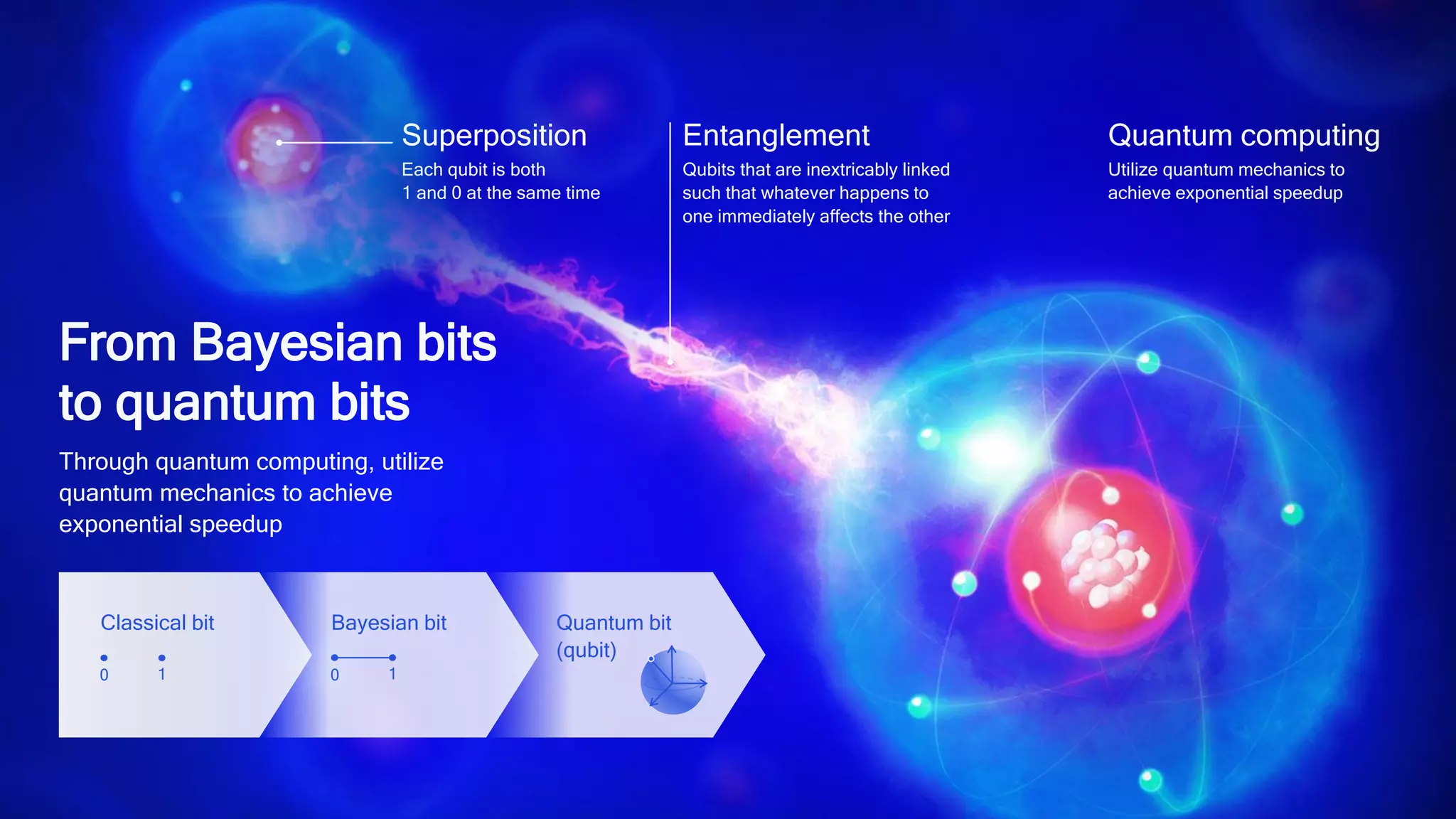

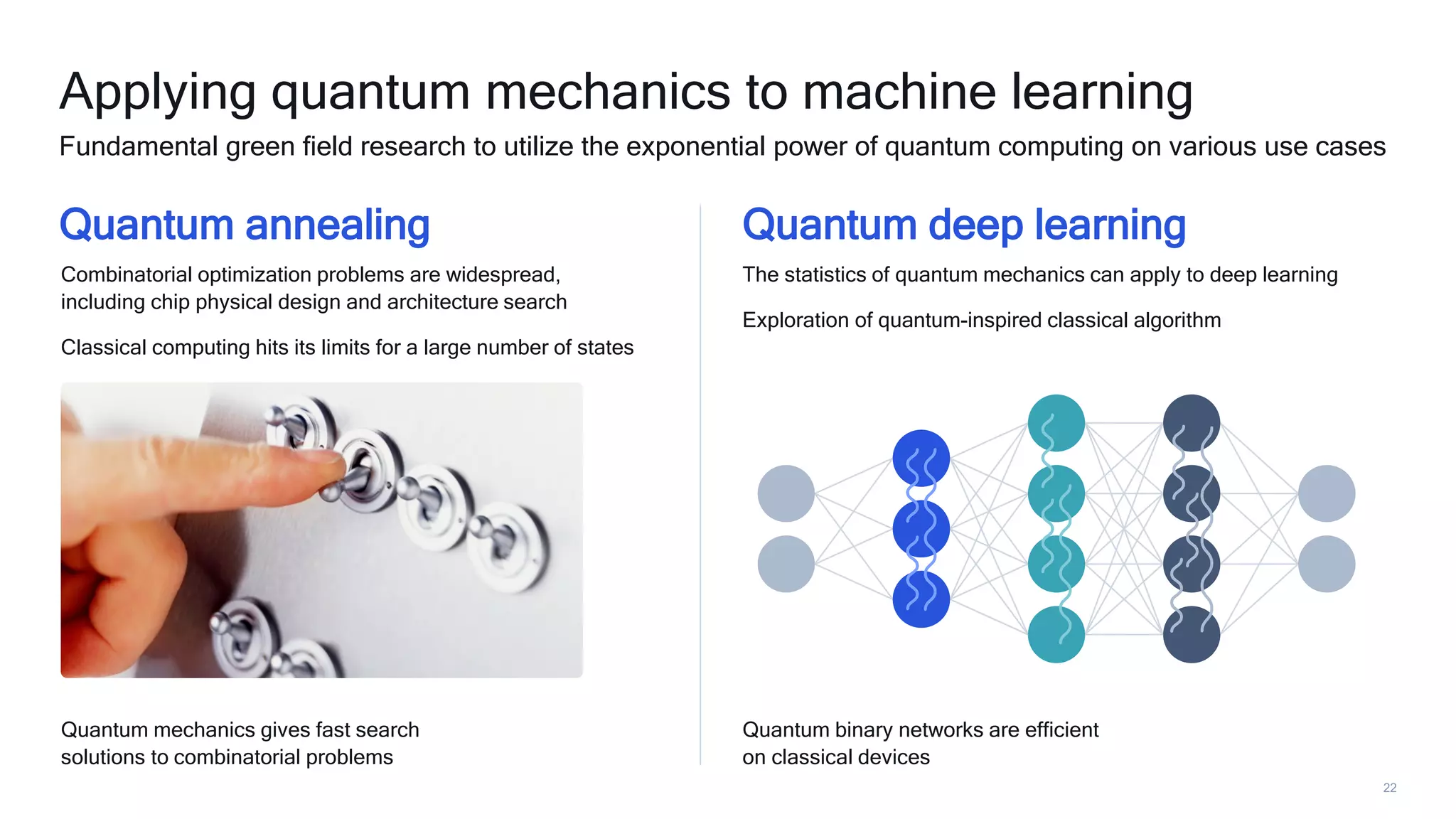

Qualcomm Technologies, Inc. highlights its extensive investment in AI research and development, showcasing advances in its AI engines and collaborations with leading companies such as Google and Facebook. The document emphasizes breakthroughs in quantization and model efficiency, along with applications of AI in wireless communication, video processing, and autonomous driving. It also outlines future research directions and the role of AI in optimizing performance and enabling scalable solutions across industries.