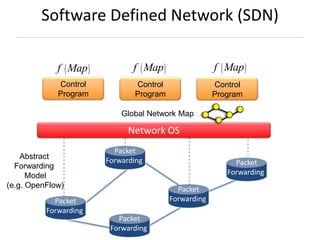

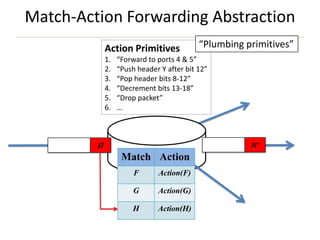

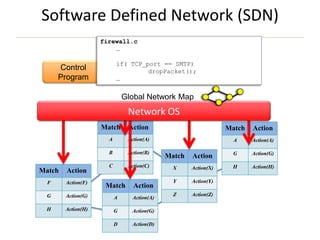

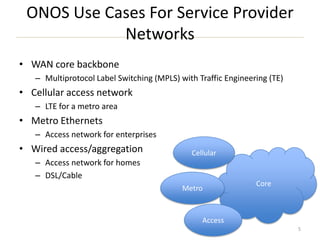

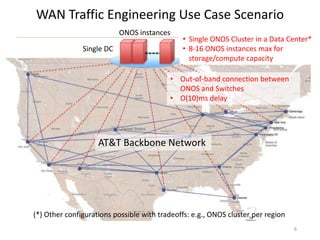

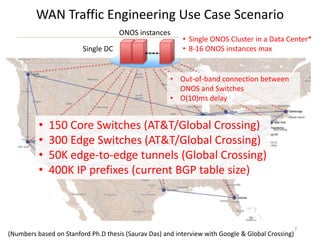

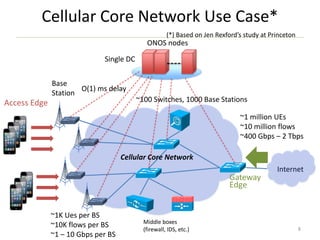

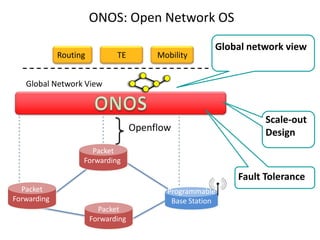

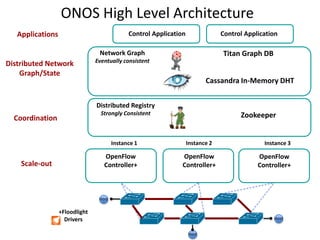

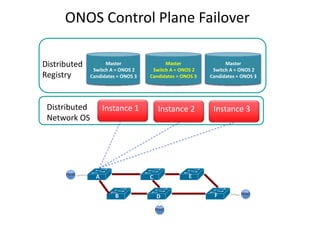

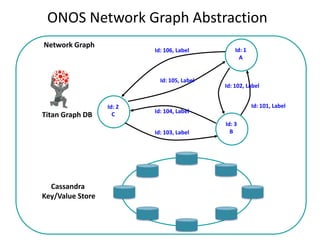

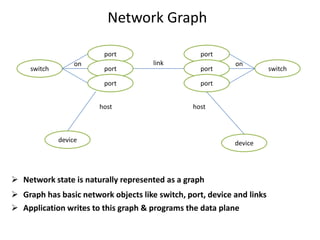

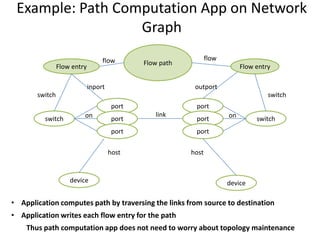

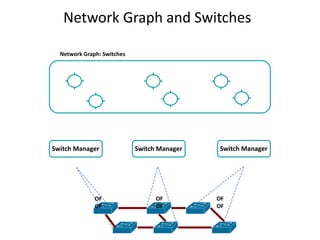

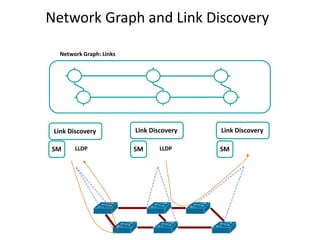

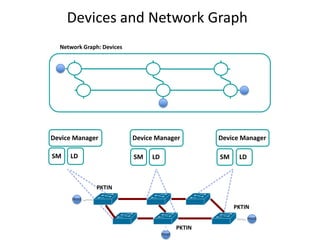

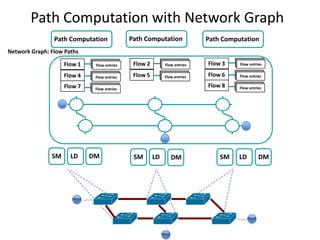

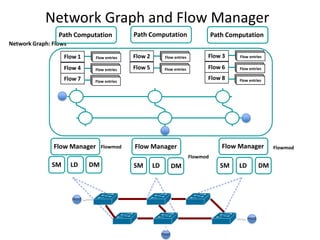

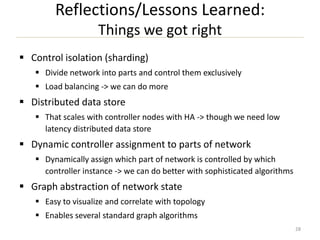

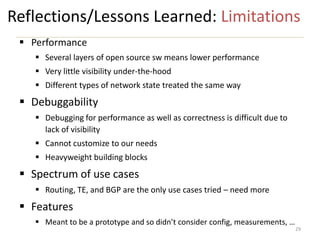

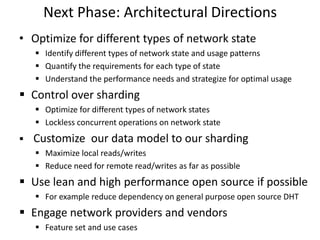

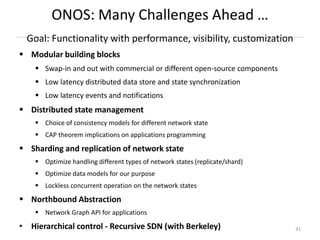

ONOS (Open Network Operating System) is an open-source, distributed software-defined networking (SDN) operating system designed for use cases including WAN, cellular, and enterprise networks. It emphasizes a global network view, fault tolerance, and scalability, and its control plane is built around a network graph abstraction. This document outlines the architecture, use cases, and operational strategies of ONOS, along with lessons learned from its development and implementation.
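To make the idea of a network graph abstraction more concrete, here is a minimal Python sketch. It is not ONOS code and does not reflect the ONOS API; the class `NetworkGraph` and its methods are hypothetical. The sketch keeps one global view in which devices are vertices and links are edges. Applications ask that view for a path instead of querying individual switches. A link-down event only updates the graph, so the next path query routes around the failure. ONOS itself is written in Java and distributes this state across a cluster of controller instances. The sketch leaves out distribution, fault tolerance, and scalability entirely.

```python
from __future__ import annotations

from collections import deque
from dataclasses import dataclass, field


@dataclass
class NetworkGraph:
    """Hypothetical global network view: devices are vertices, links are edges."""

    links: dict[str, set[str]] = field(default_factory=dict)

    def add_device(self, device: str) -> None:
        self.links.setdefault(device, set())

    def add_link(self, a: str, b: str) -> None:
        # Links are treated as bidirectional in this sketch.
        self.add_device(a)
        self.add_device(b)
        self.links[a].add(b)
        self.links[b].add(a)

    def remove_link(self, a: str, b: str) -> None:
        # Models a link-down event reported to the control plane.
        self.links.get(a, set()).discard(b)
        self.links.get(b, set()).discard(a)

    def shortest_path(self, src: str, dst: str) -> list[str] | None:
        # Breadth-first search returns a minimum-hop path over the current view,
        # or None if either device is unknown or dst is unreachable.
        if src not in self.links or dst not in self.links:
            return None
        prev: dict[str, str | None] = {src: None}
        queue = deque([src])
        while queue:
            node = queue.popleft()
            if node == dst:
                path = []
                cur: str | None = node
                while cur is not None:
                    path.append(cur)
                    cur = prev[cur]
                return path[::-1]
            # Sorting neighbors makes tie-breaking between equal-length paths deterministic.
            for nbr in sorted(self.links[node]):
                if nbr not in prev:
                    prev[nbr] = node
                    queue.append(nbr)
        return None


# Usage: two equal-length paths between s1 and s3.
g = NetworkGraph()
g.add_link("s1", "s2")
g.add_link("s2", "s3")
g.add_link("s1", "s4")
g.add_link("s4", "s3")
print(g.shortest_path("s1", "s3"))  # ['s1', 's2', 's3']
g.remove_link("s2", "s3")           # link failure updates the global view
print(g.shortest_path("s1", "s3"))  # ['s1', 's4', 's3']
```

The main design choice here is to keep topology state in one shared graph. Applications then run ordinary graph algorithms on that graph instead of each tracking network state on its own.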