This document gives an overview of Google BigQuery, a fully managed analytics data warehouse for petabyte-scale analysis that needs no database administrator. It covers installation, command-line usage, tips for efficient data management, and pricing for storage and queries. It also discusses the advantages of BigQuery for managing large datasets and offers sample queries and operations to get started.

![Activate your GCP account (1)

1. Preparation (create an account)

2. Go to Google Cloud Platform (if you do not have an account yet)

3. Click “Try It Free”

https://cloud.google.com

nemo@ubuntu-14:~$ gcloud init

Welcome! This command will take you through the configuration of gcloud.

Your current configuration has been set to: [default]

To continue, you must log in. Would you like to log in (Y/n)?

Go to the following link in your browser:

https://accounts.google.com/o/oauth2/auth?redirect_uri=ur&xxxxxxxxxxxxx

xxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxx

xxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxx

access_type=offline

Enter verification code: xxxxxxxxxxxxxxxxxxxxxxxxxxxxxx

You are now logged in as: [xxxx@example.com]

This account has no projects. Please create one in developers console (https://console.developers.google.com/project) before running this command.

nemo@ubuntu-14:~$](https://image.slidesharecdn.com/bigquery2-160615091810/85/Big-query-Command-line-tools-and-Tips-MOSG-11-320.jpg)

![Activate your GCP account (2)](https://image.slidesharecdn.com/bigquery2-160615091810/85/Big-query-Command-line-tools-and-Tips-MOSG-12-320.jpg)

![https://accounts.google.com/o/oauth2/auth?redirect_uri=ur&xxxxxxxxxxxxxxxxx

xxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxx

xxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxx

access_type=offline

Enter verification code: xxxxxxxxxxxxxxxxxxxxxxxxxxxxxx

You are now logged in as: [xxxx@example.com]

Enter the verification code at the prompt.

Activate your GCP account (6)](https://image.slidesharecdn.com/bigquery2-160615091810/85/Big-query-Command-line-tools-and-Tips-MOSG-16-320.jpg)

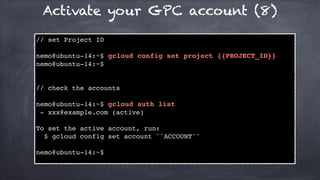

![You are now logged in as: [xxxx@example.com]

Check that the account shown is the one you expect.

Activate your GCP account (7)](https://image.slidesharecdn.com/bigquery2-160615091810/85/Big-query-Command-line-tools-and-Tips-MOSG-17-320.jpg)
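
The account check can also be scripted. The sketch below is one possible approach, not part of the original slides: it assumes the output of `gcloud auth list --format=json` is a JSON list of objects with `account` and `status` fields, and the helper name `active_accounts` is hypothetical. The sample string stands in for real command output.

```python
import json


def active_accounts(auth_list_json):
    """Return accounts marked ACTIVE in JSON text shaped like `gcloud auth list --format=json`.

    The field names "account" and "status" are an assumption about that output.
    """
    entries = json.loads(auth_list_json)
    return [entry["account"] for entry in entries if entry.get("status") == "ACTIVE"]


if __name__ == "__main__":
    # Sample text standing in for real command output (placeholder address from the slides).
    sample = '[{"account": "xxxx@example.com", "status": "ACTIVE"}]'
    print(active_accounts(sample))
```

To use it with the real tool, the output of the command could be captured with `subprocess.run(..., capture_output=True, text=True)` and passed to the helper.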

![Create table and import data (2)

Schema (schema.json)

[
  {"name": "id", "type": "INTEGER"},
  {"name": "name", "type": "STRING"},
  {"name": "engineer_type", "type": "INTEGER"}
]](https://image.slidesharecdn.com/bigquery2-160615091810/85/Big-query-Command-line-tools-and-Tips-MOSG-26-320.jpg)
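
Before loading data, it can help to sanity-check the schema. The following is a minimal sketch under stated assumptions: the list of accepted types is a small, non-exhaustive subset chosen for illustration, and the table name `mydataset.engineers` and the file `data.csv` are hypothetical. It only builds the `bq load` argument list and does not run it.

```python
import json

# A small, non-exhaustive set of field types, chosen only for this sketch.
KNOWN_TYPES = {"INTEGER", "STRING", "FLOAT", "BOOLEAN", "TIMESTAMP"}

# The schema from the slide above.
SCHEMA = [
    {"name": "id", "type": "INTEGER"},
    {"name": "name", "type": "STRING"},
    {"name": "engineer_type", "type": "INTEGER"},
]


def validate_schema(fields):
    """Return a list of problems found in a schema field list (empty if none)."""
    problems = []
    seen = set()
    for index, field in enumerate(fields):
        name = field.get("name")
        field_type = field.get("type")
        if not name:
            problems.append(f"field {index}: missing name")
        elif name in seen:
            problems.append(f"field {index}: duplicate name {name!r}")
        else:
            seen.add(name)
        if field_type not in KNOWN_TYPES:
            problems.append(f"field {index}: unknown type {field_type!r}")
    return problems


def build_load_command(table, data_file, schema_file):
    """Build (but do not run) an argument list for a CSV load with the bq tool."""
    return ["bq", "load", "--source_format=CSV", table, data_file, schema_file]


if __name__ == "__main__":
    assert validate_schema(SCHEMA) == []
    # Print the schema as JSON text; redirect it to schema.json if desired.
    print(json.dumps(SCHEMA, indent=2))
    # Hypothetical table and data file names.
    print(" ".join(build_load_command("mydataset.engineers", "data.csv", "schema.json")))
```

Keeping the command as a list rather than a single string makes it straightforward to pass to `subprocess.run` later without shell quoting problems.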