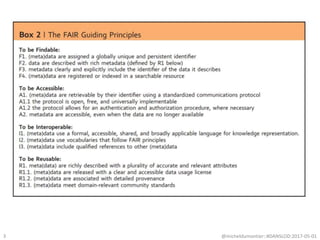

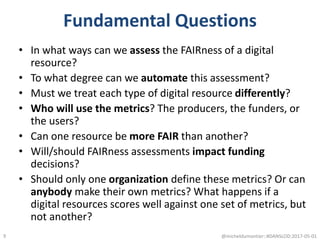

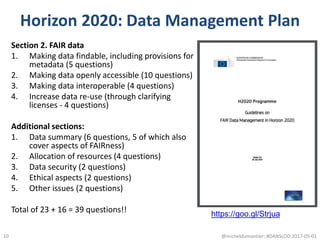

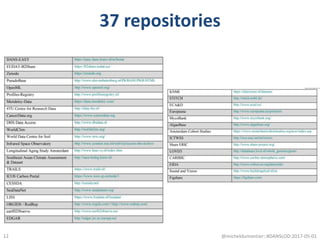

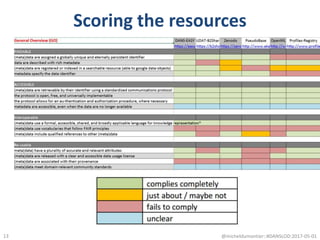

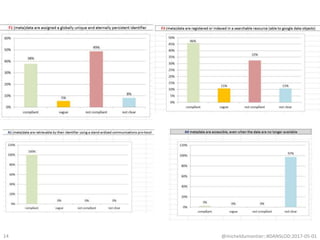

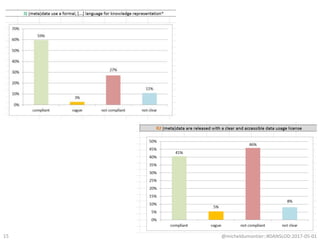

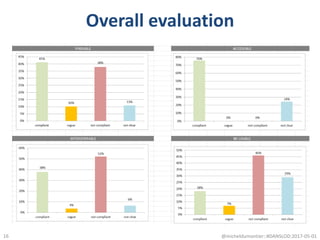

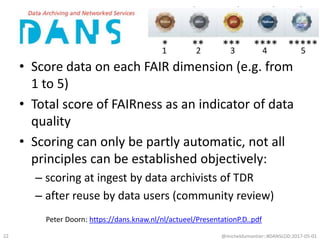

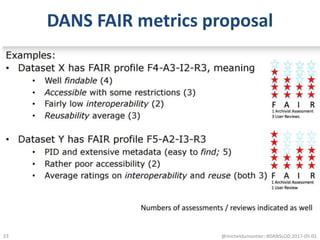

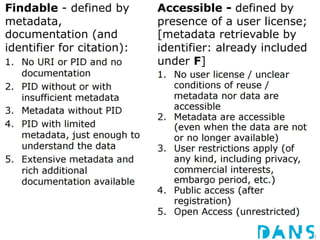

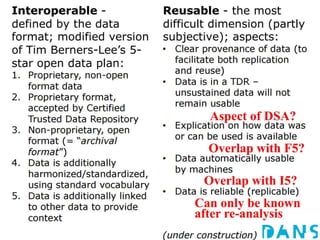

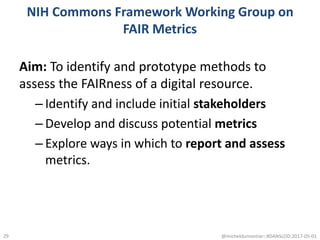

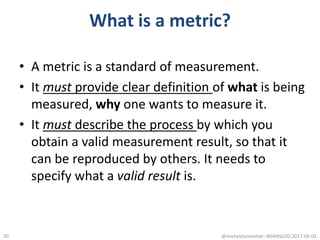

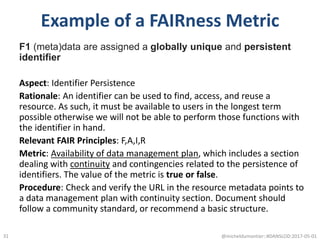

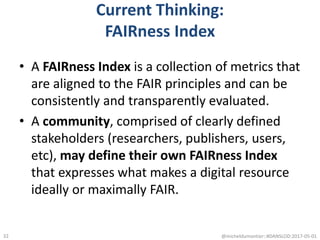

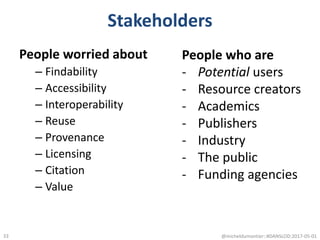

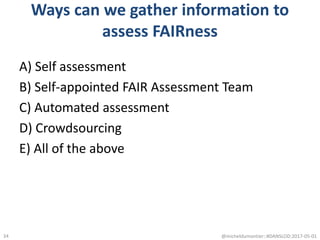

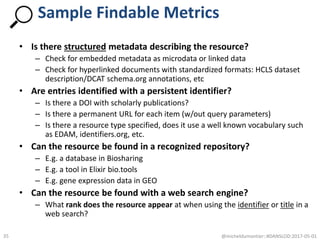

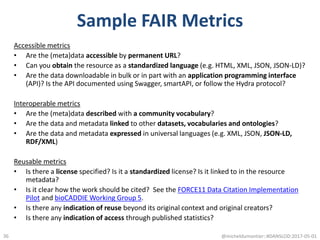

This document discusses developing metrics to assess how well digital resources adhere to the FAIR principles: findability, accessibility, interoperability, and reusability. It gives examples of candidate metrics for each principle, and it discusses why standardized, automated metrics are hard to develop given the differences among resource types and communities. The goal is to define FAIRness indices, each composed of agreed-upon metrics, to help improve the findability, accessibility, interoperability, and reusability of digital resources.
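
To make the idea of an index composed of metrics concrete, here is a minimal sketch in Python. Everything in it is an assumption made for illustration: the `Metric` type, the four example checks, the metadata field names (`identifier`, `access_url`, `format`, `license`), and the scoring rule (the fraction of metrics passed per principle) are hypothetical and are not the metrics or the index proposed in the slides.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

# Hypothetical resource description: a flat metadata dictionary.
Resource = Dict[str, object]


@dataclass(frozen=True)
class Metric:
    principle: str                     # one of "F", "A", "I", "R"
    name: str
    check: Callable[[Resource], bool]  # True if the resource passes


# Illustrative checks only; a real set would be agreed on by a community.
EXAMPLE_METRICS: List[Metric] = [
    Metric("F", "has_identifier", lambda r: bool(r.get("identifier"))),
    Metric(
        "A",
        "has_access_url",
        lambda r: str(r.get("access_url", "")).startswith(("http://", "https://")),
    ),
    Metric("I", "declares_format", lambda r: bool(r.get("format"))),
    Metric("R", "declares_license", lambda r: bool(r.get("license"))),
]


def fairness_index(
    resource: Resource, metrics: List[Metric] = EXAMPLE_METRICS
) -> Dict[str, float]:
    """Return, per principle, the fraction of its metrics the resource passes."""
    total: Dict[str, int] = {}
    passed: Dict[str, int] = {}
    for metric in metrics:
        total[metric.principle] = total.get(metric.principle, 0) + 1
        if metric.check(resource):
            passed[metric.principle] = passed.get(metric.principle, 0) + 1
    return {p: passed.get(p, 0) / n for p, n in total.items()}


# Hypothetical resource with an identifier and a license, but no access URL or format.
example = {"identifier": "doi:10.0000/example", "license": "CC-BY-4.0"}
scores = fairness_index(example)
```

Scoring each principle separately, rather than collapsing everything into one number, keeps visible which principle a resource falls short on. Different communities could also swap in their own metric lists without changing the scoring function, which is one way to accommodate the differences among resource types noted above.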