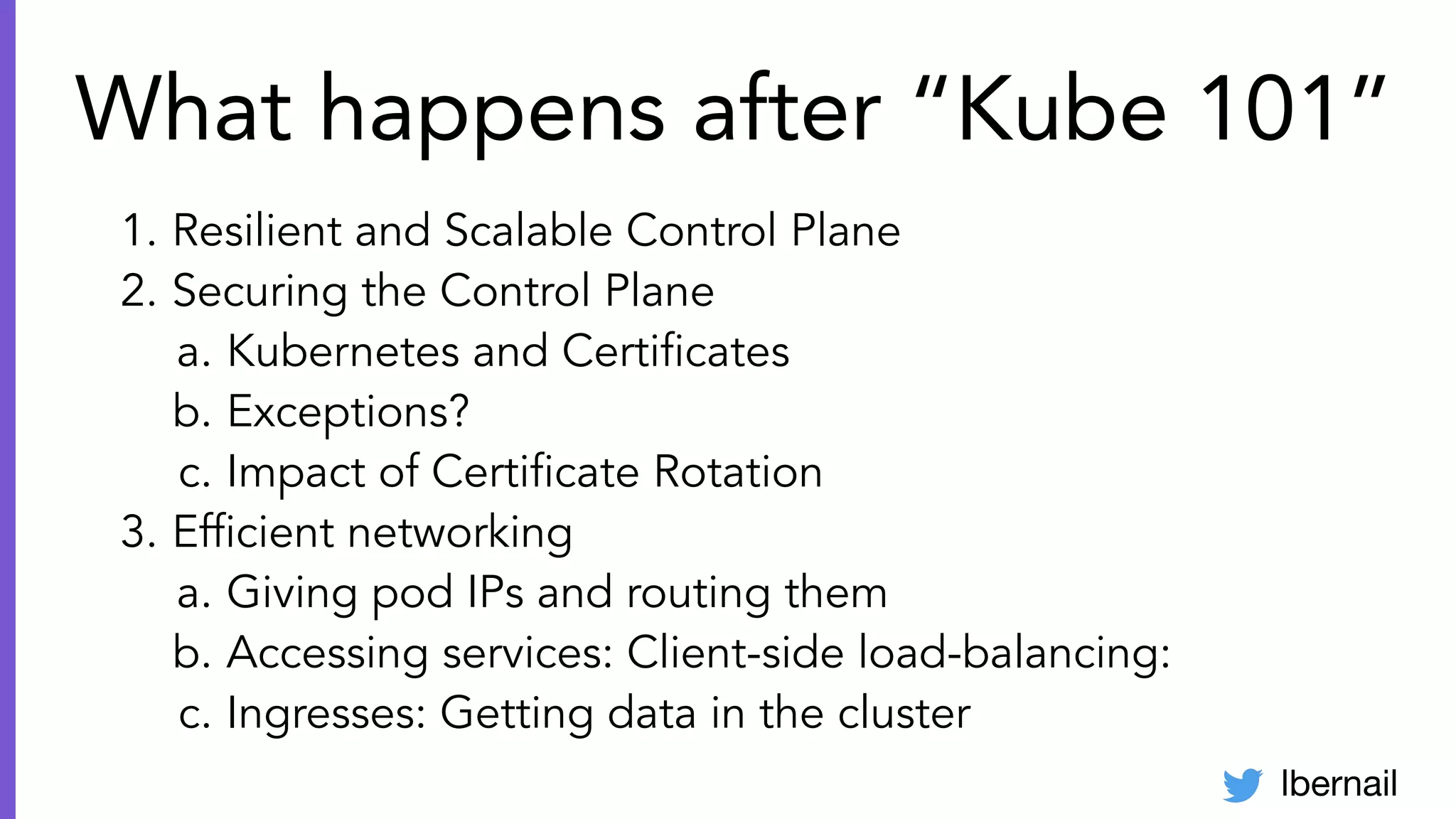

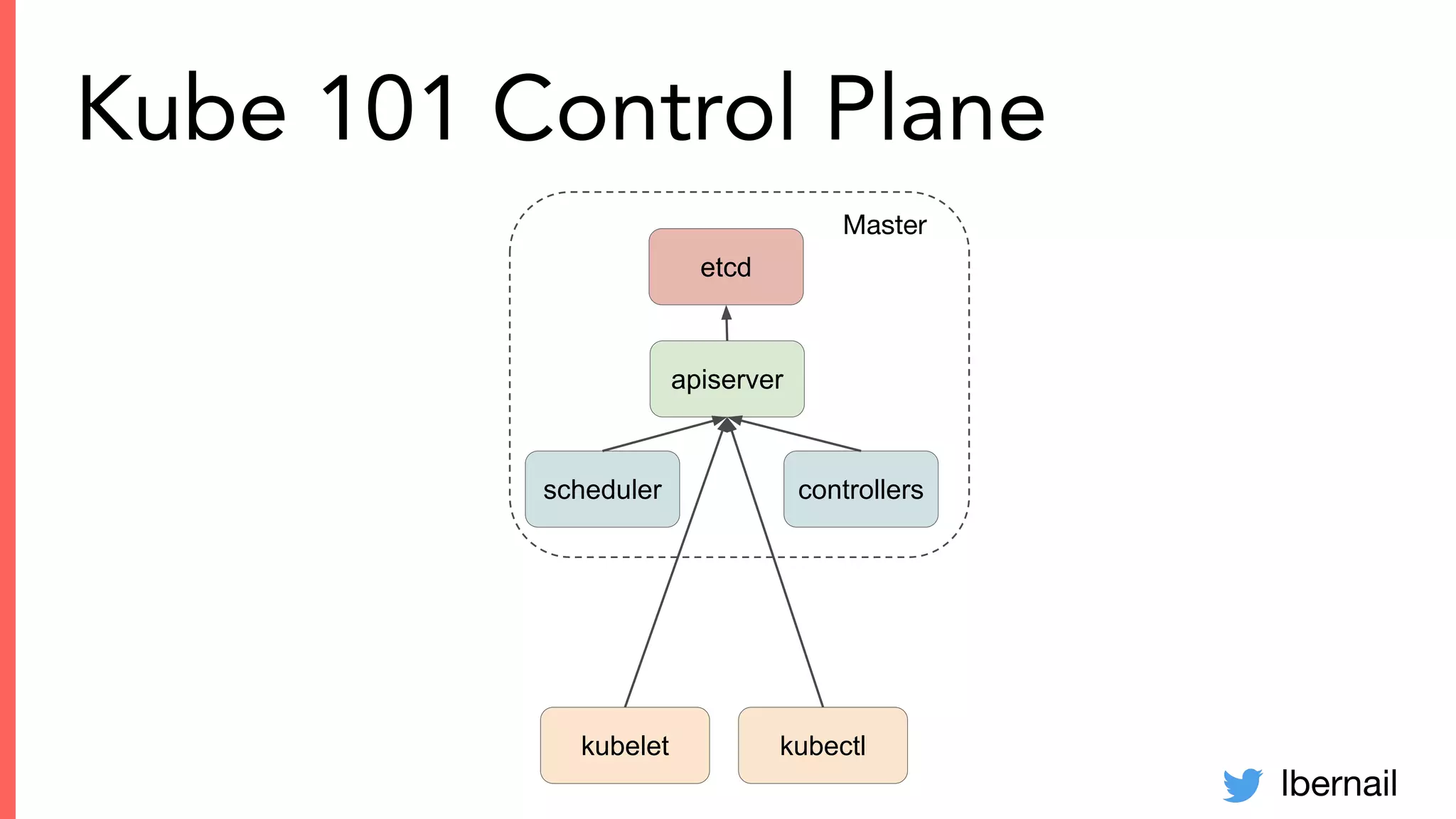

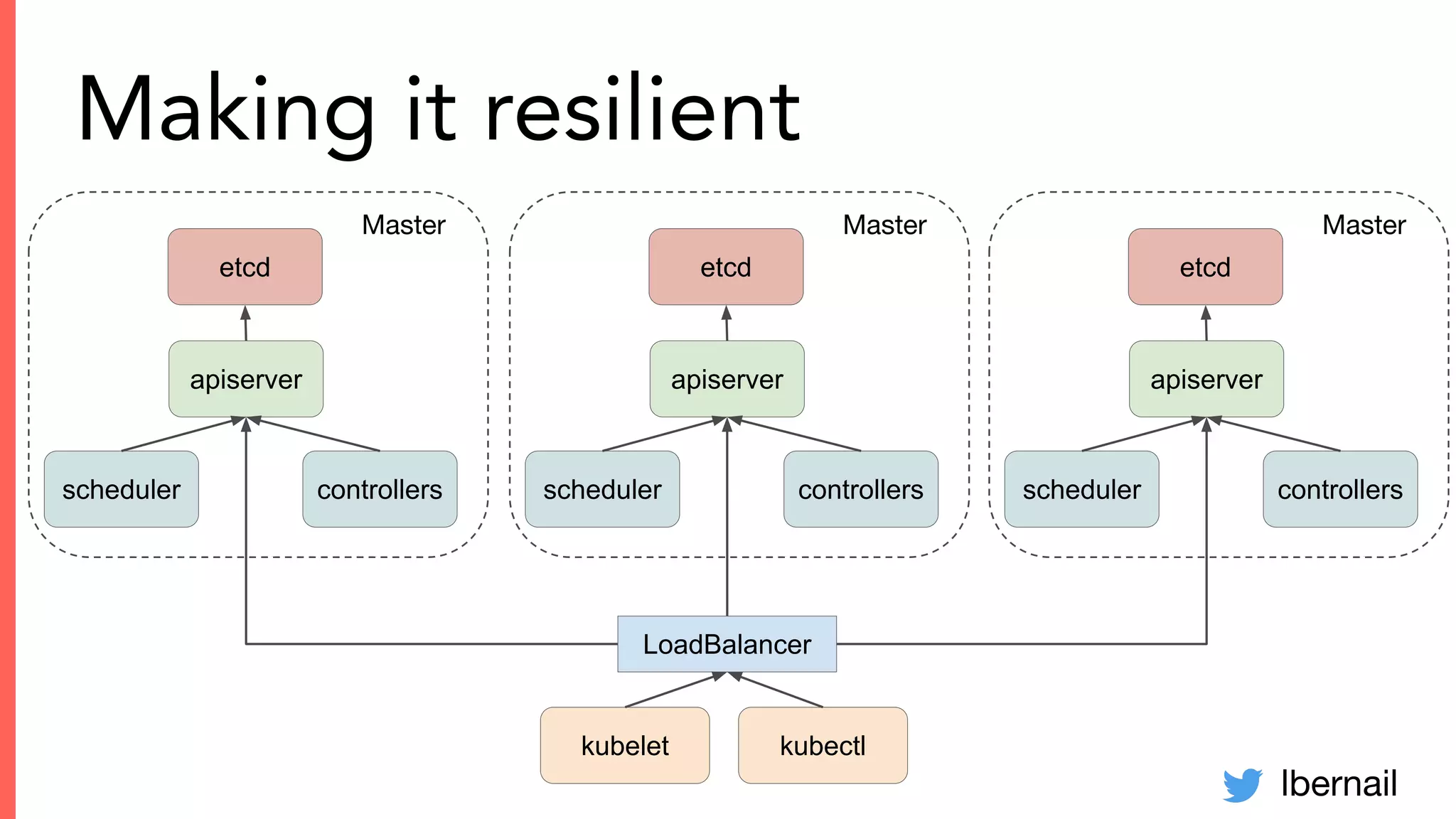

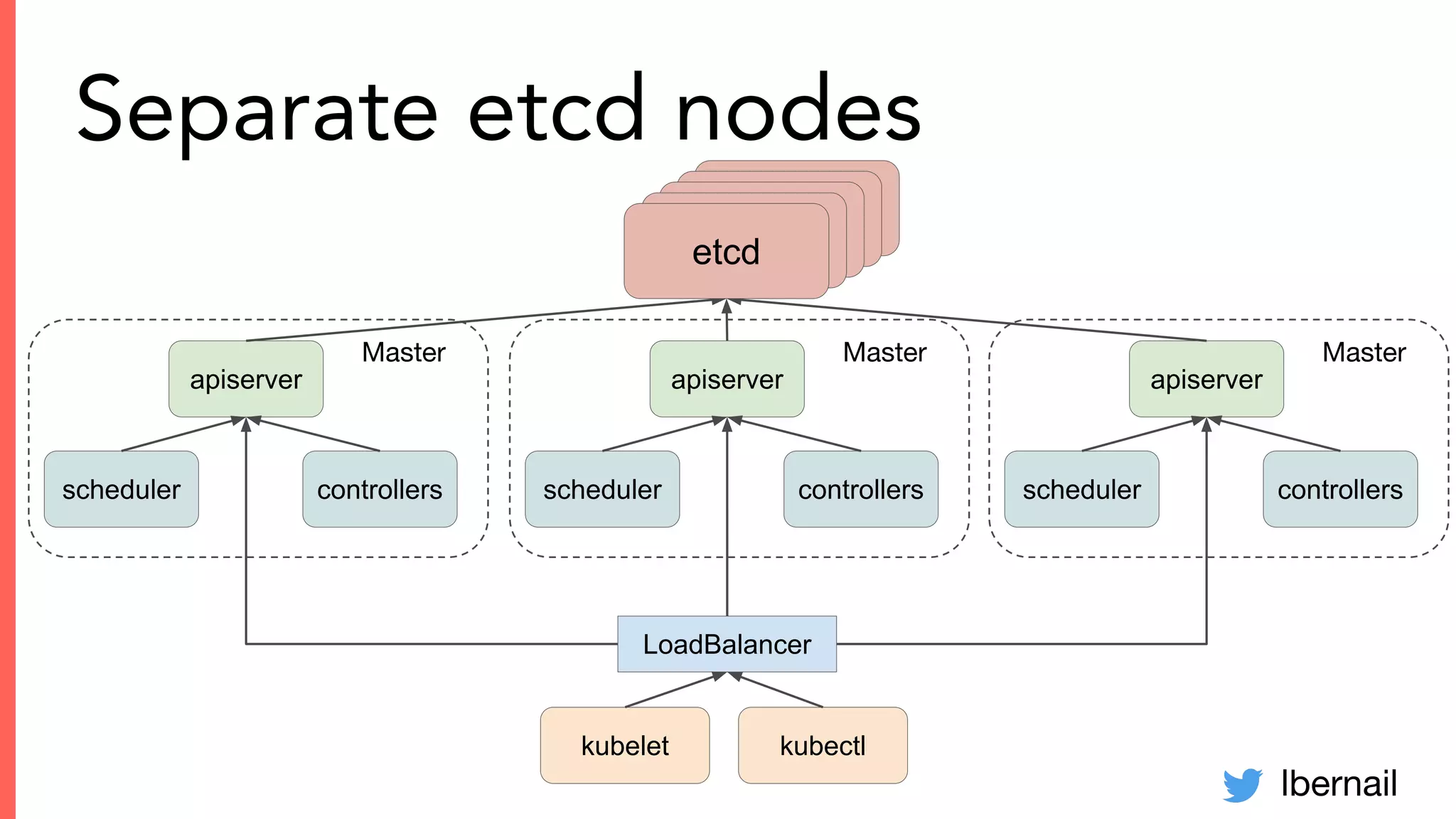

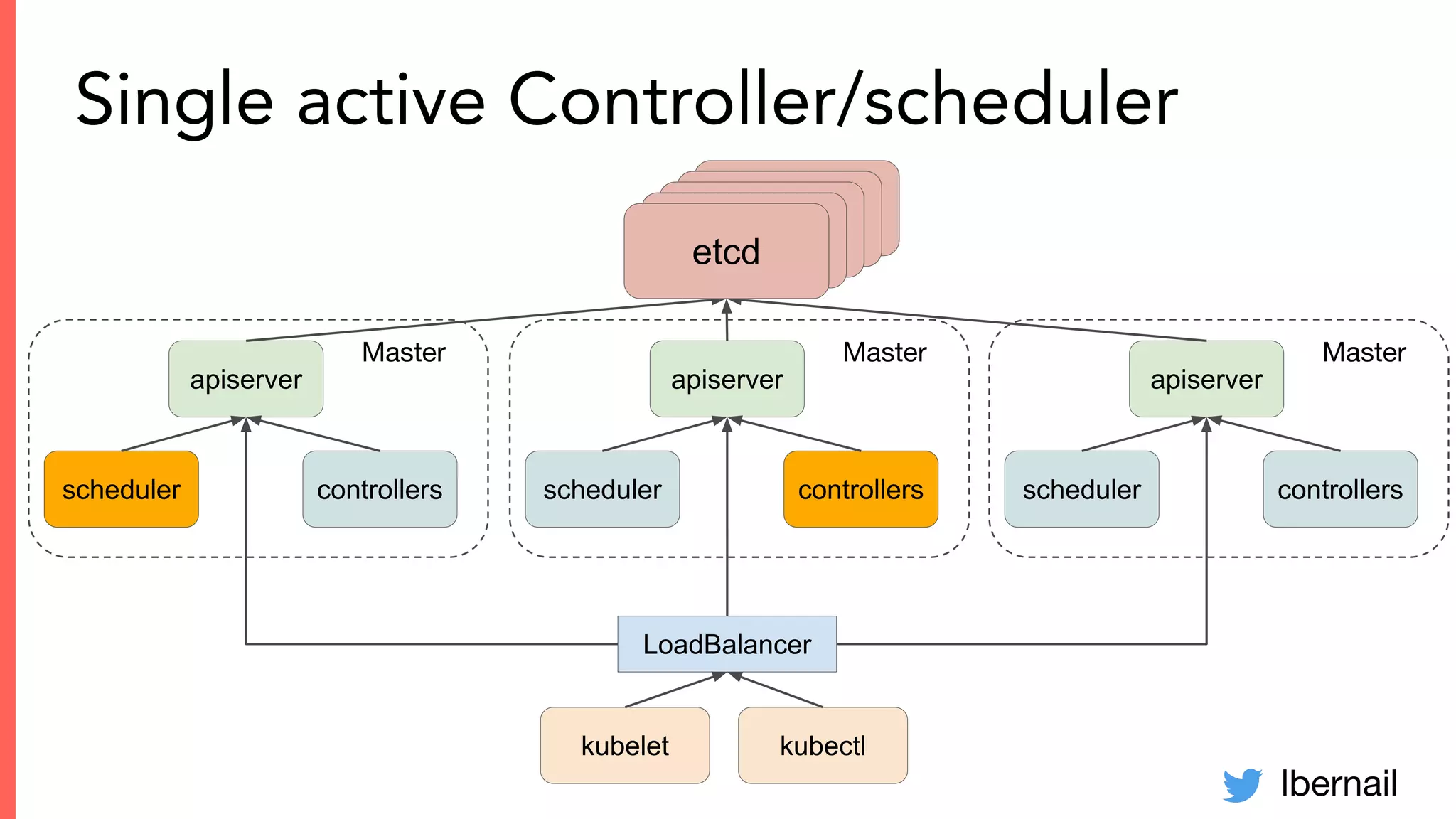

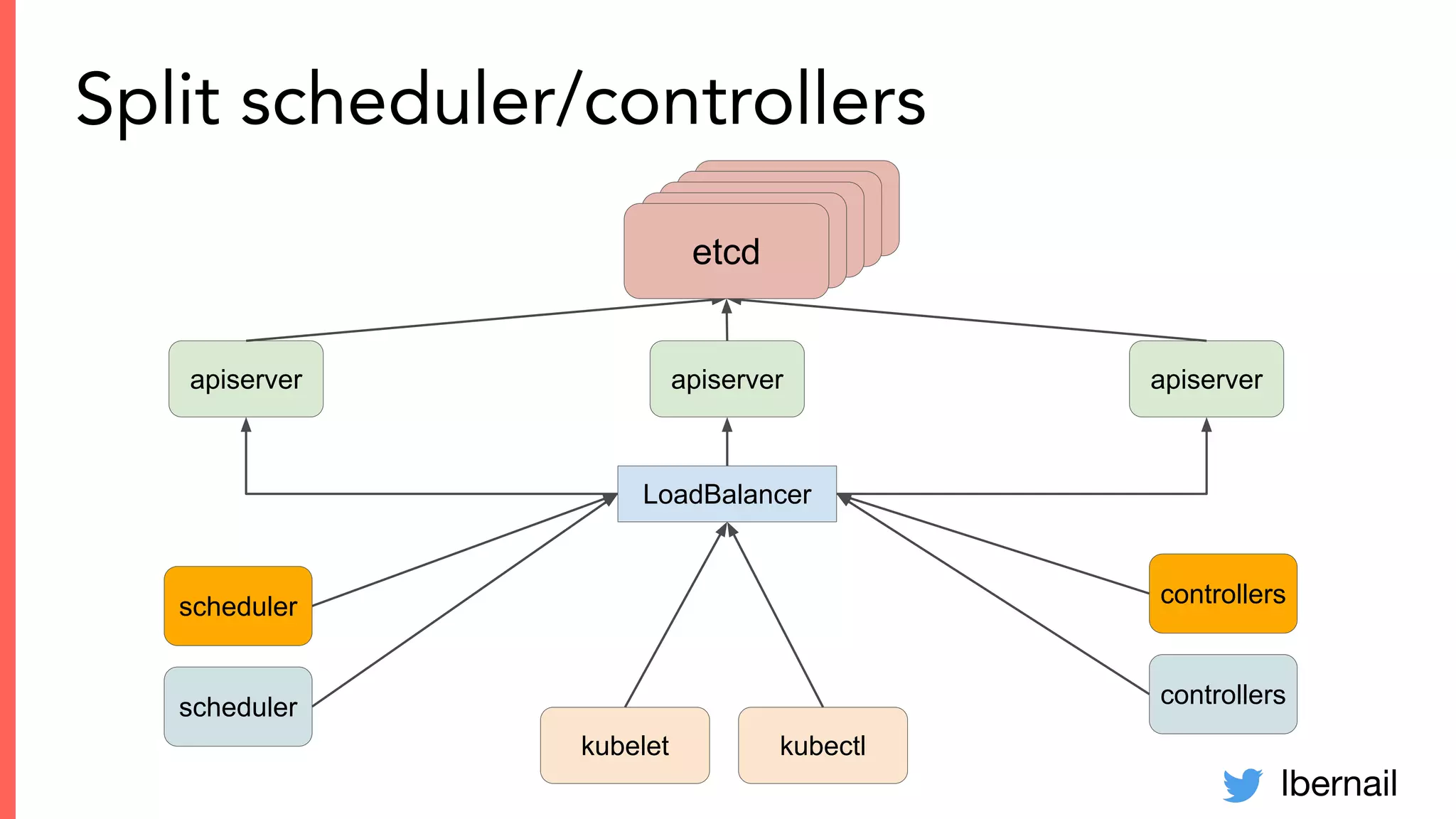

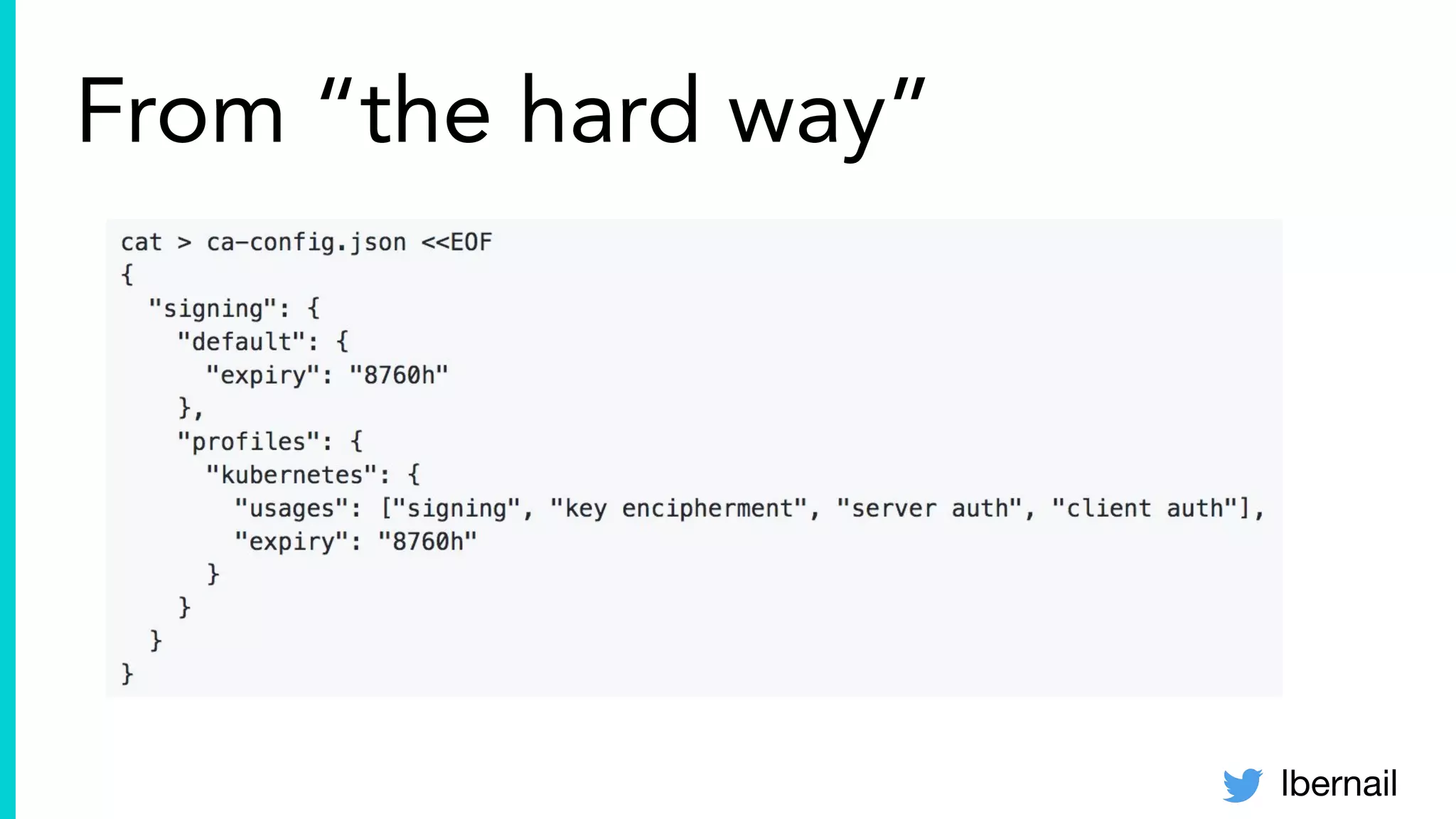

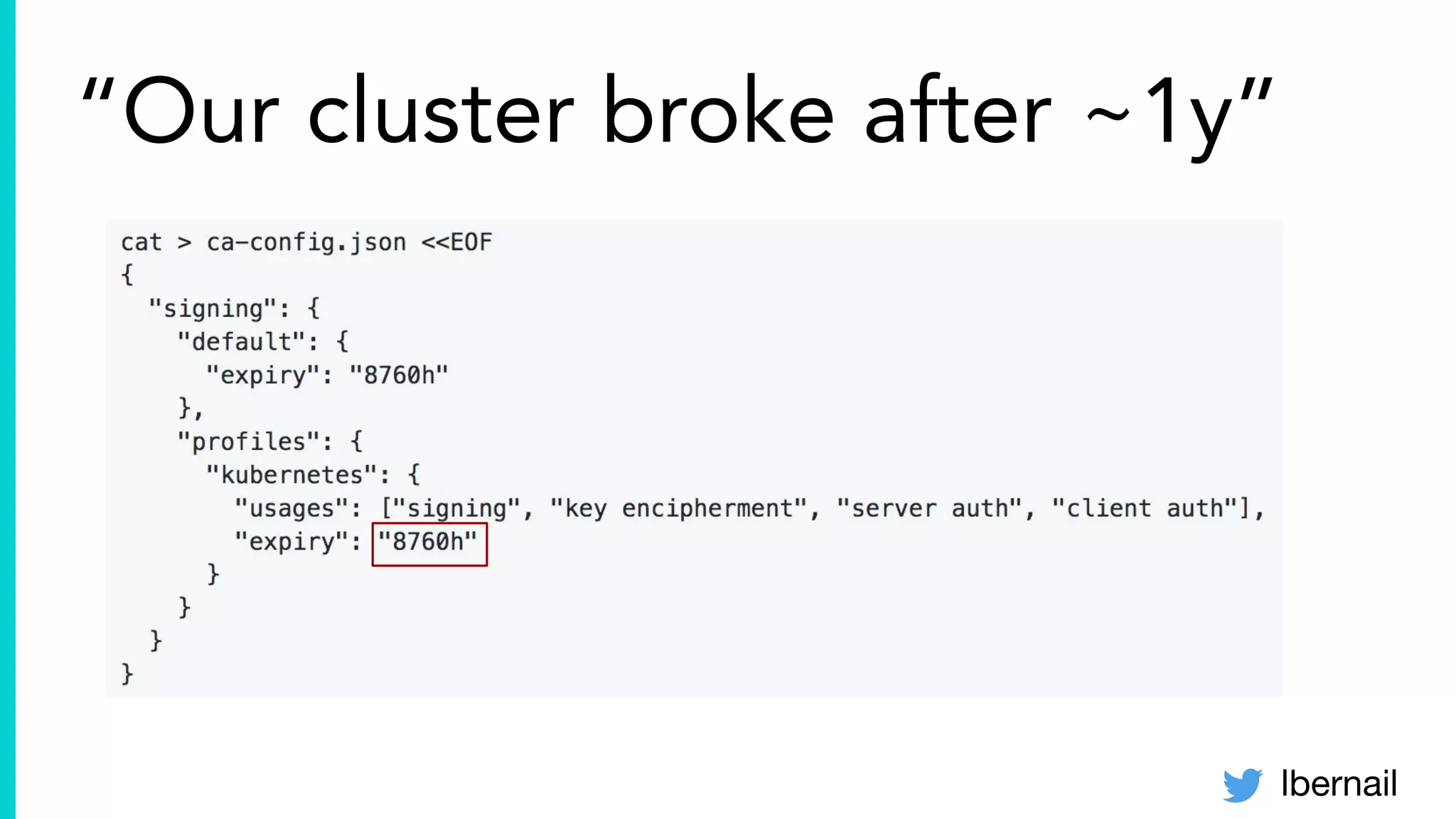

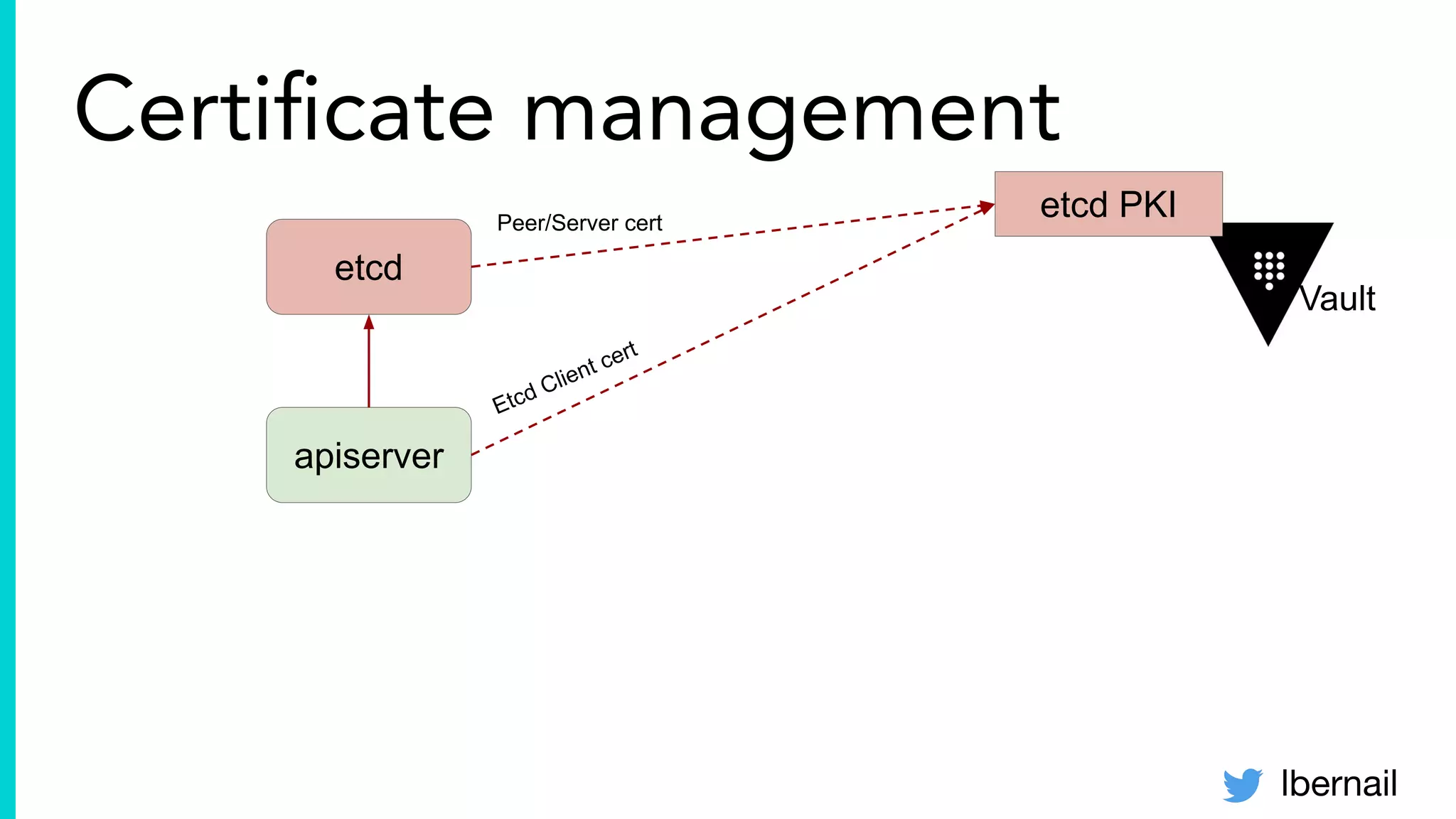

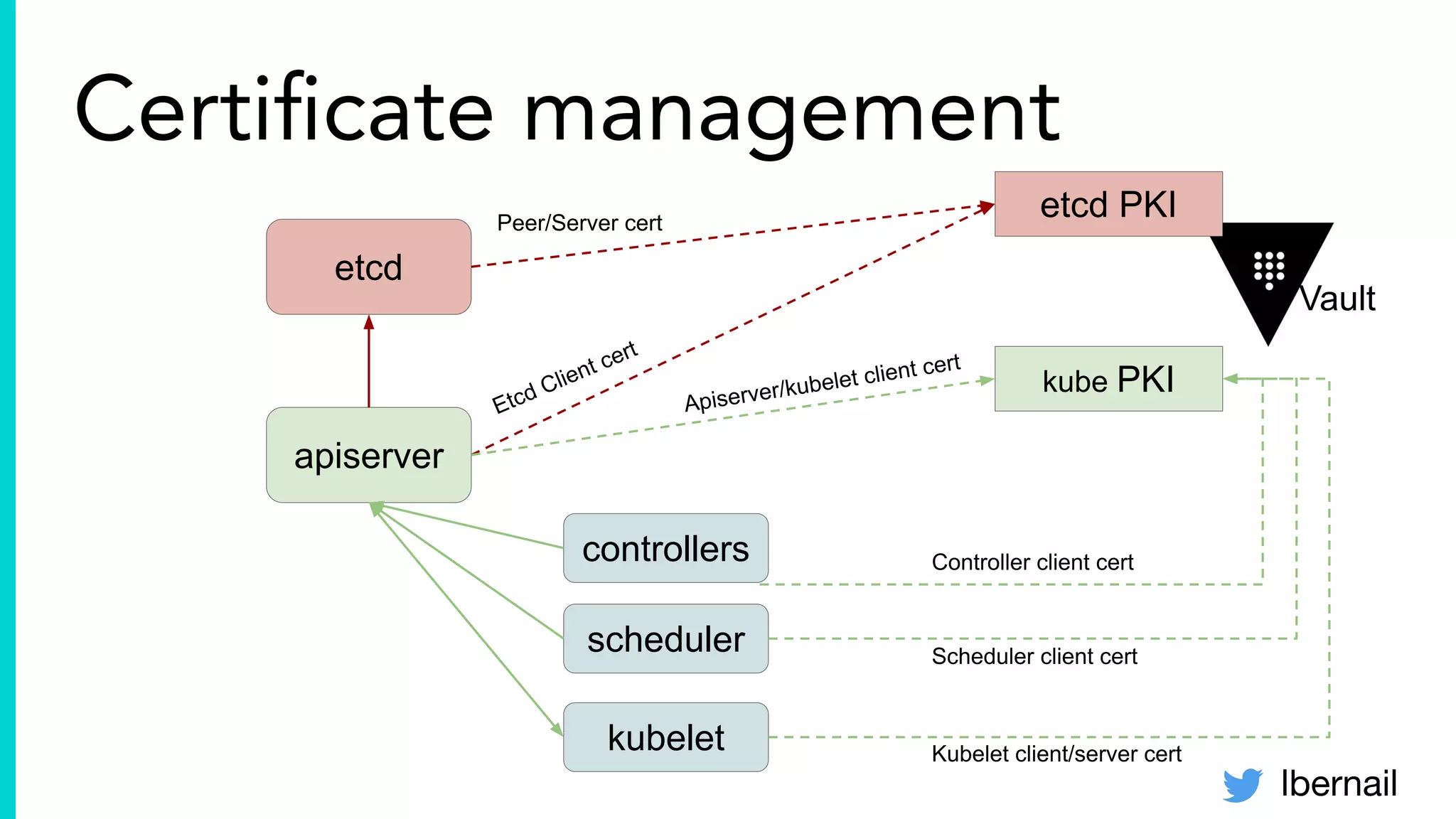

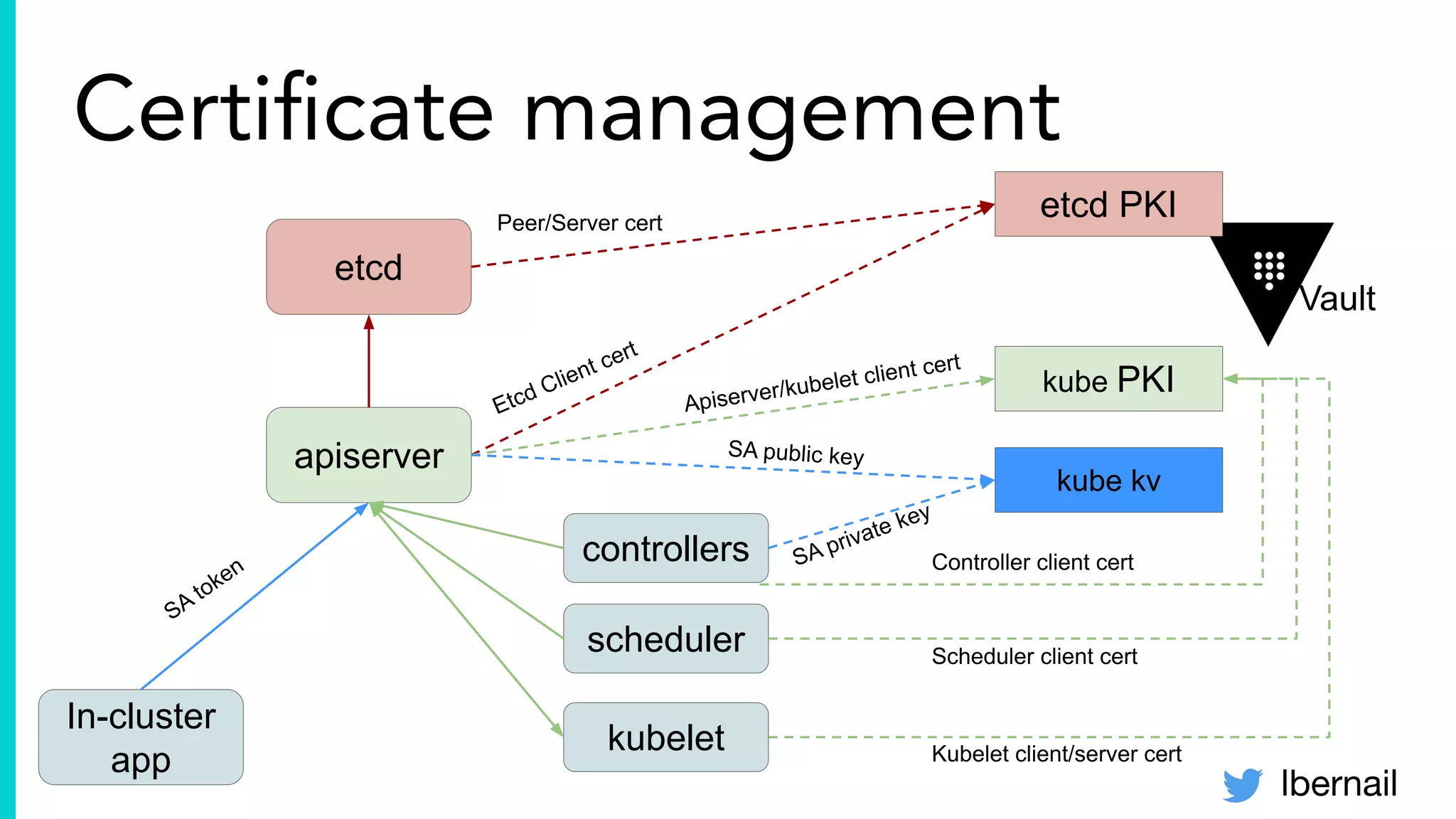

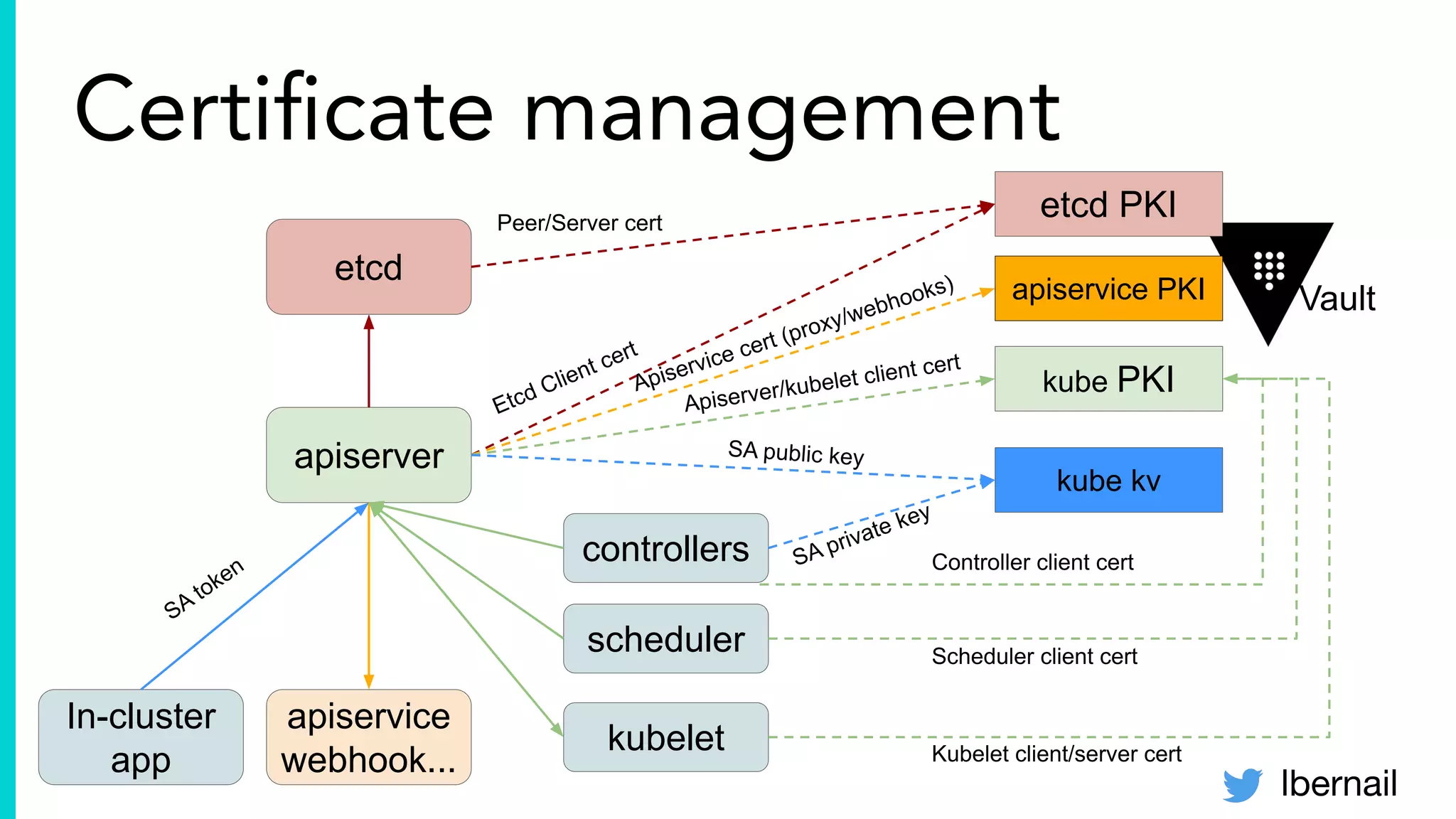

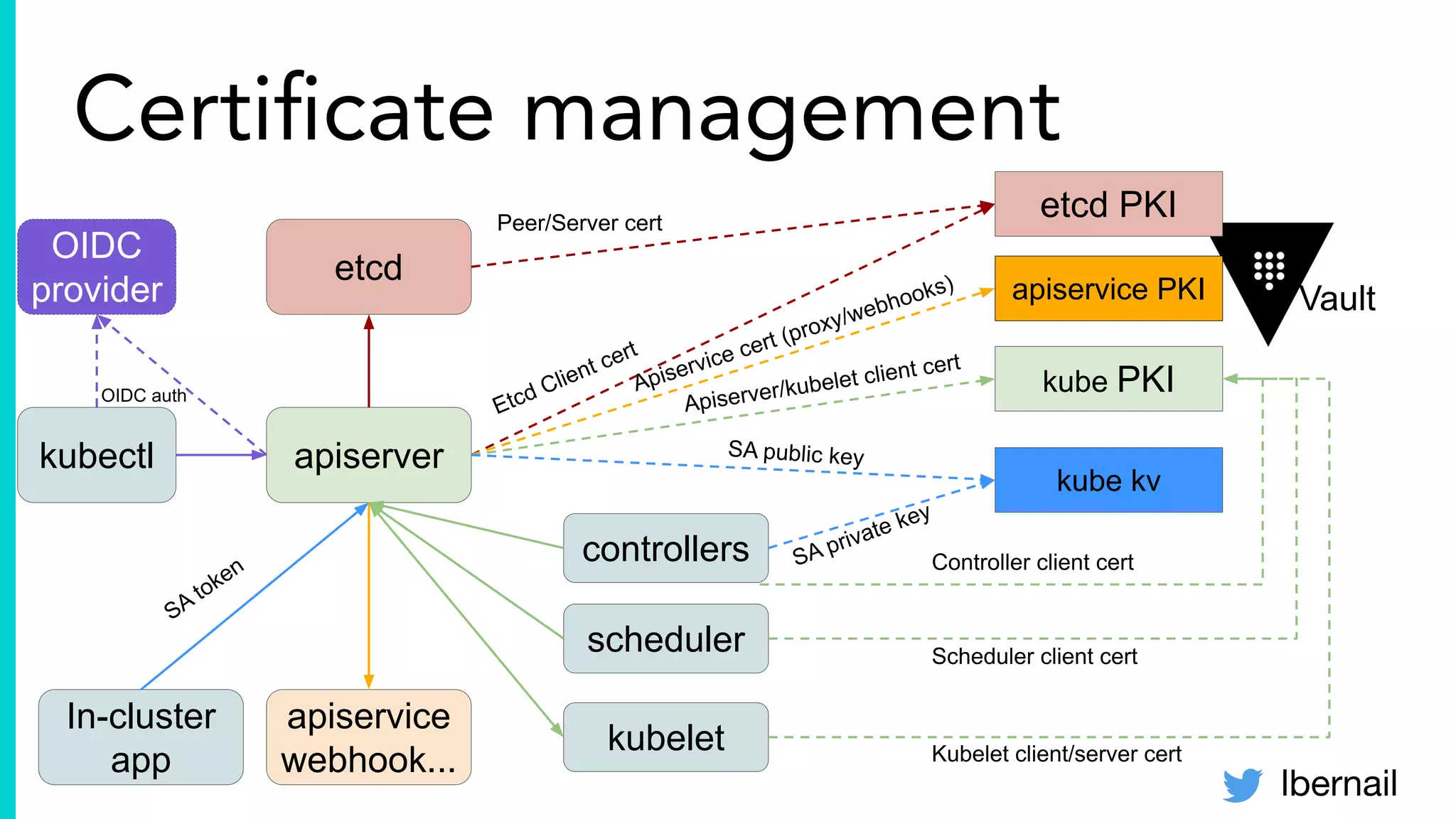

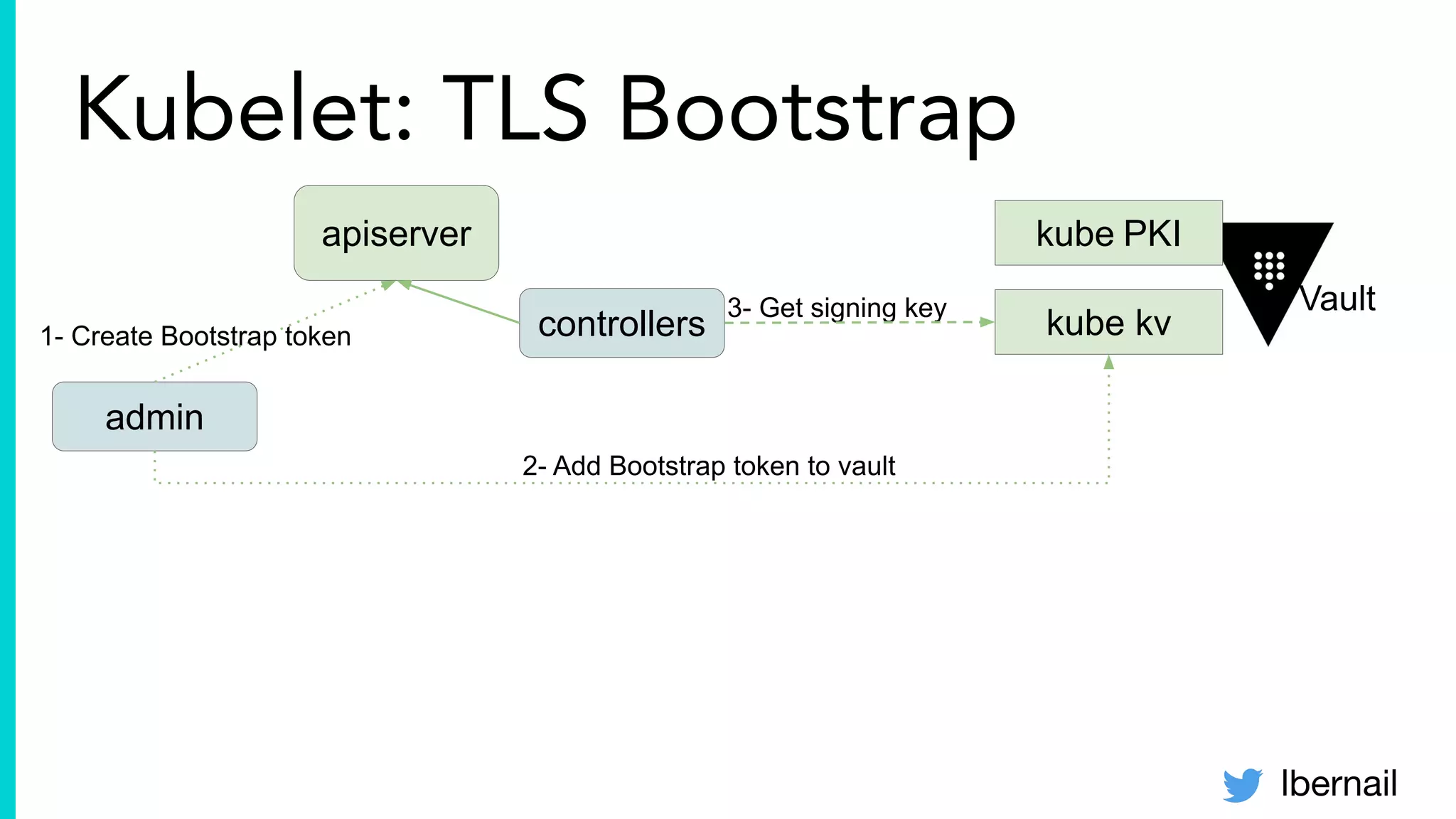

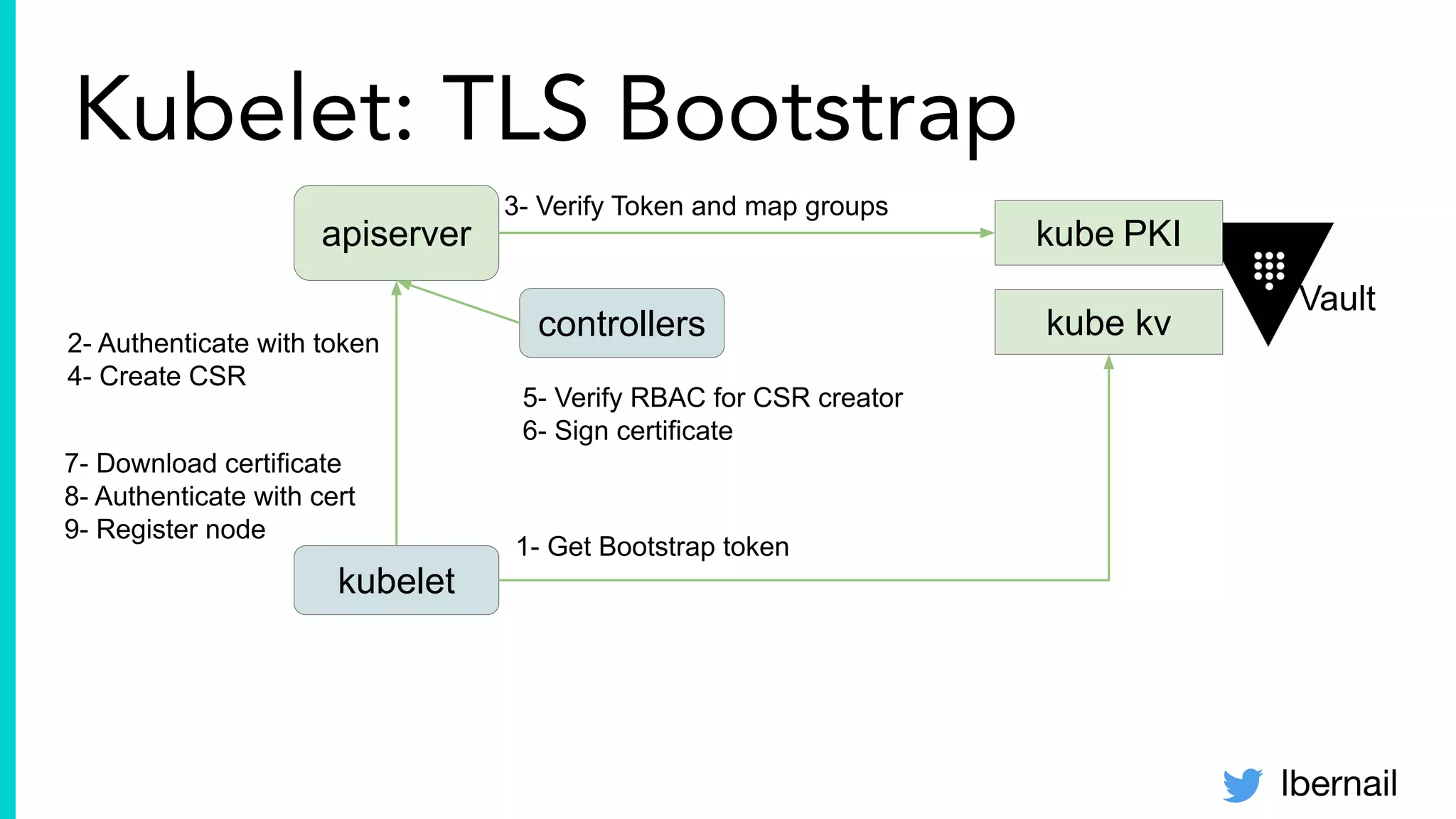

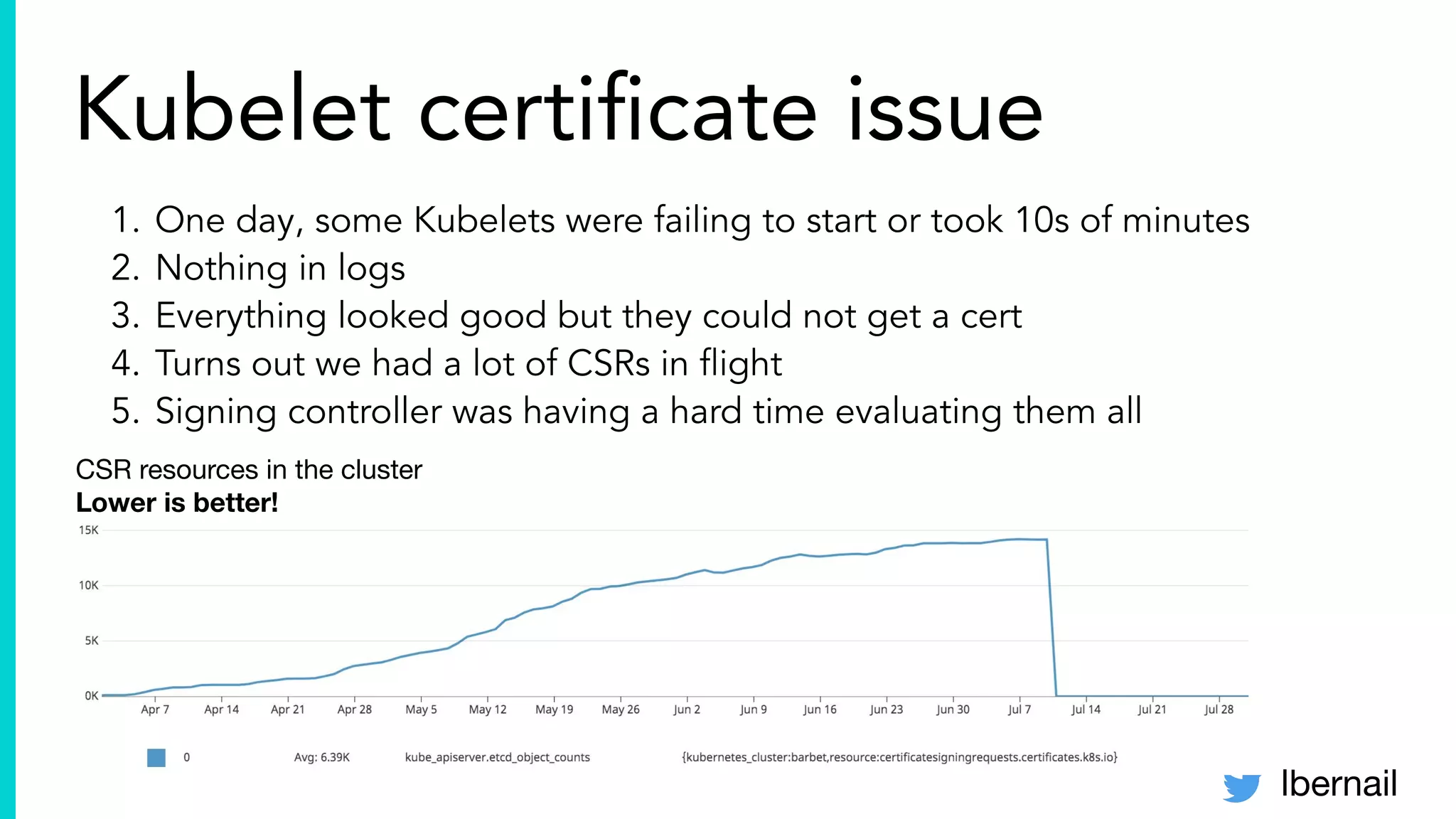

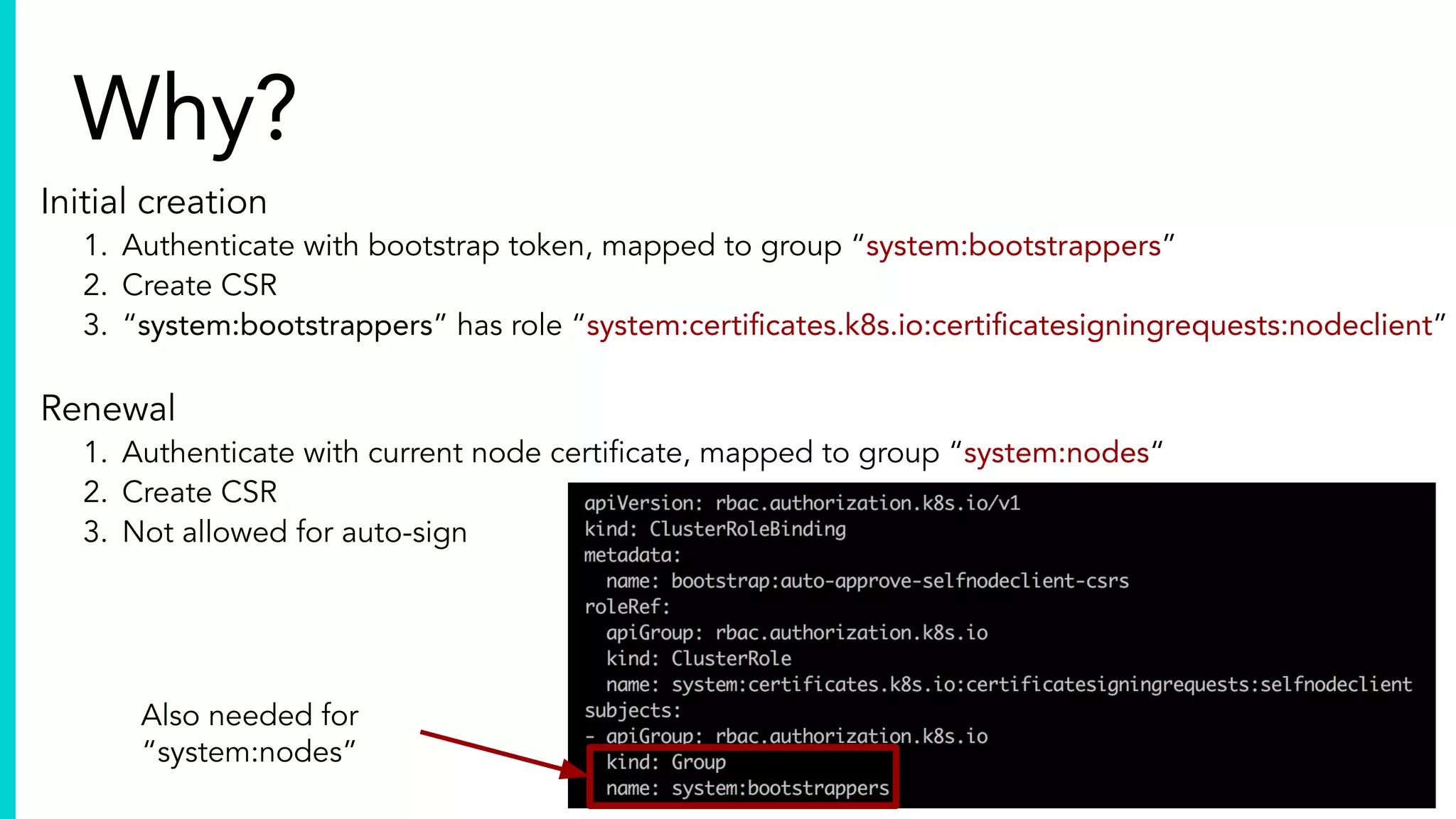

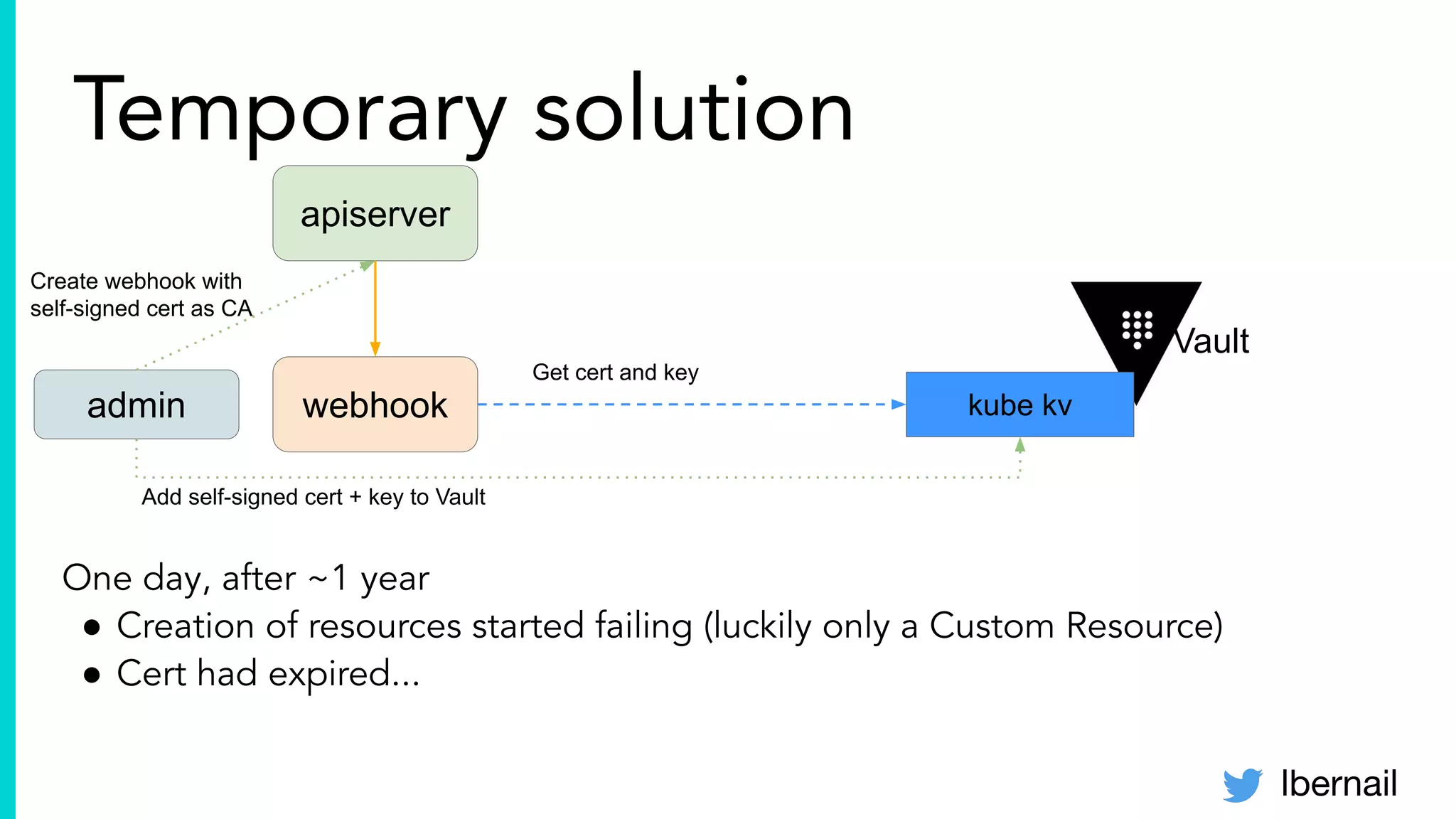

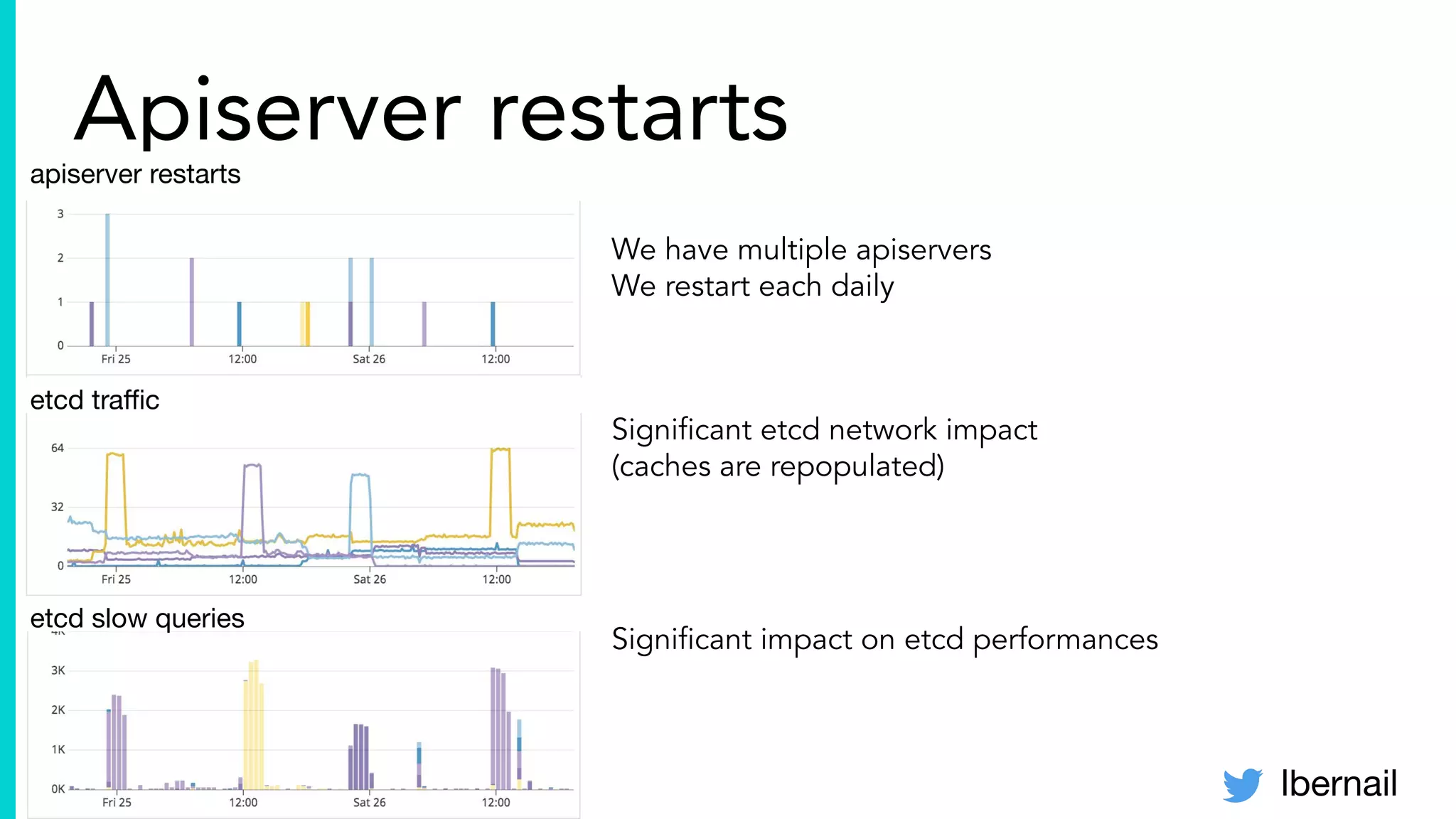

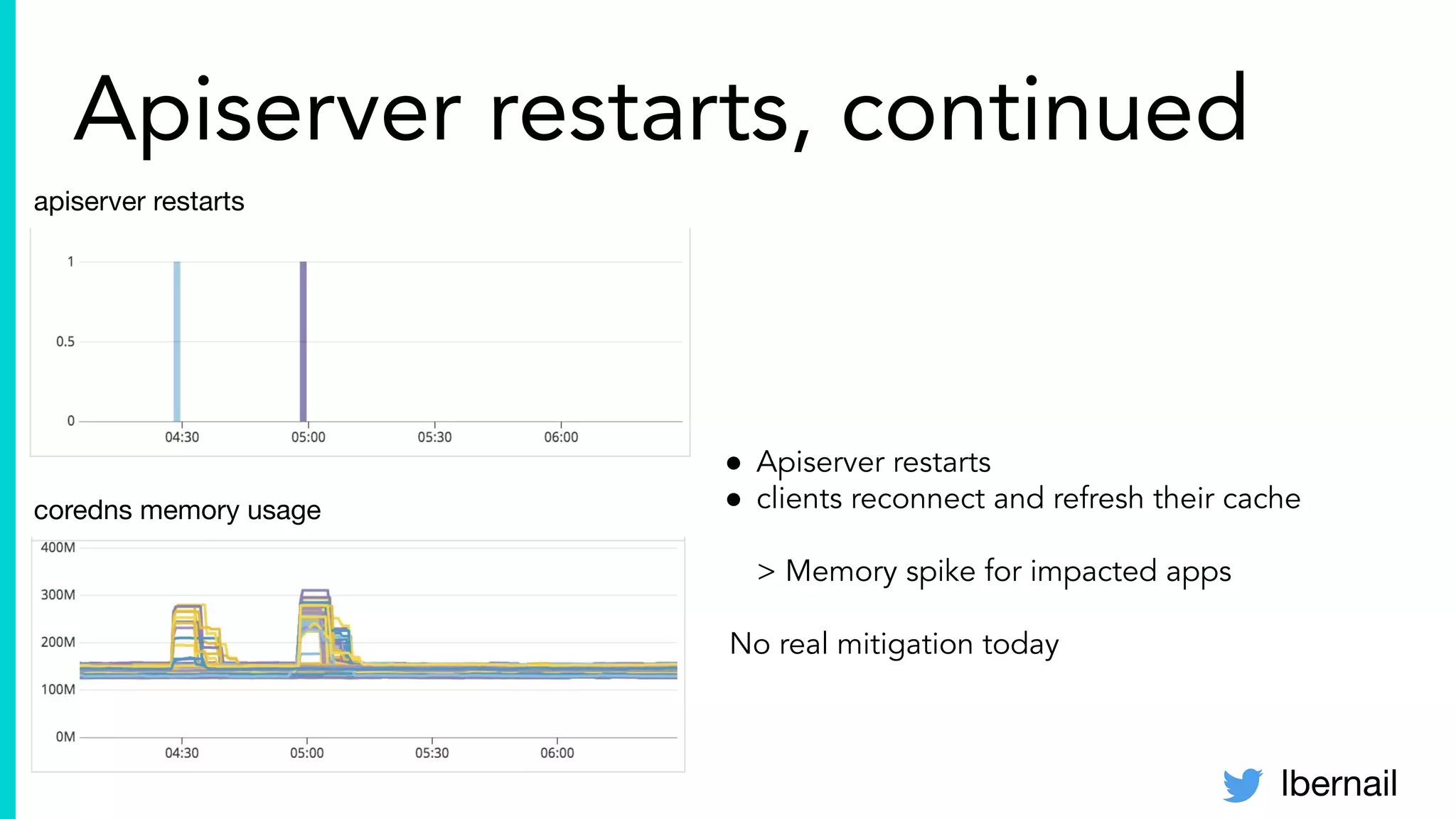

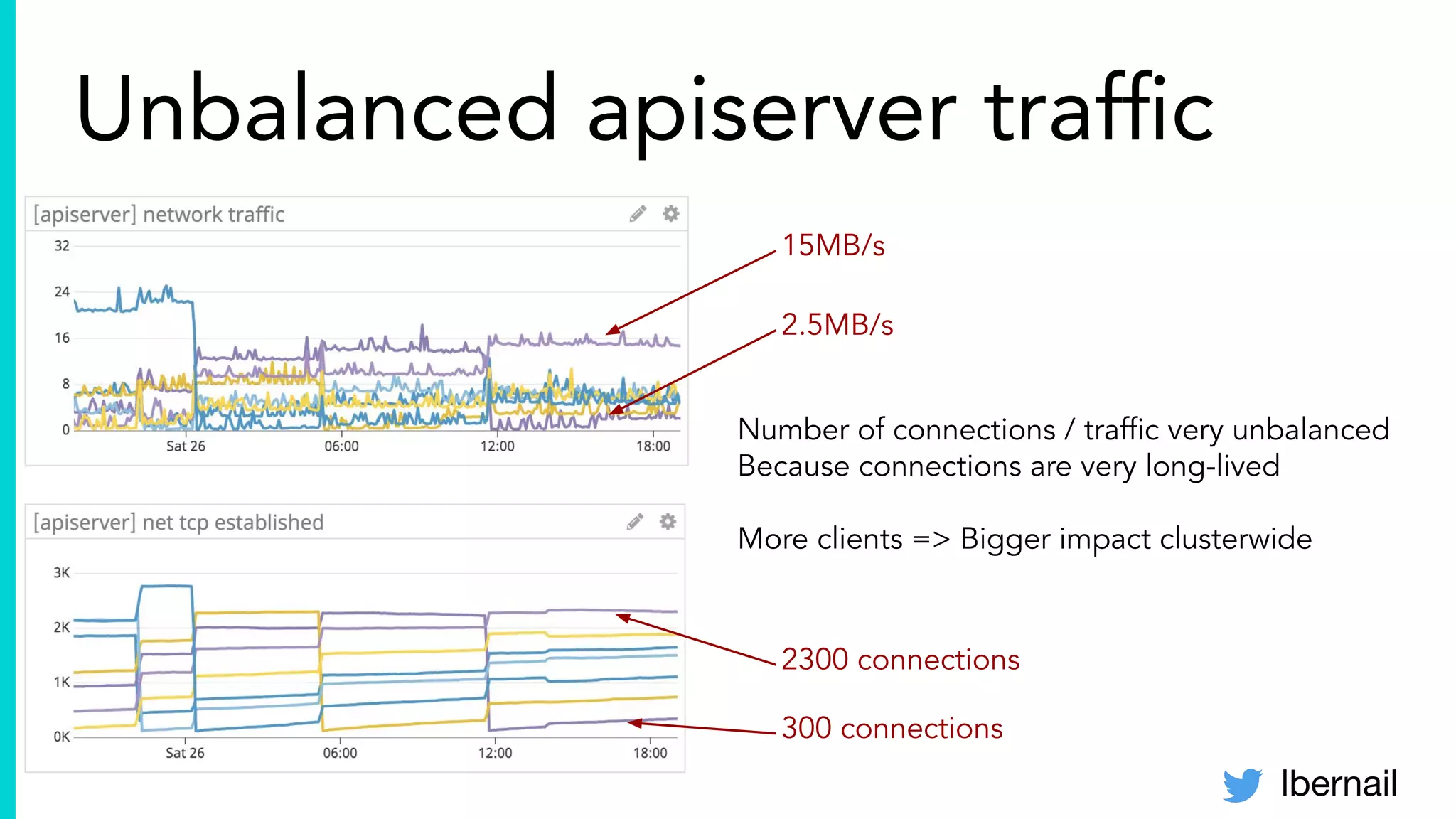

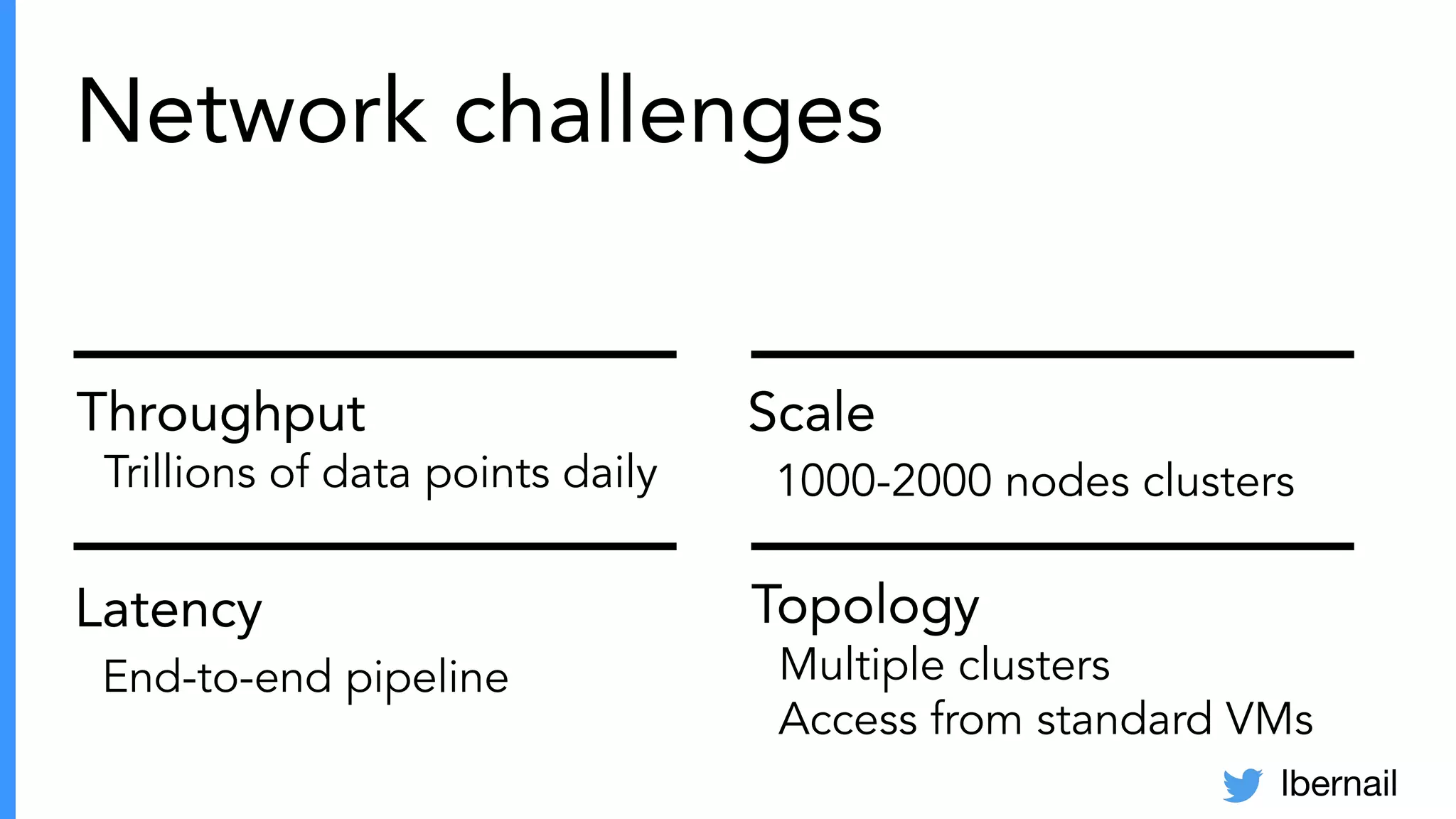

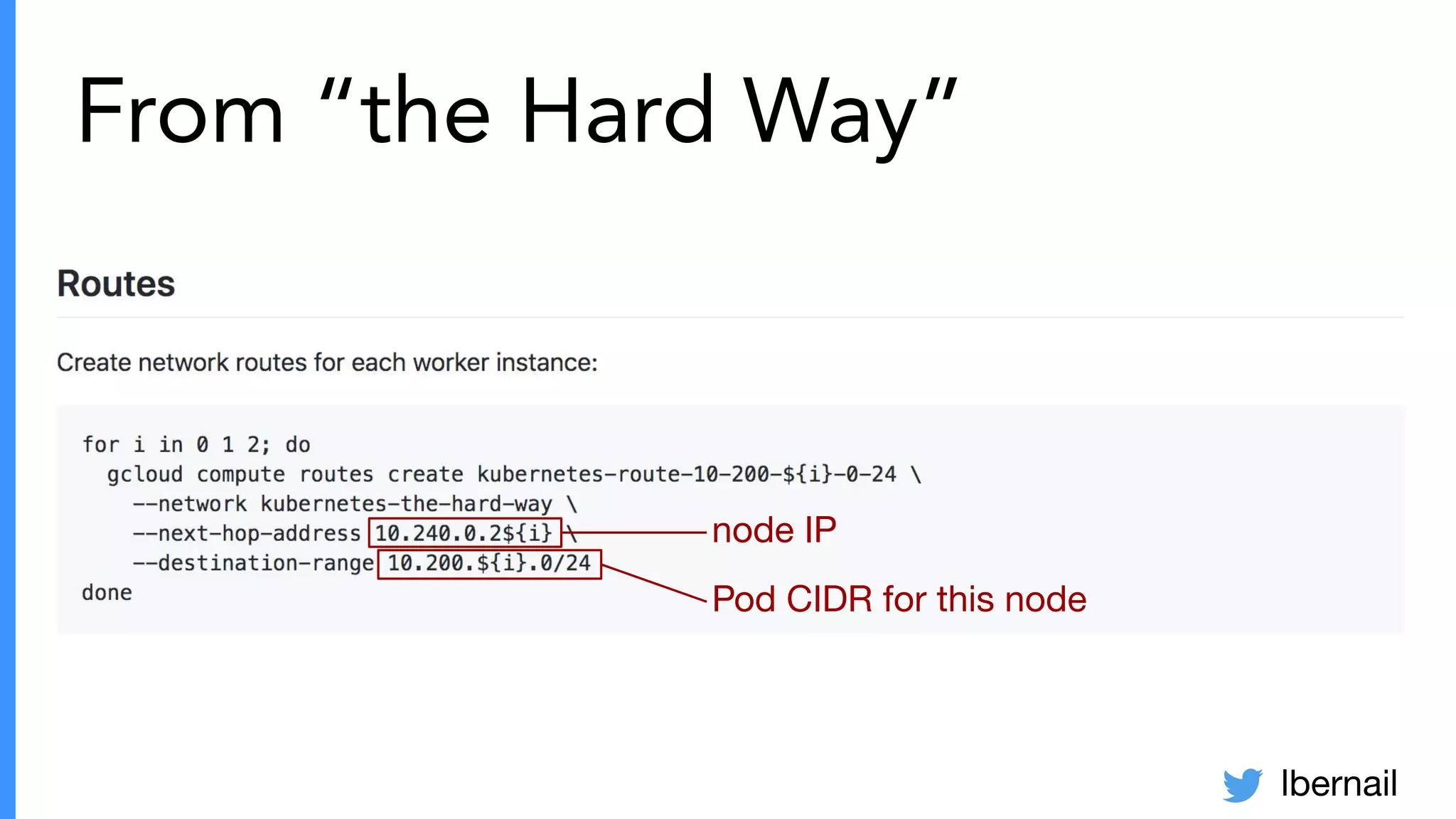

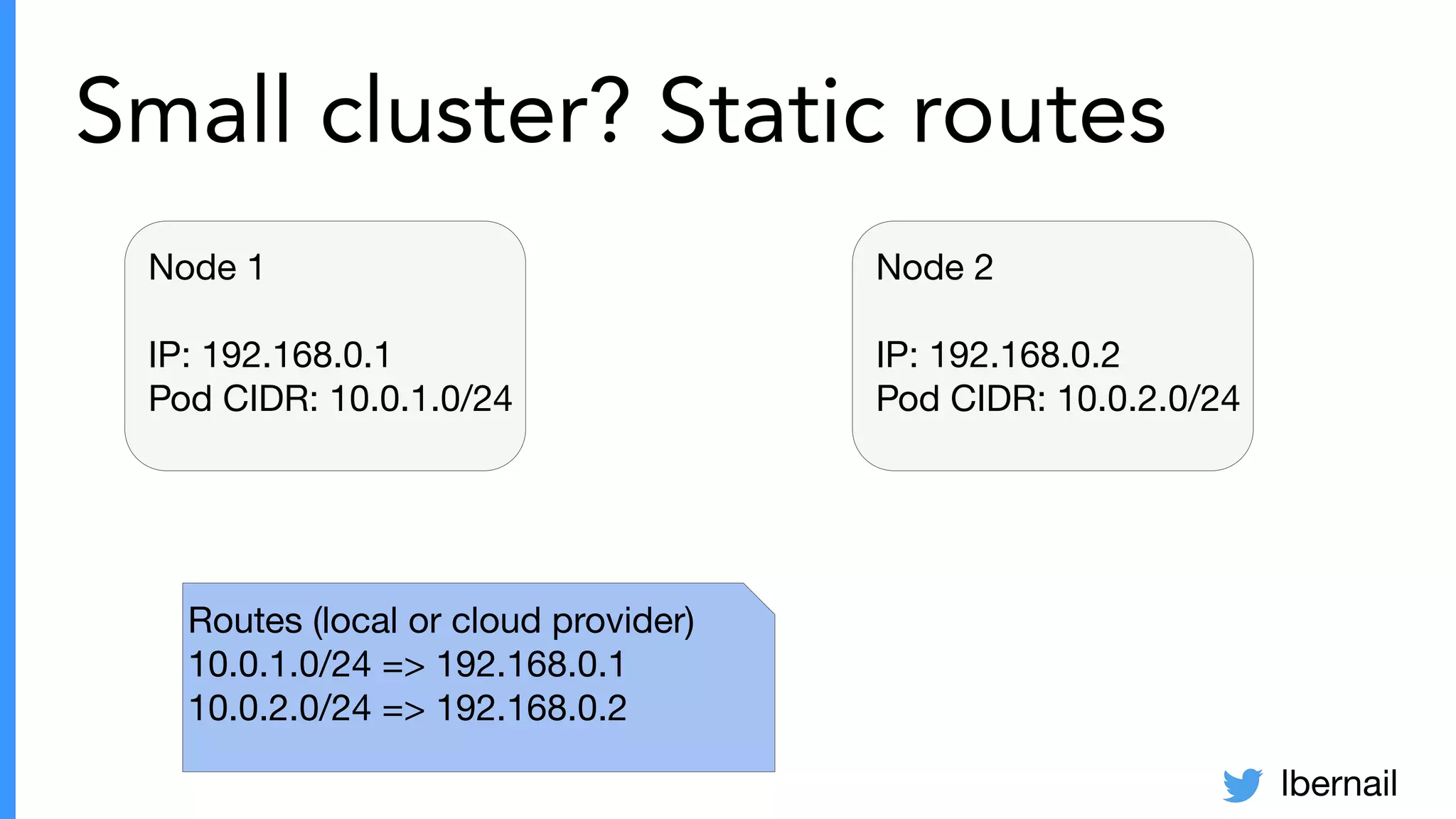

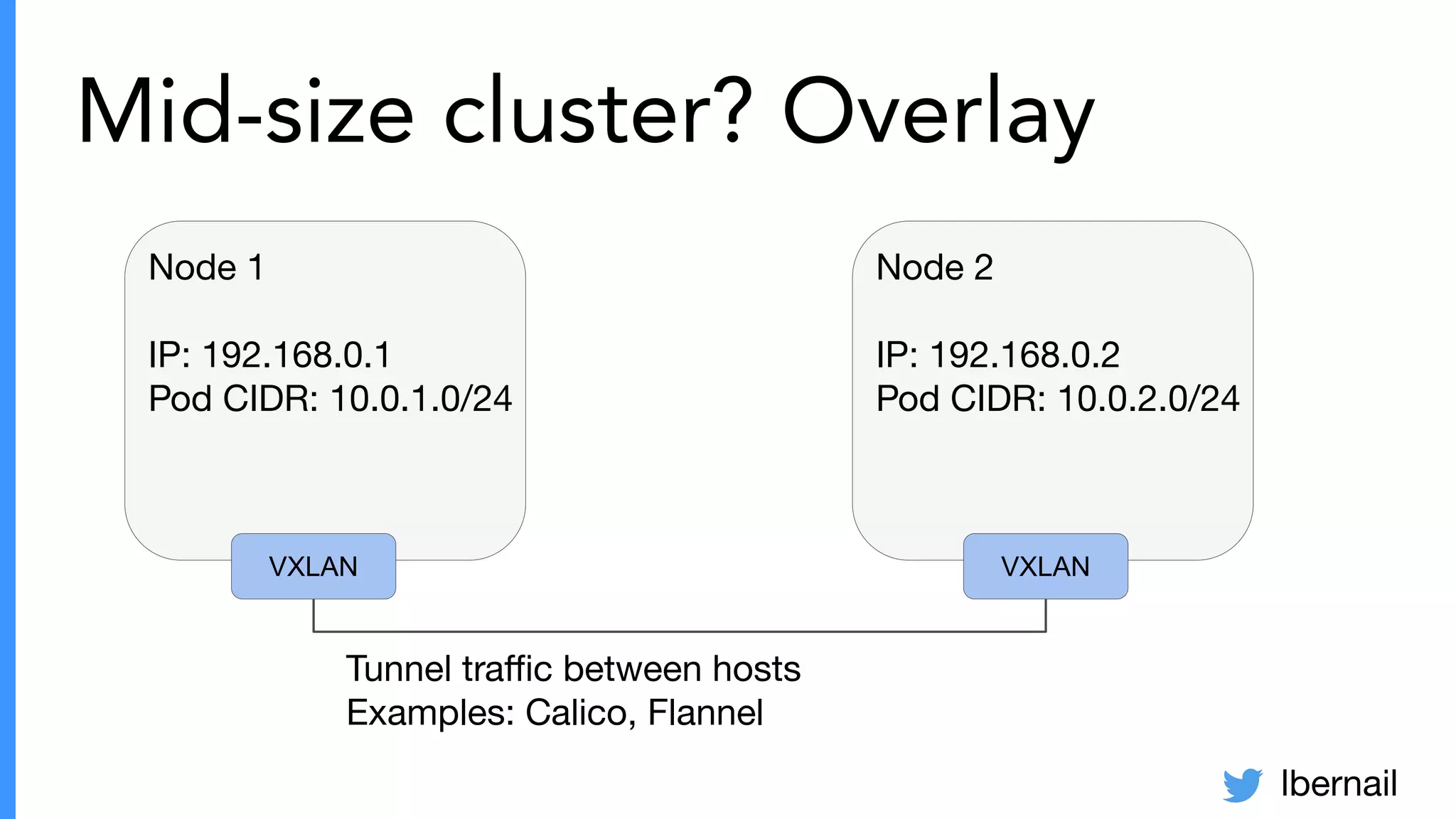

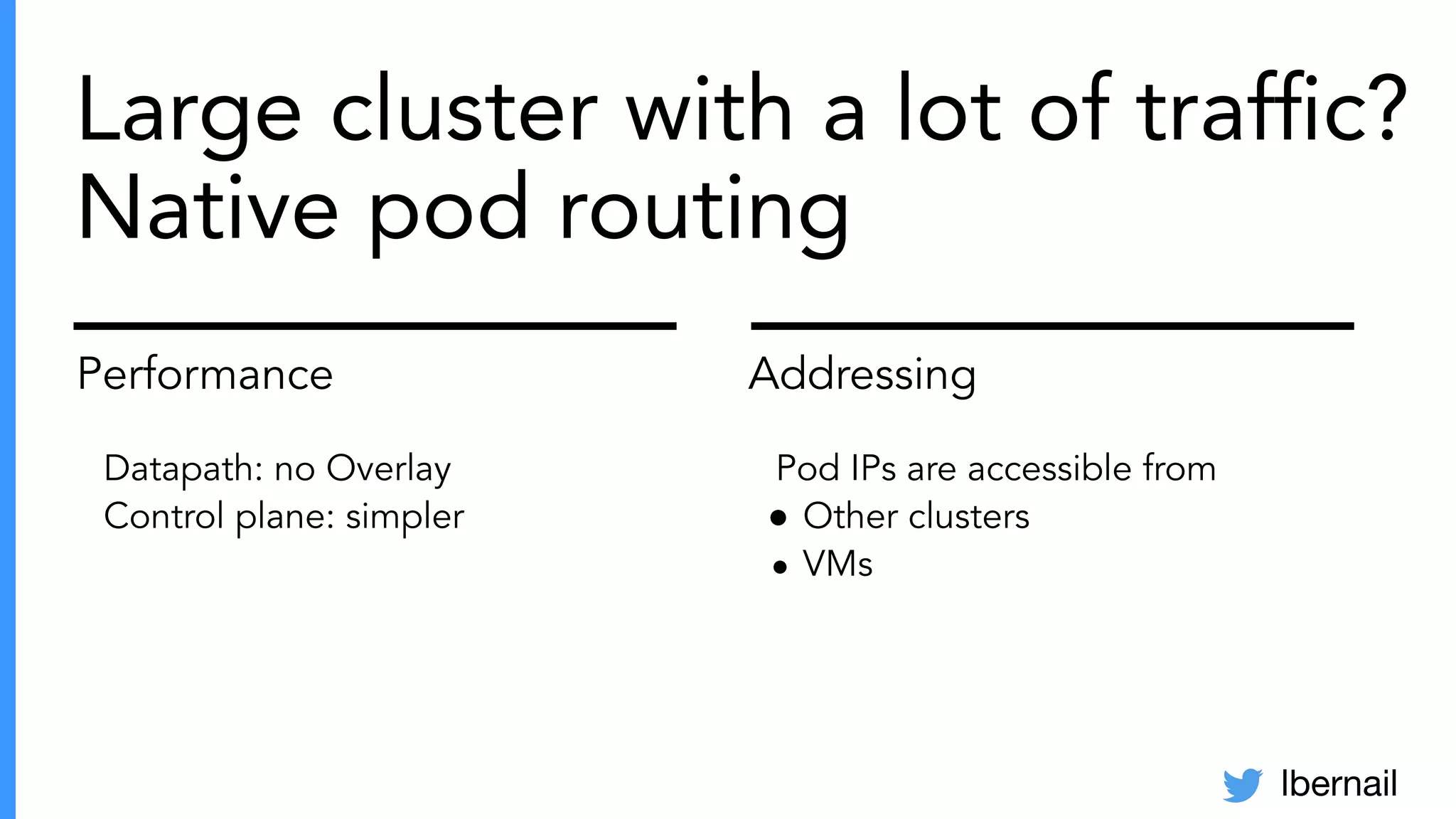

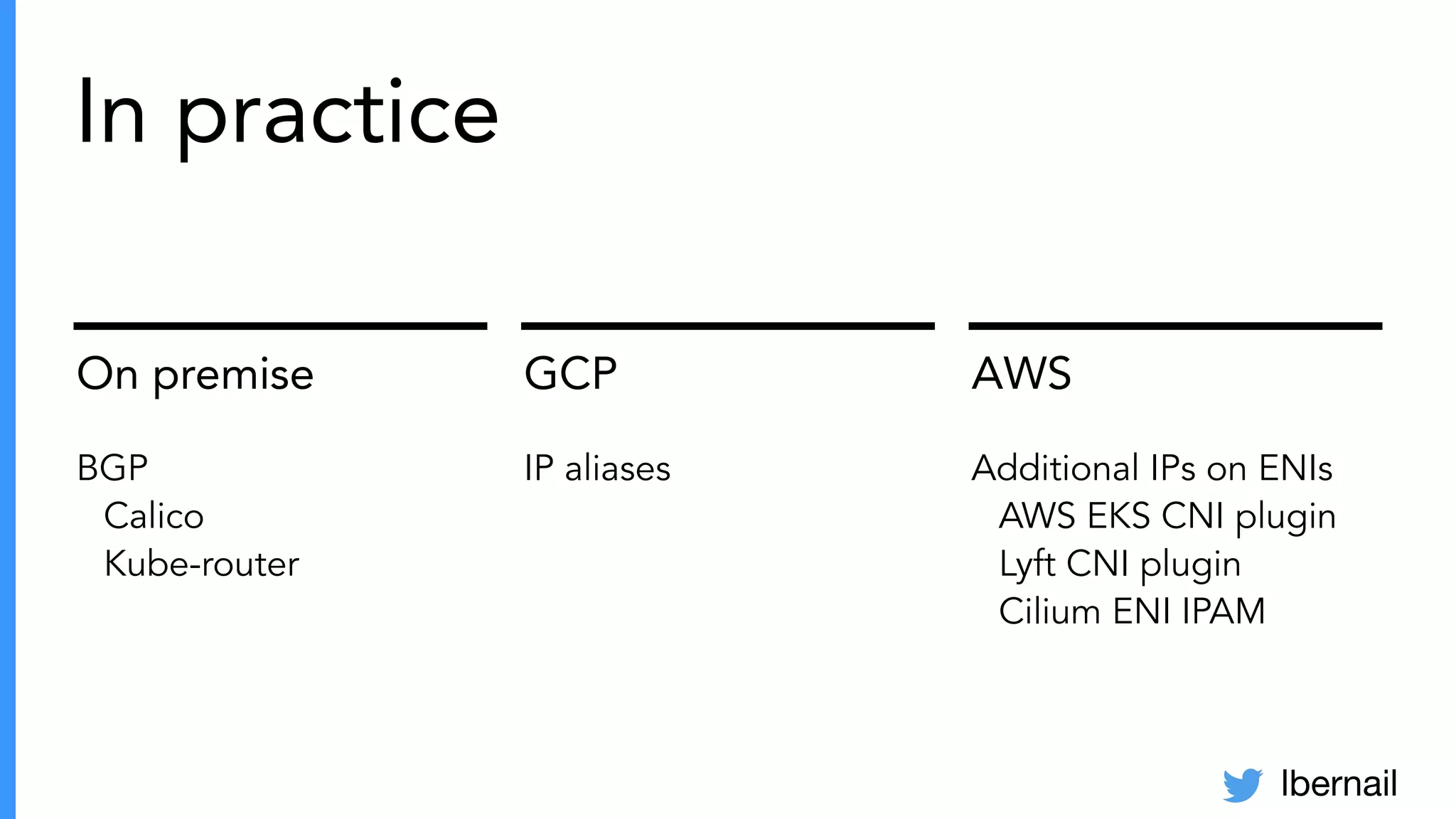

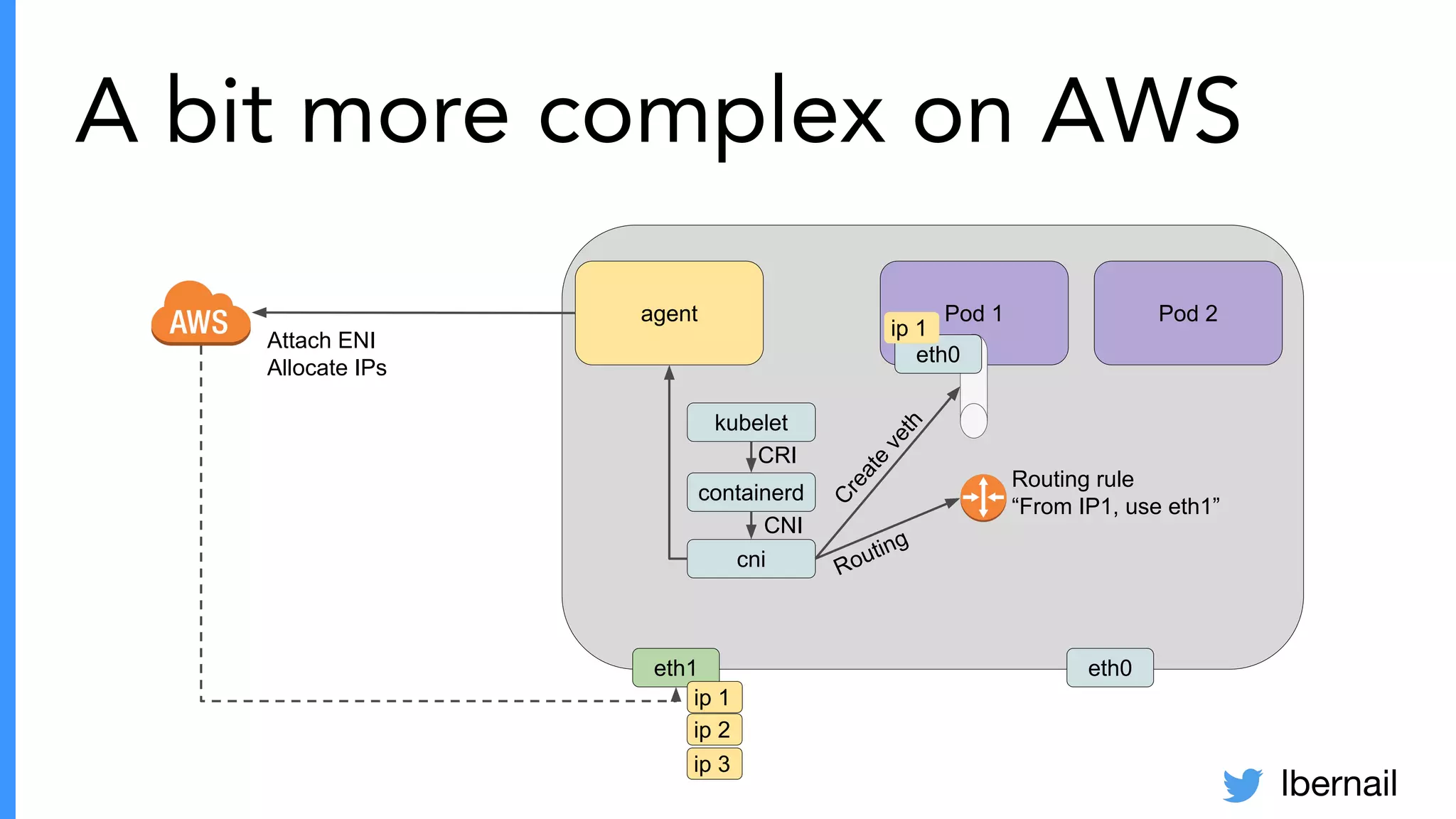

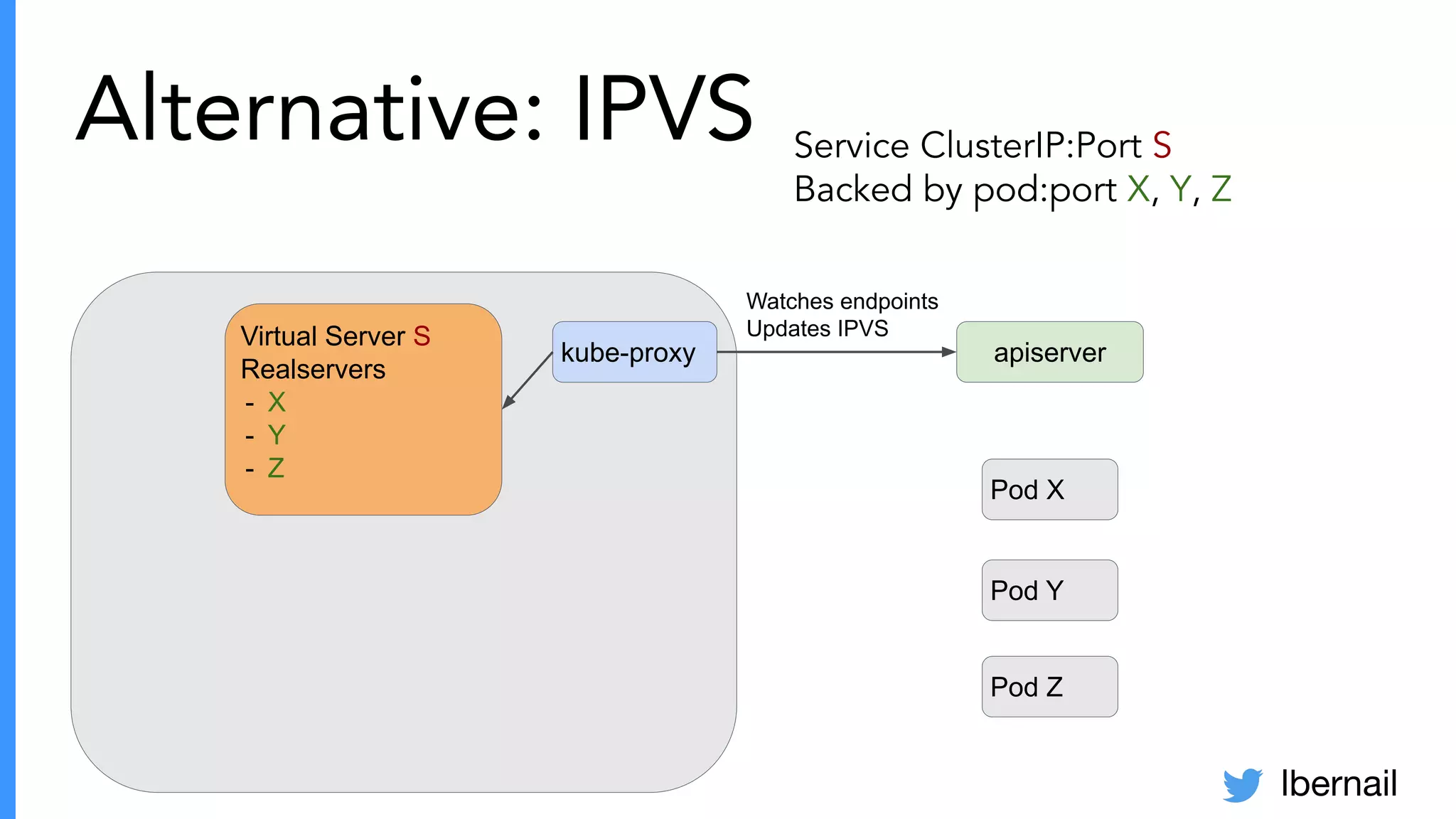

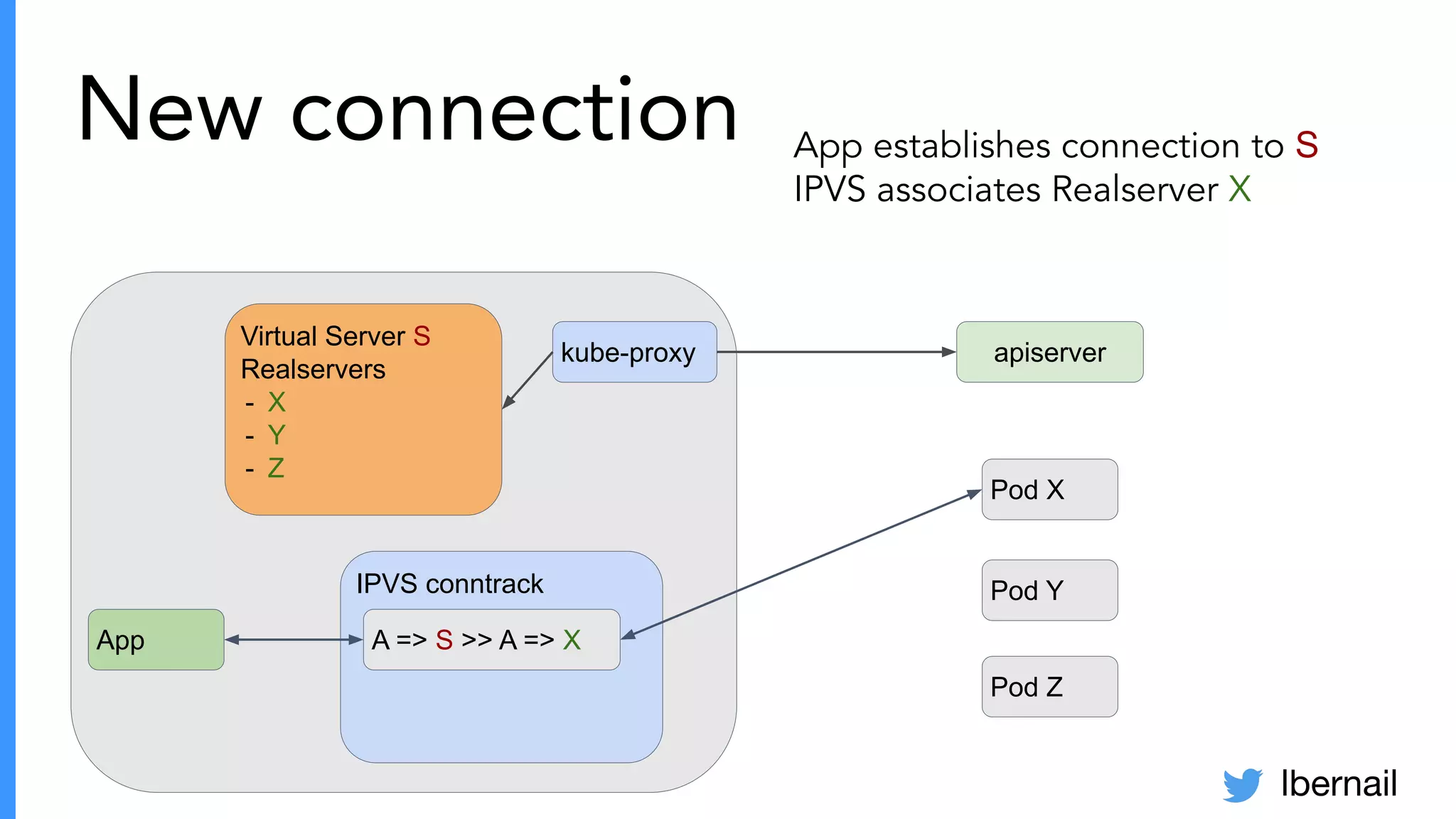

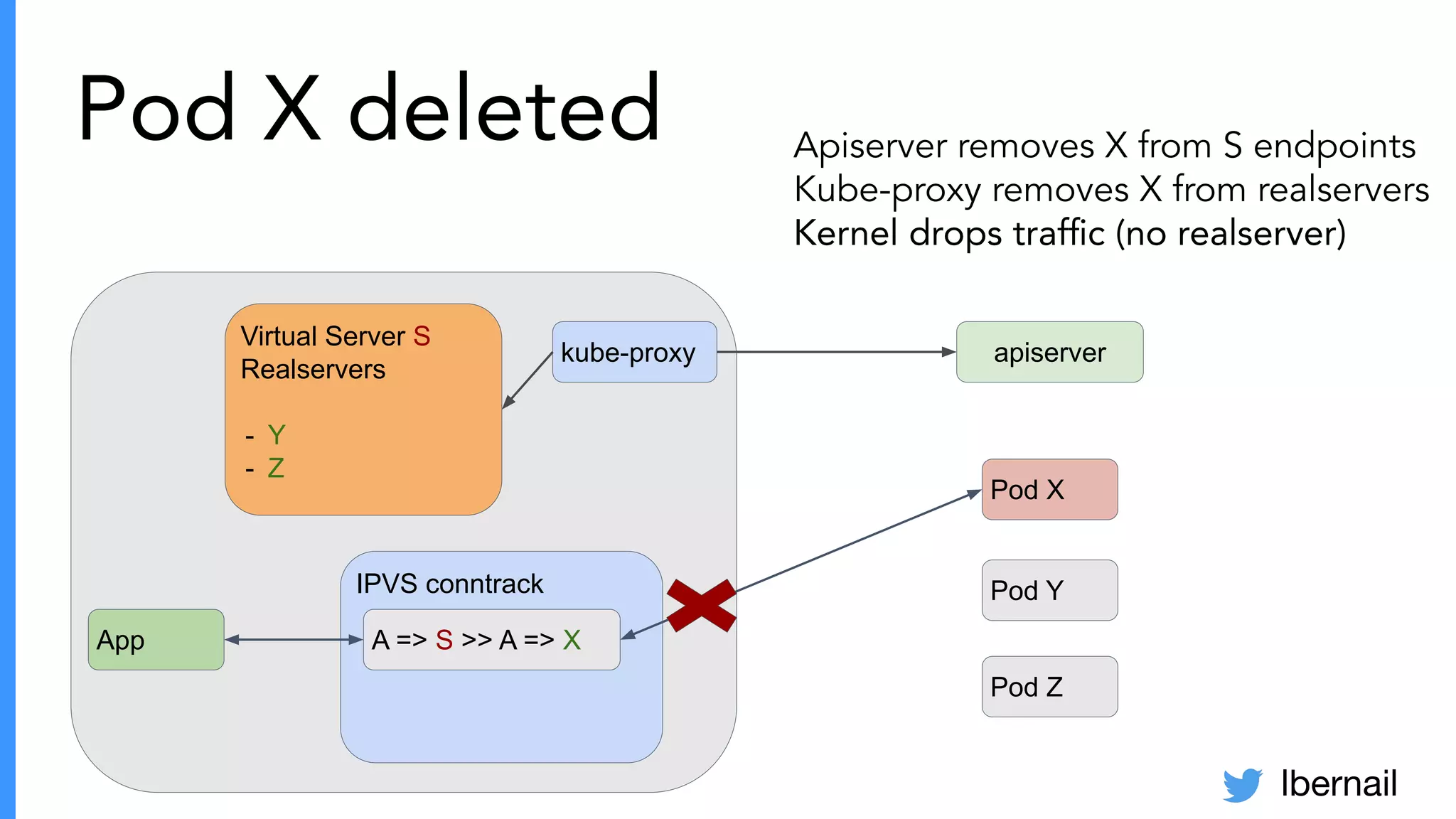

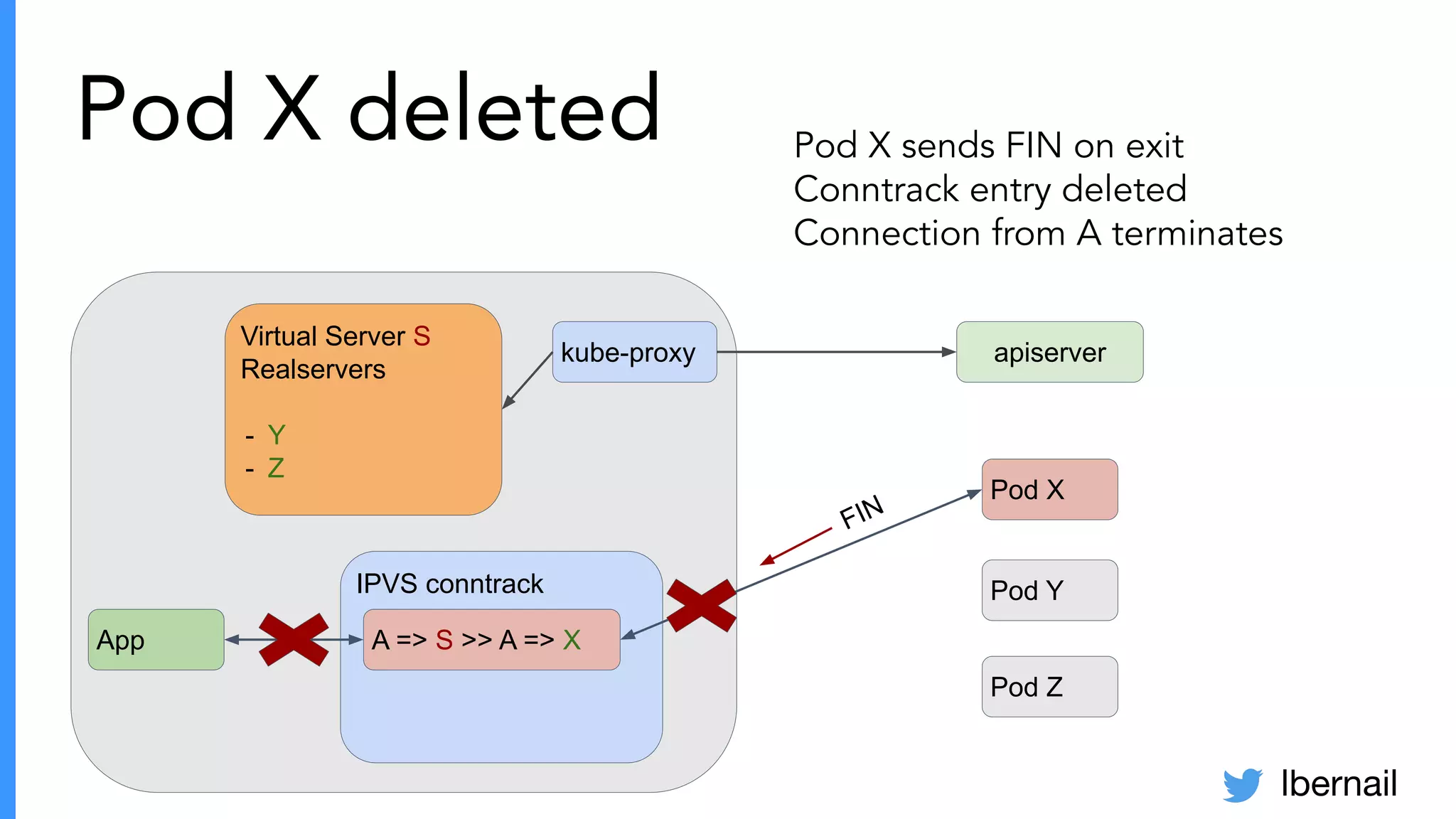

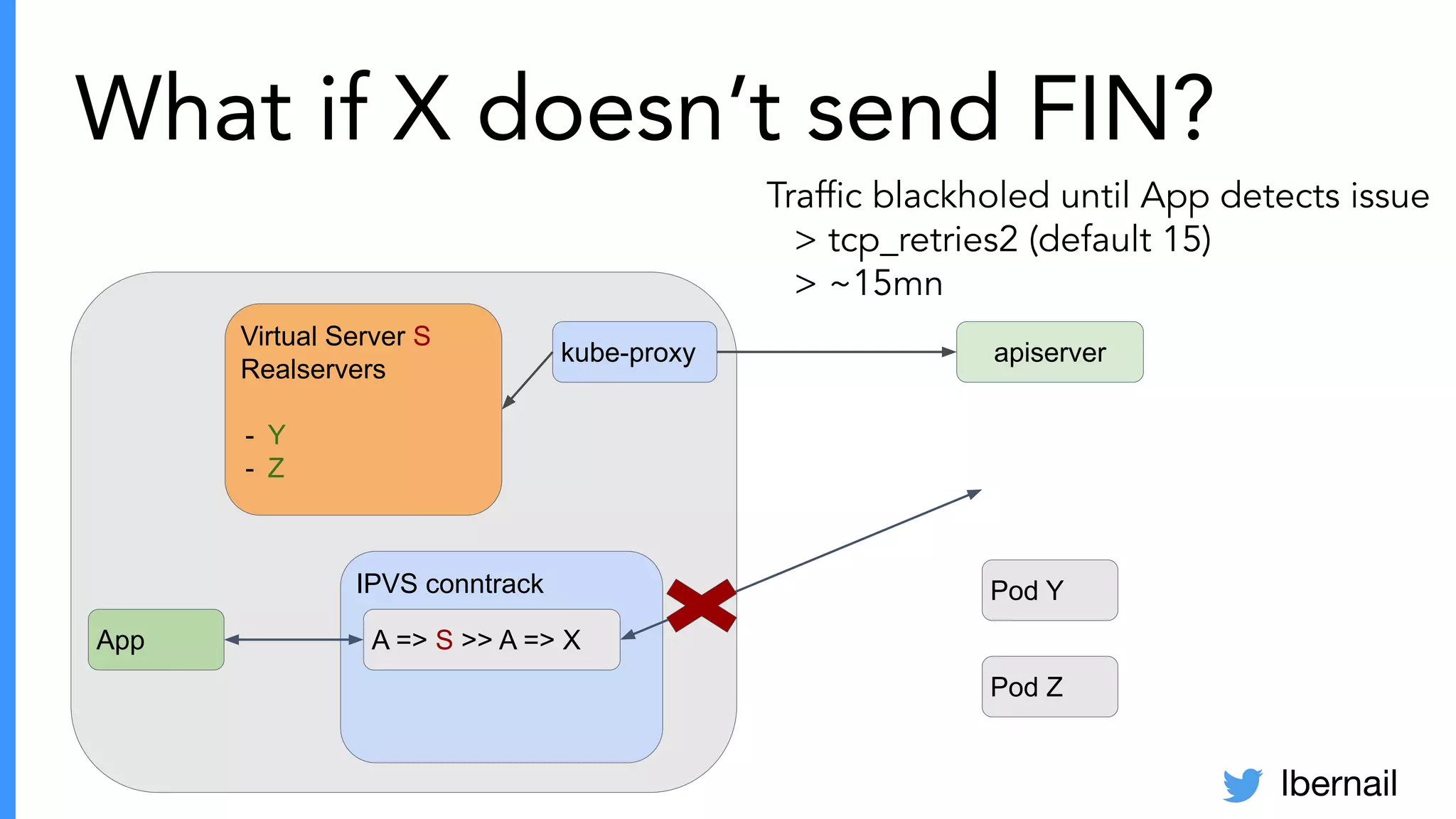

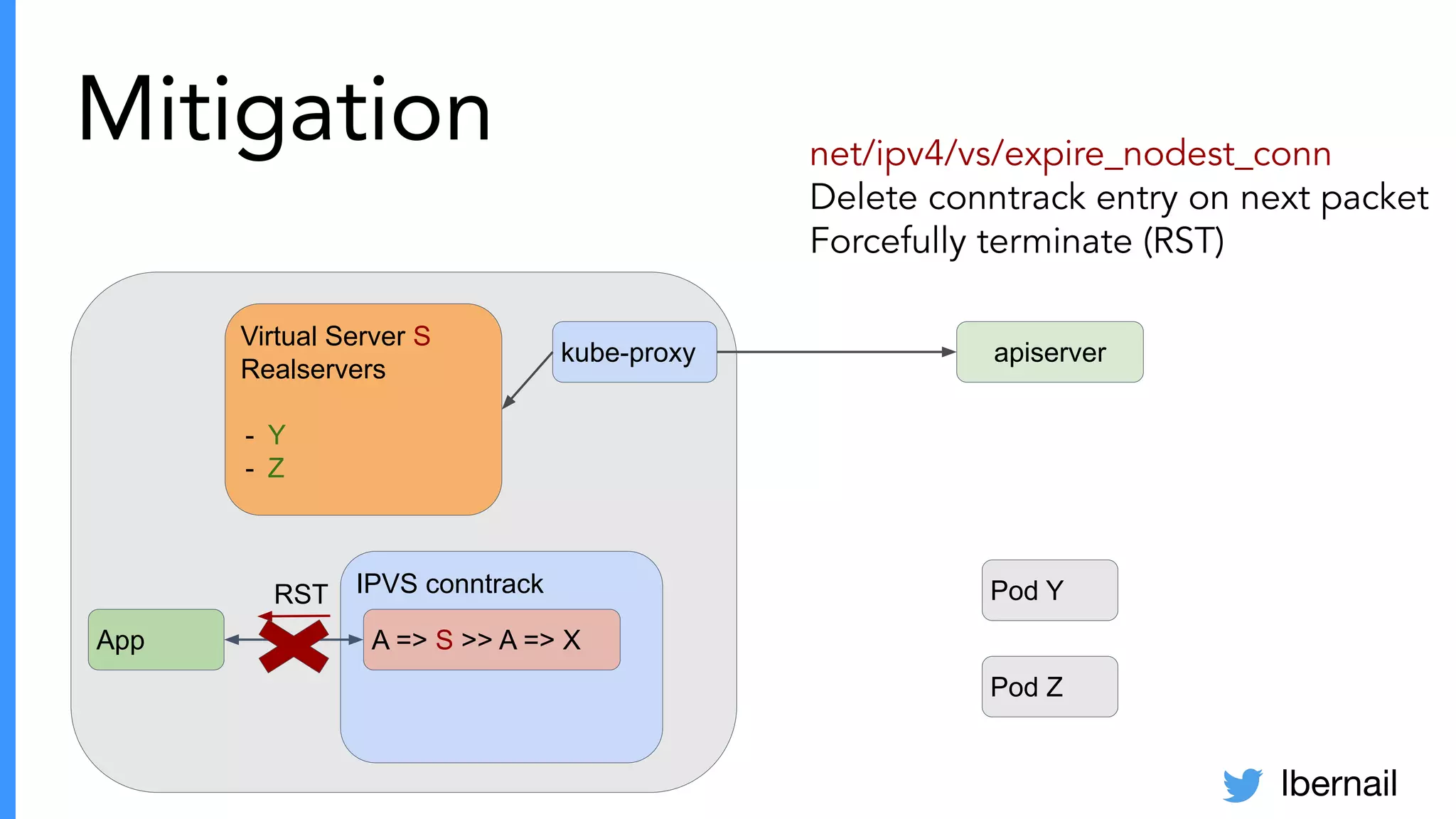

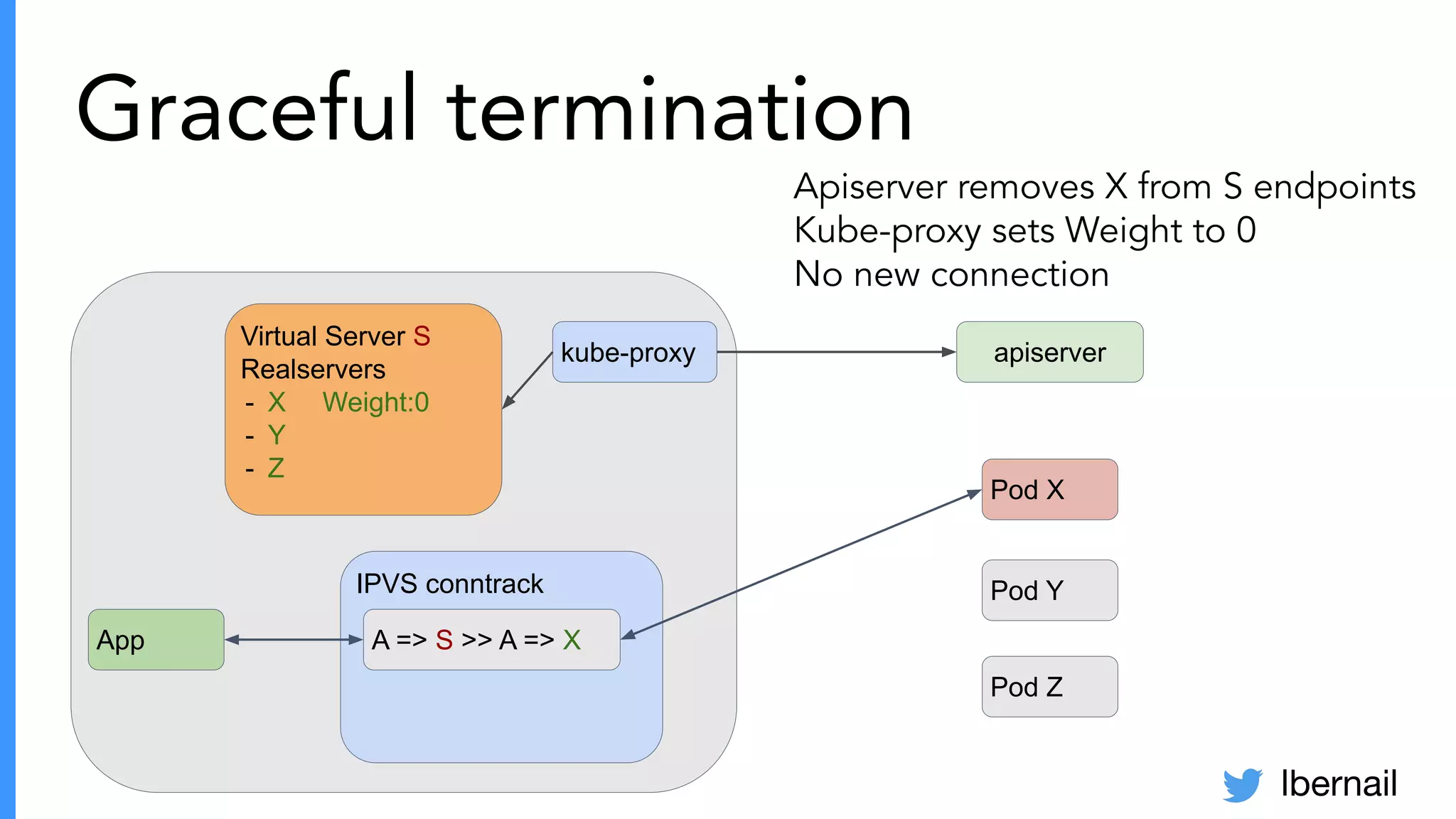

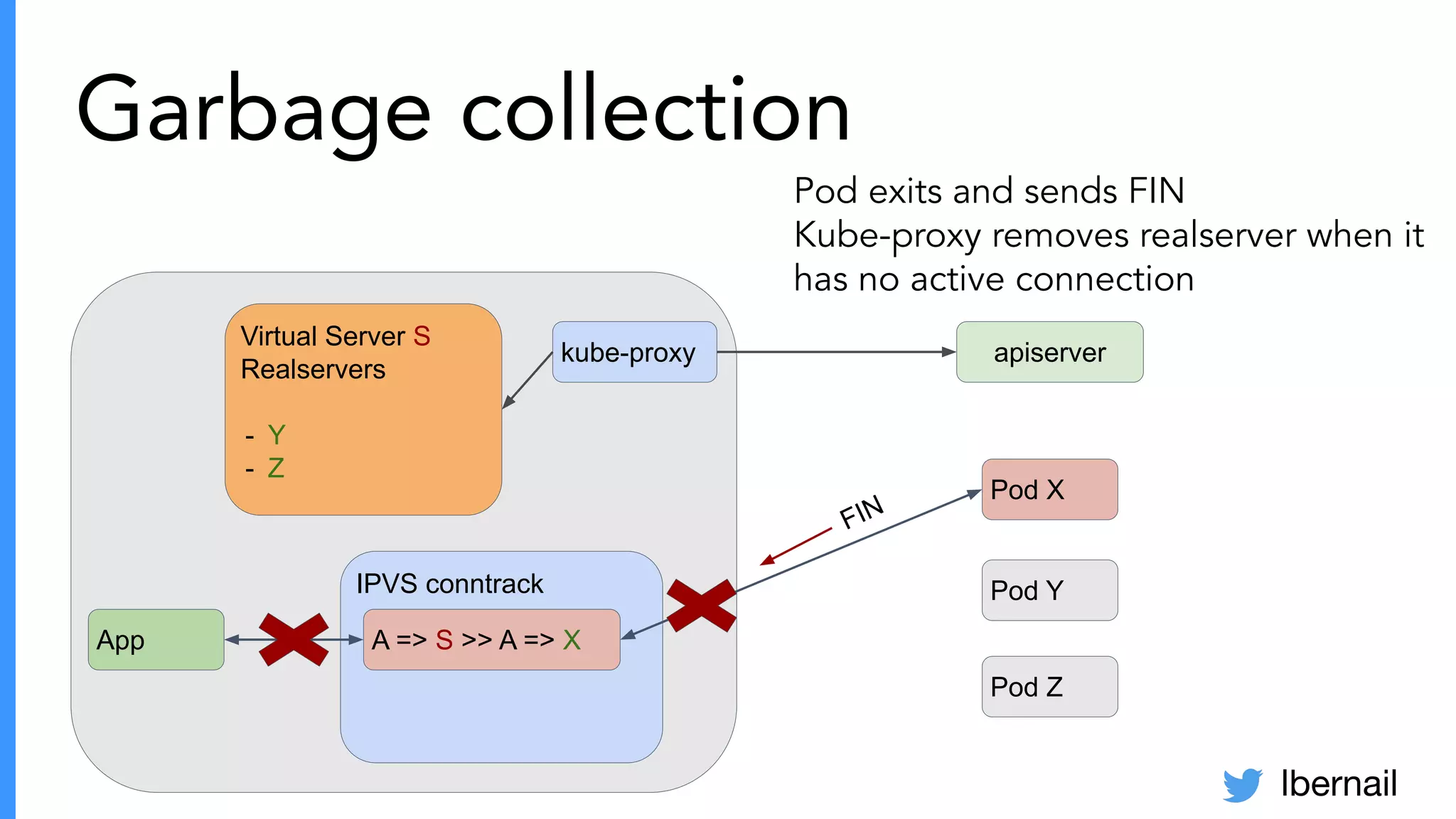

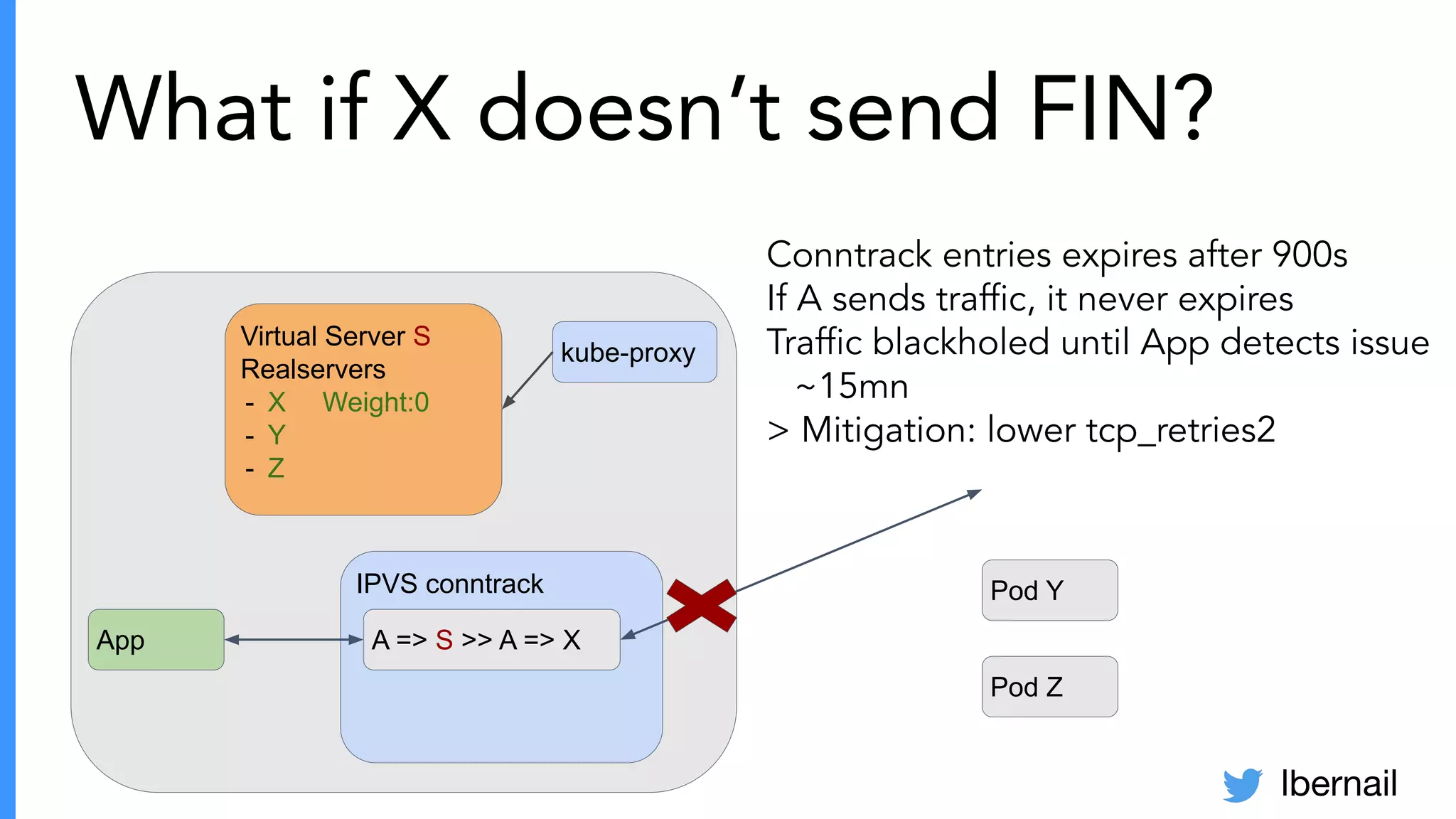

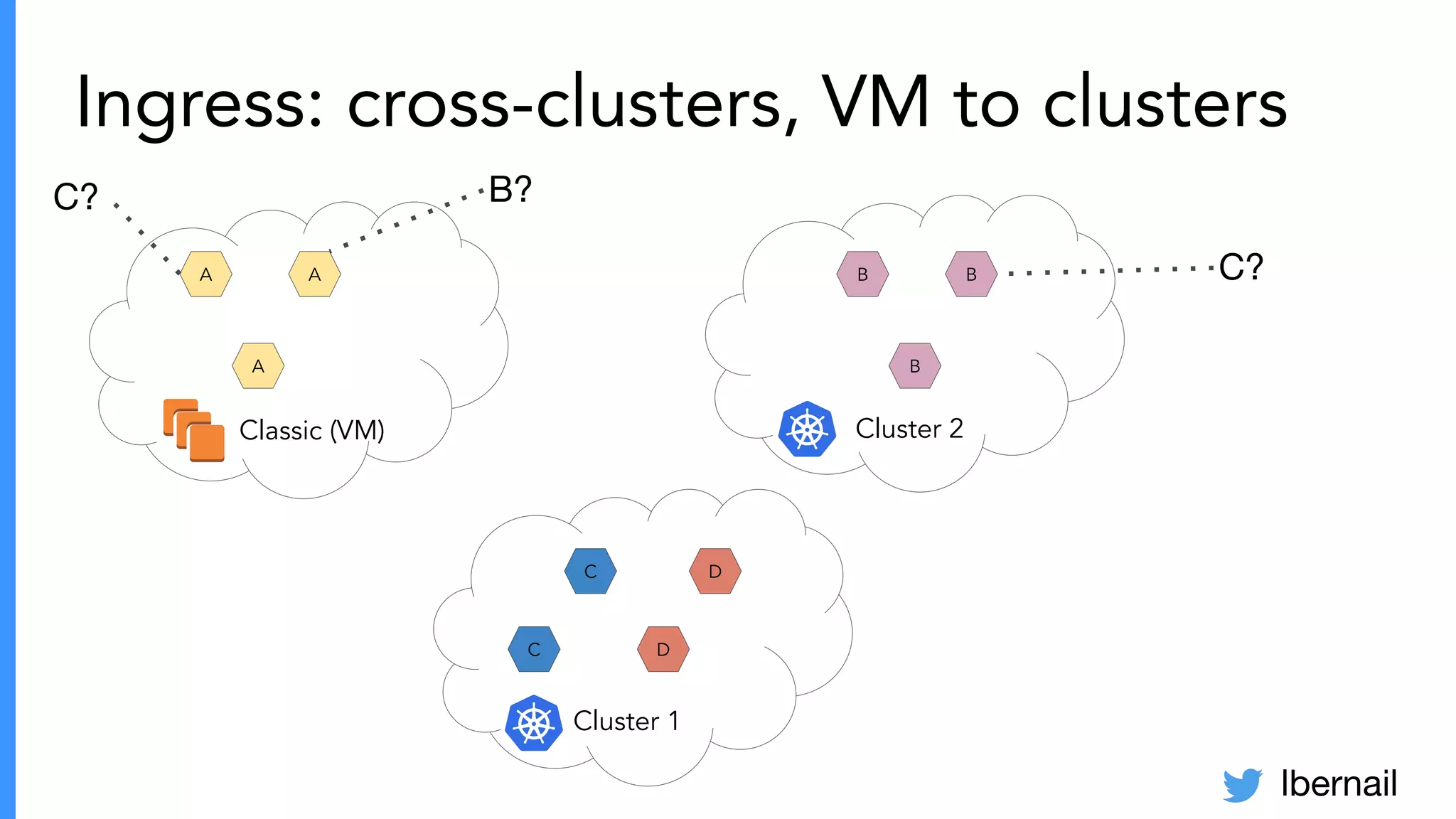

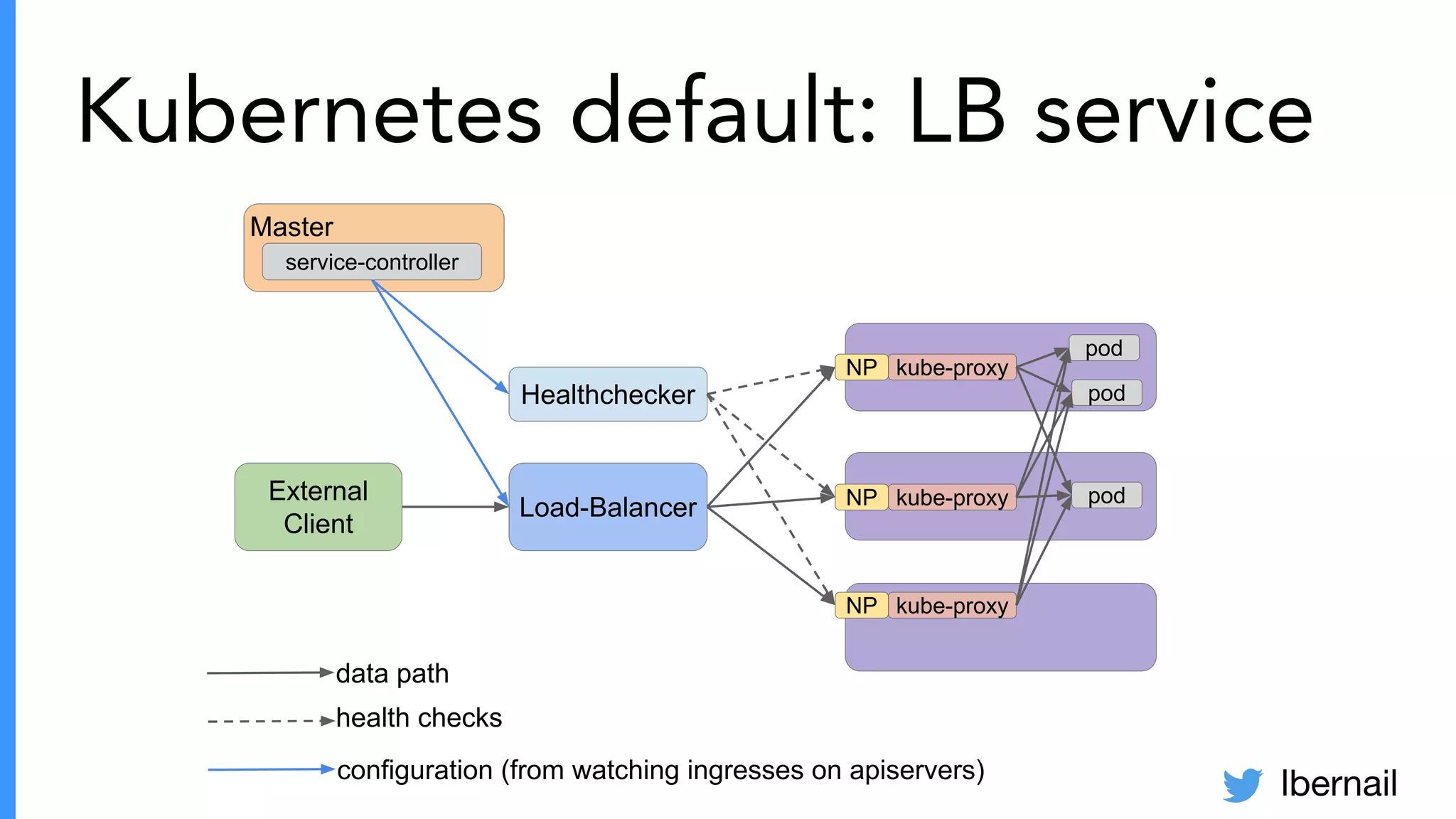

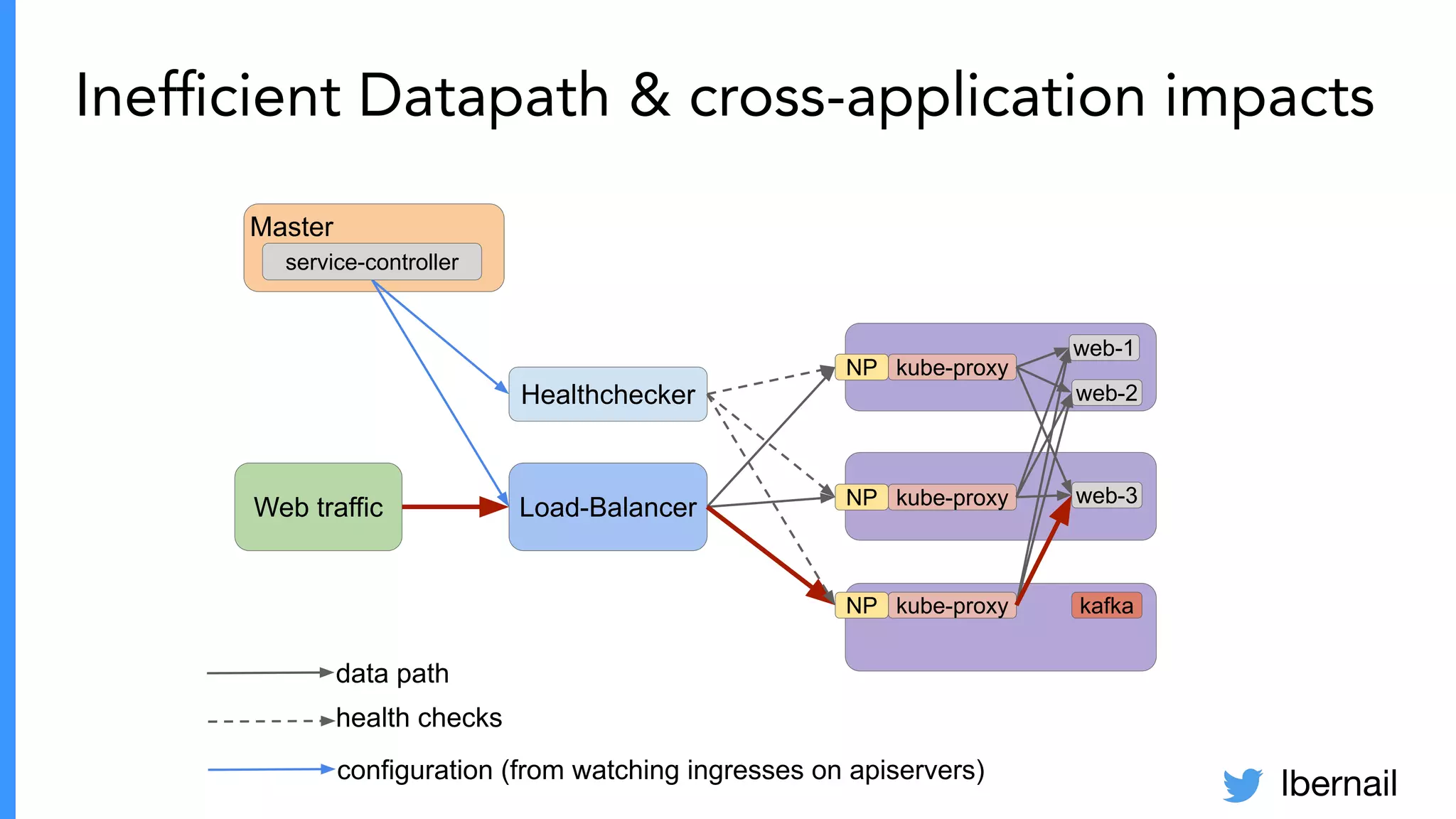

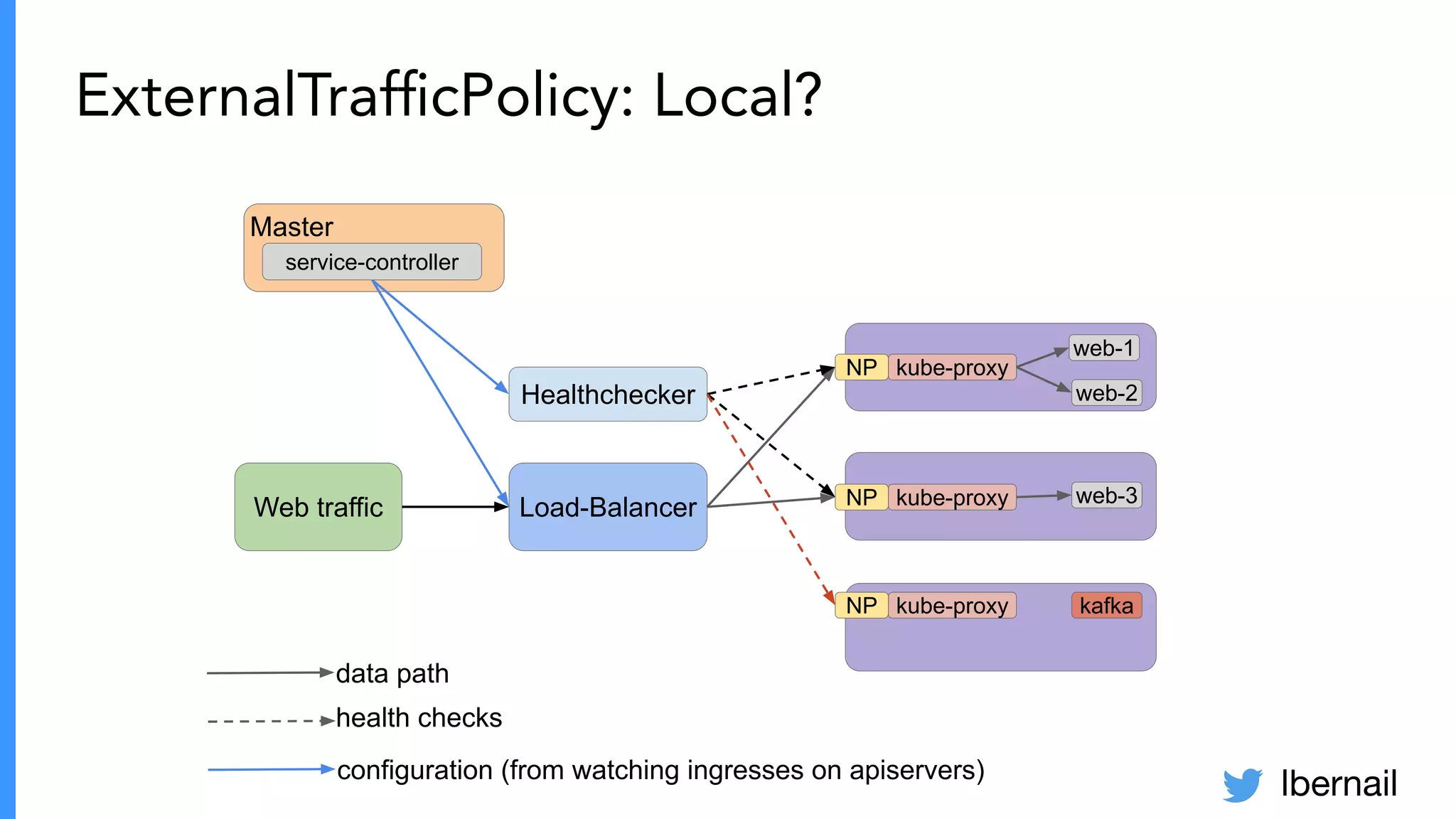

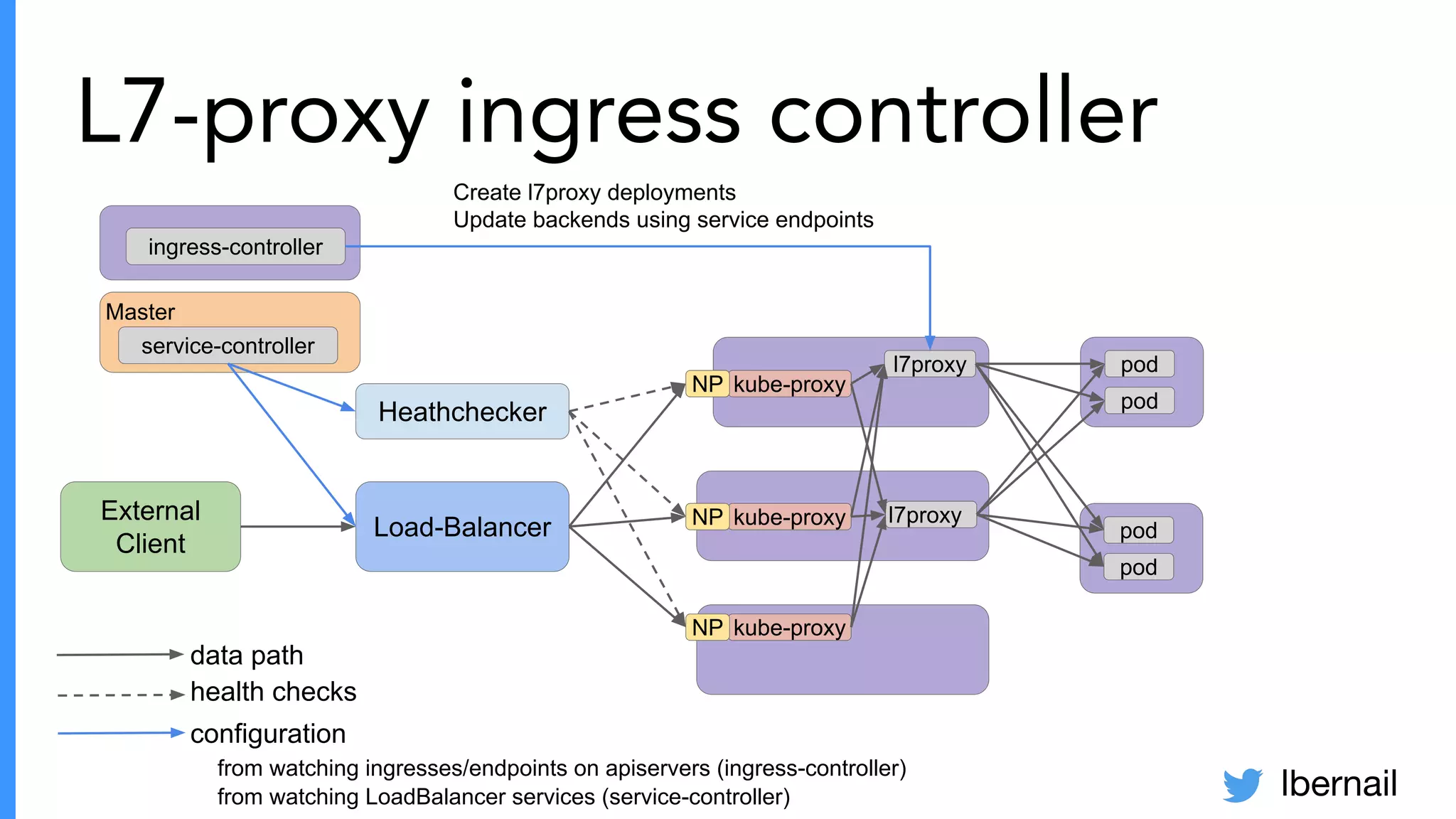

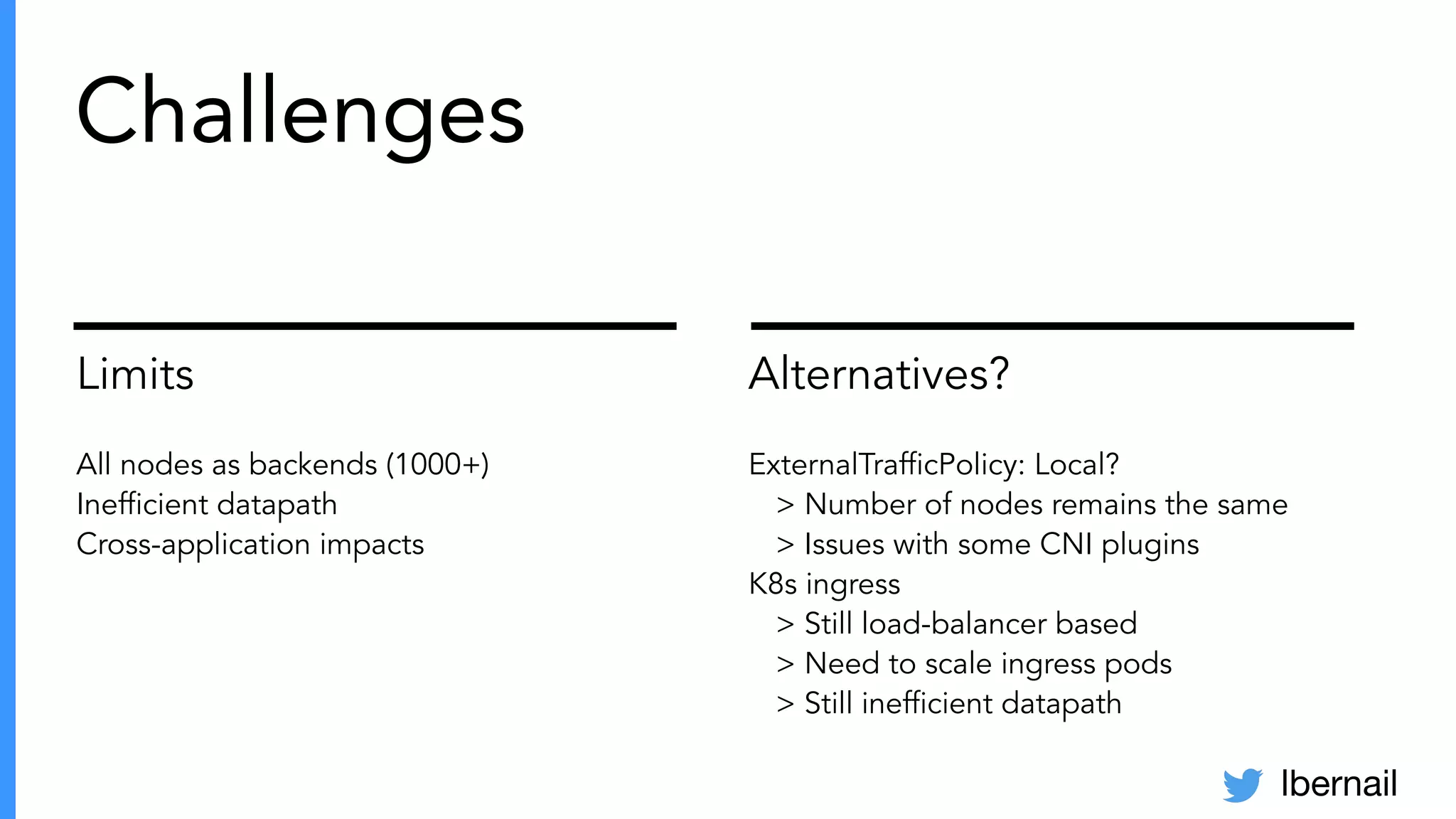

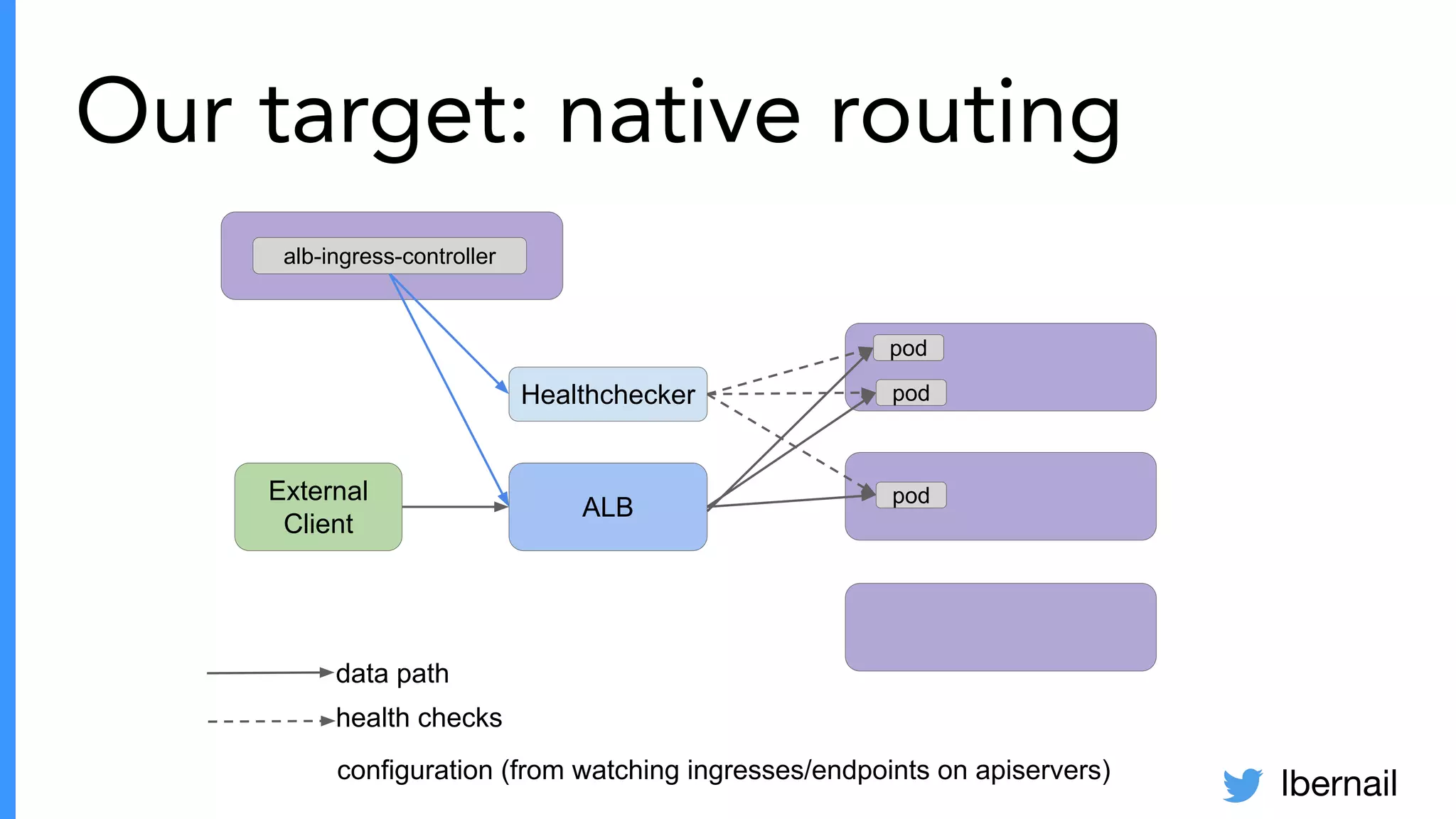

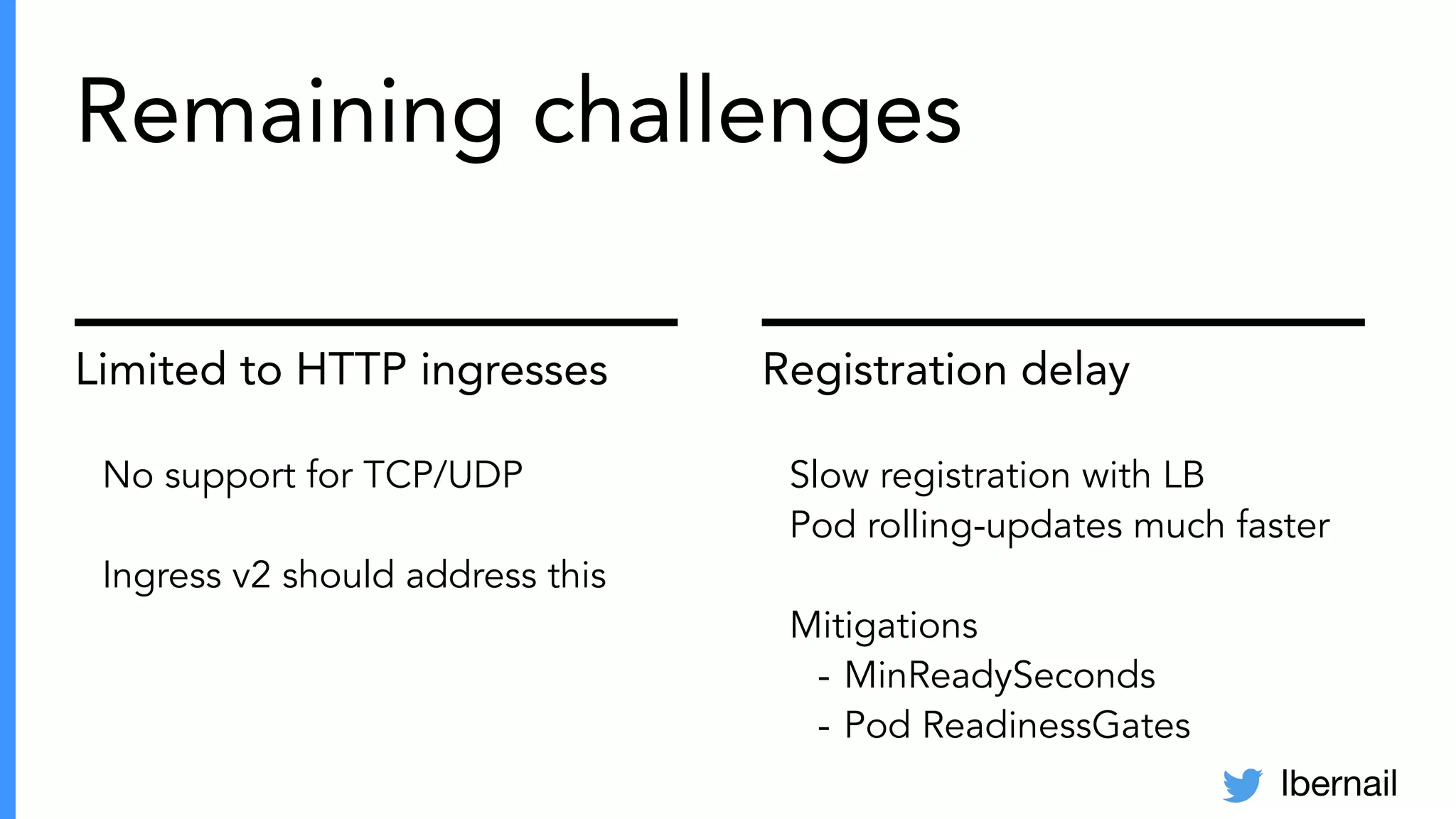

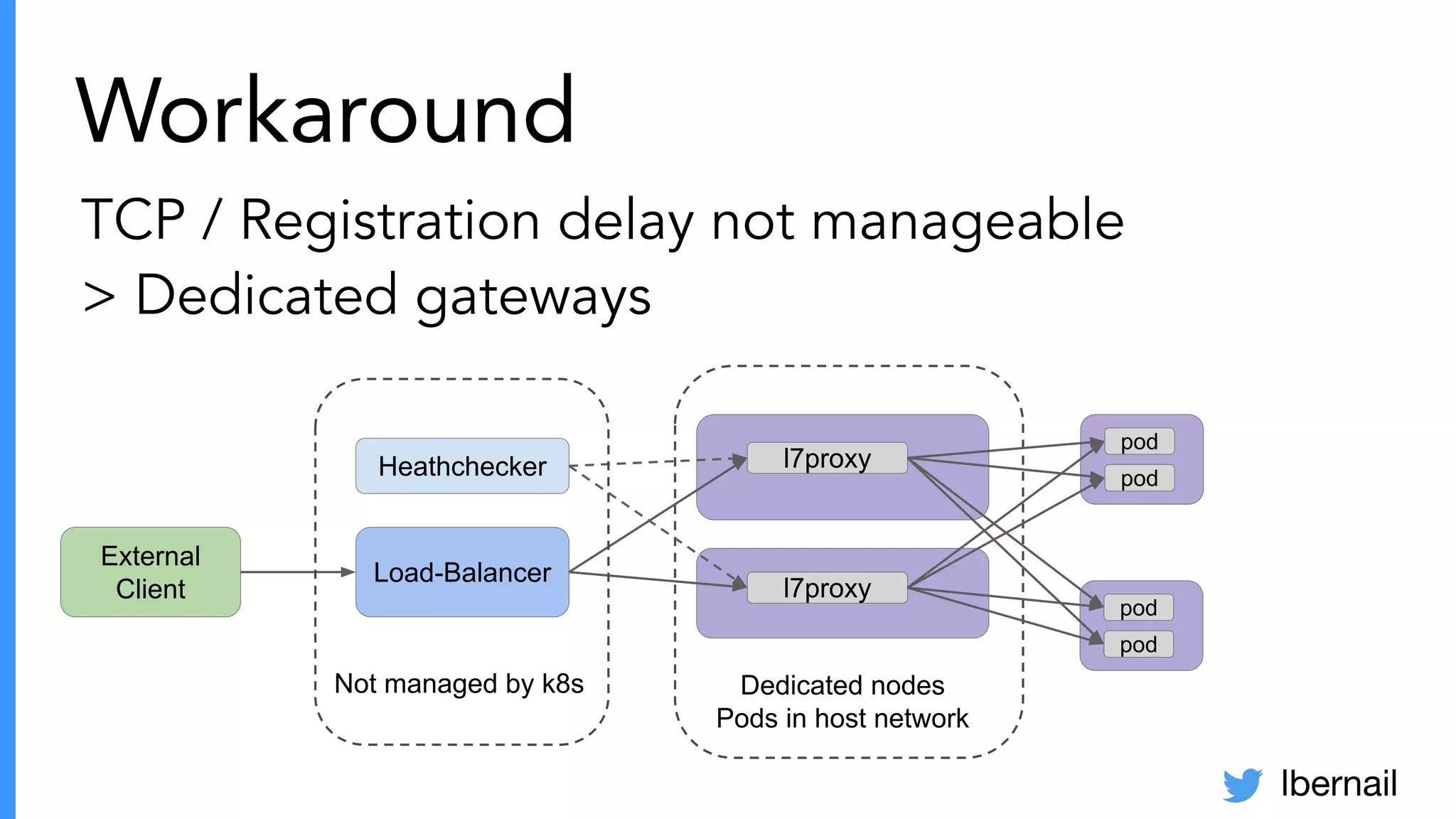

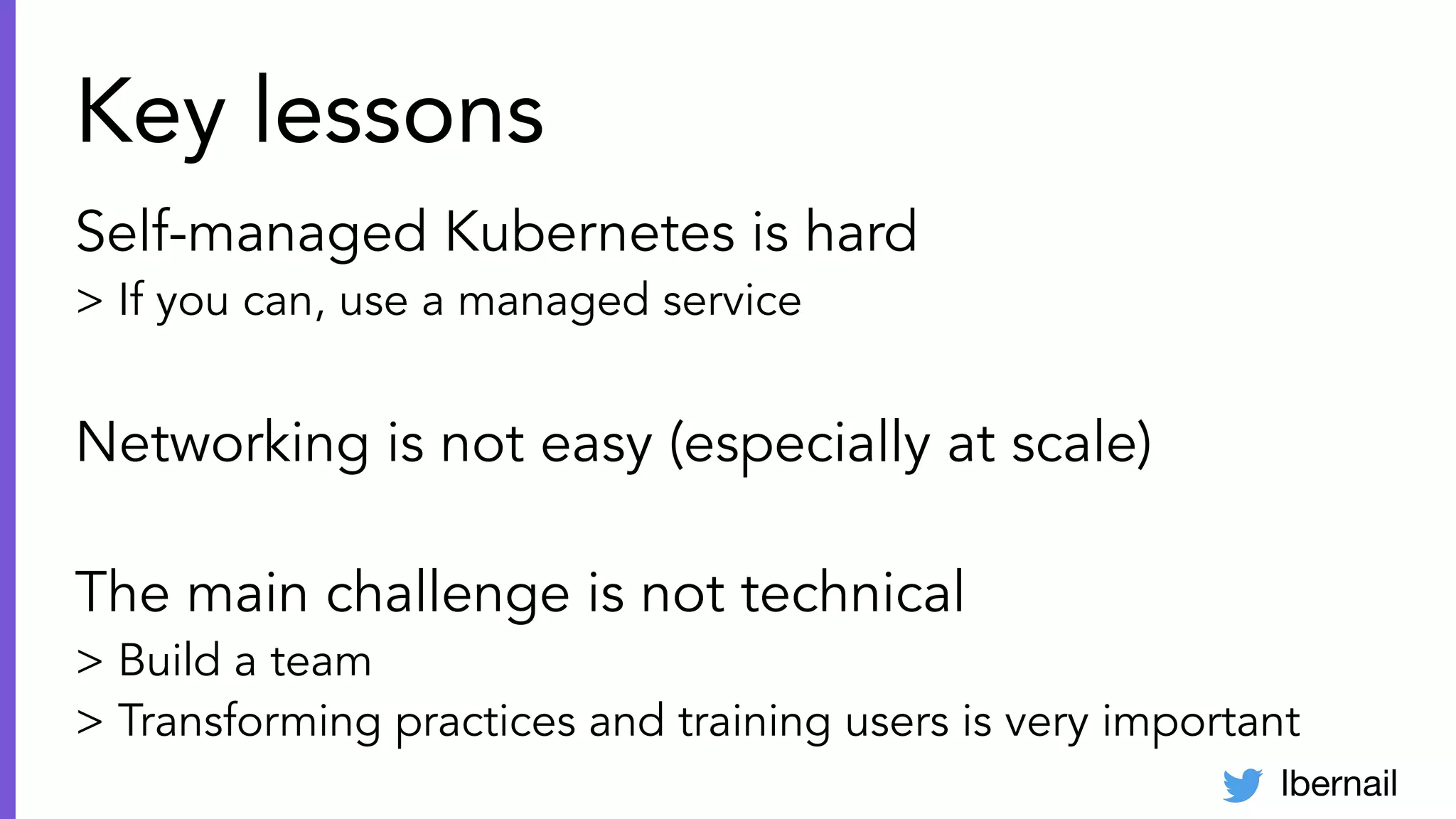

This presentation describes the challenges Datadog faced in deploying Kubernetes at scale, including the need for a resilient control plane, certificate management, and efficient networking. It covers incidents caused by certificate management problems and networking inefficiencies, explains why graceful termination matters for services, and draws lessons from practical experience. It concludes that self-managed Kubernetes is hard, encourages using managed services, and stresses the need for team building and user training.