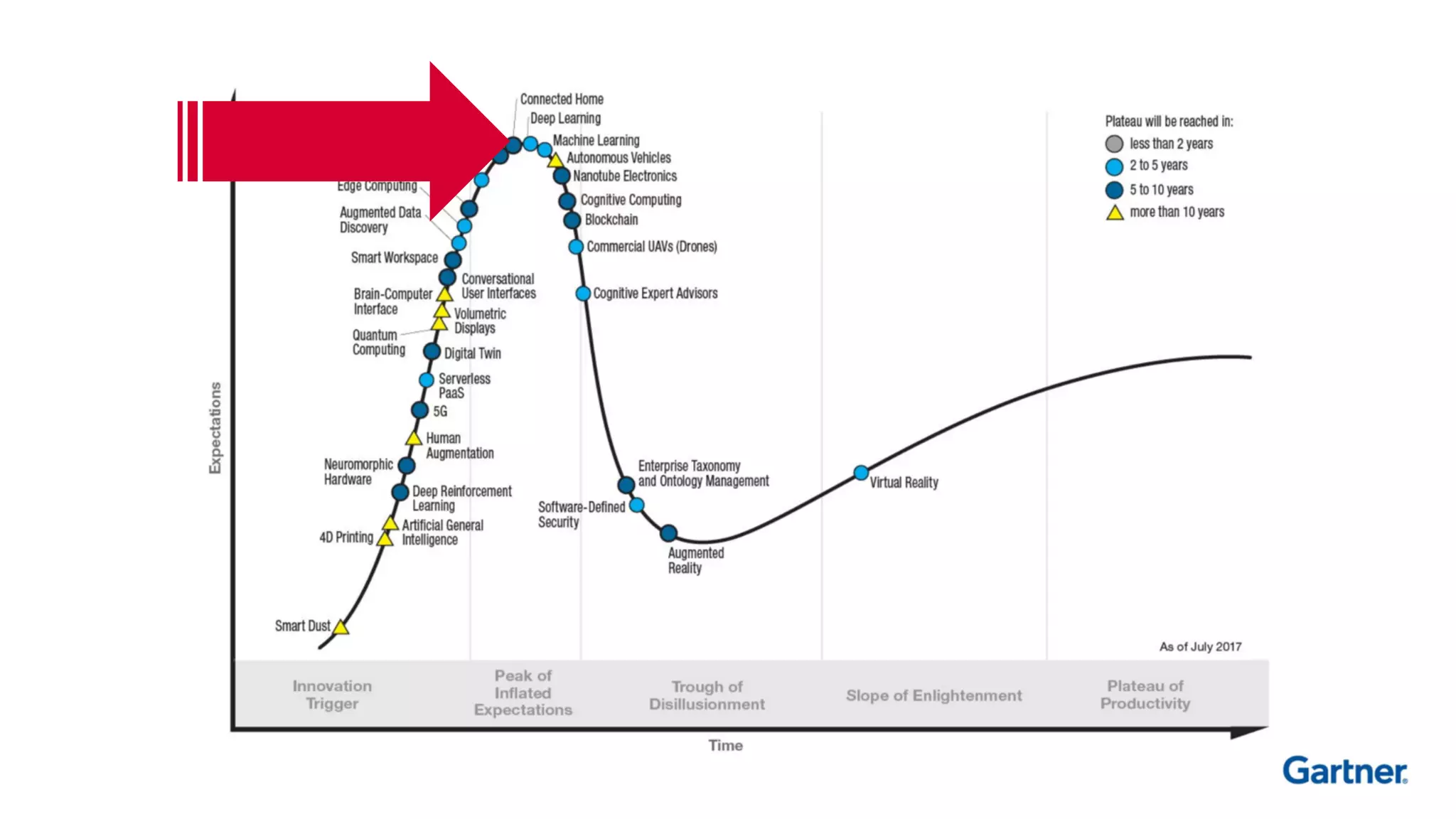

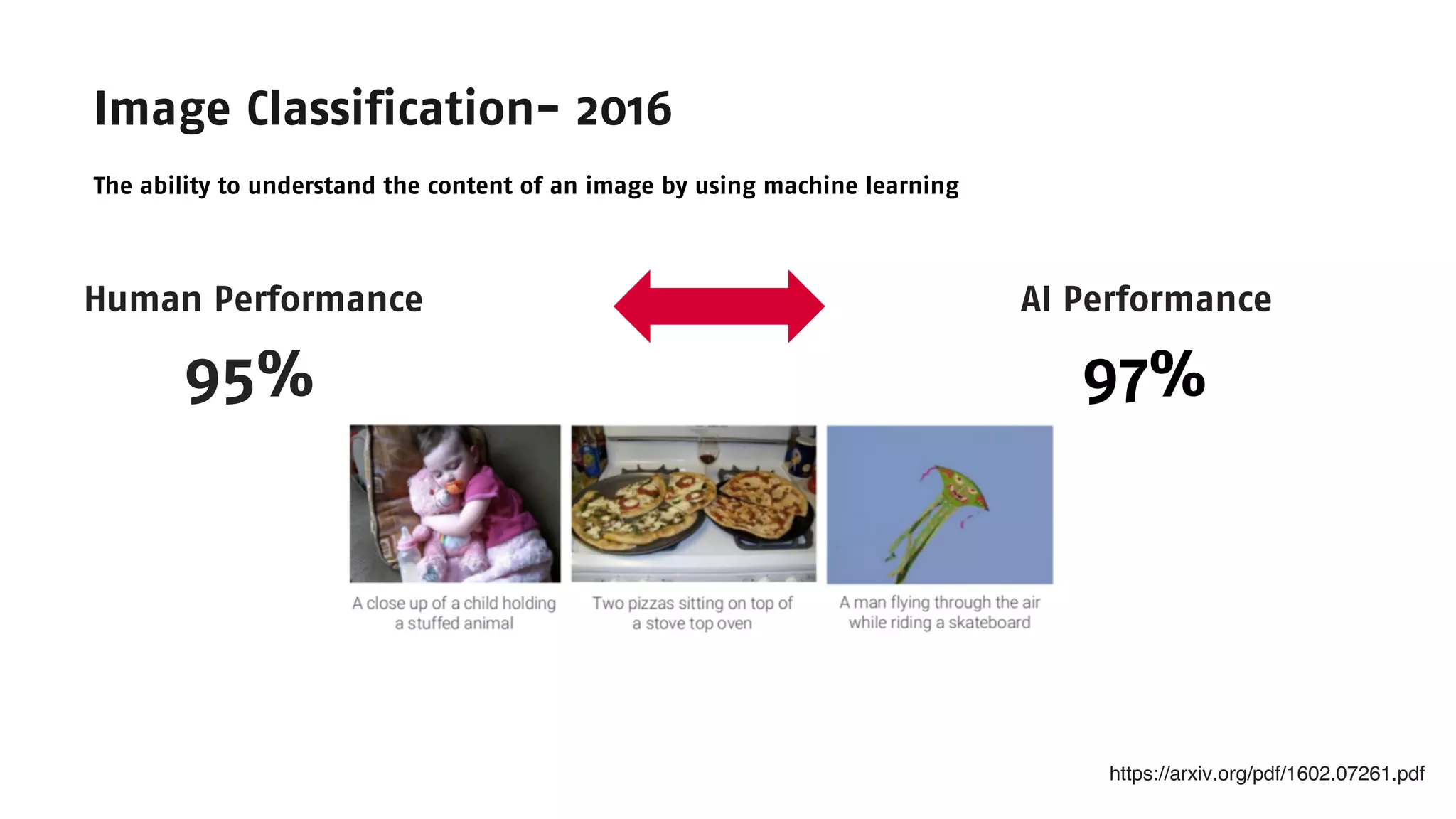

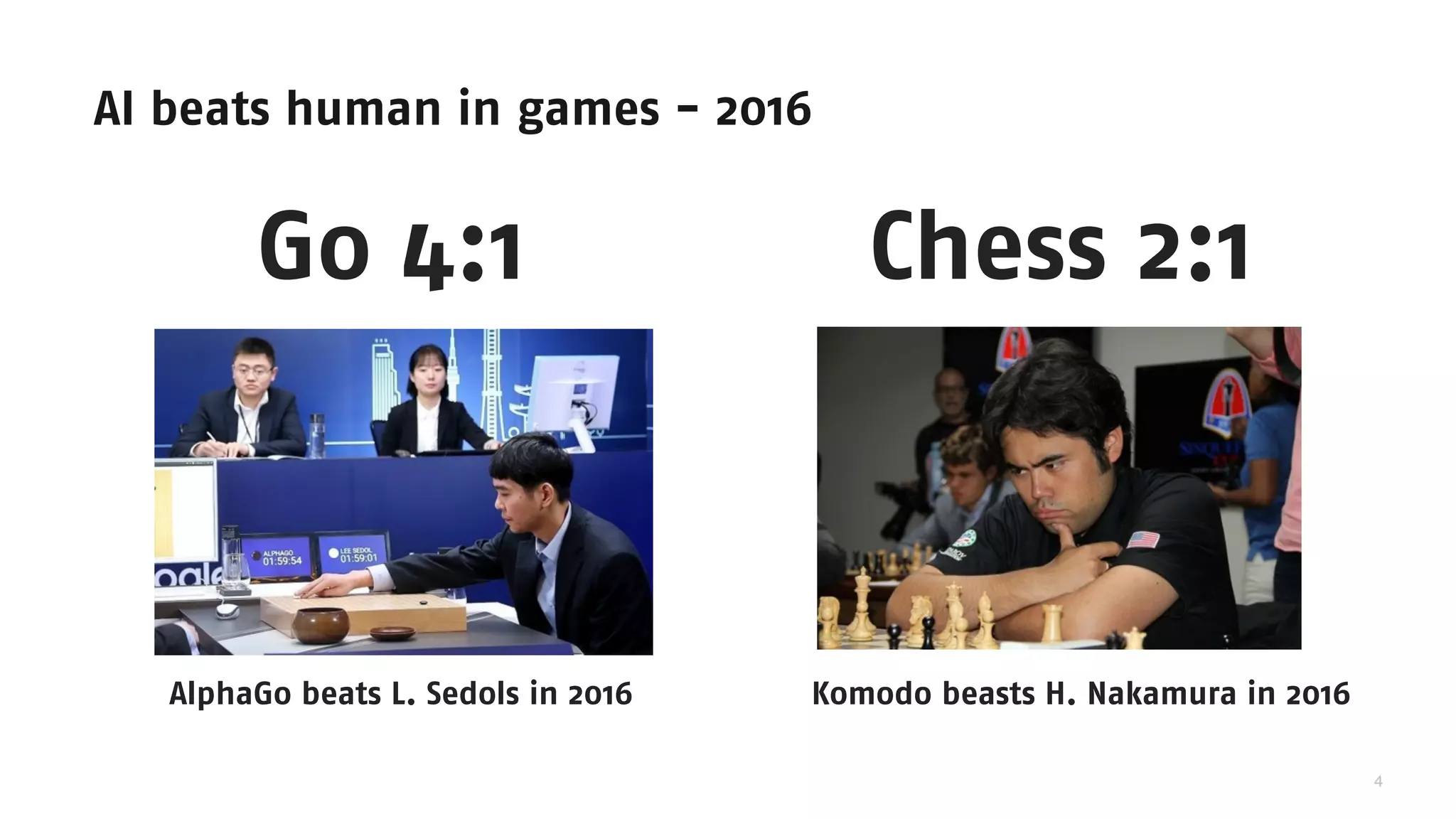

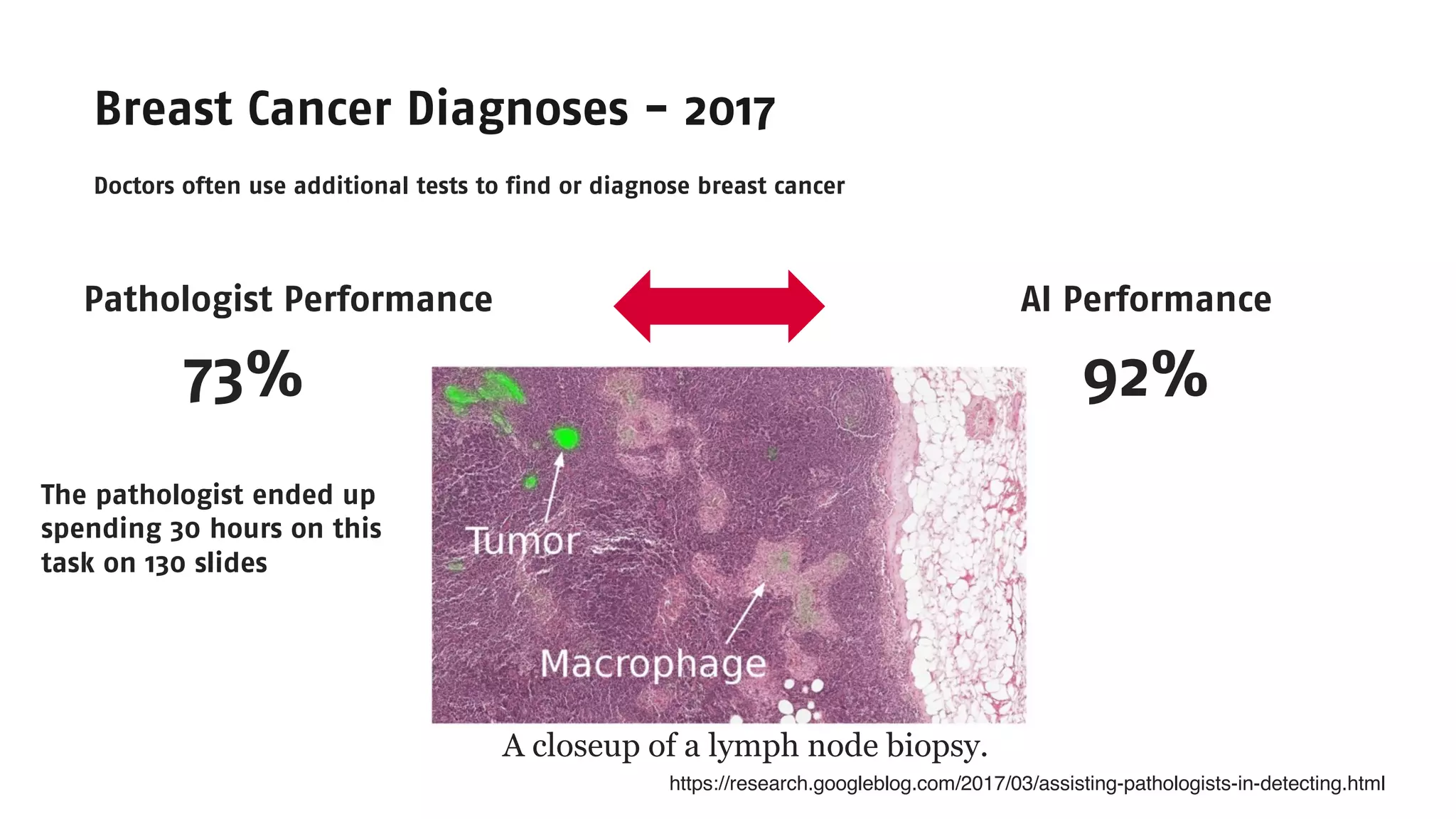

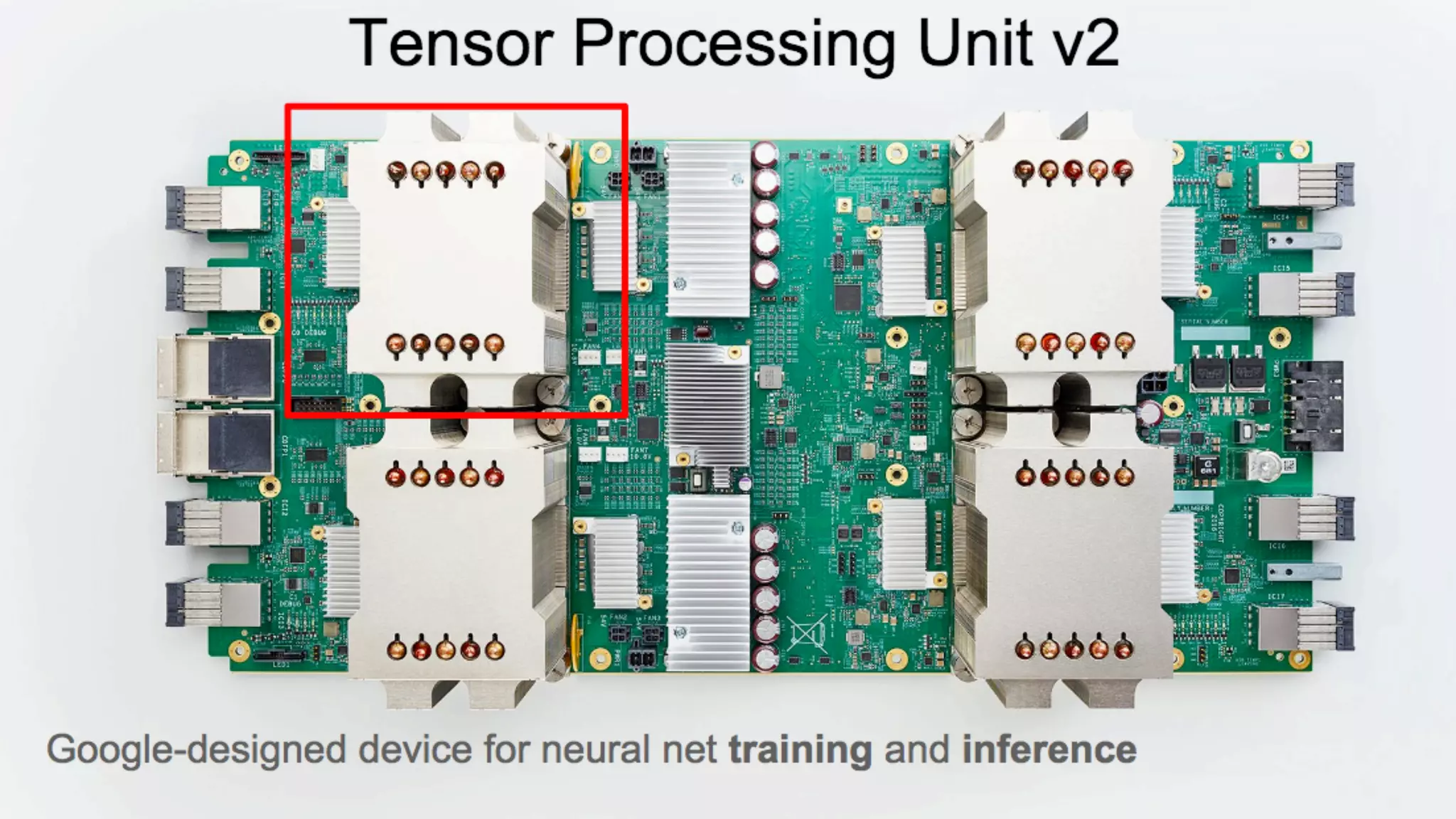

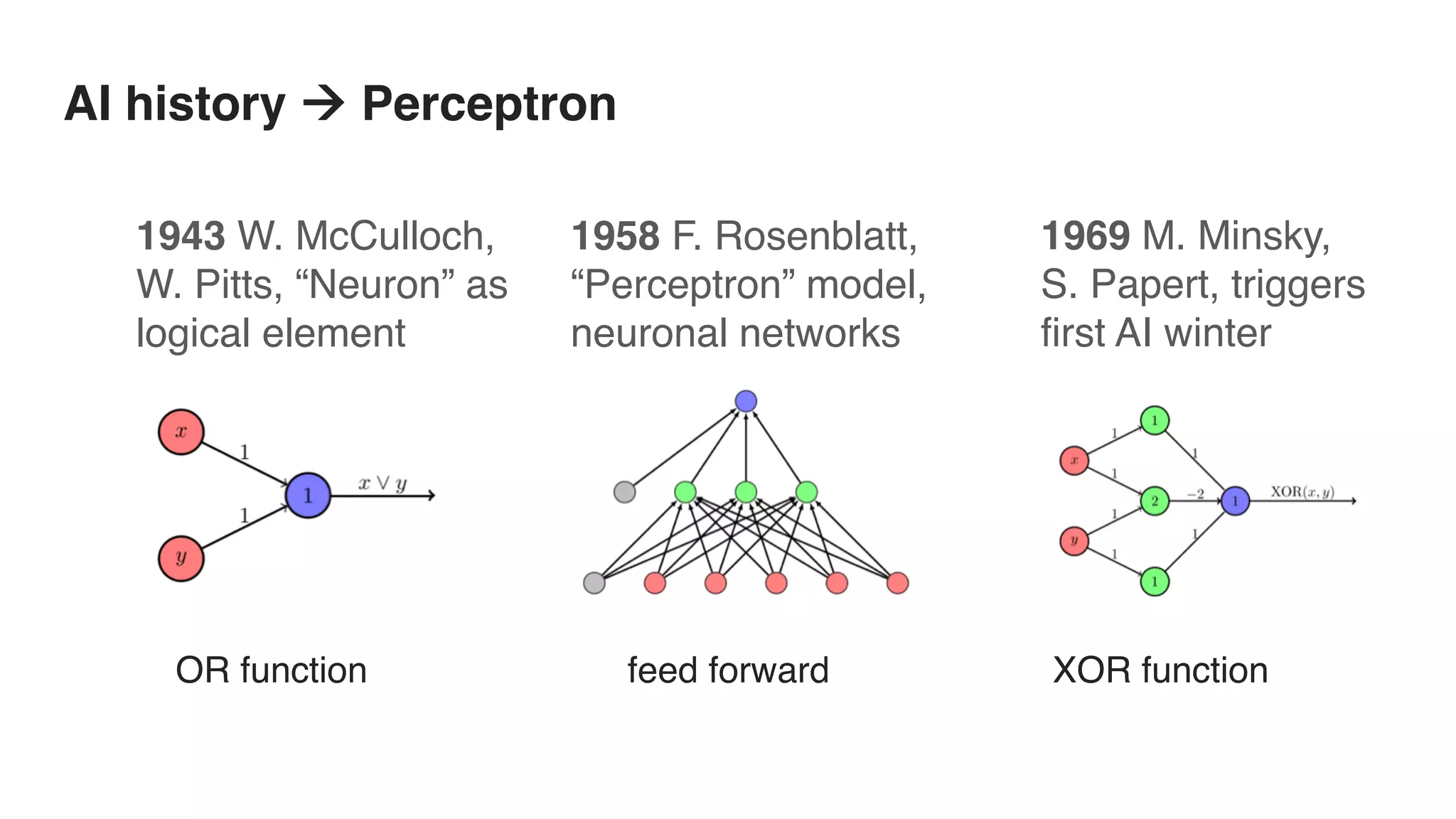

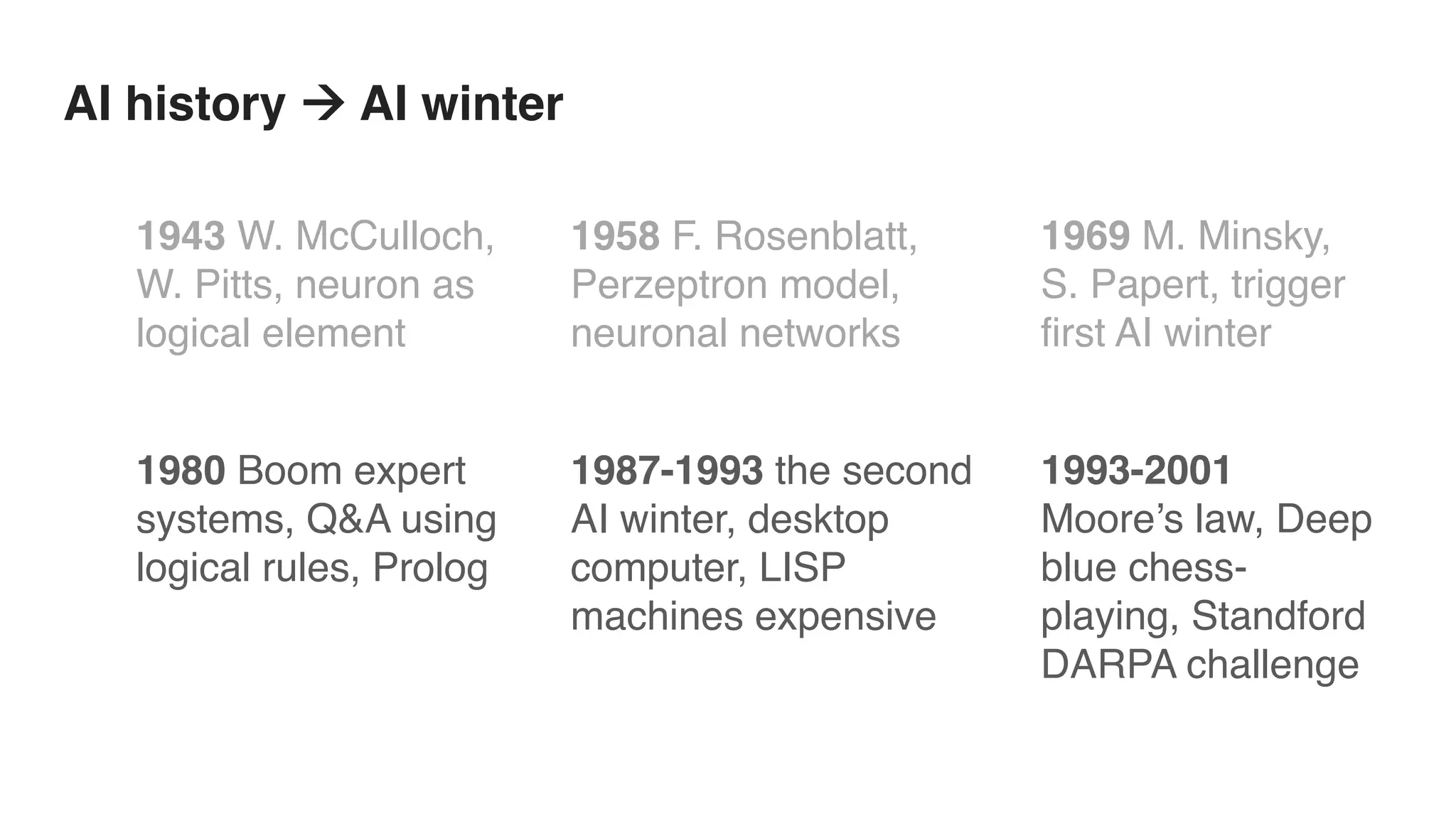

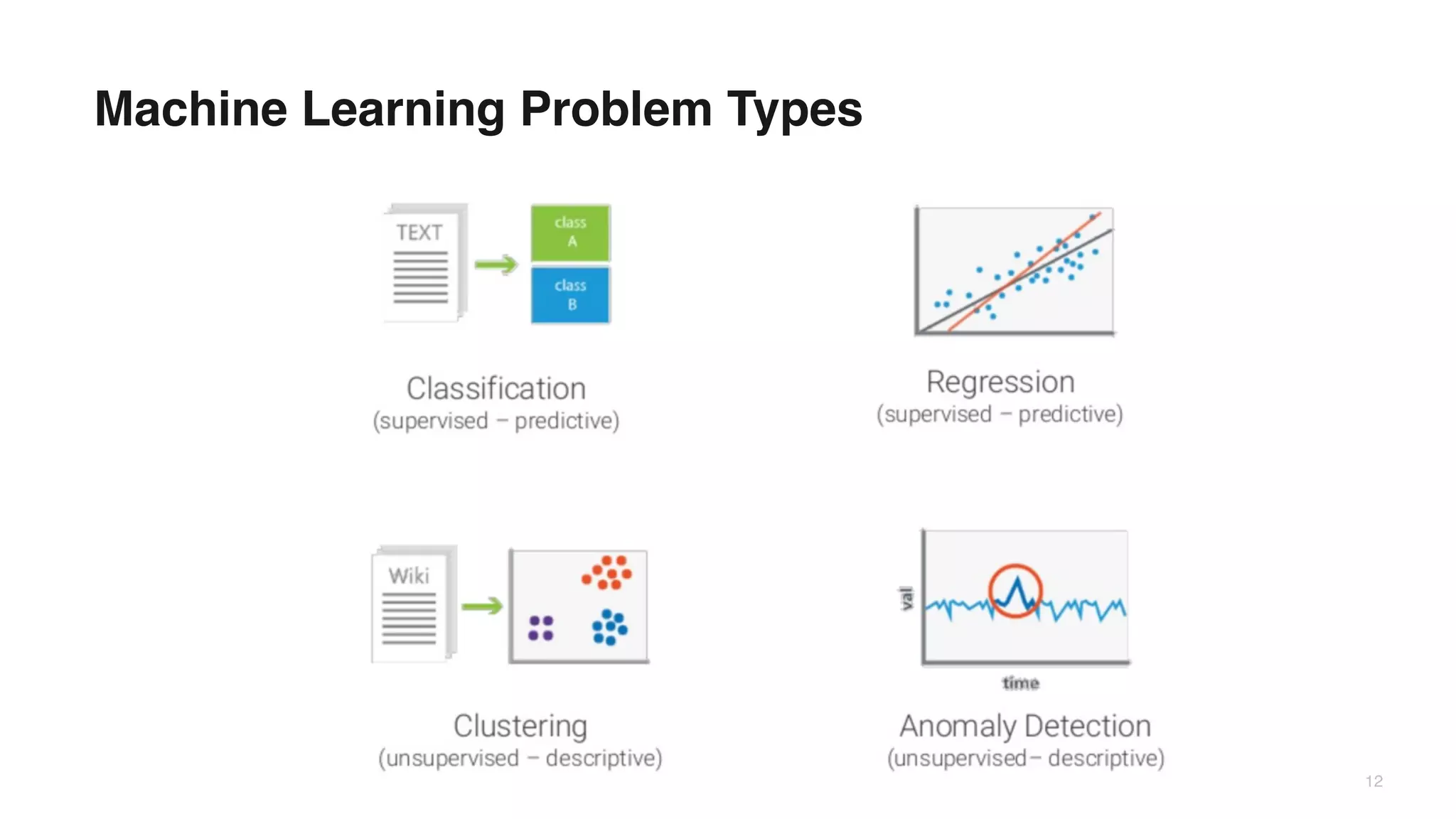

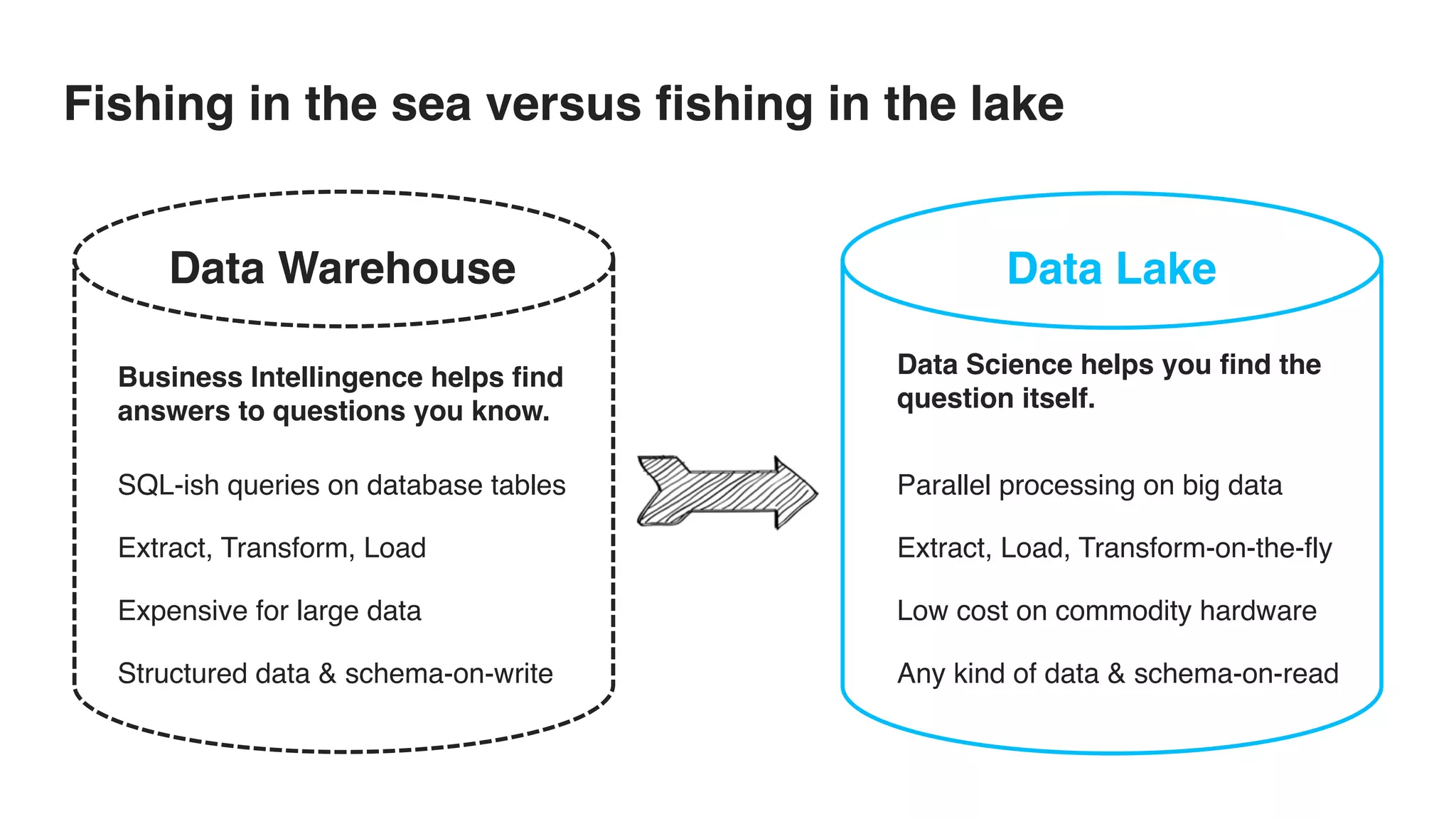

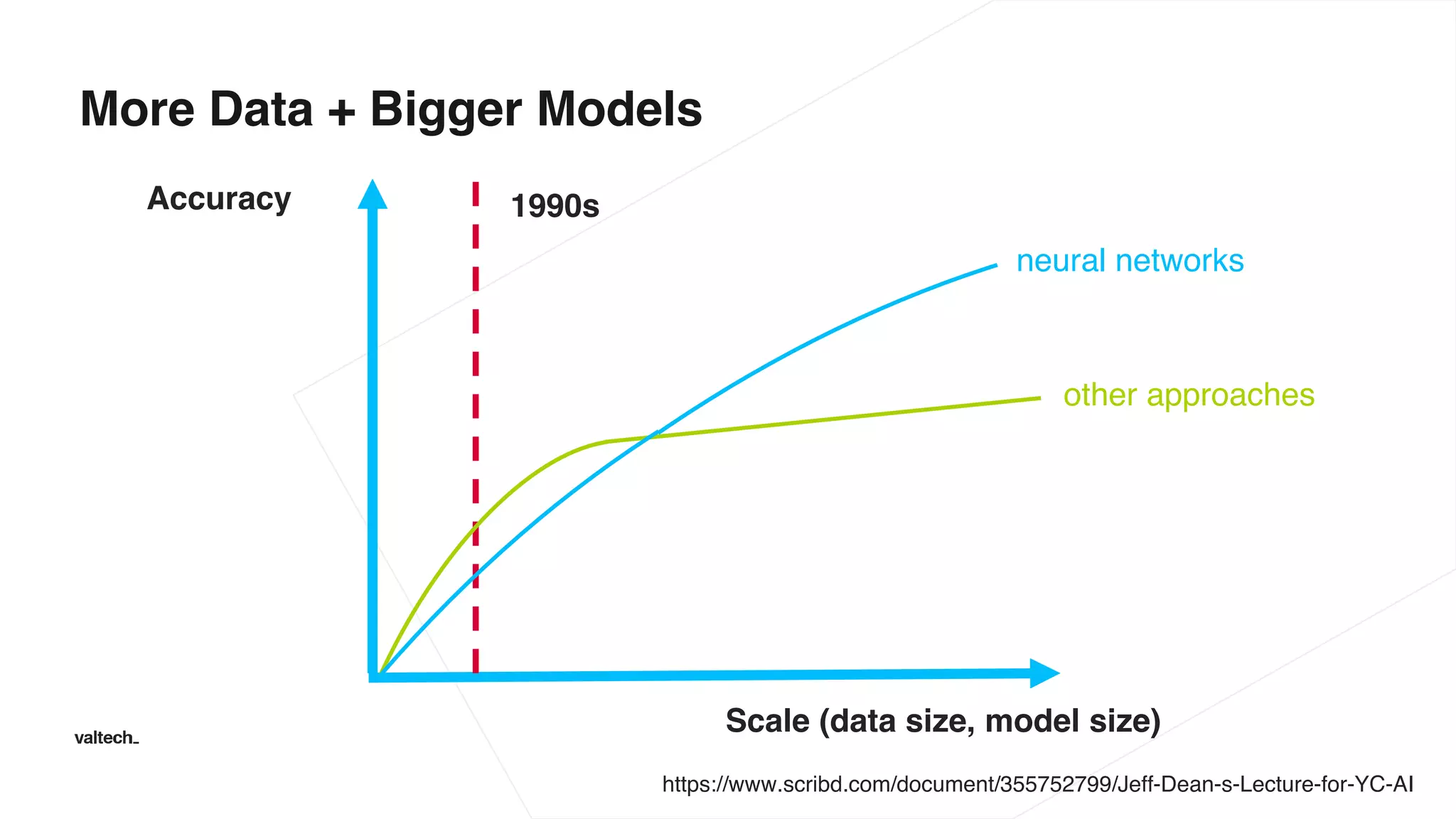

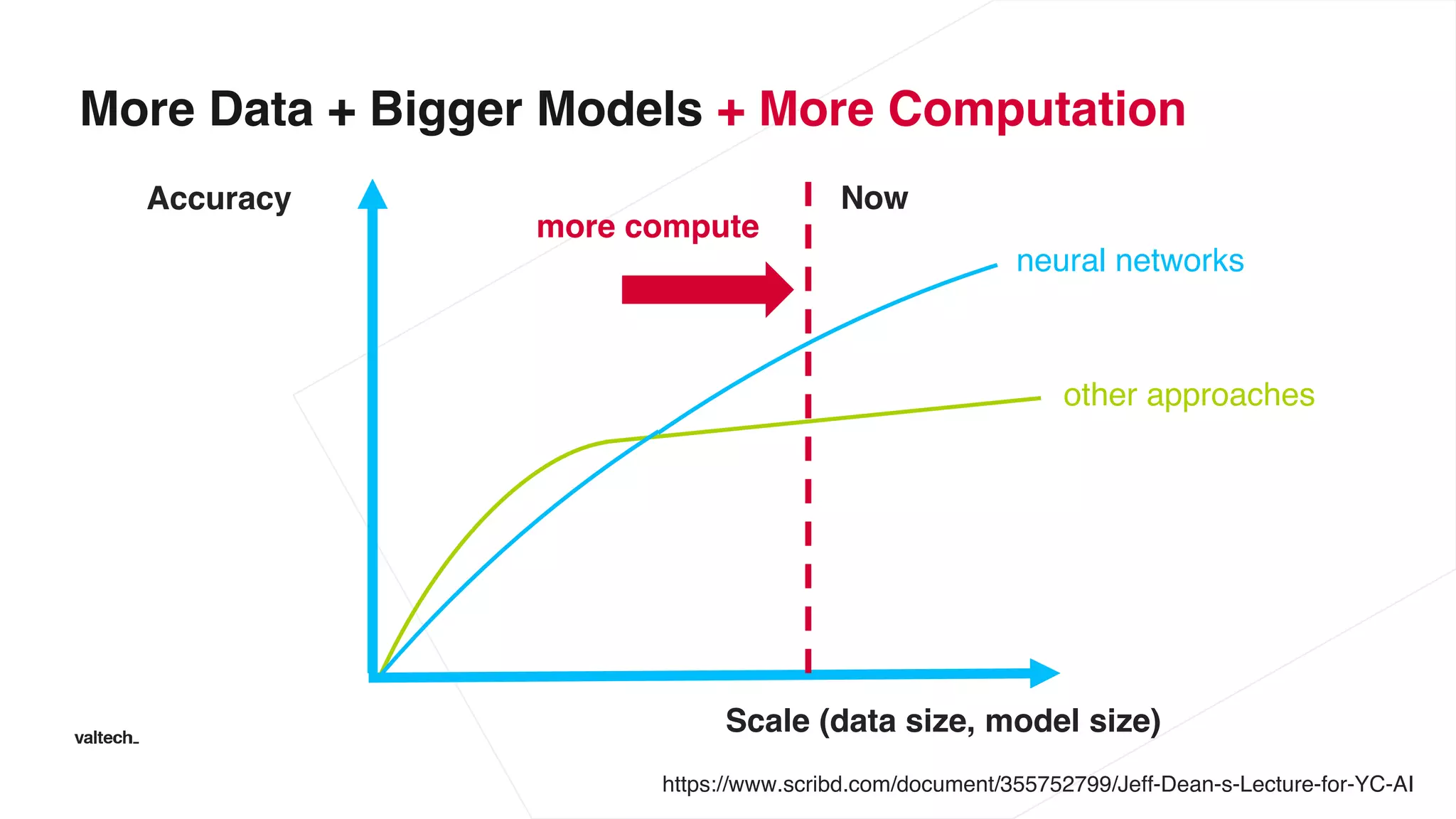

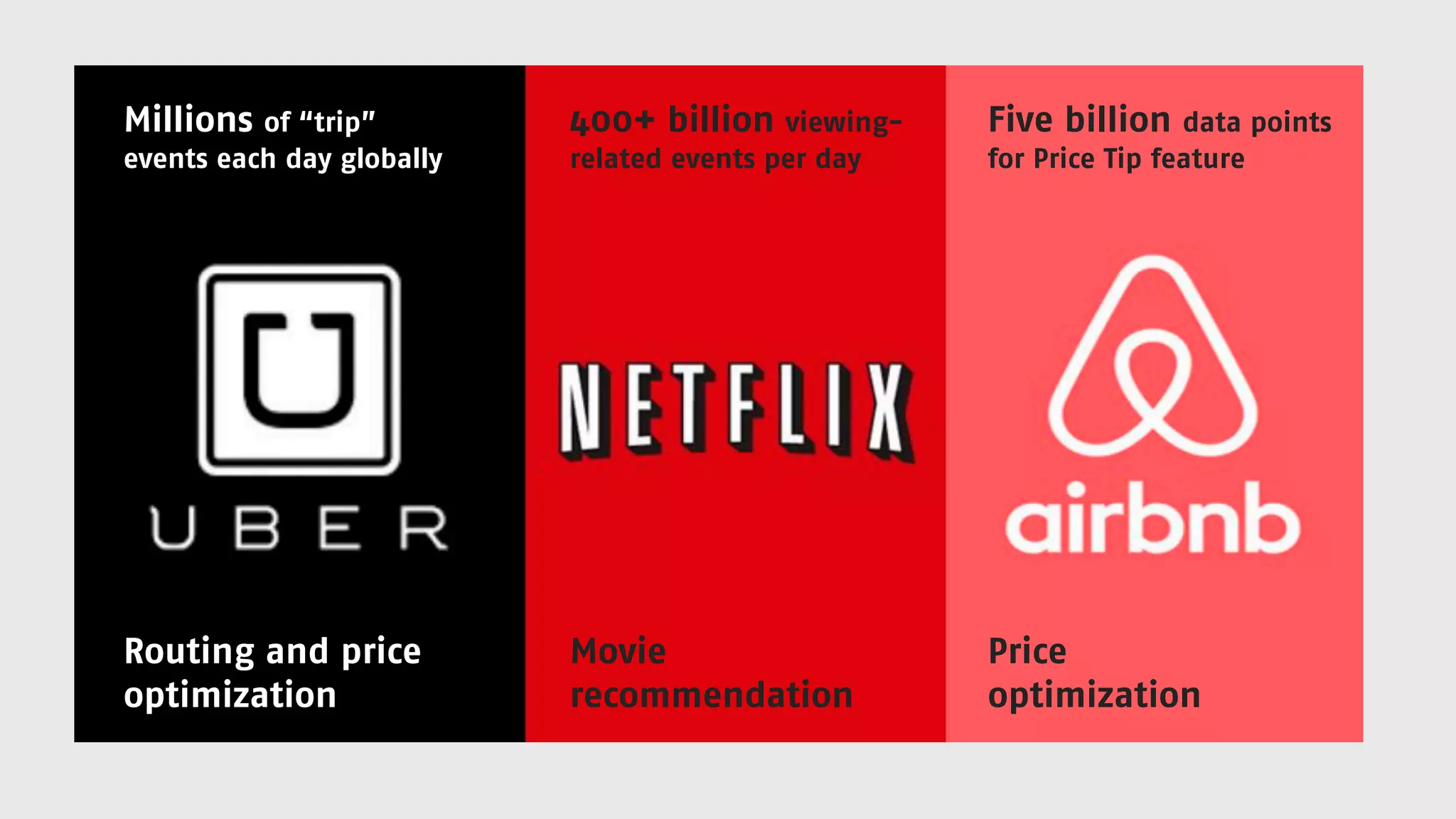

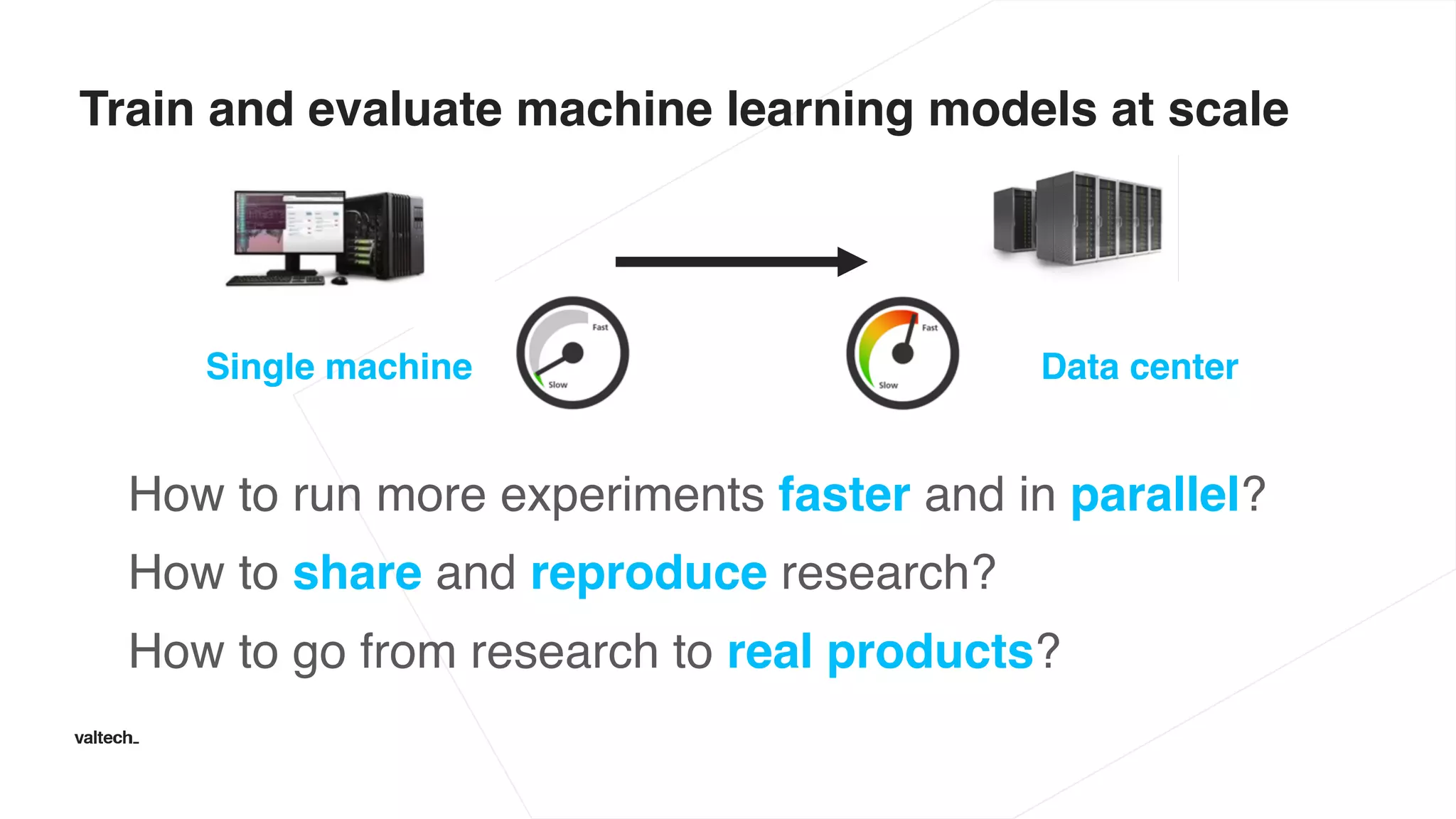

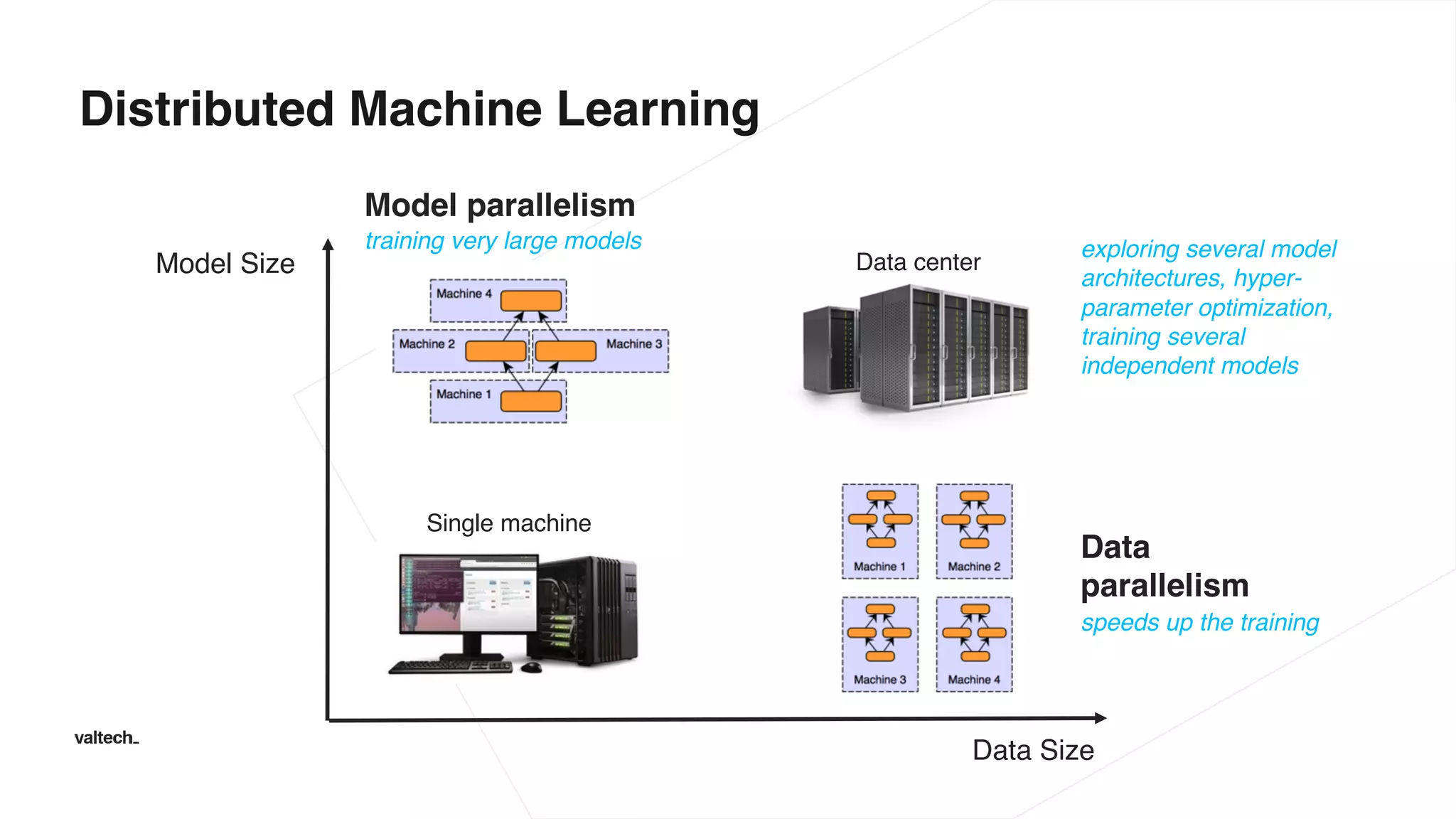

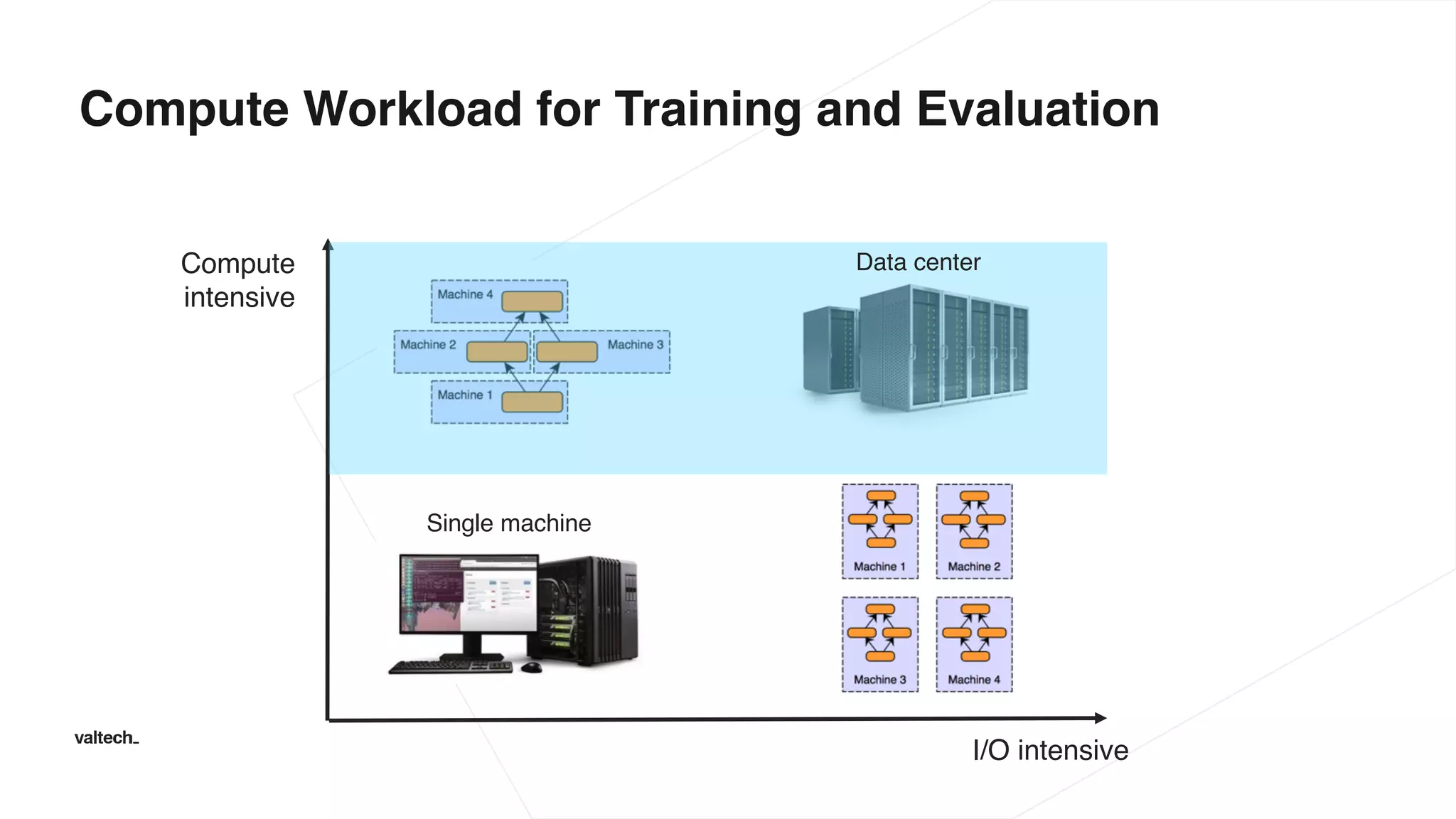

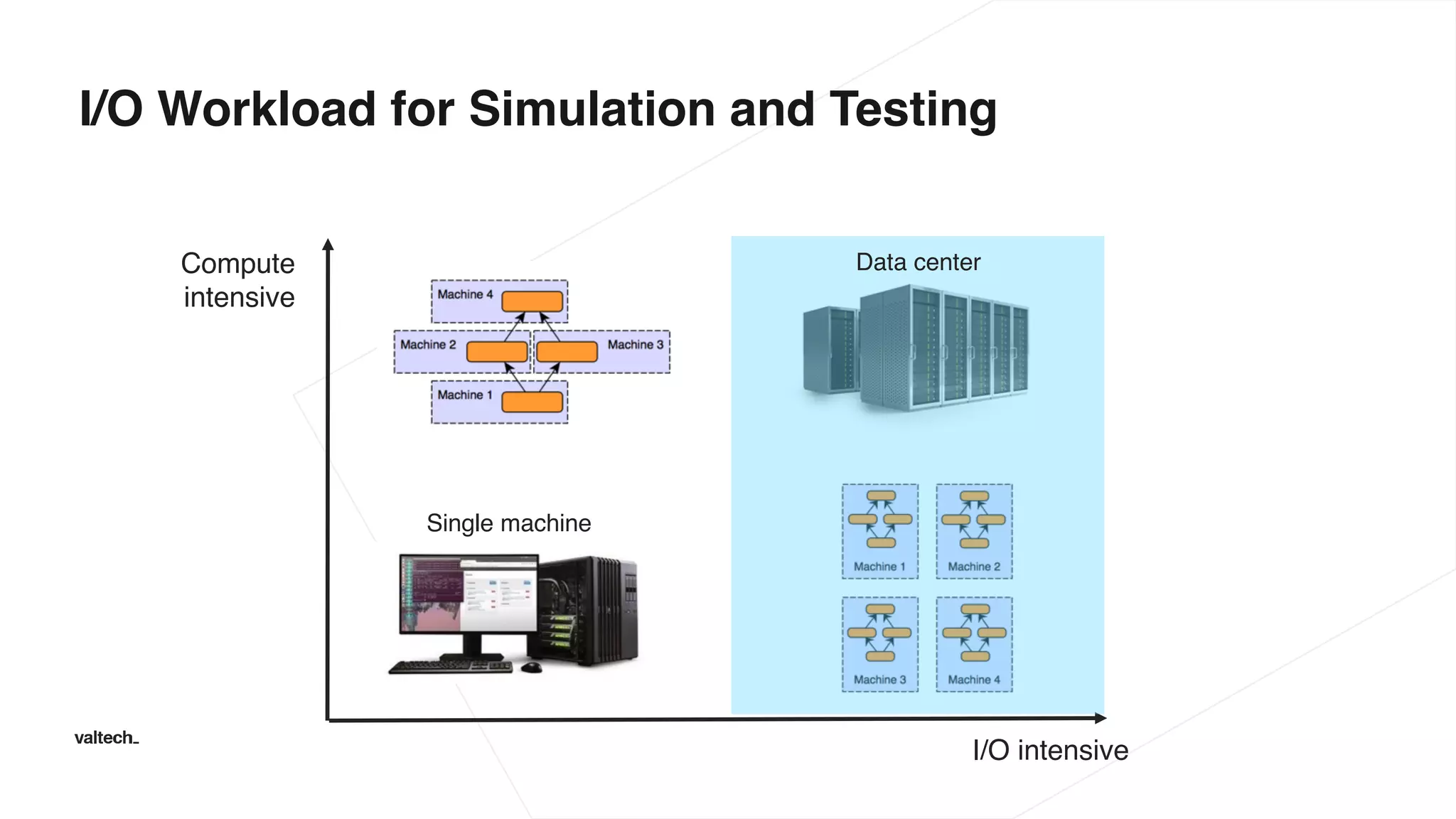

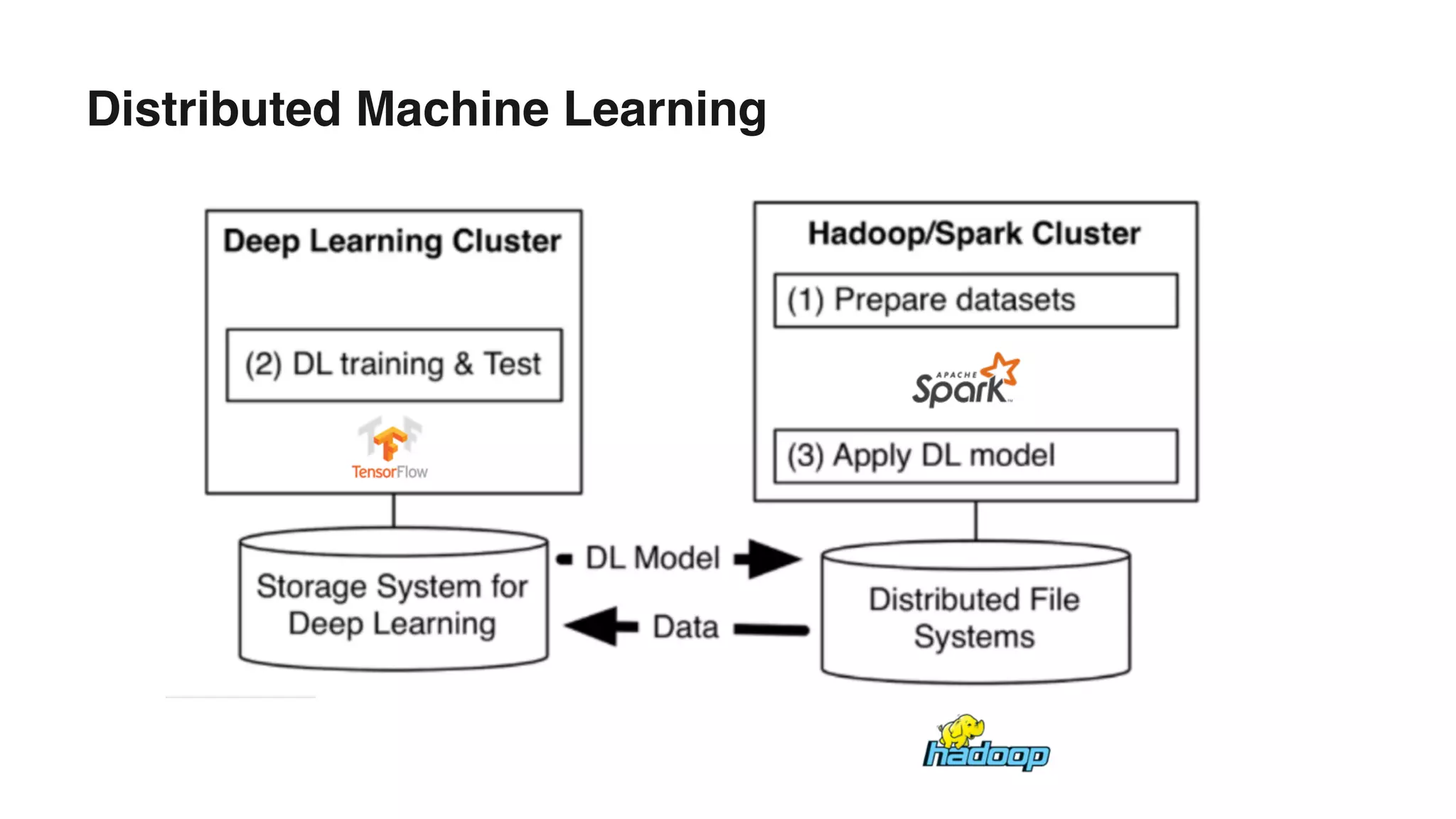

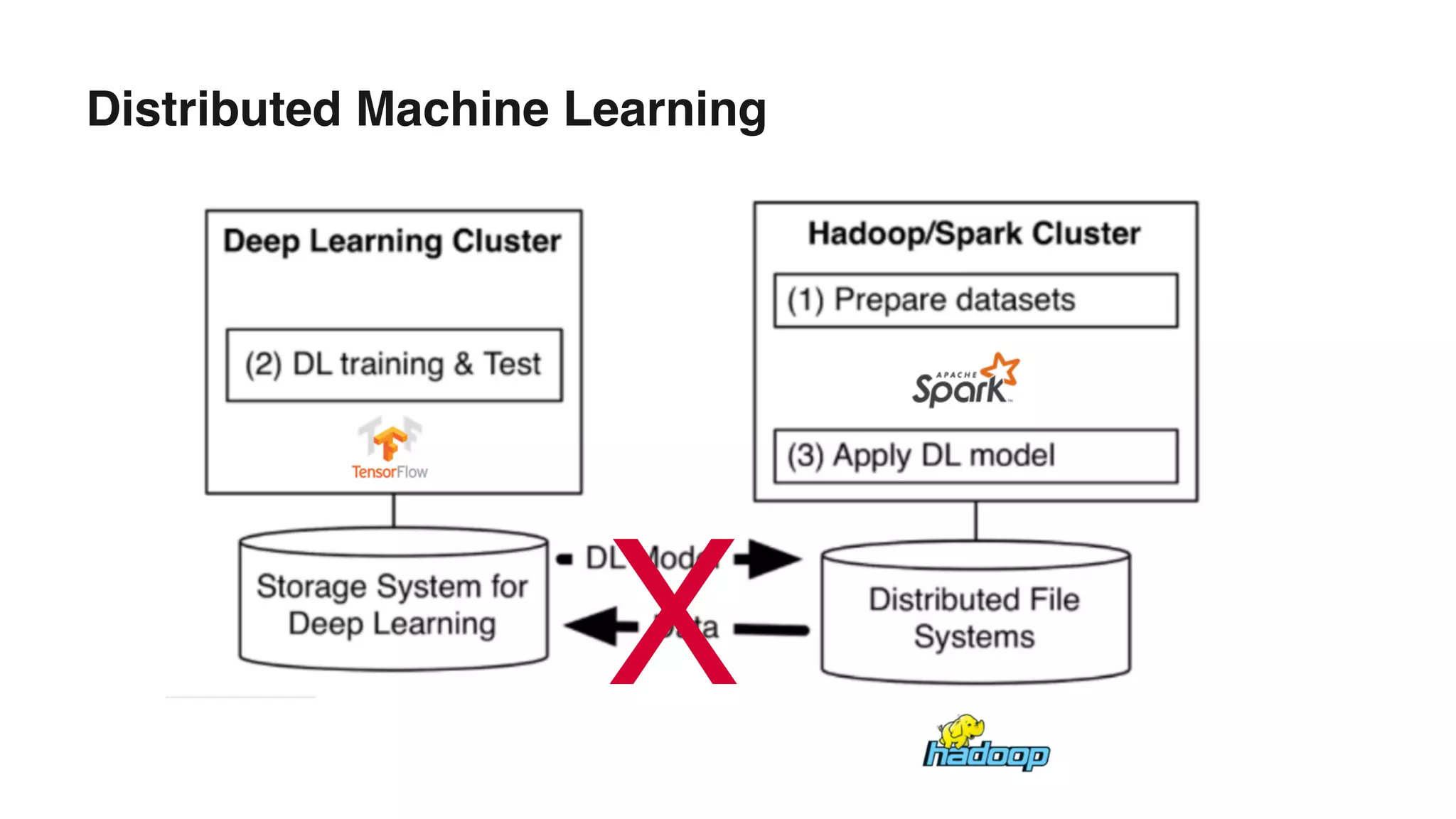

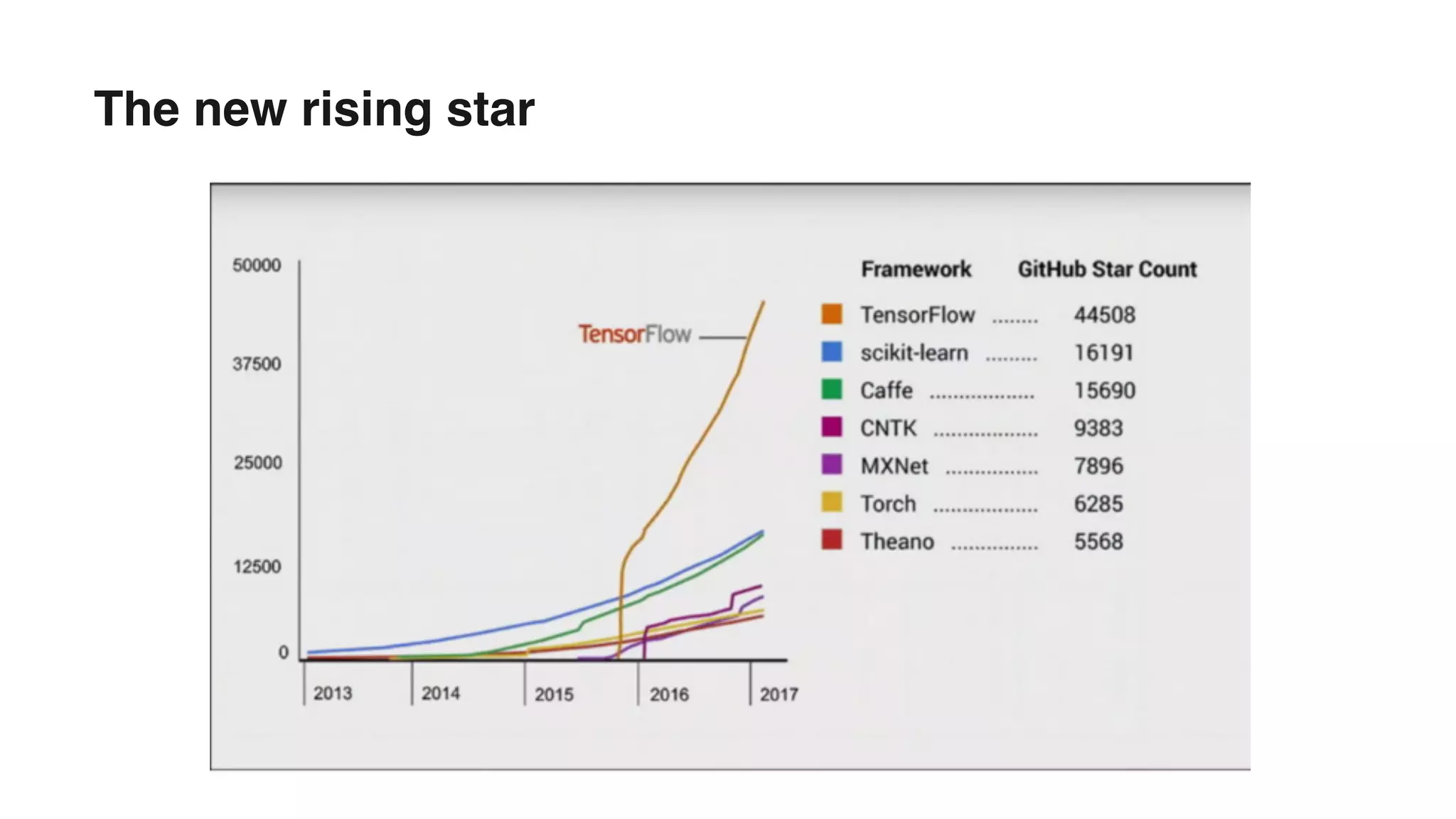

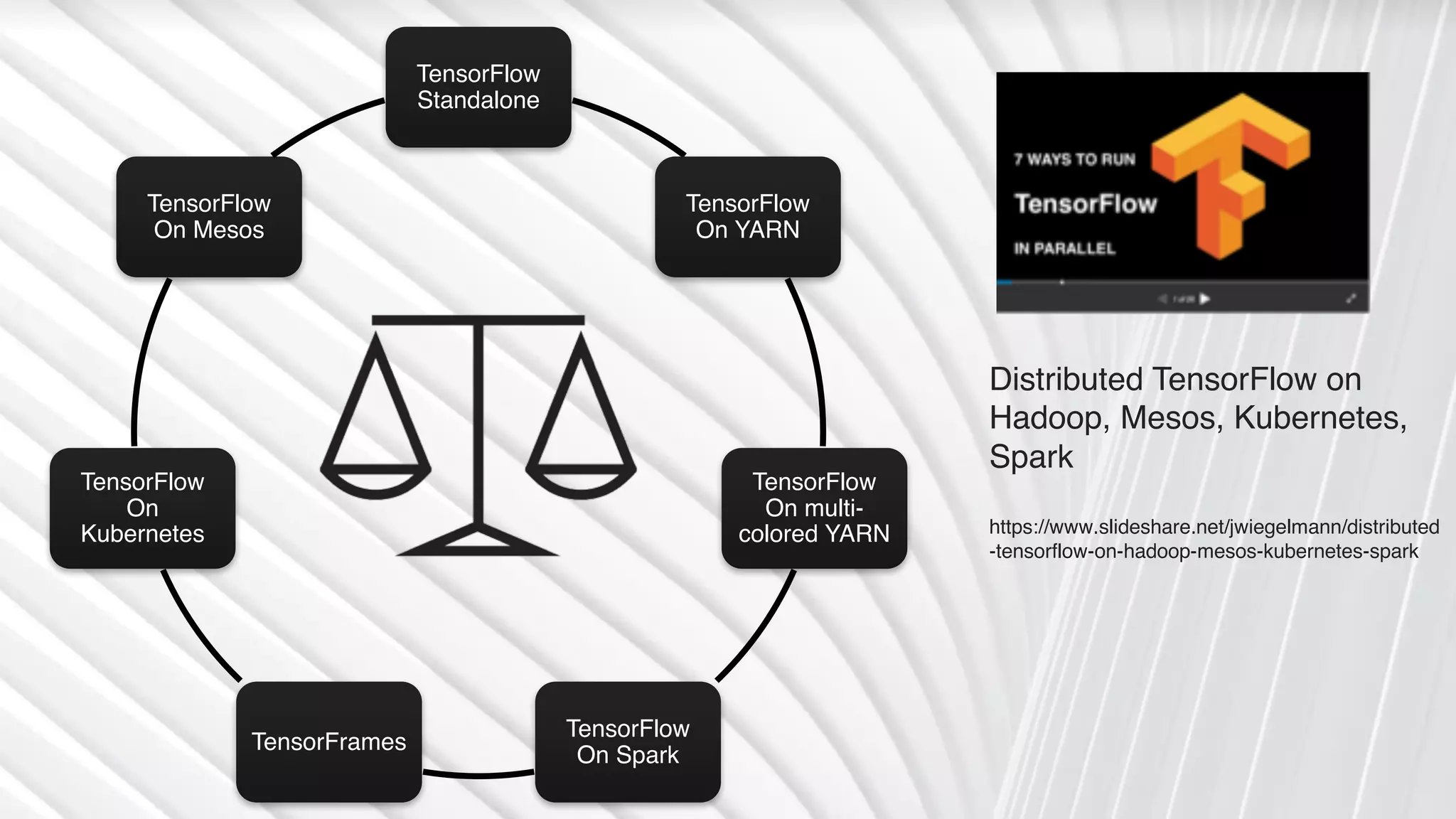

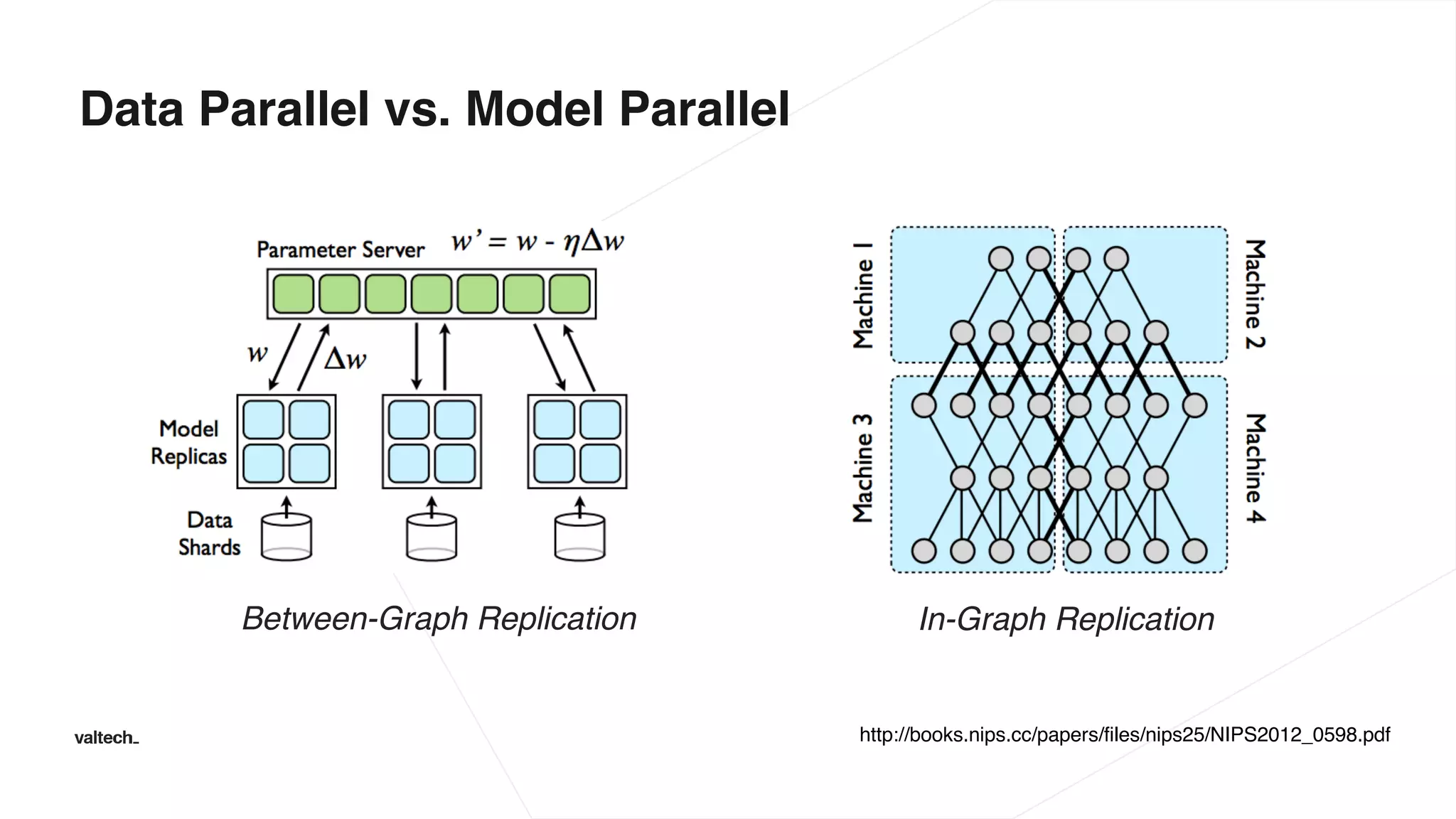

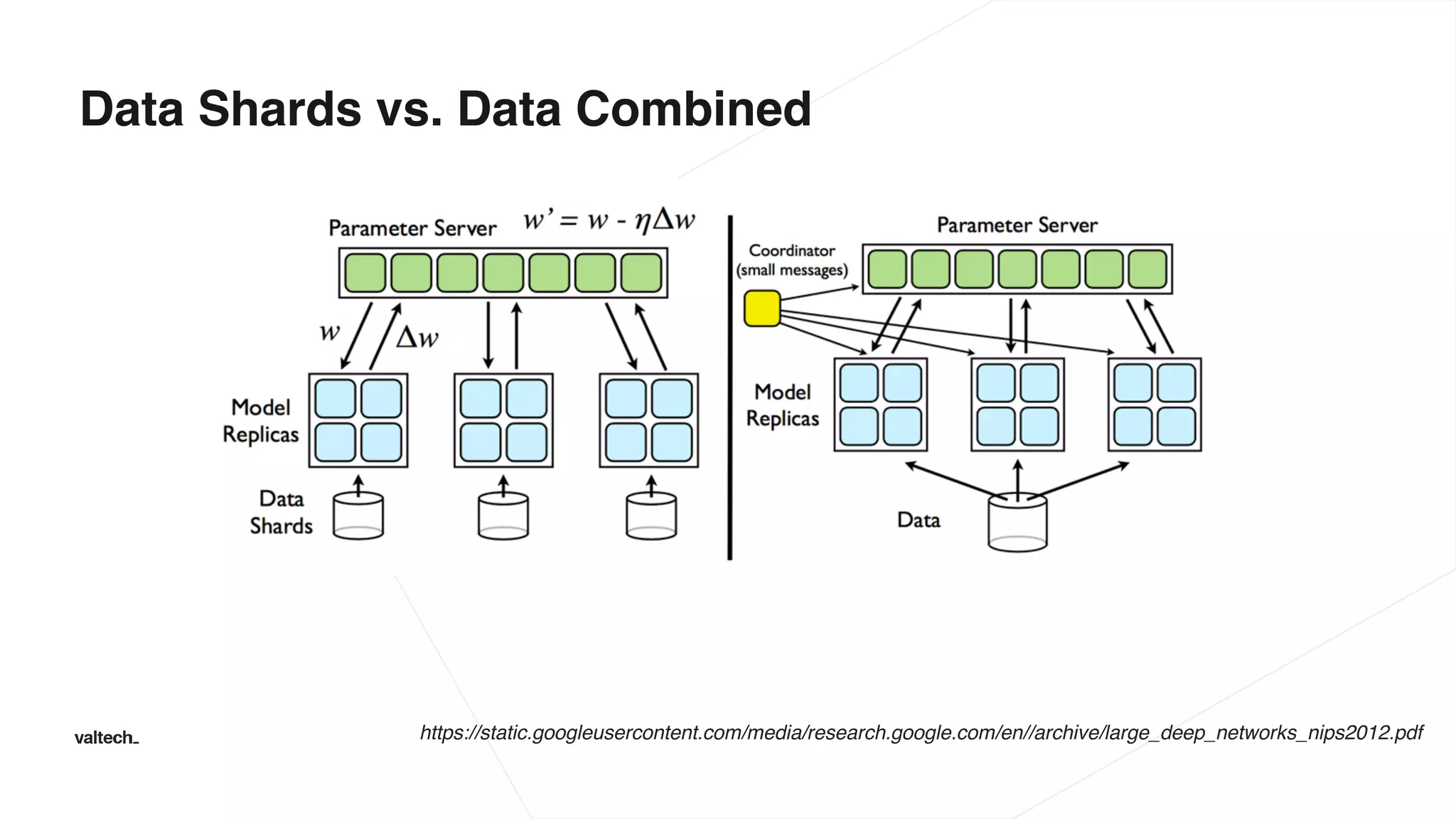

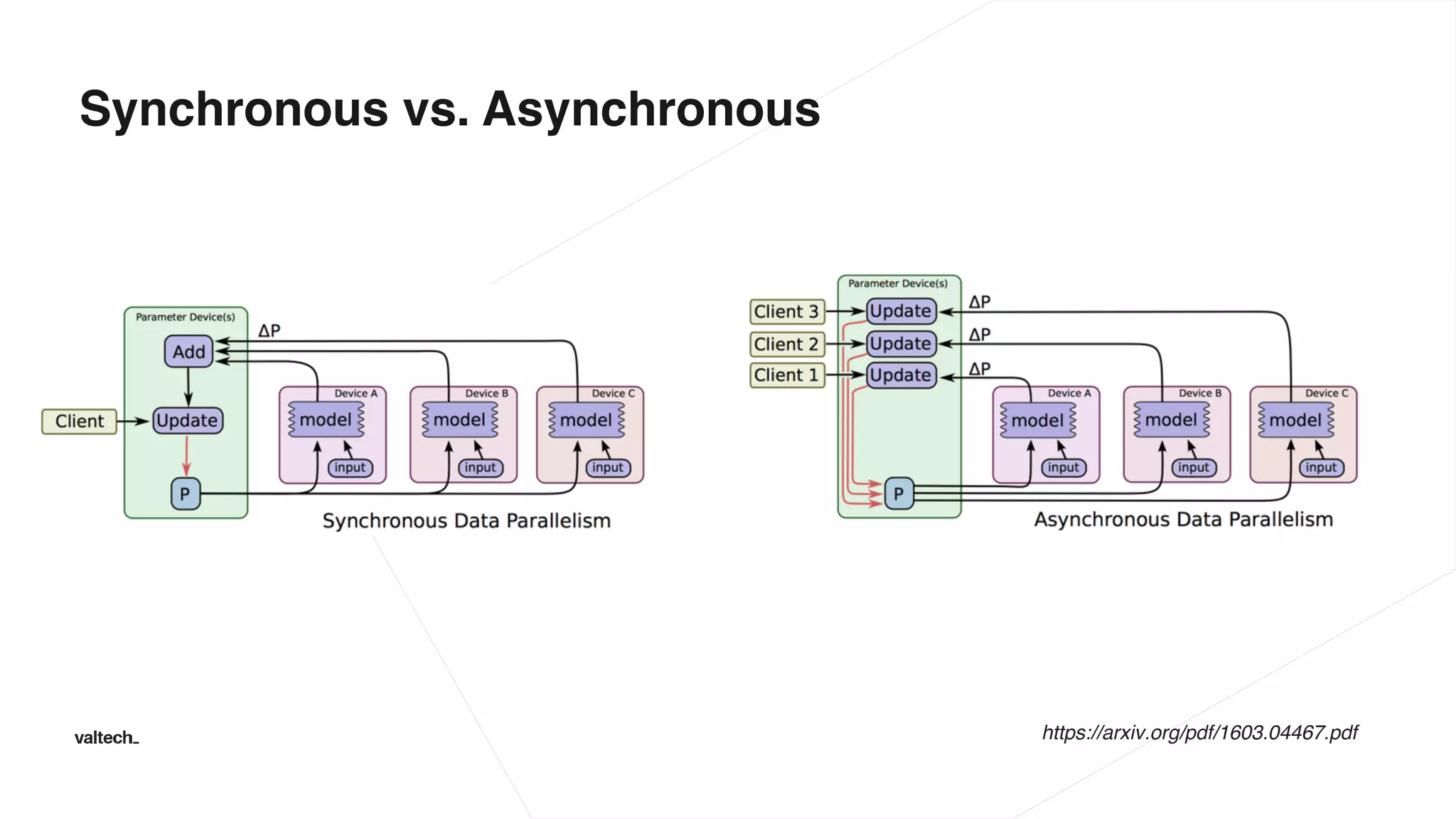

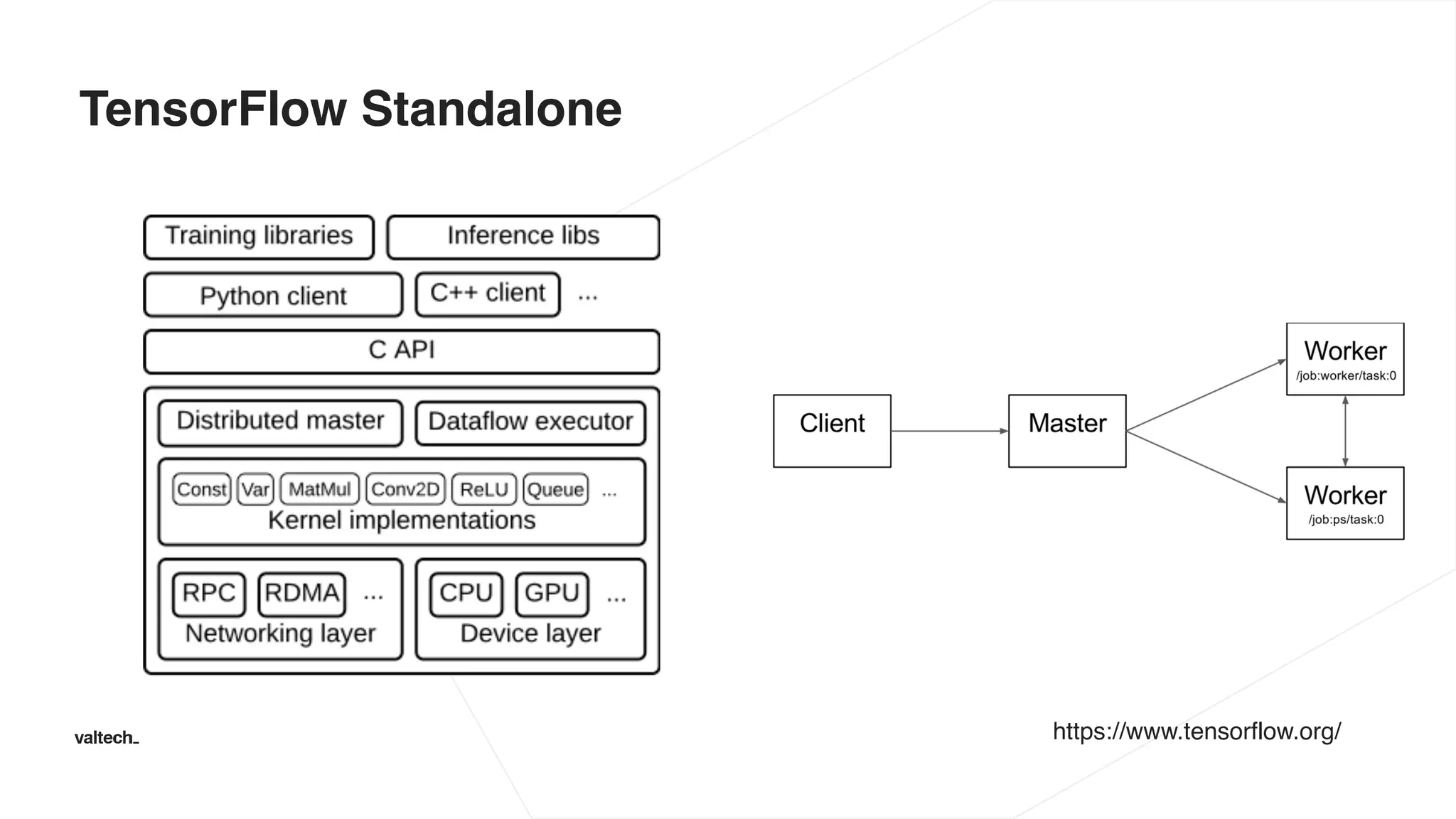

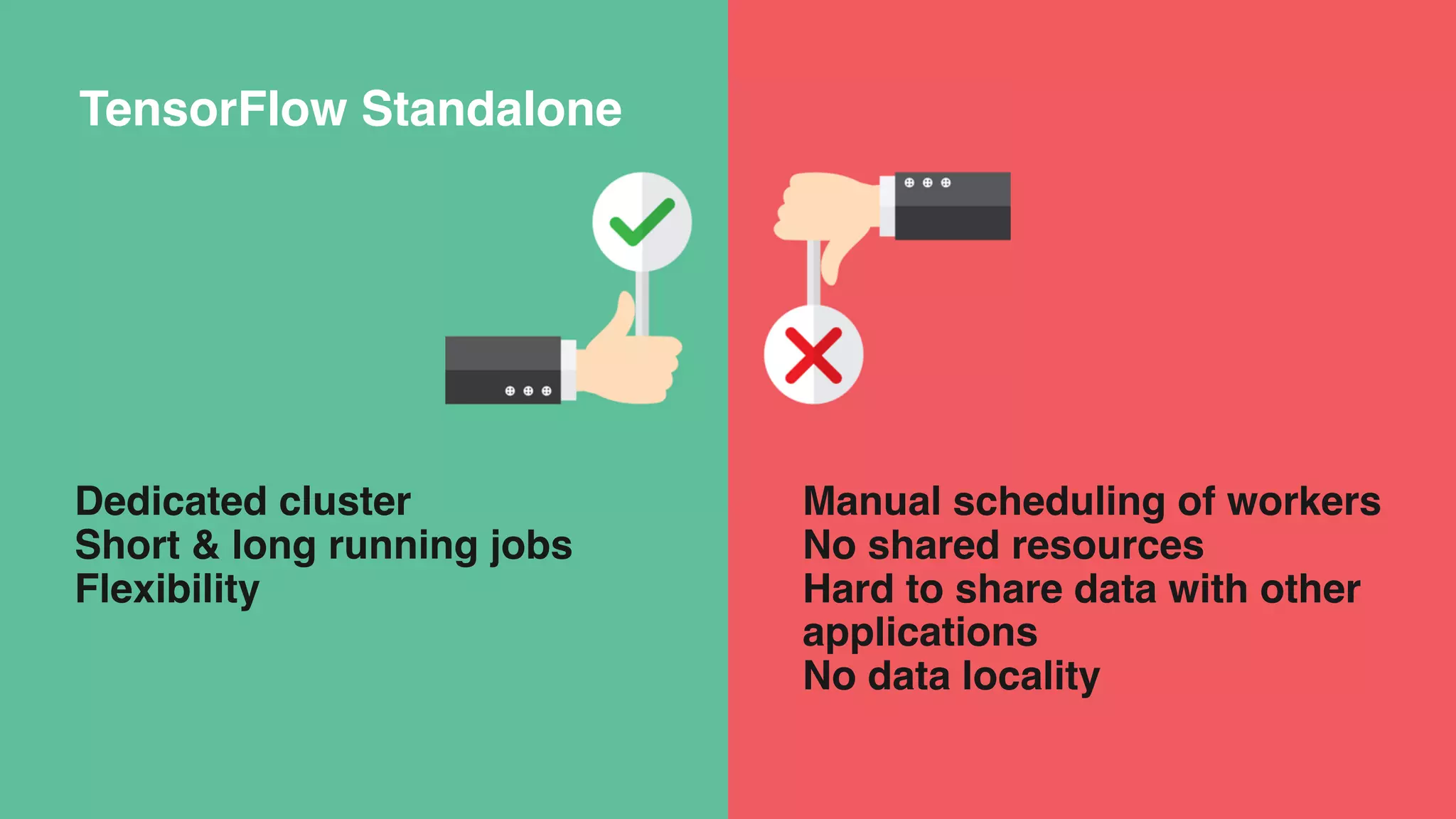

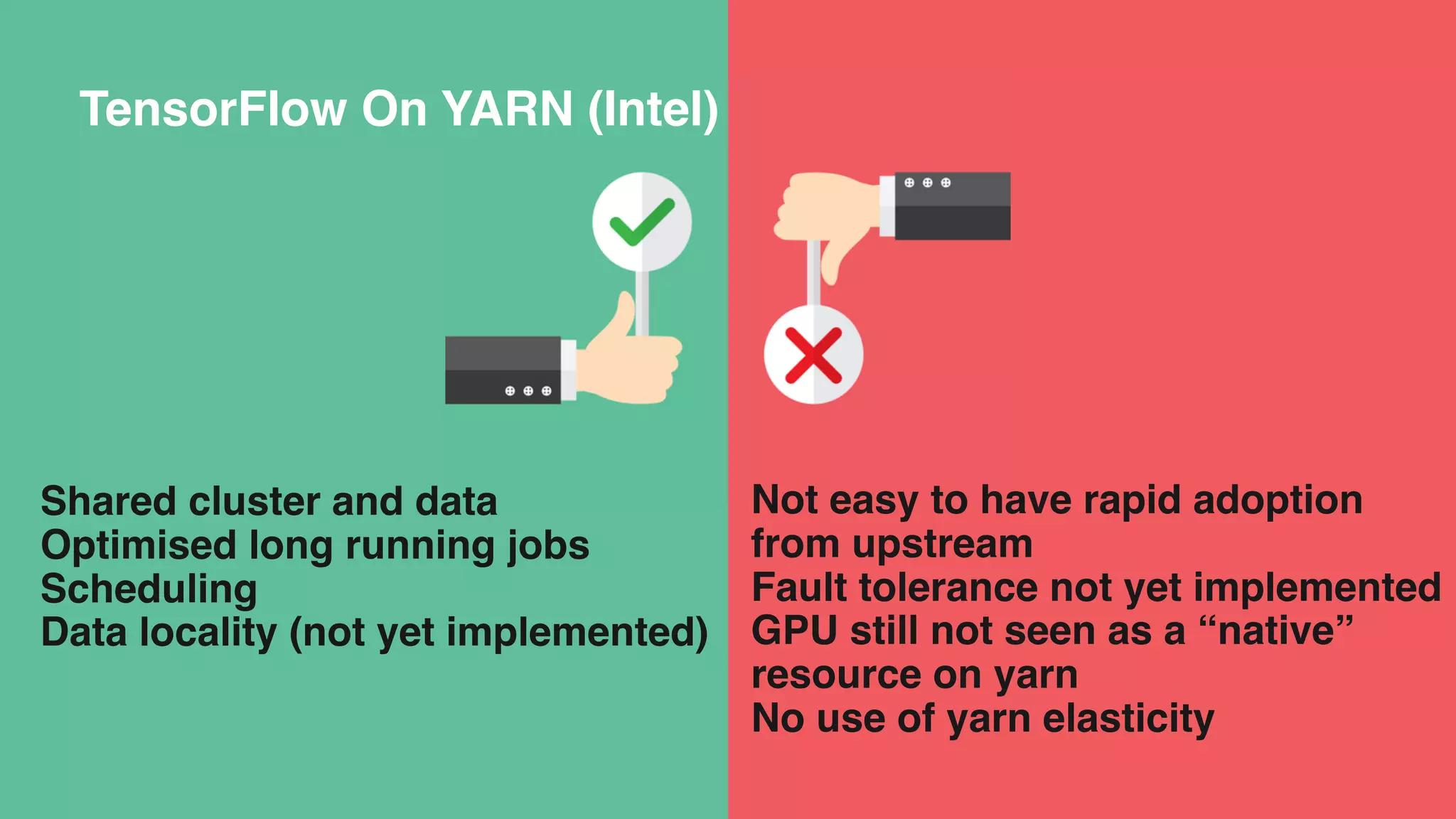

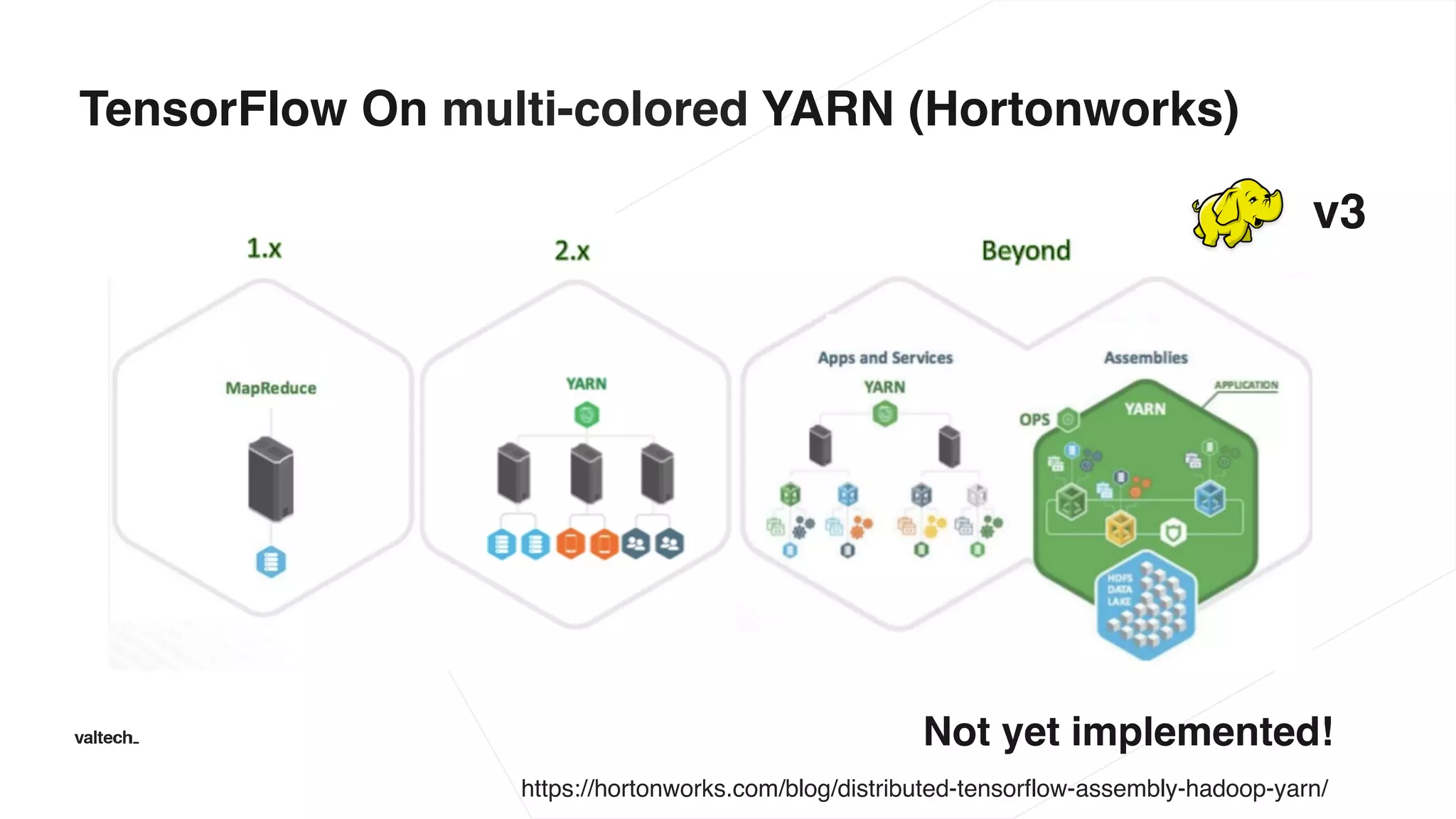

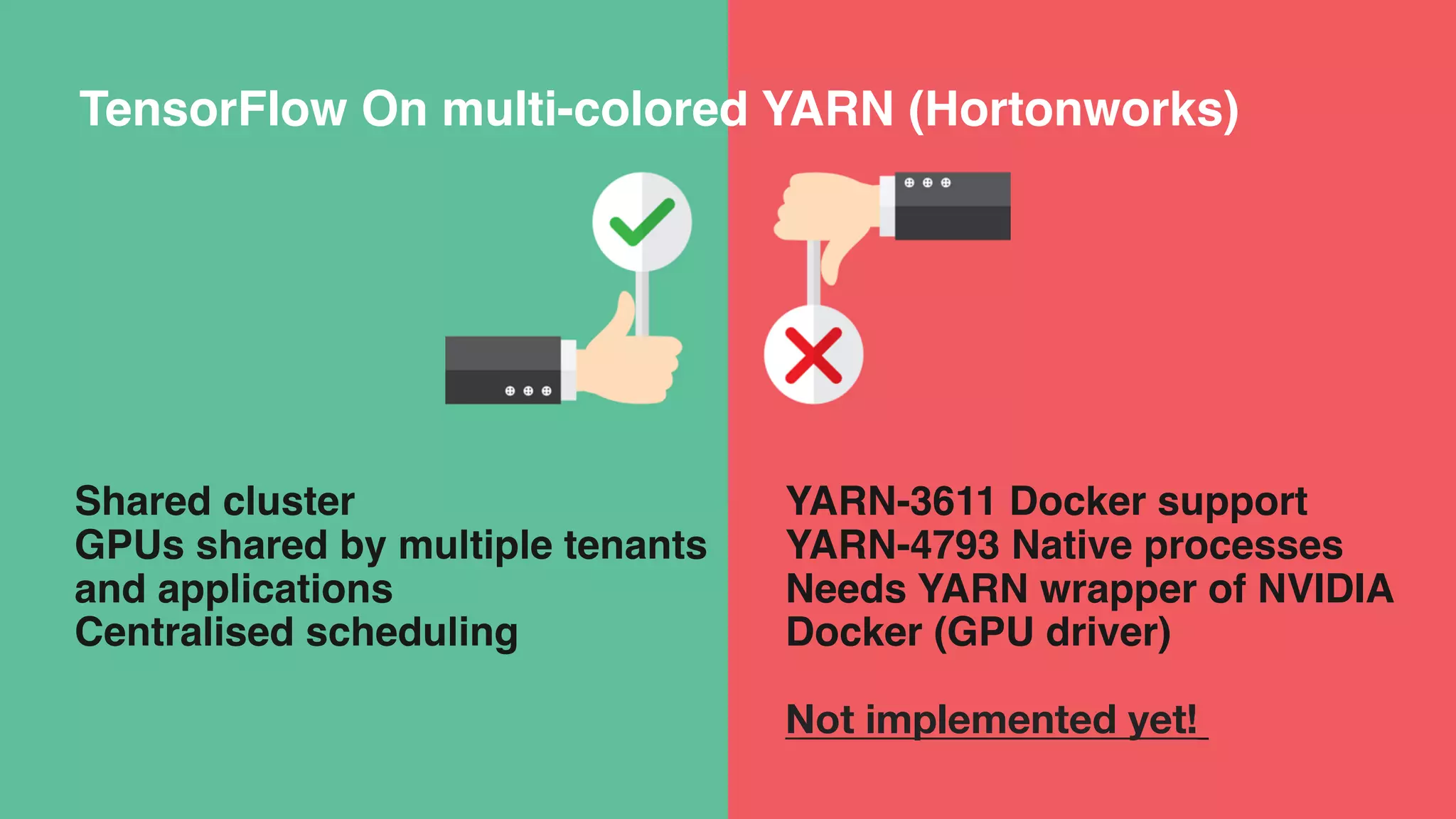

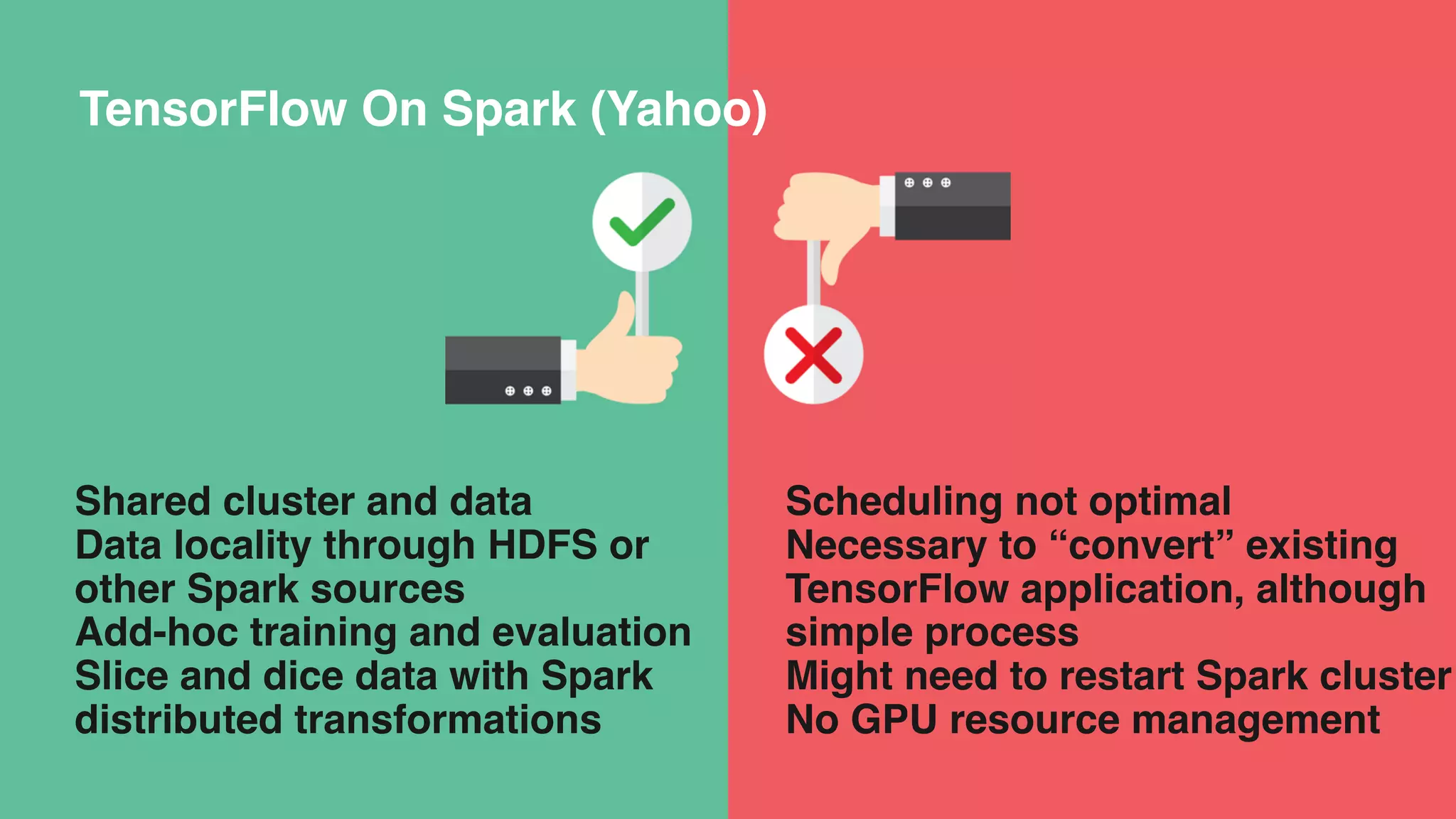

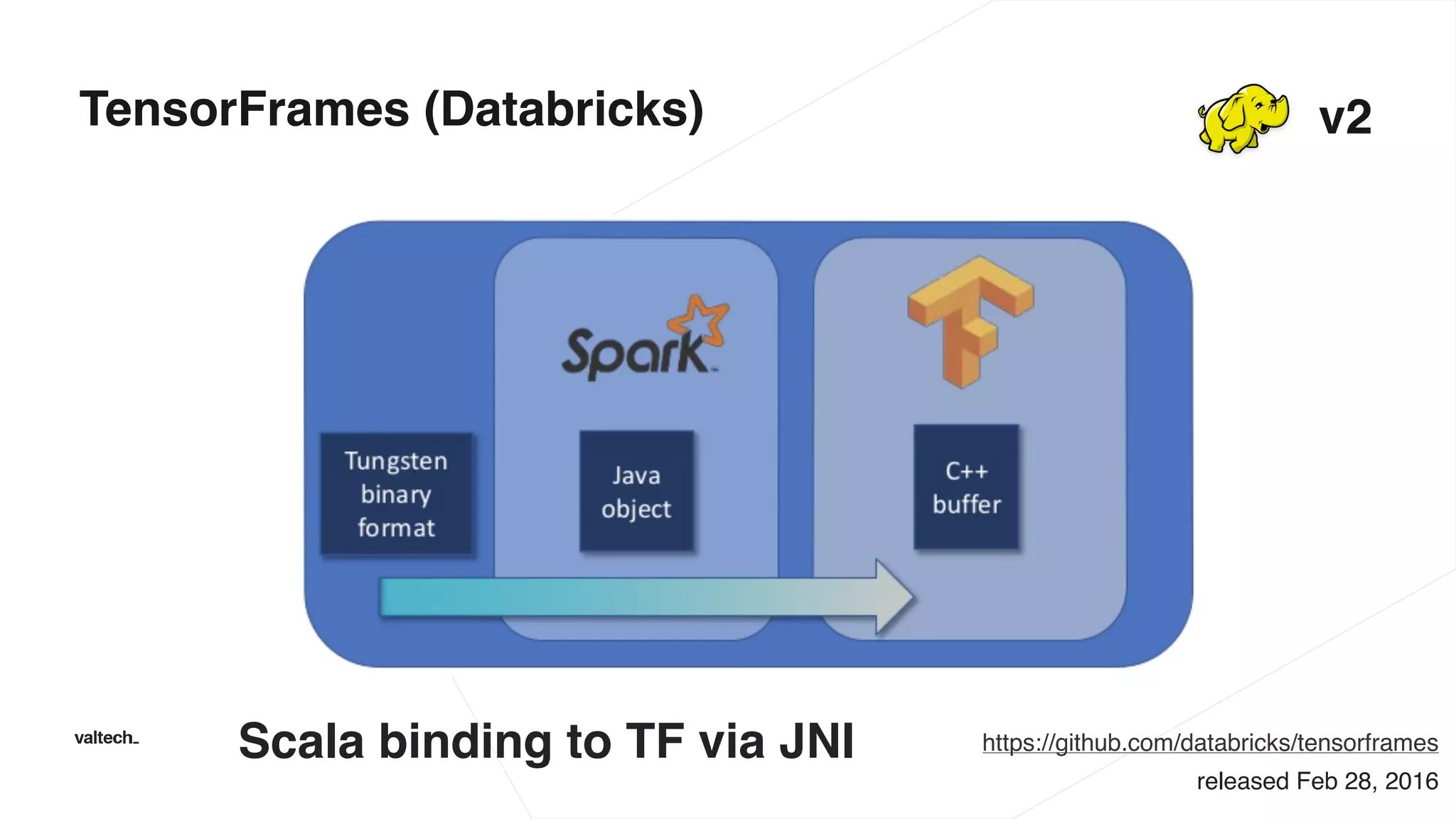

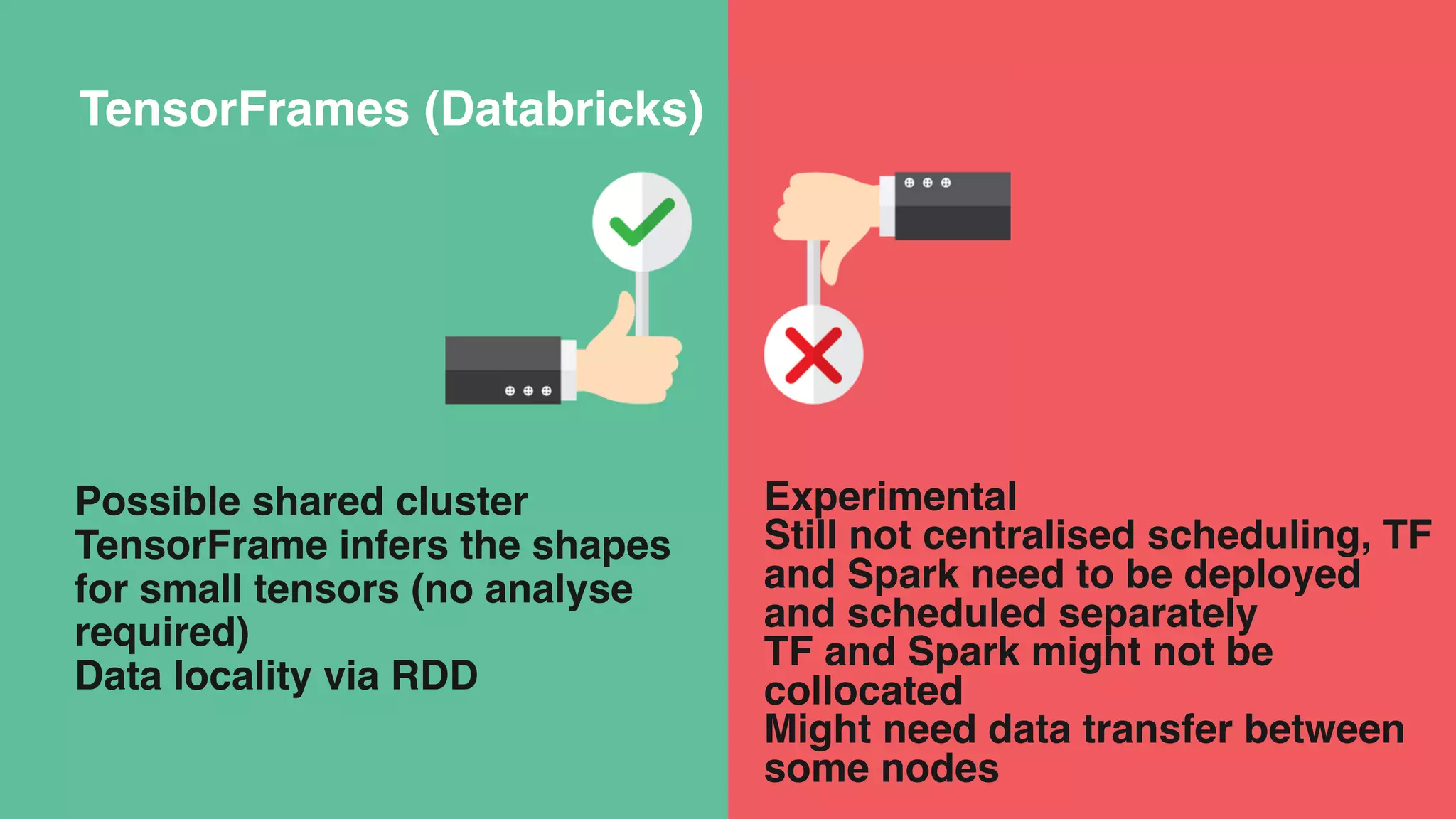

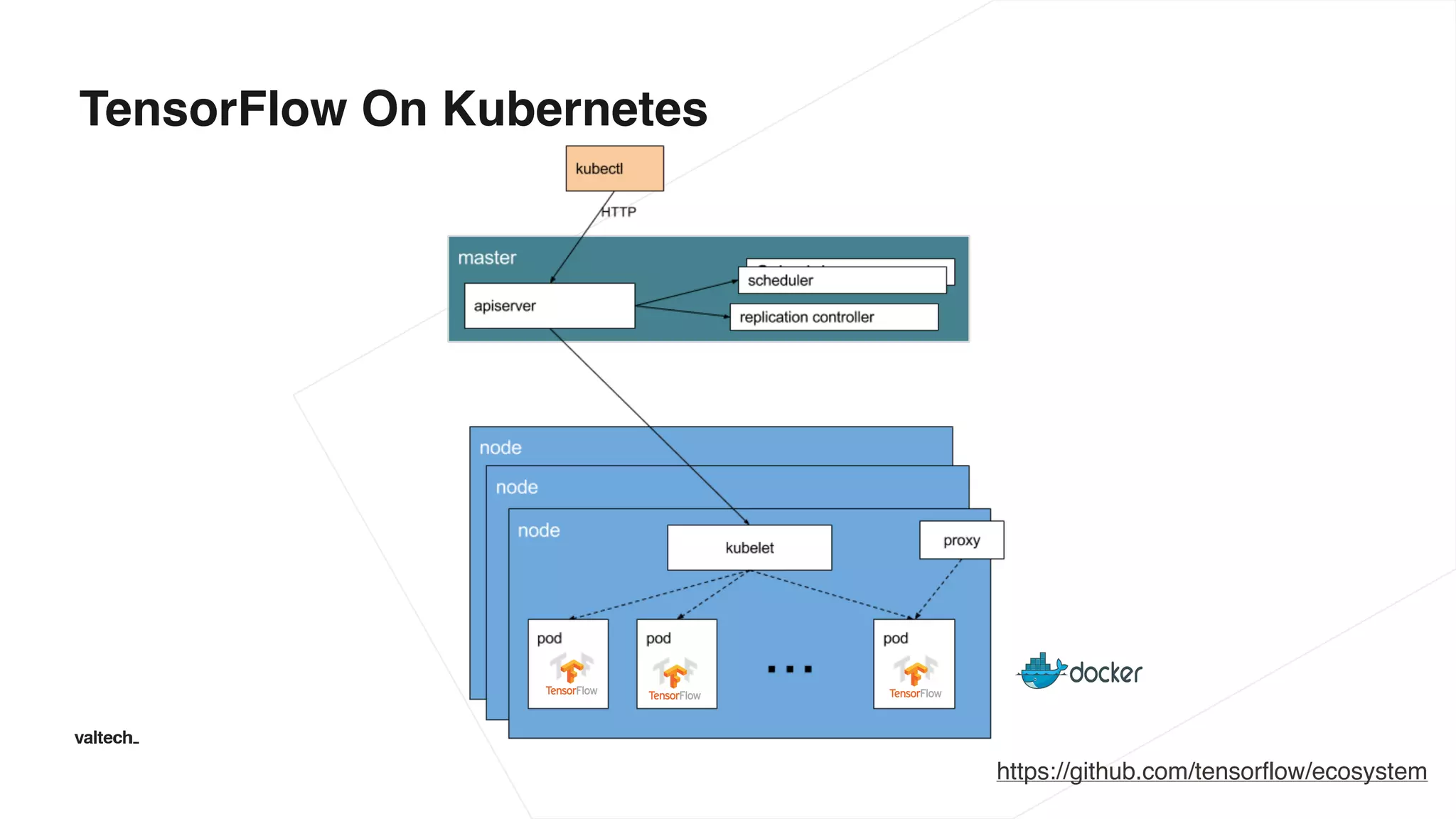

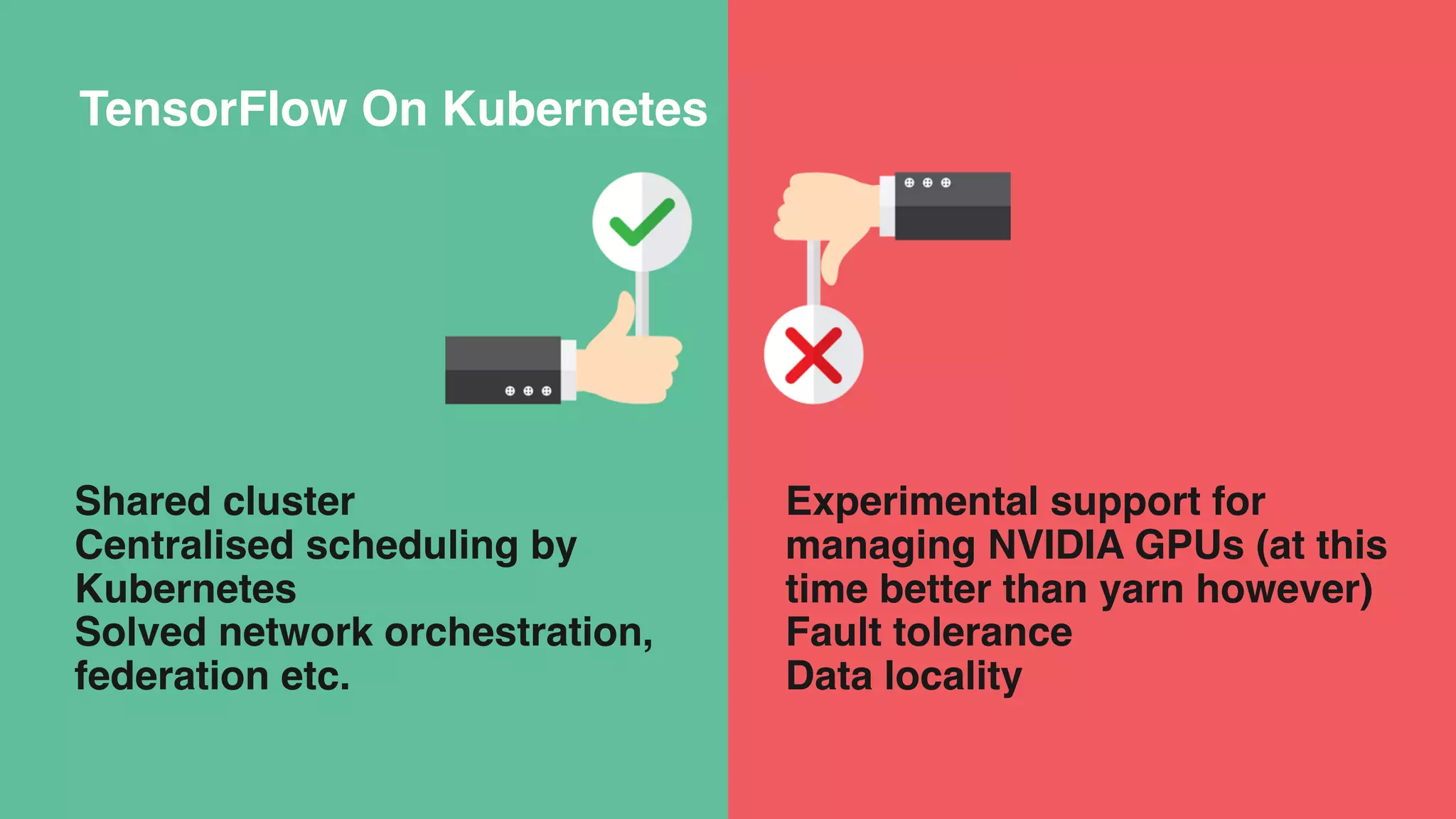

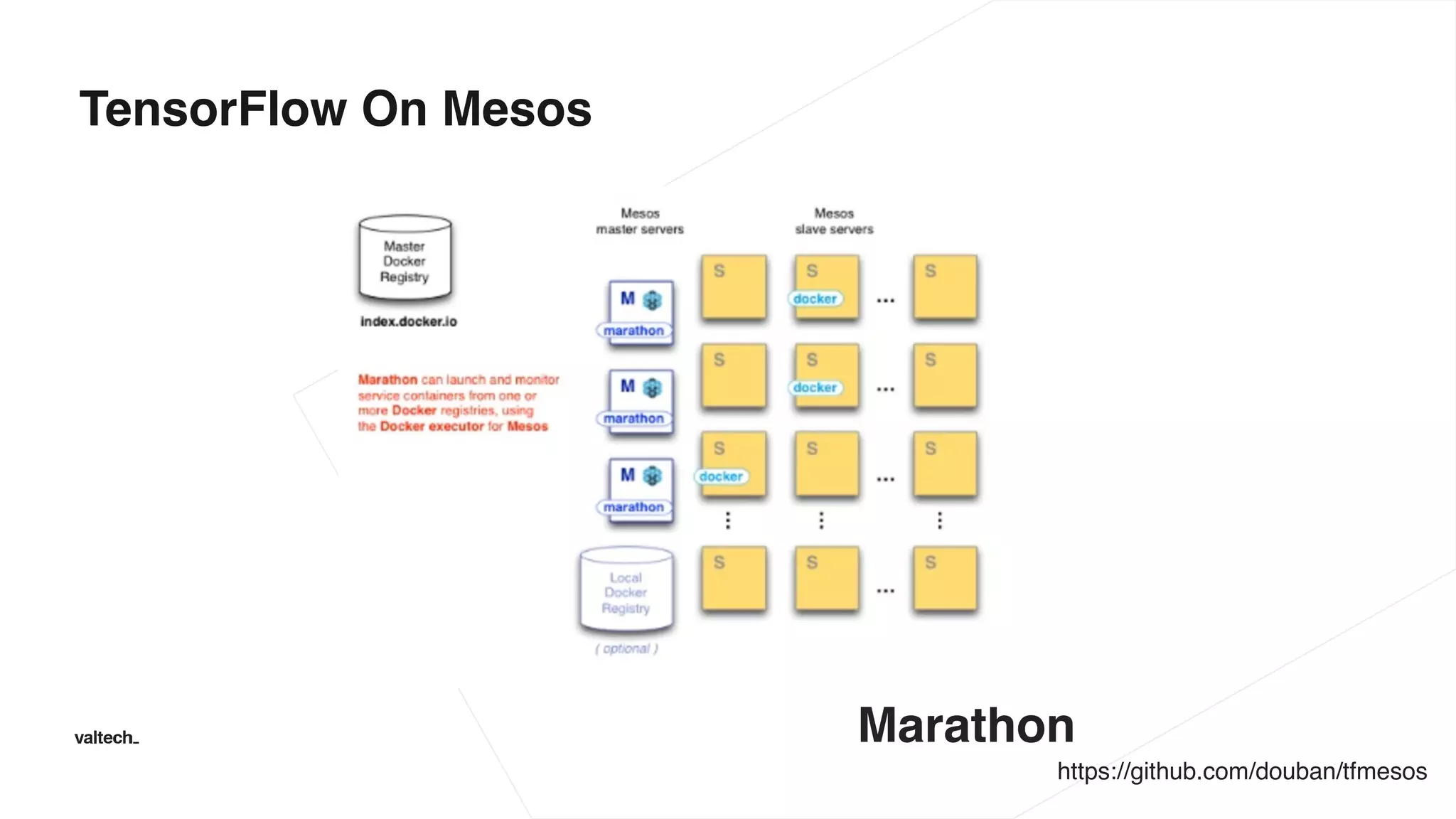

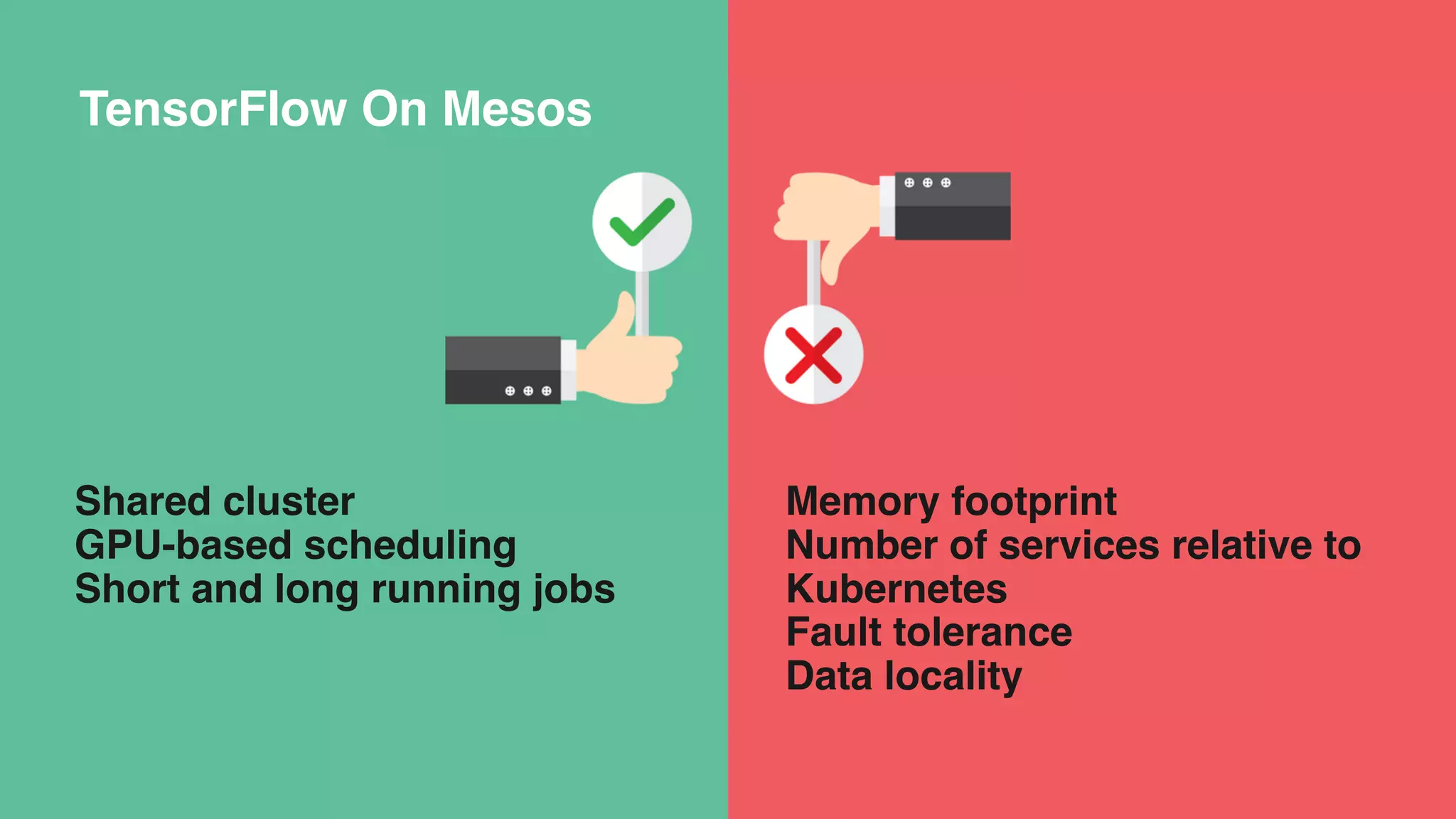

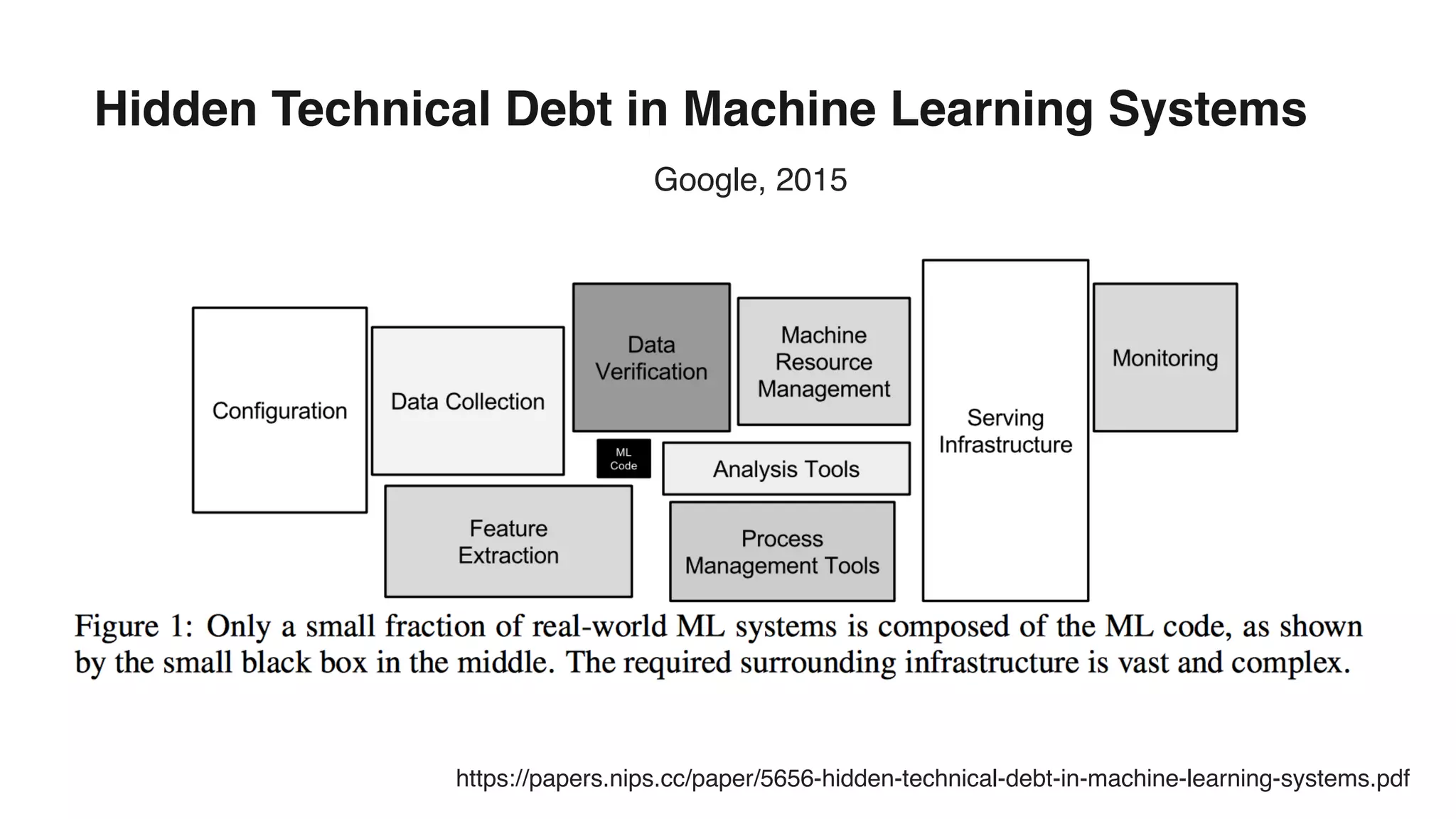

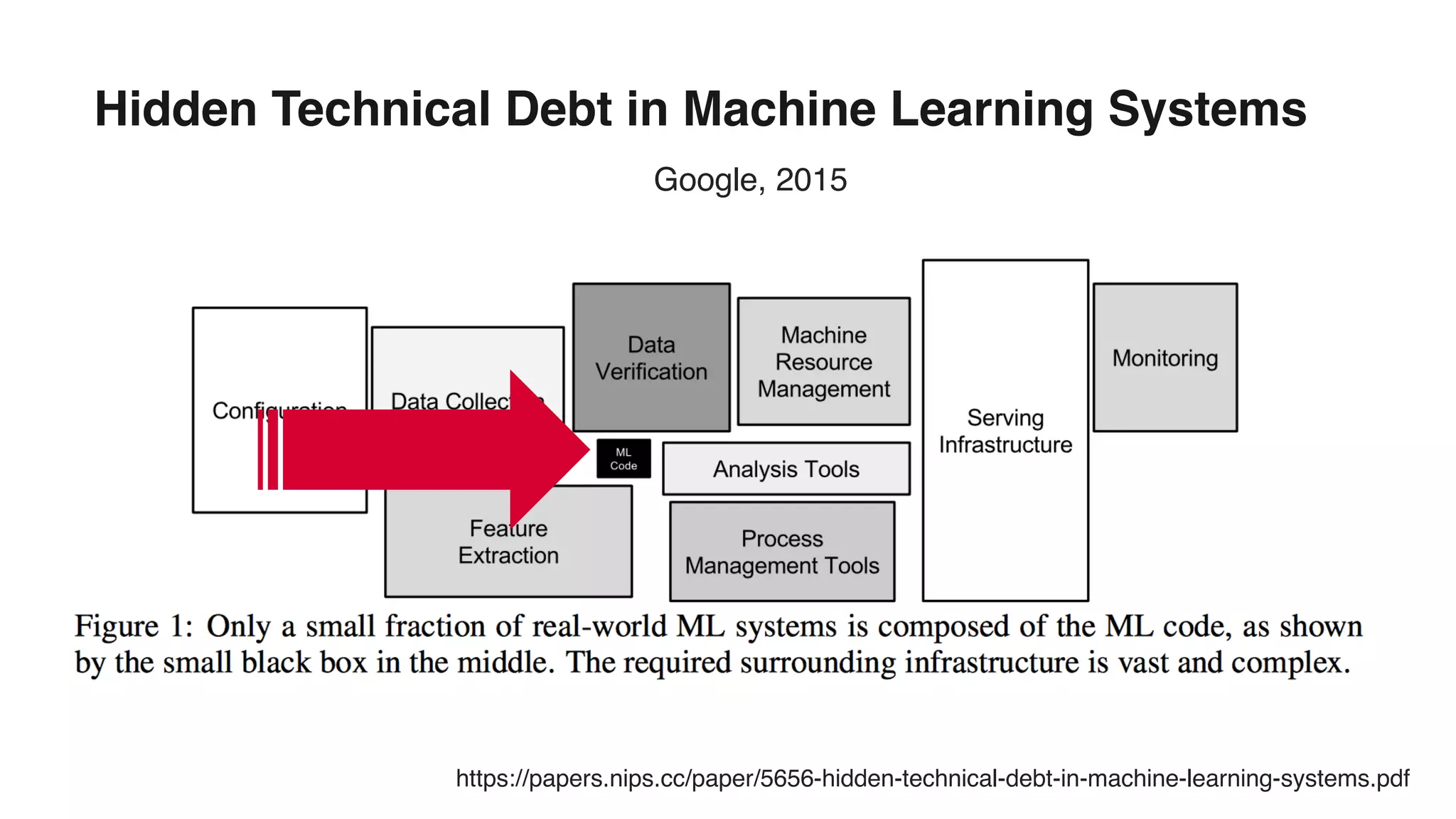

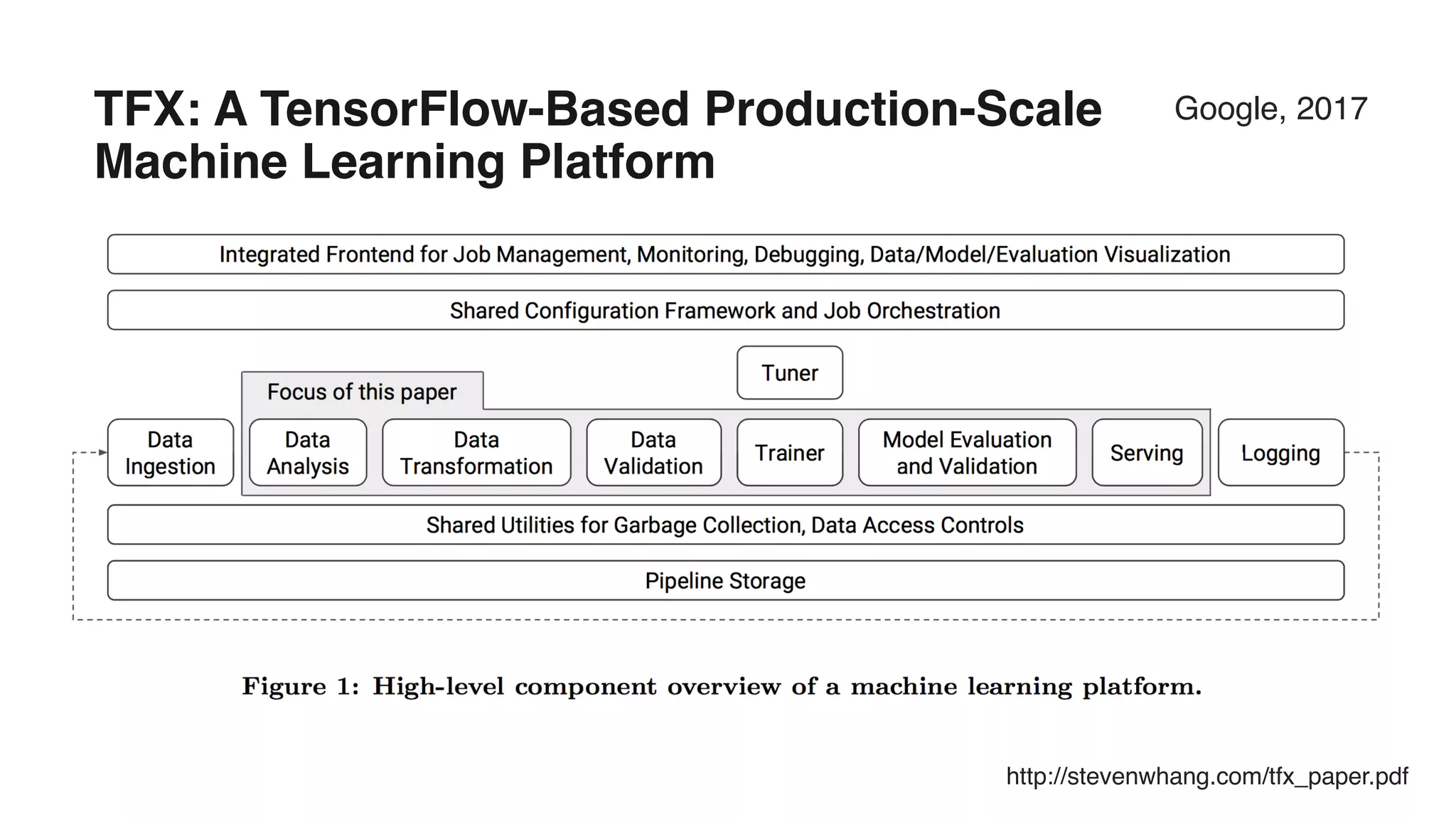

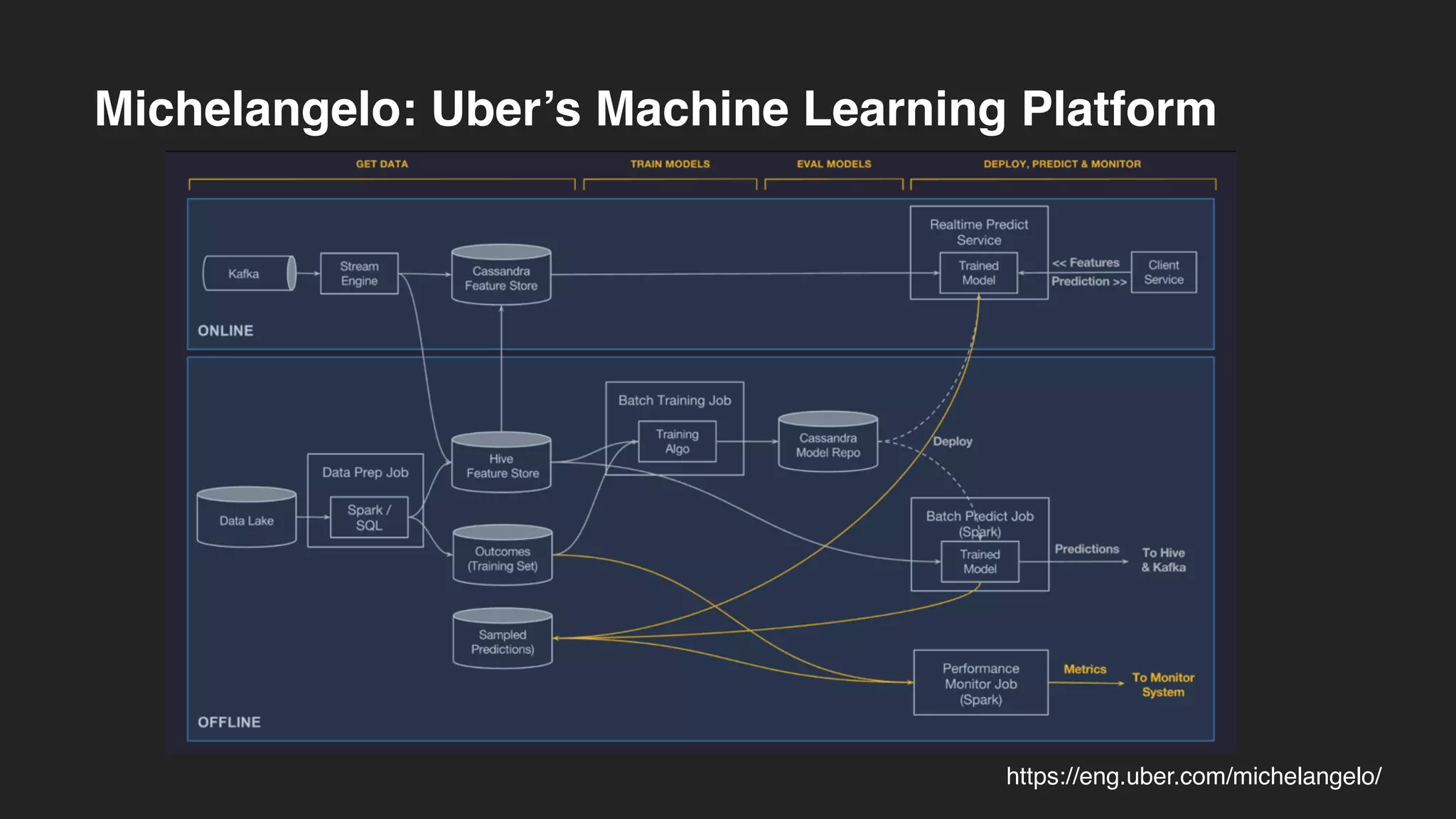

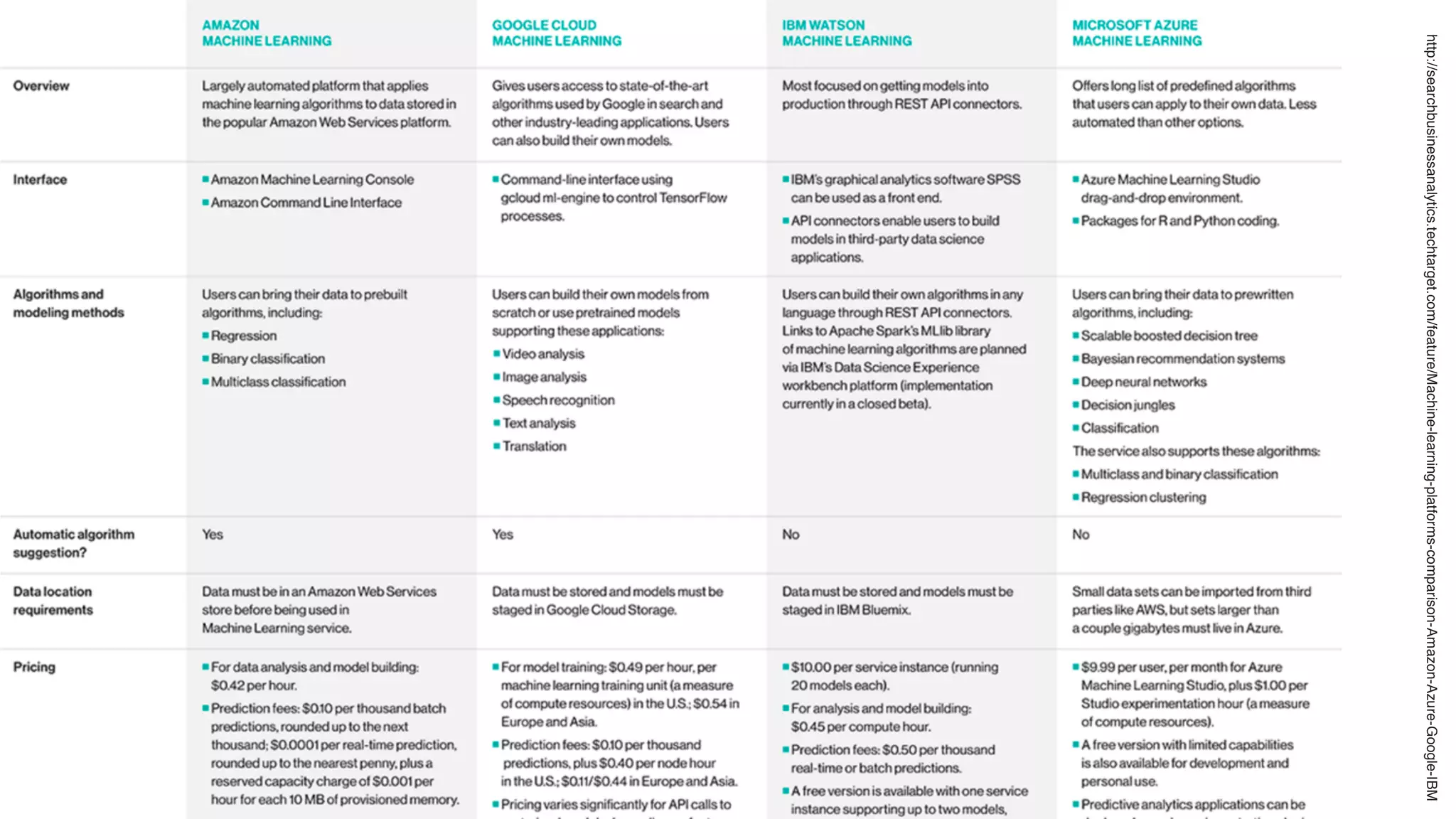

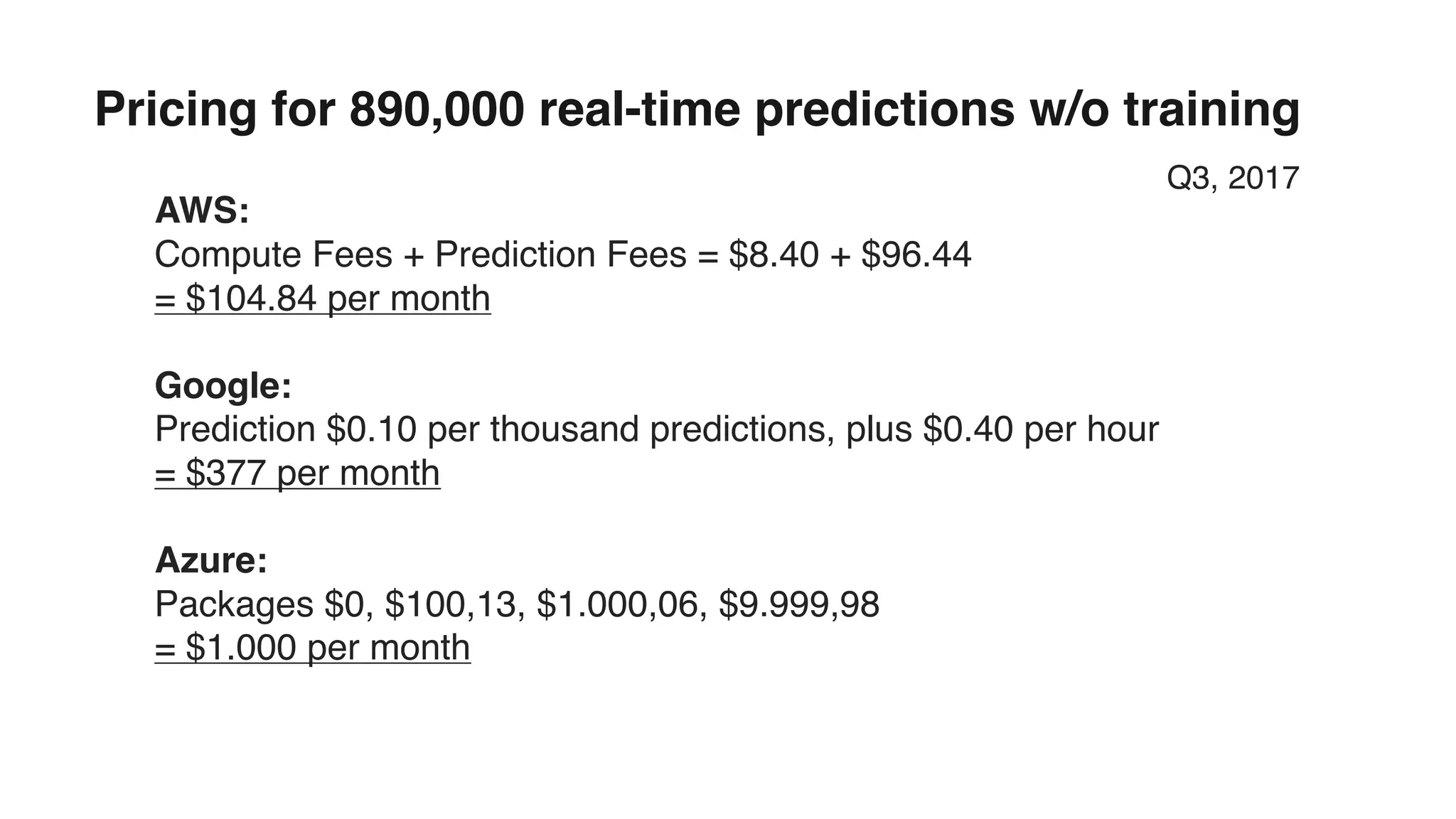

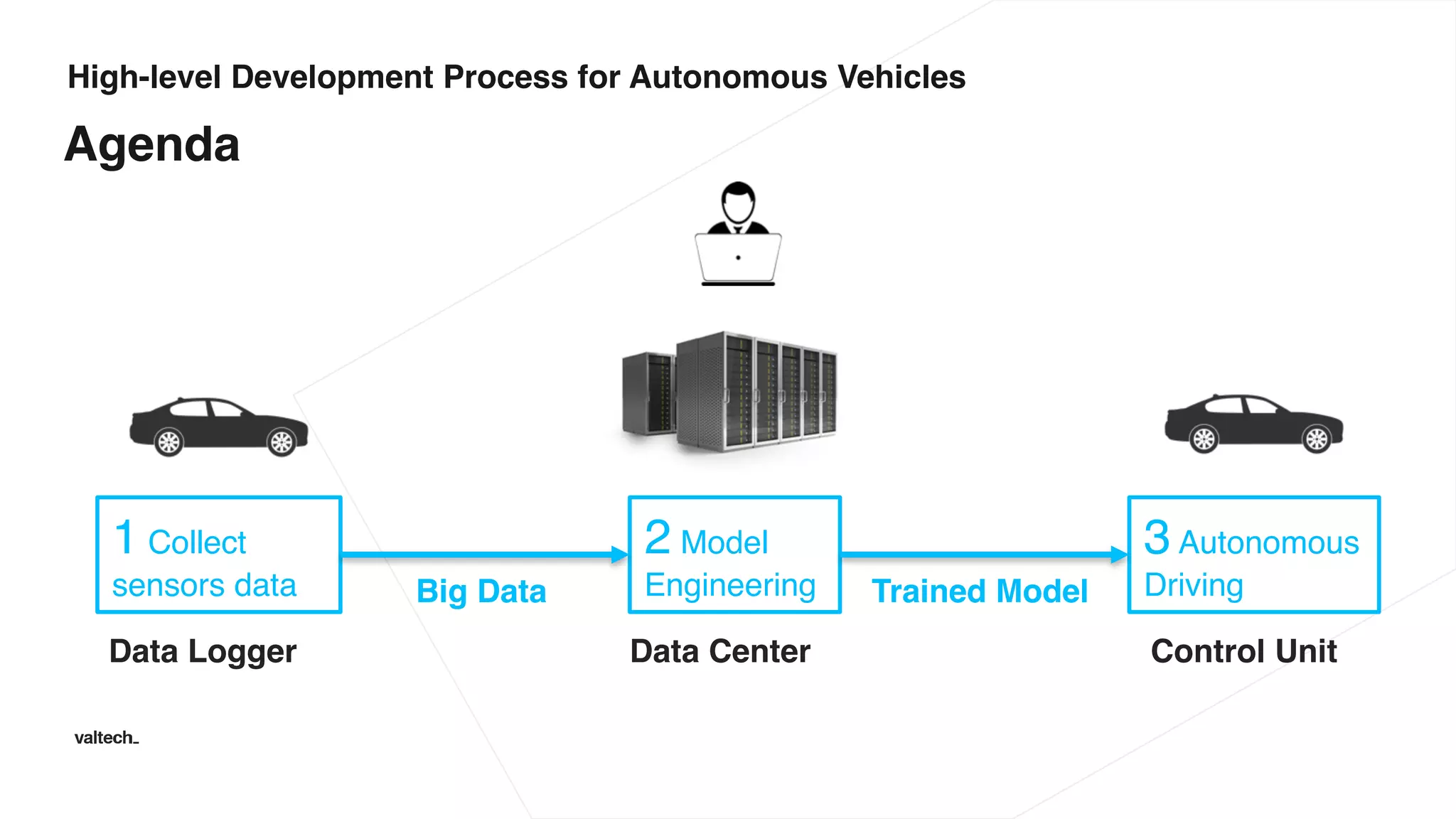

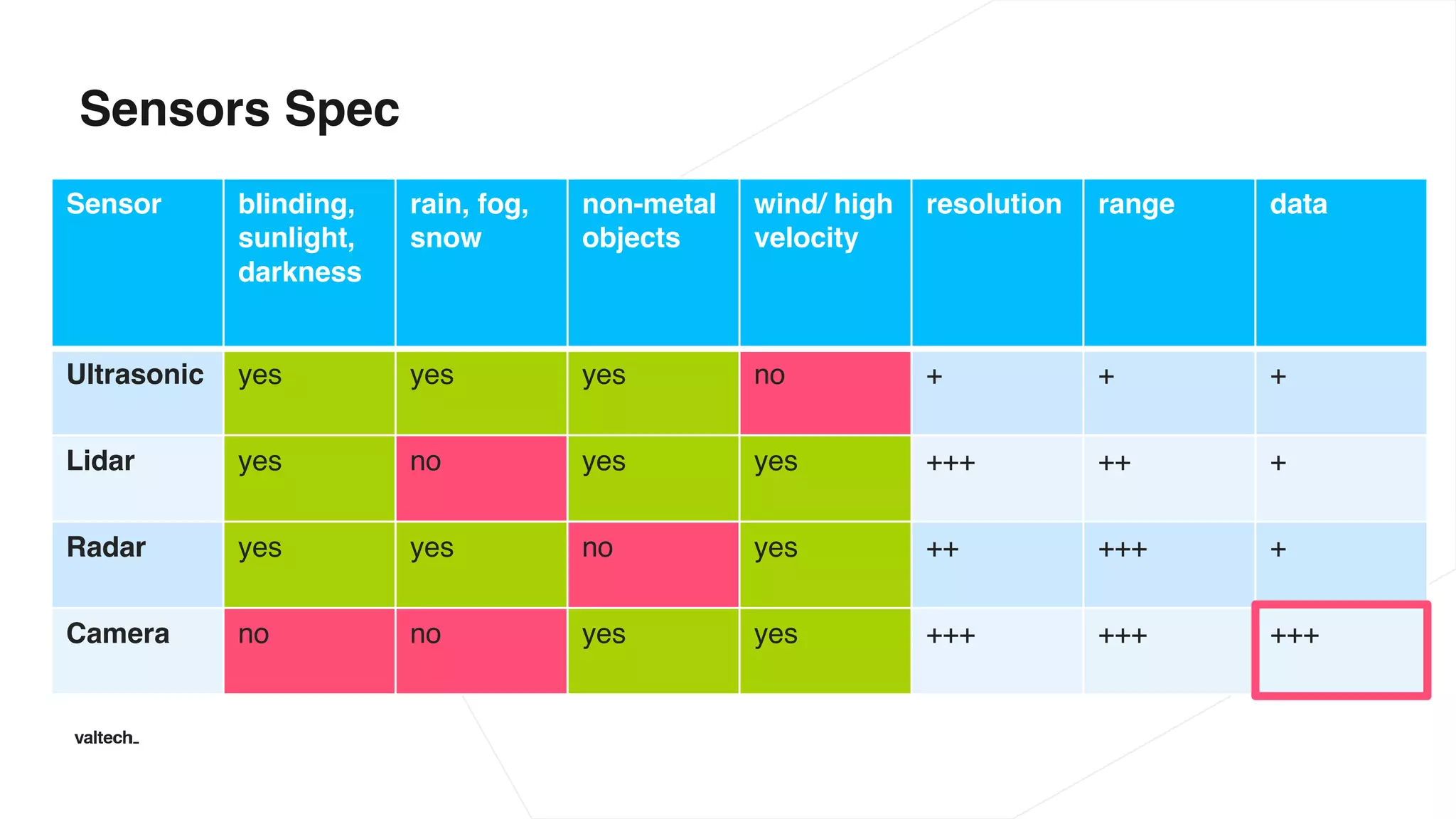

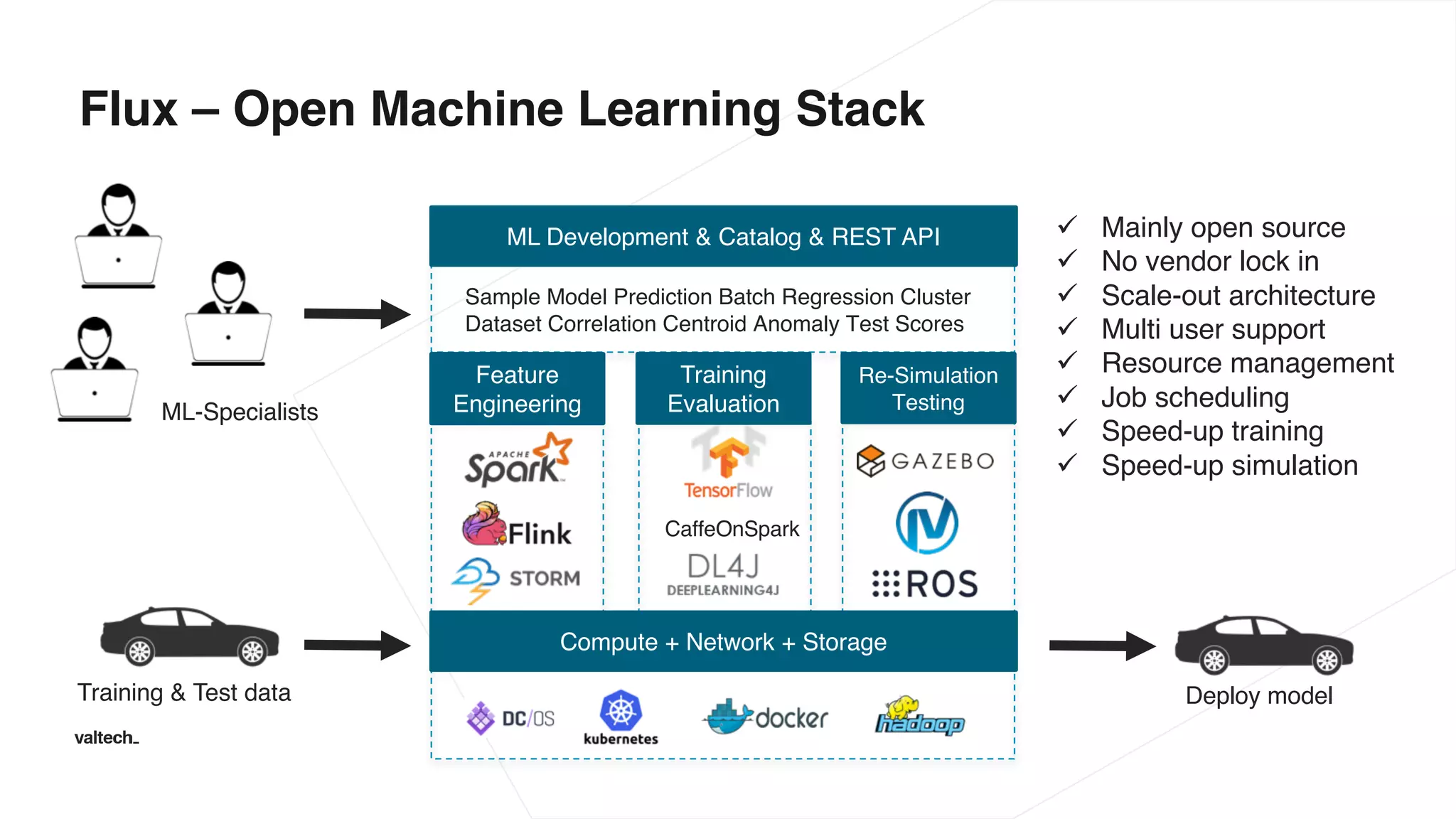

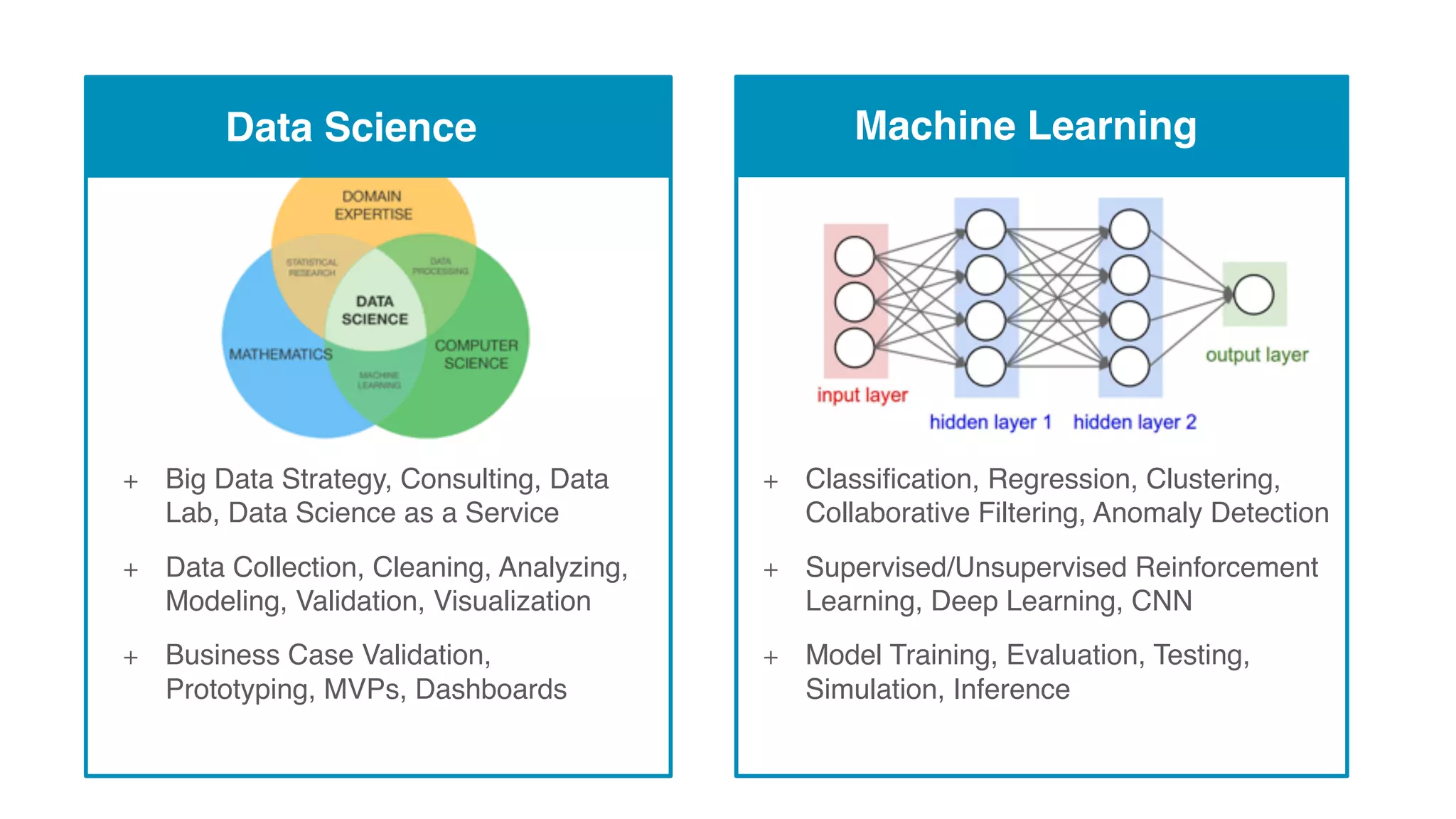

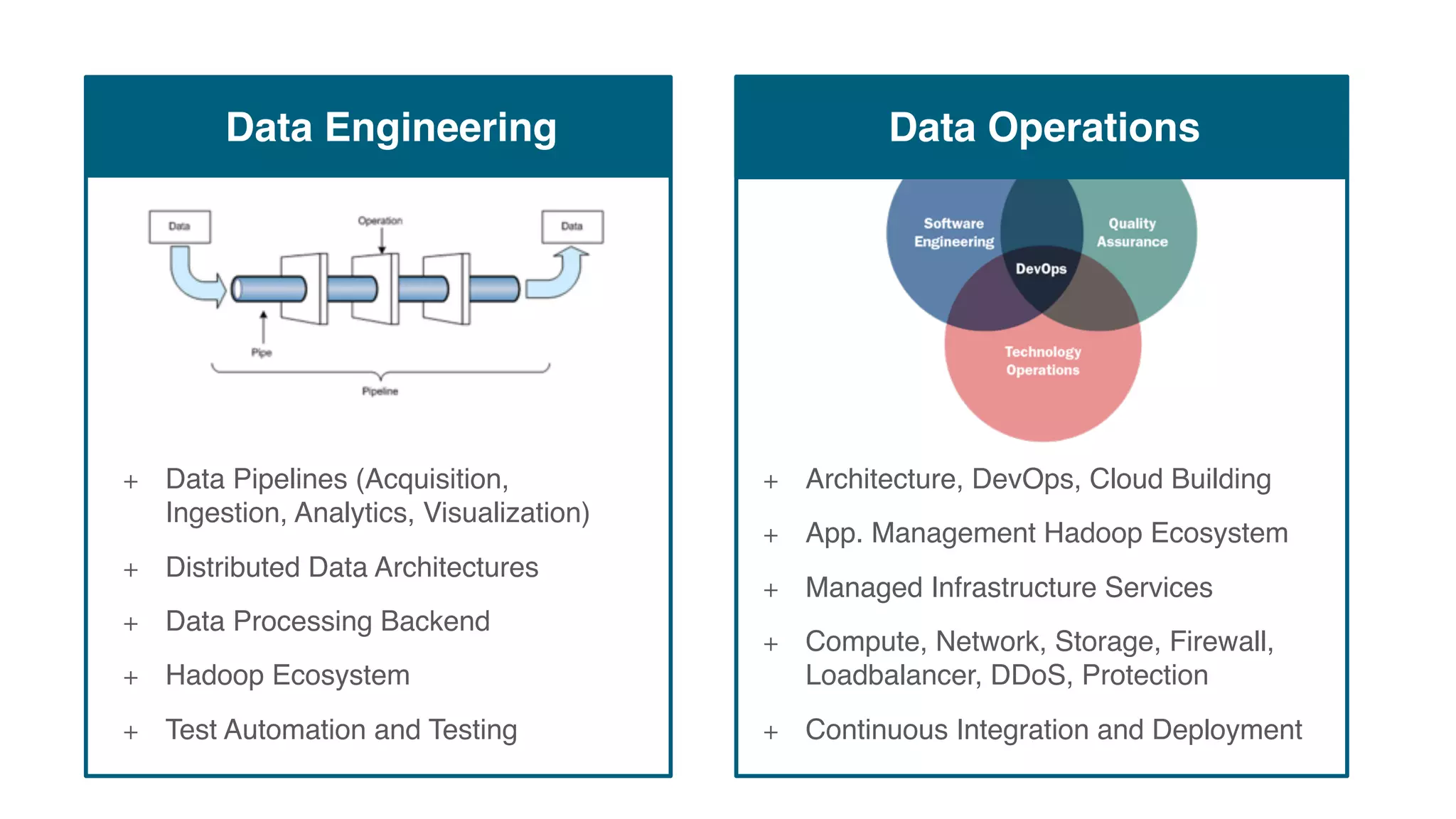

The document discusses various advancements in distributed deep learning using platforms like Hadoop and TensorFlow, illustrating their applications in image classification and AI performance compared to human capabilities. It reviews key developments in machine learning, covering historical milestones, data processing strategies, and computational improvements for training large models. Additionally, it touches on real-world case studies and highlights platforms for machine learning deployments such as Google AI and Uber's Michelangelo.