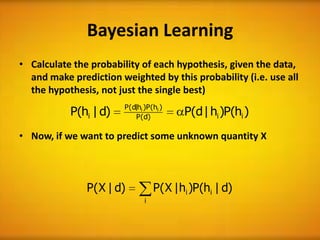

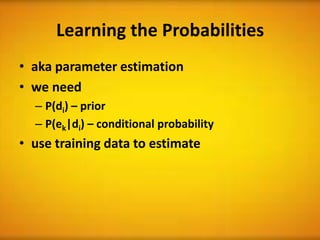

This document discusses statistical learning approaches, including Naive Bayes classification. It provides an example of predicting the flavor of candy from different bags based on prior probabilities. It explains how Bayesian learning uses all hypotheses weighted by their probabilities to make predictions. The document also discusses Naive Bayes, which makes a strong independence assumption to simplify probability calculations for diagnosis problems using symptoms. It provides an example of using symptom probabilities learned from training data to determine the most likely diagnosis.