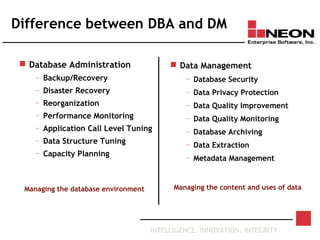

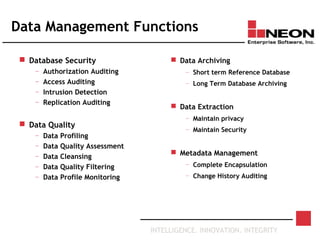

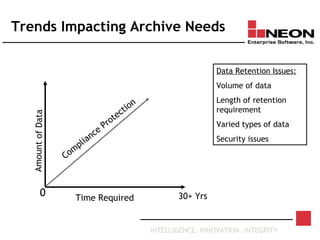

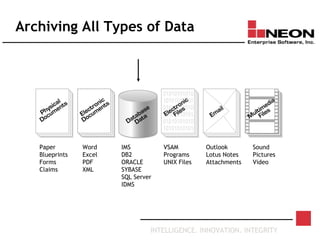

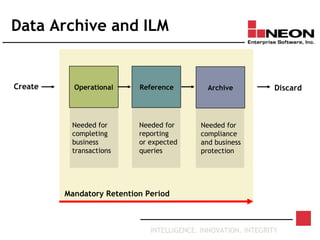

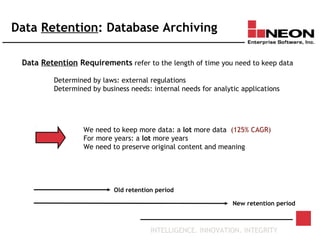

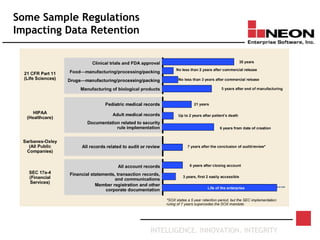

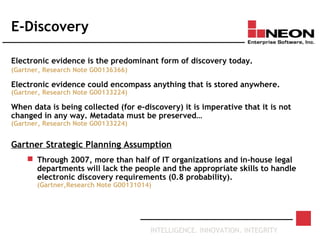

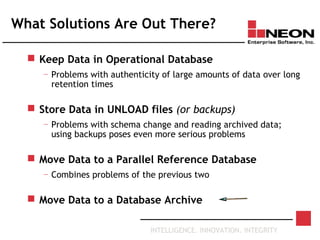

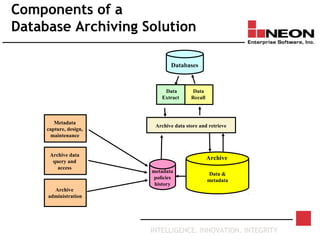

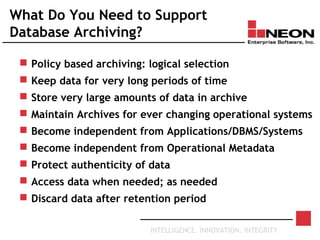

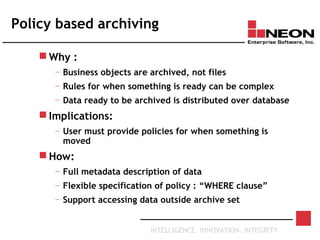

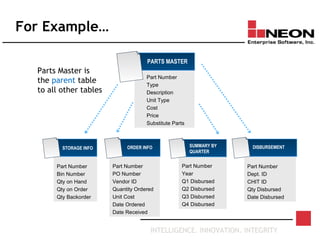

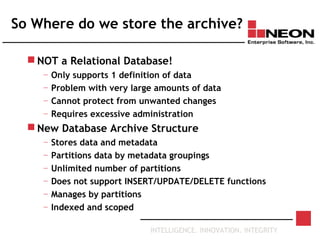

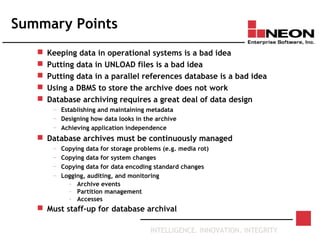

This document discusses database archiving and long-term data storage. It notes that data retention requirements are growing in three ways: the volume of data, the length of time it must be kept, and the range of data types covered. Traditional approaches, such as keeping data in operational databases or in backups, are inadequate for long-term archiving. An effective solution requires a separate archive system that can store large amounts of data for long periods, remain independent of the original applications and databases, and both provide access to data and discard it as retention policies require.
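As a rough illustration of discarding data according to retention policies, the sketch below splits archived records into those still under retention and those eligible for discard. Everything named here (`ArchivedRecord`, `RETENTION_YEARS`, `discard_date`, `partition`, the record types, and the retention periods) is hypothetical and not taken from the slides; it is a minimal sketch, not a description of any particular archiving product.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class ArchivedRecord:
    record_id: str
    record_type: str   # hypothetical category, e.g. "invoice"
    archived_on: date  # date the record entered the archive


# Hypothetical retention periods in years, keyed by record type.
RETENTION_YEARS = {"invoice": 7, "medical": 25}


def discard_date(record, policy=RETENTION_YEARS):
    """Return the first date on which the record may be discarded."""
    years = policy[record.record_type]
    start = record.archived_on
    try:
        return start.replace(year=start.year + years)
    except ValueError:
        # Feb 29 has no counterpart in a non-leap target year; use Mar 1.
        return date(start.year + years, 3, 1)


def partition(records, today, policy=RETENTION_YEARS):
    """Split records into (keep, discard) lists as of `today`."""
    keep, discard = [], []
    for record in records:
        if today >= discard_date(record, policy):
            discard.append(record)
        else:
            keep.append(record)
    return keep, discard
```

Keeping the policy in a table that is separate from the records means a retention period can be changed without touching the archived data, which fits the idea of an archive that is independent of the originating application.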