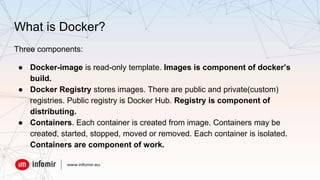

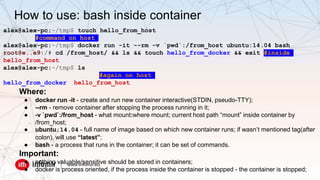

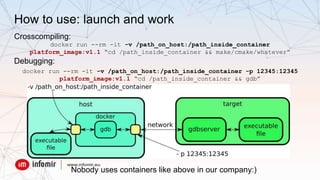

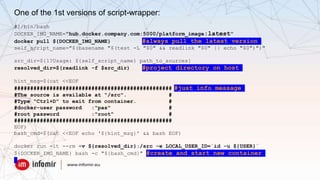

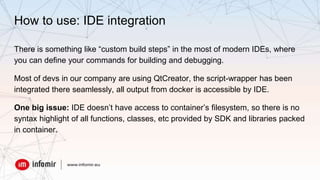

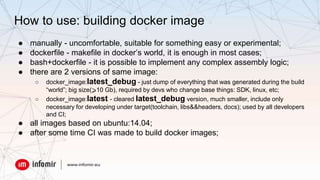

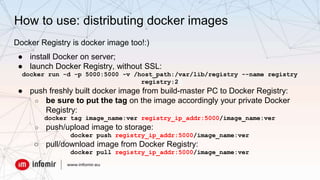

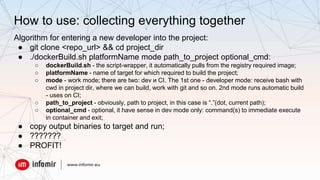

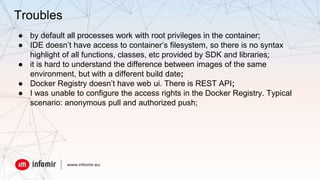

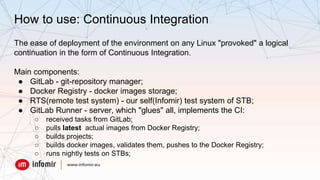

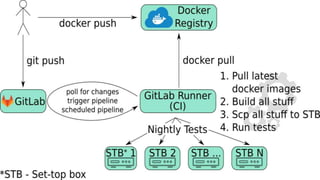

This document describes how to set up a Docker-based development environment for cross-compilation and compares its advantages and challenges with those of traditional server environments. It highlights how easily Docker provides isolated, consistent environments for developers and integrates with continuous integration systems. It also covers Docker's components, its use for building and debugging, and its effect on development workflows: lower time costs and higher developer satisfaction.