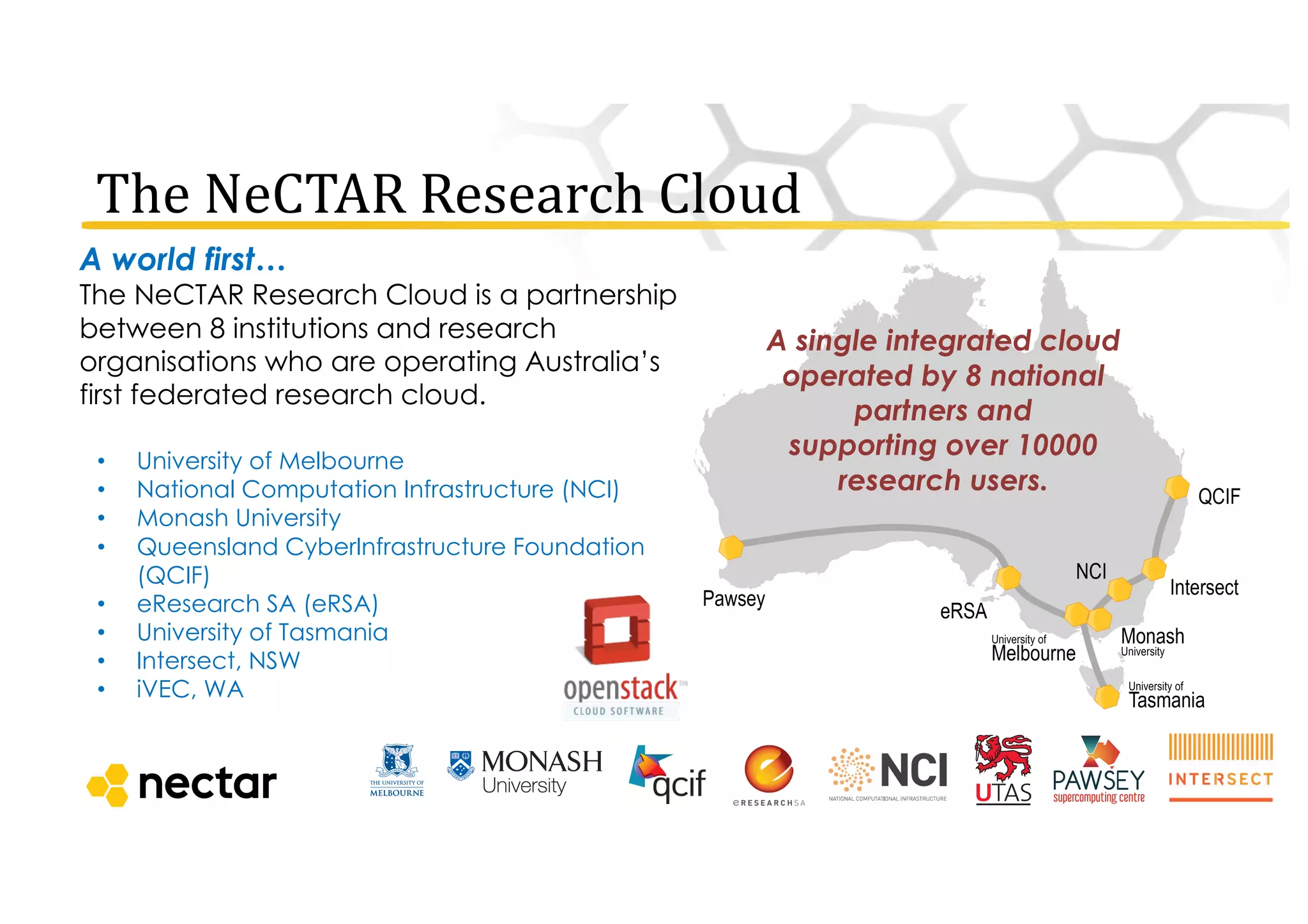

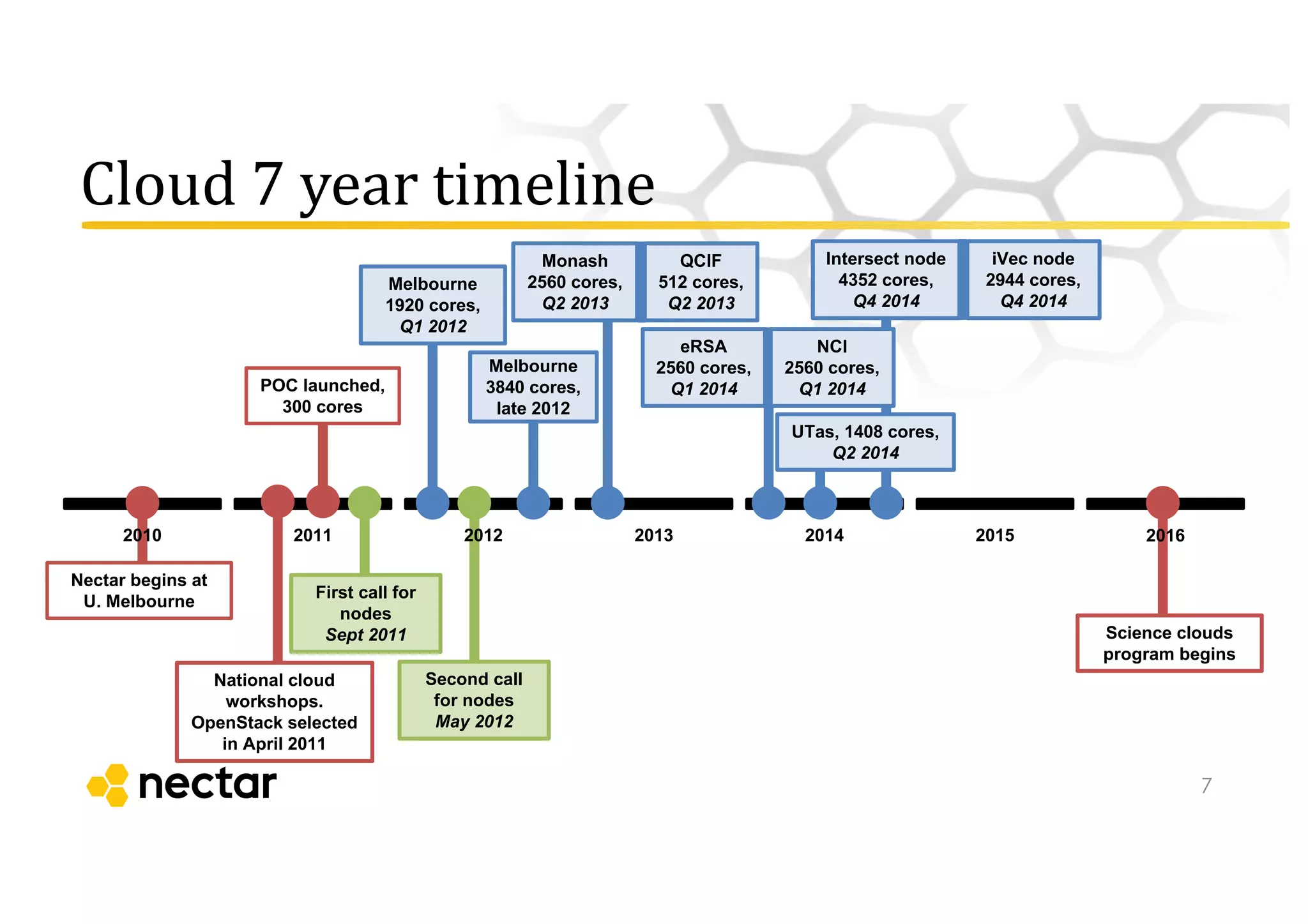

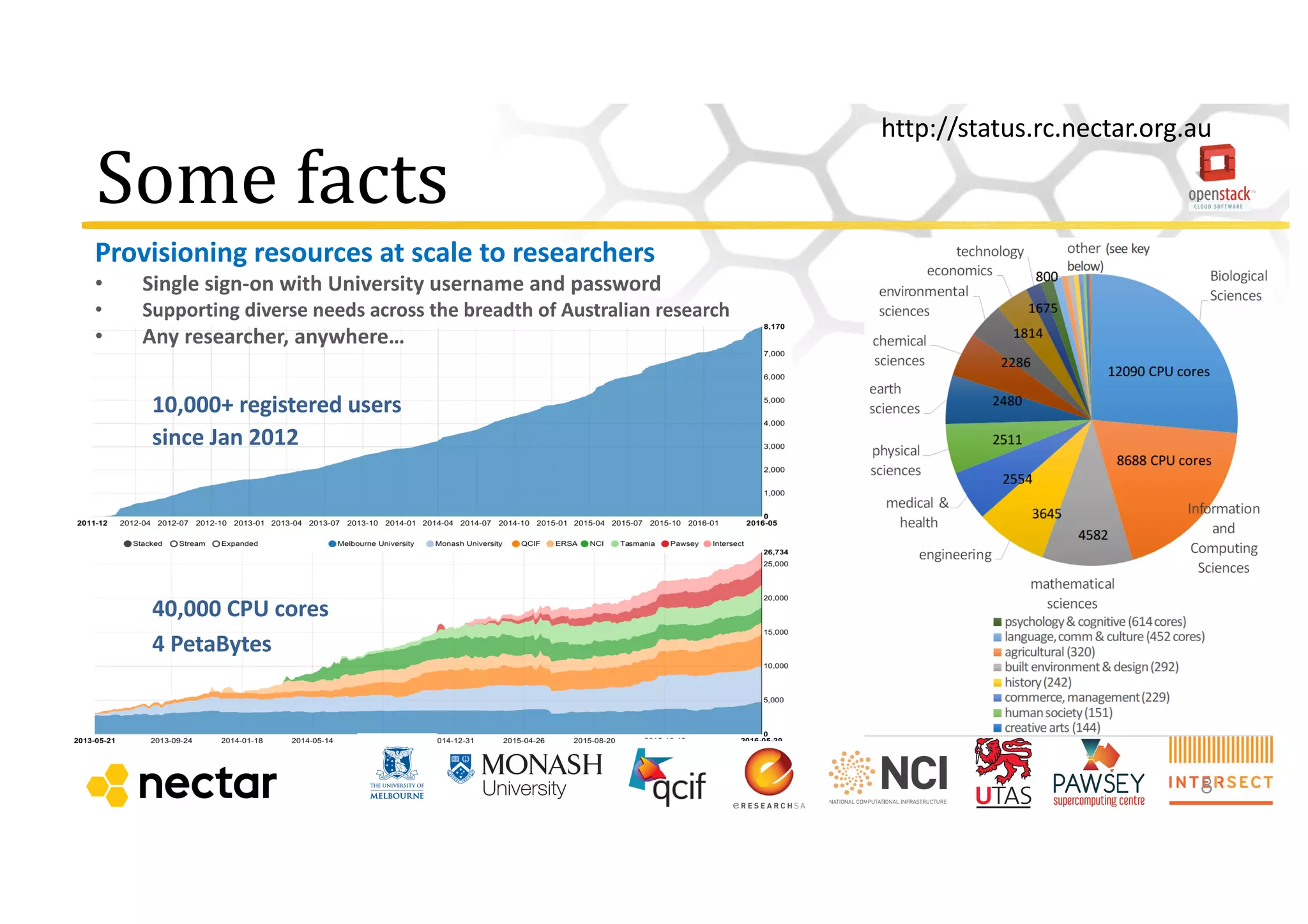

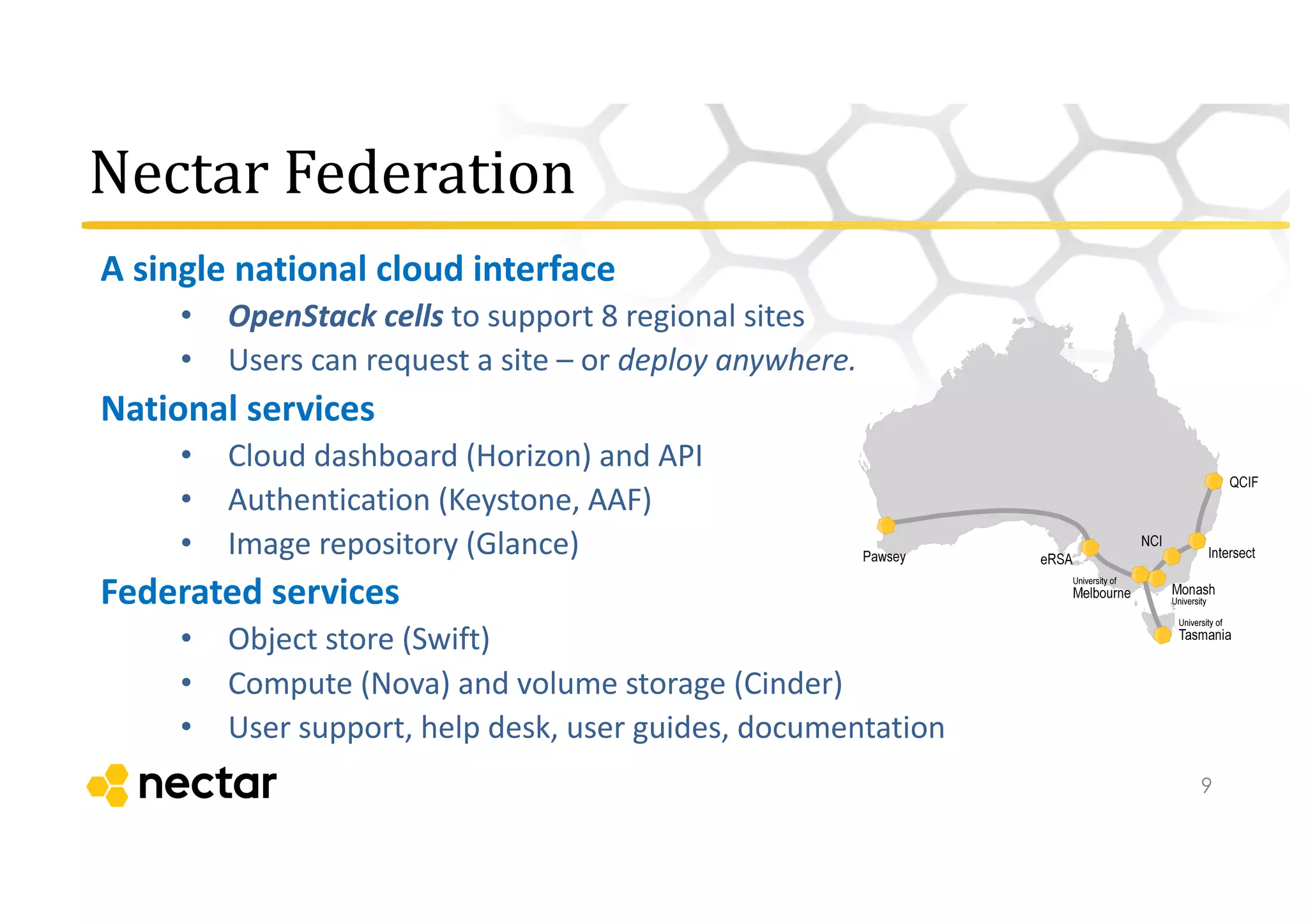

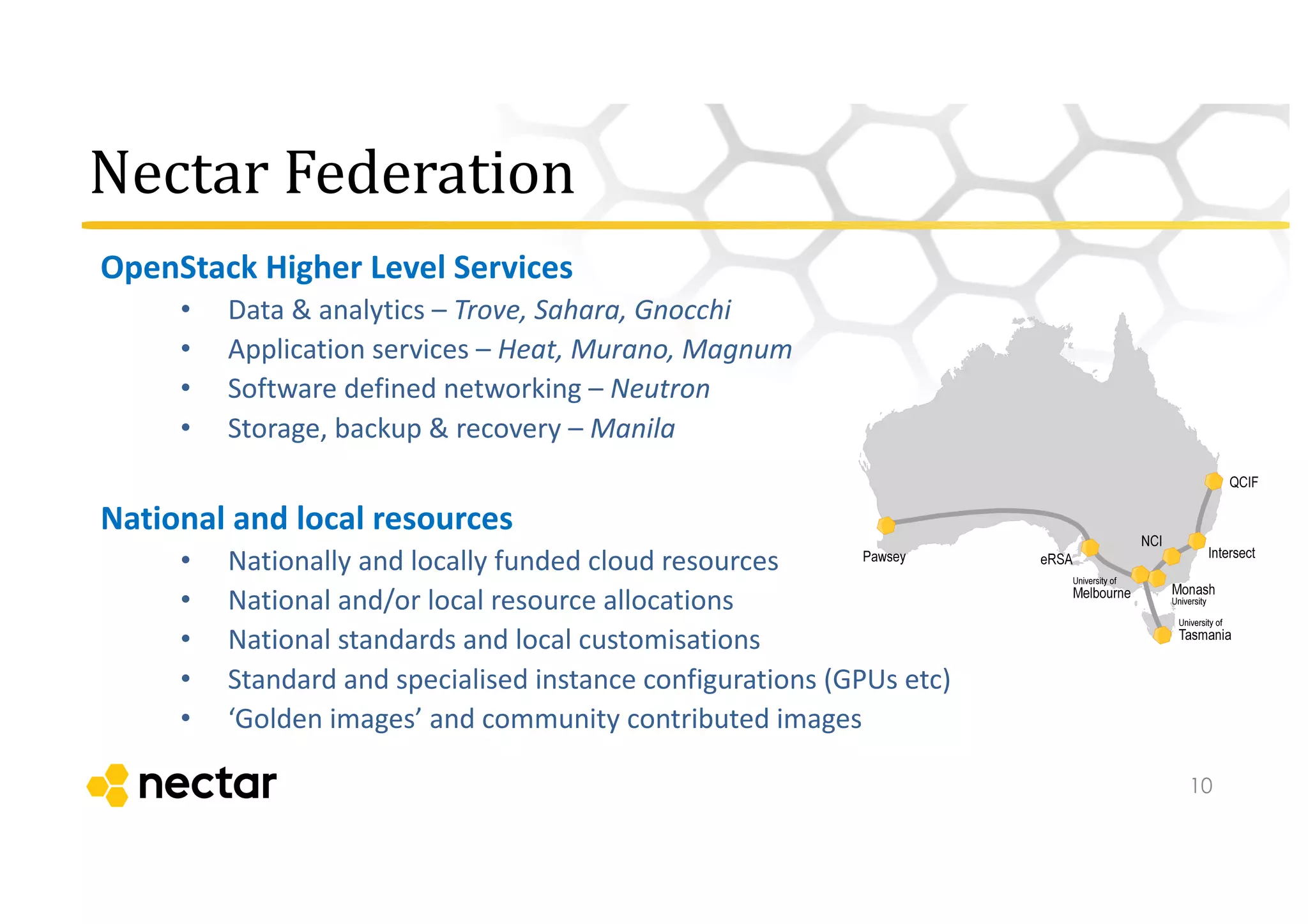

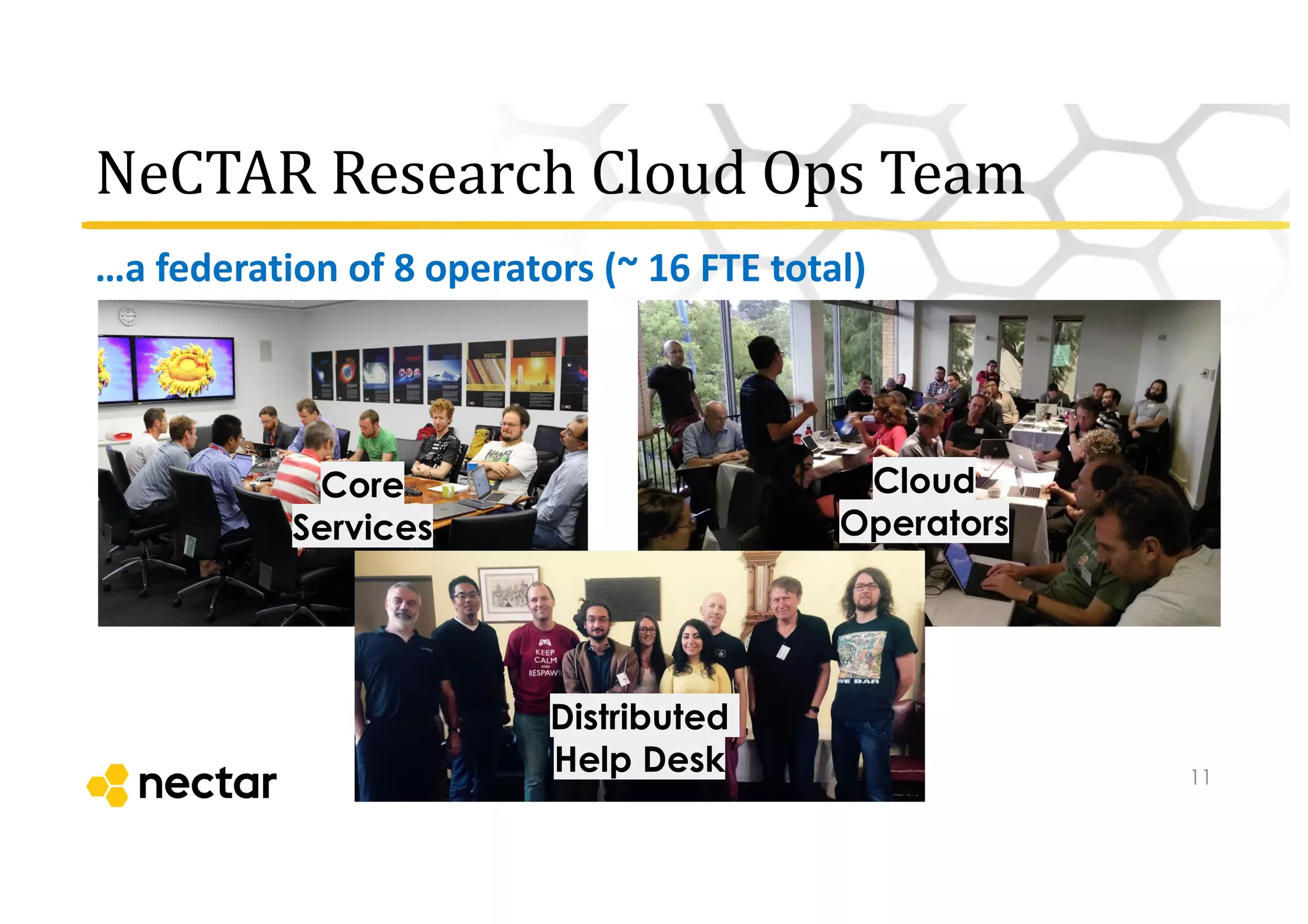

Nectar, supported by the Australian Government, provides cloud-based e-research infrastructure that helps research institutions across Australia collaborate. It offers virtual laboratories and tools for a range of scientific fields, making research data more accessible and lowering barriers to collaboration. The Nectar research cloud is operated by a federation of eight institutions and supports over 10,000 users, encouraging innovative methodologies and cross-disciplinary work.