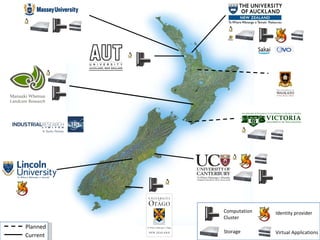

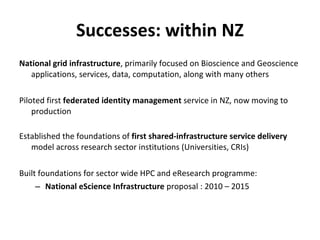

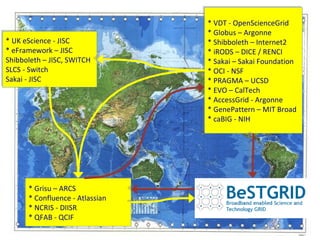

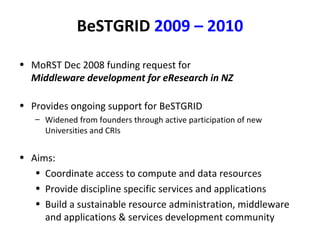

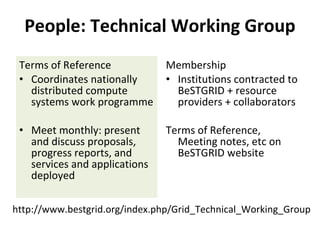

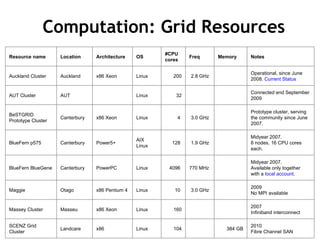

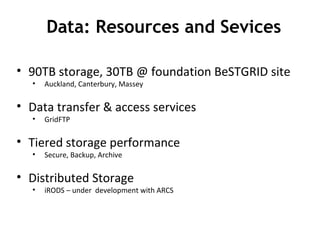

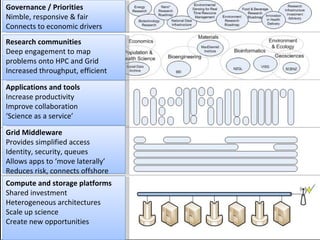

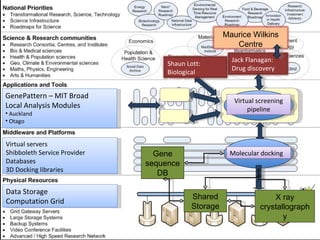

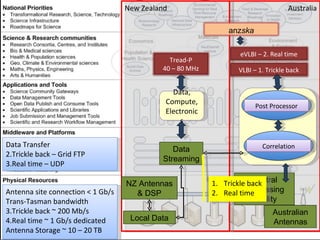

BeSTGRID aims to enhance research capability in New Zealand by providing skills training and infrastructure support. Since 2006, it has delivered services and tools for research collaboration on shared data and computational resources. It coordinates access to compute and storage resources across New Zealand and provides discipline-specific applications and services for researchers.

![BeSTGRID: Developing NZ research infrastructure www.bestgrid.org @bestgrid Nick Jones Director, BeSTGRID Co-Director eResearch, Centre for eResearch University of Auckland [email_address]](https://image.slidesharecdn.com/100215-skard-100219165054-phpapp01/75/SKA-NZ-R-D-BeSTGRID-Infrastructure-1-2048.jpg)

![End Questions / Discussion www.bestgrid.org @bestgrid Nick Jones Director, BeSTGRID Co-Director eResearch, Centre for eResearch University of Auckland [email_address]](https://image.slidesharecdn.com/100215-skard-100219165054-phpapp01/85/SKA-NZ-R-D-BeSTGRID-Infrastructure-20-320.jpg)